NLU & NLG Should Go Hand-In-Hand

Traditional NLU Can Be Leveraged By Following A Hybrid NLU & NLG Approach

This article considers how Foundation LLMs can be used to leverage existing NLU investments, and improve chatbot experiences.

Considering the Conversational AI landscape and the inroads LLMs are making, there have been a few market phenomena:

Traditional NLU-based chatbot frameworks are adopting Foundation LLM functionality, but mostly in unimaginative ways: generating intent training examples, generating bot response fallback dialogs, or rewriting bot messages. The only exception here is Cognigy and, to some degree, Yellow AI.

LLMs are mostly being used in a generative capacity, and not in conjunction with existing predictive capability.

The predictive power of traditional NLU engines with regard to intent classification should not be overlooked.

Hybrid NLU and LLM-based NLG implementations are not receiving the consideration they deserve.

Leverage the best of NLU, which is intent detection, with the most accessible LLM feature, which is response generation.

This article considers the following:

Increasing the granularity and sophistication of existing NLU models for inbound user utterances by complementing them with NLG.

Using human supervision to monitor generative responses in the same way intents are monitored and maintained.

Engineered and curated LLM prompts can be used to create organisation-specific fine-tuned LLMs.

Recently Stephen Broadhurst and Gregory Whiteside demonstrated an effective hybrid NLU/NLG approach which combines traditional intent-based logic with dynamic LLM responses.

Three principles to keep in mind for supervision hints:

Inbound supervision is generally more efficient than outbound supervision.

Light supervision can be very effective in generating responsive and contextual bot messages.

Highly directive guidance is very reliable, but it can lead to much narrower responses.

Consider the following scenario…

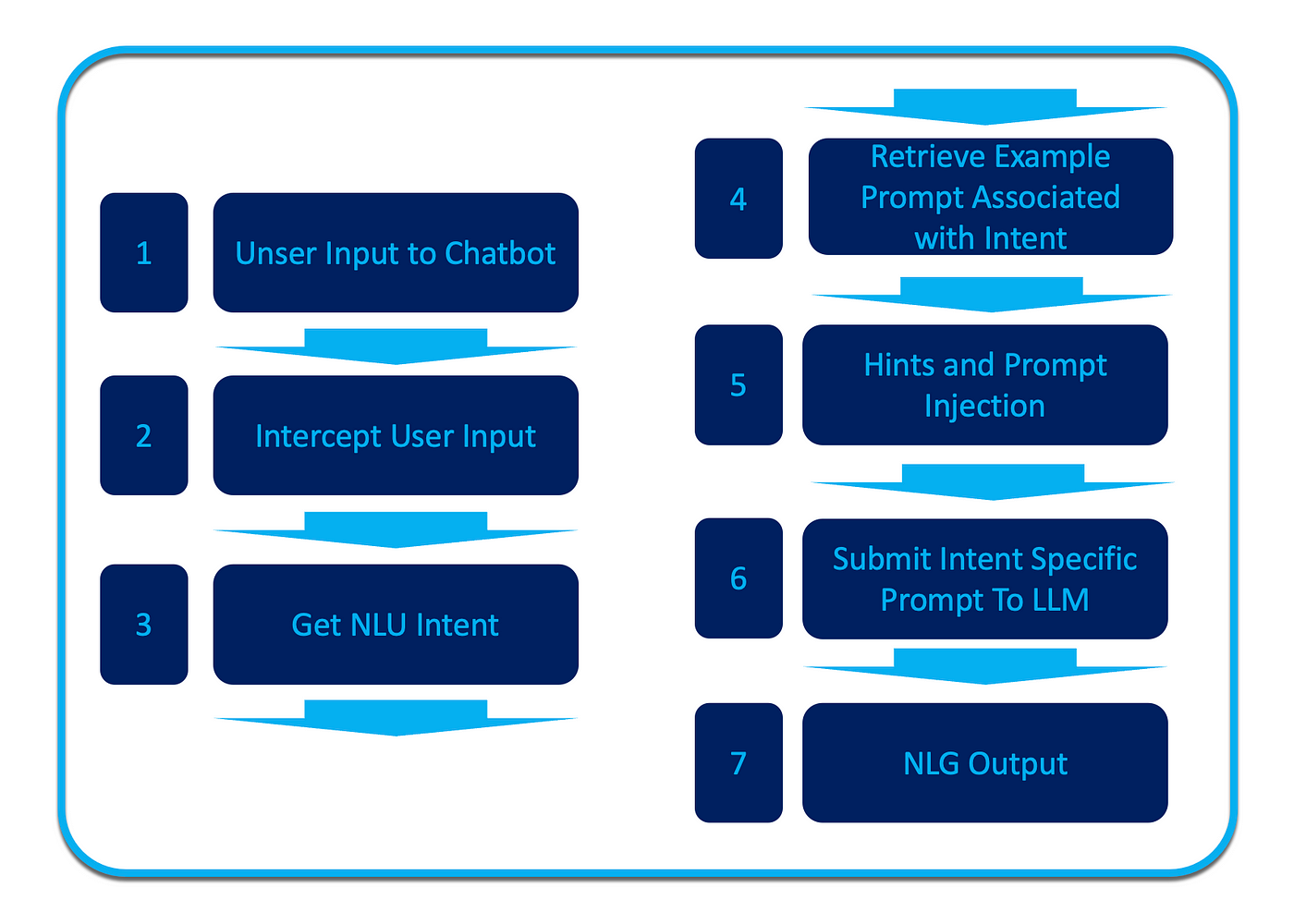

The user utterance (1) is intercepted as is the case with virtually all chatbots (2), and the intent is retrieved (3).

Step (4) is where the example prompts, or hints, associated with the specific intent are retrieved. The LLM leverages these hints to generate accurate responses and to limit hallucination.
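The hint retrieval in step (4) can be sketched as a simple lookup keyed by intent name. This is a minimal sketch, not the implementation from the demonstration: the `IntentHints` structure, the `check_order_status` intent and the fallback behaviour are all assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class IntentHints:
    """Curated guidance for one intent (hypothetical structure)."""
    instructions: str
    examples: list = field(default_factory=list)


# Hypothetical hint store, keyed by the intent name the NLU engine returns.
# In practice this would live wherever intents are already maintained.
HINTS = {
    "check_order_status": IntentHints(
        instructions="Tell the user where their order is. Use only the order data provided.",
        examples=["Your order is out for delivery and should arrive today."],
    ),
}

# Used when the NLU engine returns an intent that has no curated hints yet.
FALLBACK = IntentHints(instructions="Politely ask the user to rephrase their request.")


def hints_for(intent: str) -> IntentHints:
    """Return the curated hints for an intent, or the fallback if none exist."""
    return HINTS.get(intent, FALLBACK)
```

Keeping the hints in a plain, reviewable structure like this is what makes them maintainable in the same way as intents.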

It is also at step (4) where the hints or prompt whispering can be reviewed and maintained. This is not done in real-time, but can be seen as an ongoing process of maintenance, analogous to intent management.

In typical prompt engineering fashion, (5) the hints are sent to the LLM (6) together with relevant entities, which are injected into the prompt via a process of templating.
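Templating of this kind can be sketched with Python's standard `string.Template`. The template wording and the `order_id` and `status` entity names are assumptions chosen for illustration; a real template would be curated per intent.

```python
from string import Template

# Hypothetical prompt template for a single intent. The placeholders are
# filled with the curated hint, the user utterance and the extracted entities.
PROMPT_TEMPLATE = Template(
    "You are a support assistant.\n"
    "Guidance: $hint\n"
    "Known facts: order $order_id is $status.\n"
    "User said: $utterance\n"
    "Reply:"
)


def build_prompt(hint: str, utterance: str, entities: dict) -> str:
    """Fill the template with the hint, the utterance and the entities.

    substitute() raises KeyError when a placeholder has no value, so a
    prompt with unfilled slots is never sent to the LLM.
    """
    return PROMPT_TEMPLATE.substitute(hint=hint, utterance=utterance, **entities)
```

For example, `build_prompt("Be brief.", "Where is my order?", {"order_id": "A123", "status": "shipped"})` produces a prompt containing the line `Known facts: order A123 is shipped.`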

The LLM generates the response (7), which is sent to the user. Ideally, the NLU/NLG trainers will have a daily cadence of reviewing the generative results and updating the prompt templates for improved hint-giving and templating.
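The whole turn, including the record that trainers would later review, might look roughly like the sketch below. The NLU engine and the LLM are passed in as plain callables because the article does not name specific products; `fake_classify` and `fake_generate` are stand-ins for illustration only, and the shape of the review record is an assumption.

```python
def handle_turn(utterance, classify, generate, hints, review_log):
    """Run one user turn through the hybrid NLU/NLG flow."""
    # Steps 2-3: the NLU engine predicts the intent and extracts entities.
    intent, entities = classify(utterance)
    # Step 4: retrieve the curated hint for that intent.
    hint = hints.get(intent, "Politely ask the user to rephrase.")
    # Steps 5-6: build the prompt and send it to the LLM.
    prompt = f"Guidance: {hint}\nEntities: {entities}\nUser: {utterance}\nReply:"
    reply = generate(prompt)
    # Keep a record so trainers can review generations offline.
    review_log.append(
        {"utterance": utterance, "intent": intent, "prompt": prompt, "reply": reply}
    )
    # Step 7: the reply goes back to the user.
    return reply


def fake_classify(utterance):
    """Stand-in for a real NLU engine (keyword match only)."""
    if "order" in utterance.lower():
        return "check_order_status", {"order_id": "A123"}
    return "unknown", {}


def fake_generate(prompt):
    """Stand-in for a real LLM call; echoes the guidance line."""
    return "[generated from] " + prompt.splitlines()[0]


log = []
reply = handle_turn(
    "Where is my order?",
    fake_classify,
    fake_generate,
    {"check_order_status": "State the delivery status."},
    log,
)
```

Because every generation is logged next to the intent and the prompt that produced it, a reviewer can trace a poor reply back to the hint or template that needs updating.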

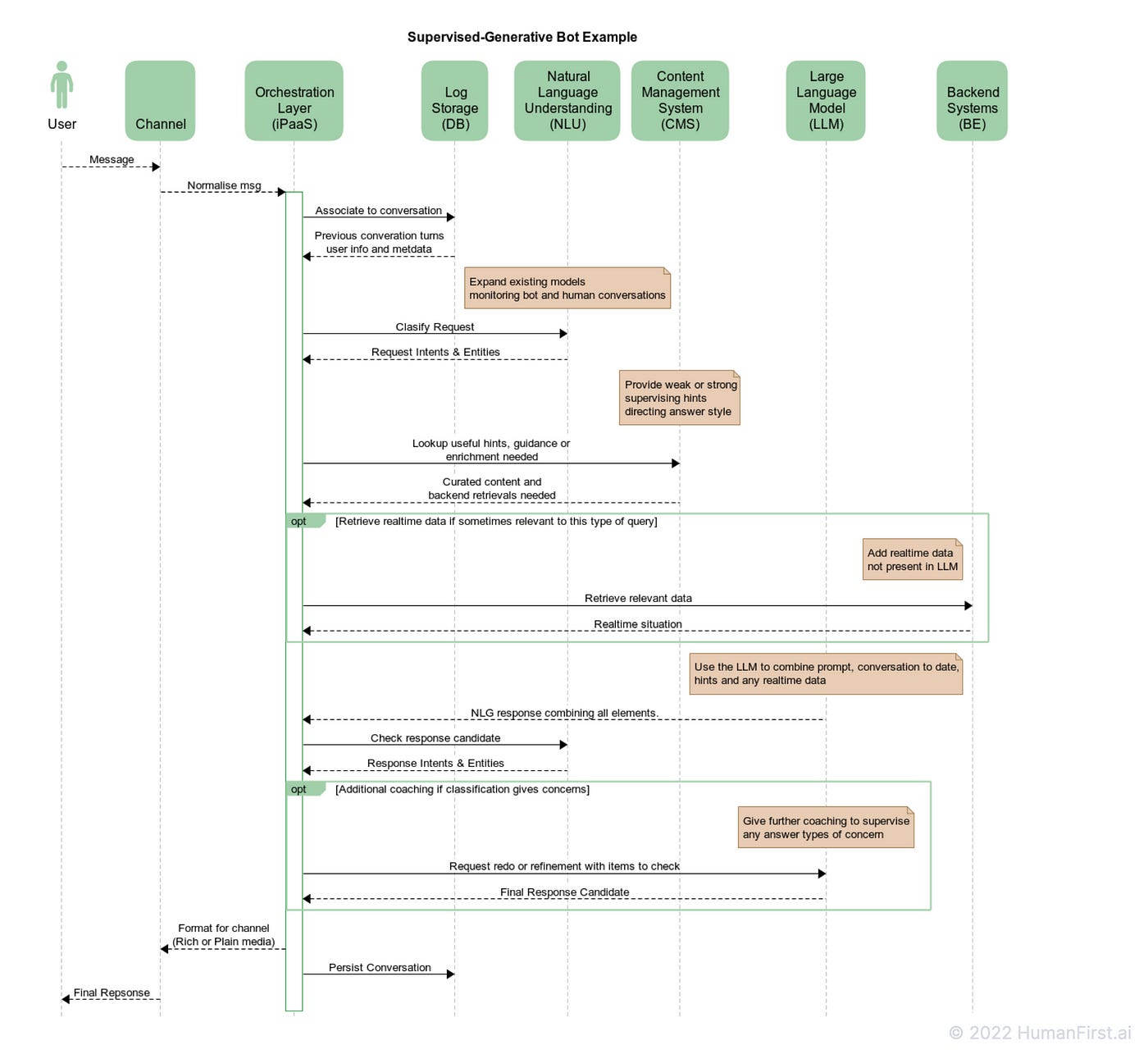

Below is a detailed diagram of a supervised generative bot process flow:

In Conclusion

I have always maintained that companies will converge on a good idea; this is evident in current chatbot architecture. There are variations, but the basic chatbot architecture is universal.

This tried and tested chatbot structure and the predictive power of NLU engines cannot be neglected, and both need to be leveraged through this hybrid approach.

I’m currently the Chief Evangelist @ HumanFirst. I explore and write about all things at the intersection of AI and language; ranging from LLMs, Chatbots, Voicebots, Development Frameworks, Data-Centric latent spaces and more.