Now You Can Toggle OpenAI Model Determinism

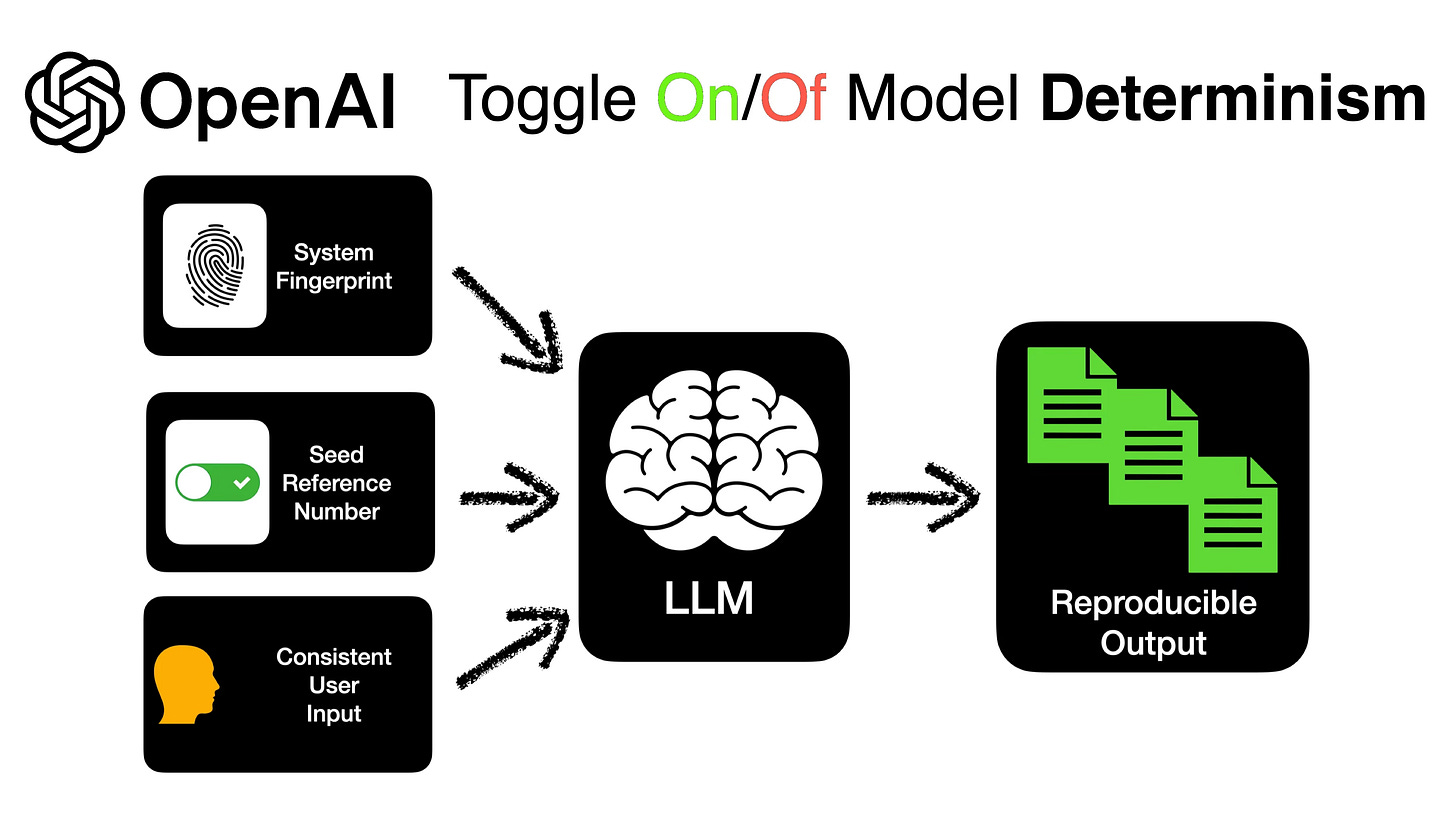

A new beta feature from OpenAI enables reproducible responses from a model: when the same request is sent with the same `seed` value, the model makes a best effort to generate a consistent completion.

Background

LLMs are generally non-deterministic, which means the model can produce different outputs for the same user input.

For conversational UIs in general this is not a bad thing. As models improve, so do the responses, and this flexibility is convenient for chatbot and voicebot implementations because no message abstraction layer is required.

But there are instances where a deterministic model is required. One such use case is debugging and testing a system, where a specific customer journey needs to be replicated; an integral part of this is having the LLM respond with the same text.

There may also be instances where the optimal response to a certain query has been found, and that response needs to be reproduced for all users.

Practical Examples

In the image below, the OpenAI Playground is shown with the same user input producing, as expected, varying non-deterministic responses.

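Below is a simple Python notebook example; notice that the `seed` parameter is set to 123. The seed is an arbitrary integer: when the same seed is sent with otherwise identical parameters, the model makes a best effort to sample the same output. The response also includes a `system_fingerprint` field, which identifies the backend configuration that served the request; if it changes between calls, outputs can differ even with the same seed.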

```python
# This code is for v1 of the openai package: pypi.org/project/openai
# Install it first with: pip install openai
import os

from openai import OpenAI

# Placeholder key; setting OPENAI_API_KEY in your shell avoids hard-coding it
os.environ["OPENAI_API_KEY"] = "your api key goes here"

client = OpenAI()  # reads OPENAI_API_KEY from the environment

response = client.chat.completions.create(
    model="gpt-3.5-turbo",
    messages=[
        {
            "role": "system",
            "content": "You are a helpful assistant that generates short stories.",
        },
        {
            "role": "user",
            "content": "Generate a short story about a journey to Mars",
        },
    ],
    temperature=1,
    max_tokens=150,
    top_p=1,
    frequency_penalty=0,
    presence_penalty=0,
    seed=123,  # same seed + same parameters -> best-effort identical output
)

print(response)
```

Below is the first run with the seed number defined:

First Run

```
"Once upon a time, in the not-too-distant future, a group of brave adventurers
set their sights on the red planet, Mars. Their journey was fueled by a
burning curiosity and an insatiable desire to explore the unknown.
Led by Captain Rebecca Anderson, a seasoned astronaut with a heart full of
dreams, the team embarked on a mission unlike any other. They boarded the
state-of-the-art spacecraft, Orion, which was equipped with advanced
technology and provisions to last the duration of their expedition.
As they left Earth's atmosphere behind, a mixture of excitement and nervous
anticipation filled the air. The vastness of space stretched out before them,
an abyss of endless possibilities. Lights twinkled, reminding the crew of
the millions of galaxies waiting to be"
role='assistant',
function_call=None,
tool_calls=None))],
created=1699865854,
model='gpt-3.5-turbo-0613',
object='chat.completion',
system_fingerprint=None,
usage=CompletionUsage(completion_tokens=150,
prompt_tokens=31,
total_tokens=181)
```

Second Run

Below is the second run, which returns exactly the same response. Apart from the `created` timestamp, the visible output matches the first run; both completions stop mid-sentence because `max_tokens=150` caps their length (`completion_tokens=150`).

```
"Once upon a time, in the not-too-distant future, a group of brave adventurers
set their sights on the red planet, Mars. Their journey was fueled by a
burning curiosity and an insatiable desire to explore the unknown.
Led by Captain Rebecca Anderson, a seasoned astronaut with a heart full of
dreams, the team embarked on a mission unlike any other. They boarded the
state-of-the-art spacecraft, Orion, which was equipped with advanced
technology and provisions to last the duration of their expedition.
As they left Earth's atmosphere behind, a mixture of excitement and nervous
anticipation filled the air. The vastness of space stretched out before them,
an abyss of endless possibilities. Lights twinkled, reminding the crew of
the millions of galaxies waiting to be"
role='assistant',
function_call=None,
tool_calls=None))],
created=1699866200,
model='gpt-3.5-turbo-0613',
object='chat.completion',
system_fingerprint=None,
usage=CompletionUsage(completion_tokens=150,
prompt_tokens=31,
total_tokens=181))
```

In Closing

By making use of the `seed` parameter, the outputs become reproducible on a best-effort basis. This feature is in beta, and OpenAI's stated vision is that it will assist with simulations for debugging, creating comprehensive unit tests and achieving a higher degree of control over model behaviour.
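To make the unit-testing idea concrete, here is a minimal sketch, assuming a test simply sends the same request several times and compares the generated text. `check_reproducible` and `fake_create` are hypothetical names: `fake_create` is an offline stand-in shaped like the response printed above (text at `choices[0].message.content`), and against the real API you would pass `client.chat.completions.create` instead. Because determinism is best-effort, a failing check is better read as a prompt to compare parameters and `system_fingerprint` than as proof of a bug.

```python
from types import SimpleNamespace
from typing import Any, Callable


def check_reproducible(create: Callable[..., Any], runs: int = 2, **request: Any) -> bool:
    """Send the same request `runs` times; True if every completion text matches.

    `create` is any callable shaped like client.chat.completions.create and
    `request` holds its keyword arguments (model, messages, seed, ...).
    """
    texts = []
    for _ in range(runs):
        response = create(**request)
        # The generated text sits on the first choice's message
        texts.append(response.choices[0].message.content)
    return len(set(texts)) == 1


def fake_create(**kwargs: Any) -> Any:
    """Hypothetical offline stand-in for the API; its output depends only on the seed."""
    message = SimpleNamespace(content=f"A story generated with seed {kwargs.get('seed')}")
    return SimpleNamespace(choices=[SimpleNamespace(message=message)])


print(check_reproducible(fake_create, model="gpt-3.5-turbo", seed=123))  # True

# Against the real API (using the client from the notebook example):
# check_reproducible(client.chat.completions.create, model="gpt-3.5-turbo",
#                    messages=[...], seed=123)
```

Passing the completion function in as a parameter keeps the check independent of the network, so the same helper can run in CI with a stand-in and locally against the live endpoint.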

To receive deterministic outputs across API calls, the following is important:

- Set the `seed` parameter to any integer of your choice and use the same value across requests you'd like deterministic outputs for.
- Ensure all other parameters (like `prompt` or `temperature`) are exactly the same across requests, especially the prompt.
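The checklist above can be turned into a small pre-flight check. The sketch below uses hypothetical helpers, `differing_params` and `fingerprints_match`, which report which request parameters differ between two calls and whether two responses carry the same `system_fingerprint`. It works on plain dicts and stand-in response objects so it runs offline; the fingerprint value `"fp_example"` is made up for illustration.

```python
from types import SimpleNamespace
from typing import Any, Dict, List

_MISSING = object()  # sentinel so an absent key and an explicit None are told apart


def differing_params(req_a: Dict[str, Any], req_b: Dict[str, Any]) -> List[str]:
    """Return the sorted names of request parameters whose values differ.

    A key present in only one of the two requests counts as differing.
    """
    keys = set(req_a) | set(req_b)
    return sorted(k for k in keys if req_a.get(k, _MISSING) != req_b.get(k, _MISSING))


def fingerprints_match(resp_a: Any, resp_b: Any) -> bool:
    """True only when both responses carry the same non-null system_fingerprint.

    A fingerprint of None (as in the two runs above) says nothing about the
    backend, so it is treated as 'unknown' rather than as a match.
    """
    fp_a = getattr(resp_a, "system_fingerprint", None)
    fp_b = getattr(resp_b, "system_fingerprint", None)
    return fp_a is not None and fp_a == fp_b


# Usage: one shared base request, plus a copy with an accidental temperature change
base = {
    "model": "gpt-3.5-turbo",
    "messages": [{"role": "user", "content": "Generate a short story about a journey to Mars"}],
    "temperature": 1,
    "seed": 123,
}
changed = {**base, "temperature": 0.7}
print(differing_params(base, changed))  # ['temperature']

# Stand-in response objects with a made-up fingerprint
run_1 = SimpleNamespace(system_fingerprint="fp_example")
run_2 = SimpleNamespace(system_fingerprint="fp_example")
print(fingerprints_match(run_1, run_2))  # True
```

If the parameters match but the fingerprints differ, the backend configuration changed between calls, which is one documented reason identical seeds can still yield different text.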


I’m currently the Chief Evangelist @ Kore AI. I explore & write about all things at the intersection of AI & language; ranging from LLMs, Chatbots, Voicebots, Development Frameworks, Data-Centric latent spaces & more.
