OpenAI API Deep Research

With the introduction of the OpenAI API for Deep Research, OpenAI also lifted the veil on the internal workings of ChatGPT.

In Short

The deep research API documentation reveals that quite a bit of activity takes place behind the API.

This again sounds a warning to enterprise implementations: an API is essentially a black box whose underlying functionality is unknown.

Numerous studies have shown how models change and drift behind commercial APIs, leaving users at the mercy of model providers.

This OpenAI publication also shows the sequence a Deep Research query follows in ChatGPT, giving insight into what happens under the hood.

The idea of the ChatGPT GUI is to abstract away any complexity and present a simple UI.

But it is interesting to see that ChatGPT actually makes three model calls under the hood: two to lightweight models, and then one to the deep research model.

ChatGPT uses a helper model (such as GPT-4.1) to clarify your intent and collect details like preferences or goals before starting research. This step tailors the web searches for more relevant results.

This step is omitted from the API; hence the developer can choose to what extent they want to customise the workflow.

The prompt rewriter uses another lightweight model (for example, GPT-4.1) to expand or specify user queries before passing them to the research model.
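The three calls can be sketched as a small pipeline. This is a minimal sketch, not OpenAI's implementation: each stage is assumed to be a plain text-to-text function, and the names `ResearchPipeline`, `clarify_intent`, `rewrite_prompt` and `run_research` are hypothetical. The clarification stage is optional, reflecting that the API leaves it to the developer.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# Each stage maps text to text; in a real system each would wrap a model call.
ModelCall = Callable[[str], str]


@dataclass
class ResearchPipeline:
    """Hypothetical three-stage orchestration mirroring the ChatGPT flow."""

    rewrite_prompt: ModelCall  # lightweight model that expands the query
    run_research: ModelCall  # the deep research model itself
    clarify_intent: Optional[ModelCall] = None  # optional; not part of the API

    def run(self, user_query: str) -> str:
        query = user_query
        if self.clarify_intent is not None:
            # Stage 1: collect preferences or goals and fold them into the query.
            query = self.clarify_intent(query)
        # Stage 2: expand the (possibly clarified) query into a detailed brief.
        brief = self.rewrite_prompt(query)
        # Stage 3: hand the brief to the research model and return its report.
        return self.run_research(brief)


# Usage with trivial stand-ins for the three models:
pipeline = ResearchPipeline(
    rewrite_prompt=lambda q: "Brief: " + q,
    run_research=lambda b: "Report on [" + b + "]",
    clarify_intent=lambda q: q + " (budget: mid-range)",
)
print(pipeline.run("Economic impact of tourism in France"))
# prints: Report on [Brief: Economic impact of tourism in France (budget: mid-range)]
```

Keeping each stage as an injected function makes it easy to swap a lightweight model for one step without touching the others.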

This goes to show how much happens under the hood.

ChatGPT Inner-workings

The image below shows the inner workings of ChatGPT's Deep Research functionality. I imagine other ChatGPT features perform similar multi-model orchestration.

It shows how complex ChatGPT's inner workings are, and illustrates an important principle:

Complexity has to live somewhere. It can be presented to the user to decipher and figure out, or it can live under the hood, behind the UI.

In the latter case, handling the complexity falls to the developer, who orchestrates the user experience on the user's behalf.

Consider the three language models involved: one for disambiguation and clarification, a second to optimise the prompt for the research model, and a third, the deep research model, which carries out the research itself.

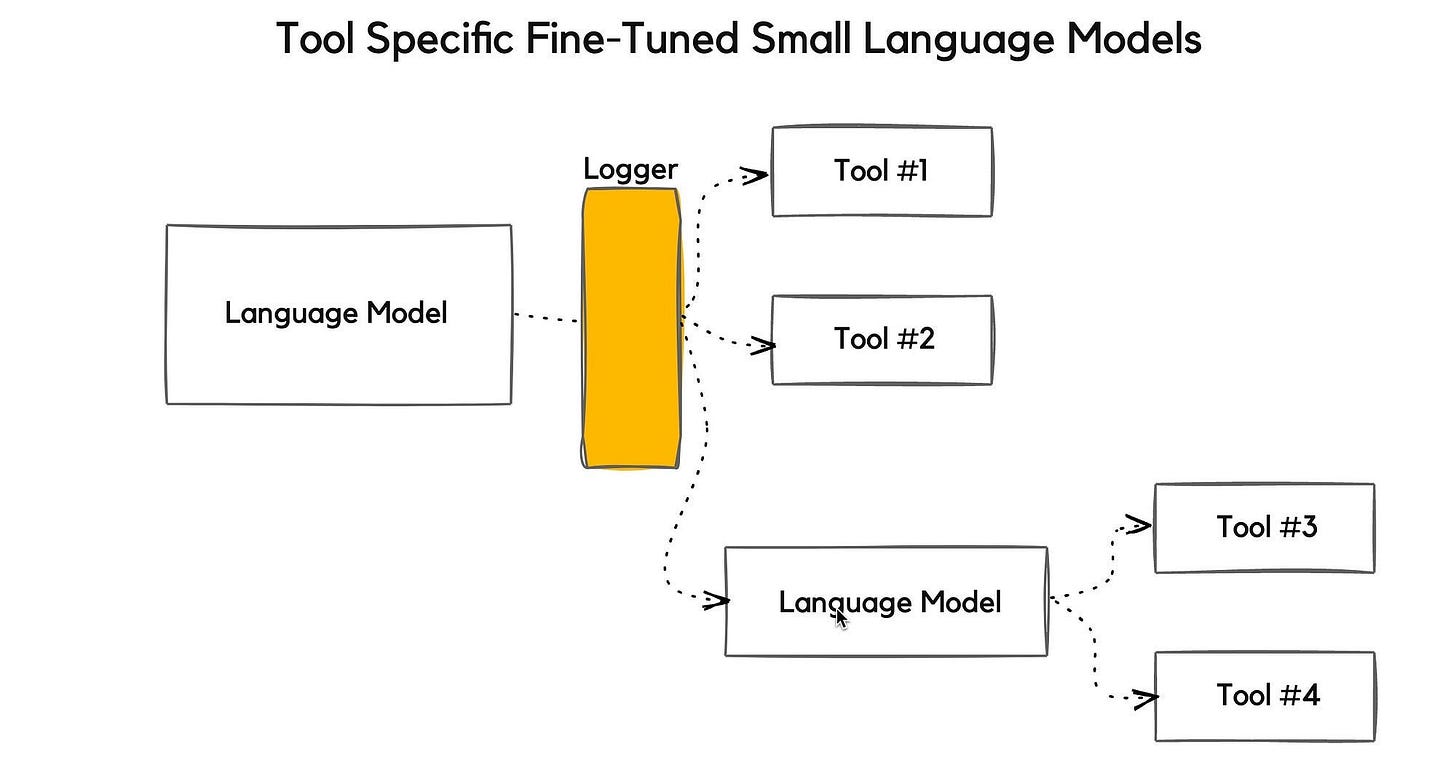

I believe a lot can be learned from this approach: in most cases a single model is not the answer, and multiple custom, smaller and less capable models can be orchestrated around it.

As the image below shows, NVIDIA has demonstrated an approach in which a language model is trained to identify accurately which tool to use for each step or sub-step.

The API offers two deep research models:

o3-deep-research-2025-06-26

Optimised for in-depth synthesis and higher-quality output

o4-mini-deep-research-2025-06-26

Lightweight and faster, ideal for latency-sensitive use cases

OpenAI Deep Research API Architecture

The API's architecture closely follows ChatGPT's, except that the disambiguation step is left out; developers are free to skip it or implement their own.

This setup gives developers full control over how research tasks are framed, but also places greater responsibility on the quality of the input prompt.
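One way to take on that responsibility is to expand the user's query into a structured brief before it reaches the research model. In ChatGPT a lightweight model does this; the sketch below uses a plain template instead, purely for illustration. `build_research_brief` and the wording of its sections are hypothetical.

```python
from typing import Mapping


def build_research_brief(query: str, details: Mapping[str, str]) -> str:
    """Hypothetical template-based stand-in for a prompt-rewriting model."""
    lines = [f"Research task: {query.strip()}"]
    if details:
        lines.append("Known constraints and preferences:")
        # Sort keys so the brief is identical regardless of dict insertion order.
        for key in sorted(details):
            lines.append(f"- {key}: {details[key]}")
    else:
        # Make the gap explicit rather than letting the model guess silently.
        lines.append("No constraints were provided; state any assumptions you make.")
    lines.append("Cite sources inline and finish with a list of references.")
    return "\n".join(lines)


print(build_research_brief("Plan a trip to France", {"dates": "May", "budget": "mid-range"}))
```

A template is predictable and cheap; a model-based rewriter can infer much more from a vague query, at the cost of another call and less control over the result.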

The Abstracted Experience

ChatGPT is the friendly face of OpenAI’s technology: a web or app interface where you type a question and get an instant response.

It’s designed for accessibility: no coding required, just natural conversation.

Behind the scenes, it abstracts away the heavy lifting.

For instance, if your query is vague like “Help me plan a trip to France,” ChatGPT might ask follow-ups for details on budget, dates, or preferences.

This clarification happens seamlessly via an intermediate model, ensuring relevant results without you seeing the gears turn.

This abstraction makes ChatGPT perfect for everyday users.
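To make the clarification step concrete, the sketch below checks which details a travel query is still missing and returns the follow-up questions to ask. ChatGPT uses a model for this, not fixed rules; the rule-based check, the `REQUIRED_DETAILS` table and `follow_up_questions` are hypothetical simplifications.

```python
from typing import Dict, List

# Hypothetical: details a travel-planning query should contain, each paired
# with the follow-up question to ask when that detail is missing.
REQUIRED_DETAILS: Dict[str, str] = {
    "budget": "What is your approximate budget?",
    "dates": "When are you planning to travel, and for how long?",
    "preferences": "What kinds of activities or places interest you most?",
}


def follow_up_questions(known: Dict[str, str]) -> List[str]:
    """Return a question for every required detail that is missing or blank."""
    return [
        question
        for field, question in REQUIRED_DETAILS.items()
        if not known.get(field, "").strip()
    ]


# "Help me plan a trip to France" with only a budget supplied:
for q in follow_up_questions({"budget": "EUR 2,000"}):
    print(q)
```

When the list comes back empty, the query is specific enough to hand on to the prompt rewriter.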

It handles safeguards, prompt optimisation, and tool integrations (like web searches) invisibly.

But it comes at a cost: limited transparency.

You can’t inspect intermediate steps, customise tools, or integrate private data easily. Outputs are conversational, not always structured for professional use, and there’s no programmatic control for scaling or automation.

Exposing the Details

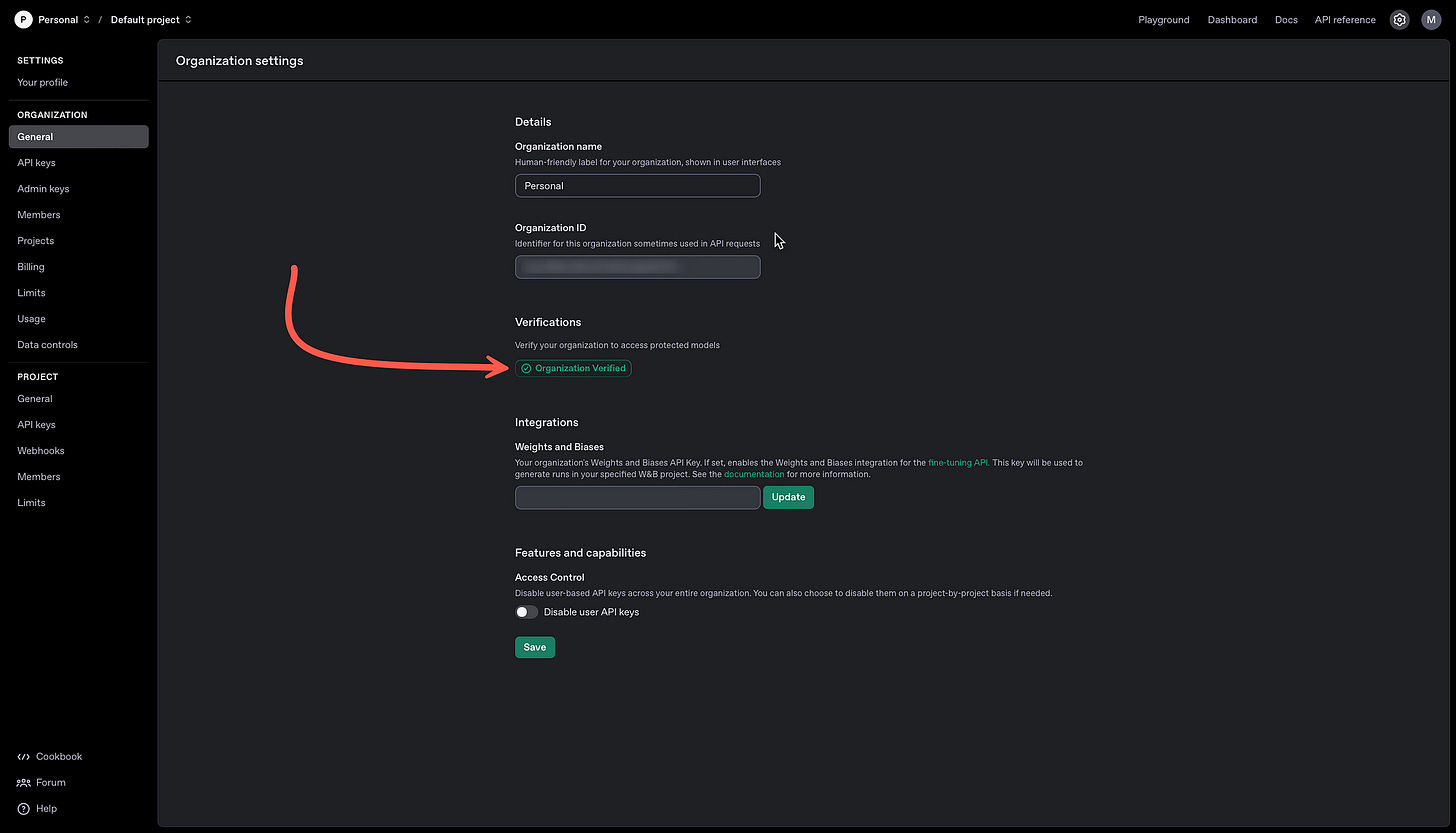

The OpenAI API gives a deeper level of control and the ability to add deep research to your own applications.

It’s a programmatic interface where you authenticate with an API key, define system messages, and configure tools explicitly.

For deep research, the API uses specialised models like o3-deep-research-2025-06-26 for high-quality synthesis or o4-mini-deep-research-2025-06-26 for speed.
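As a rough illustration of defining system messages and configuring tools explicitly, the function below assembles keyword arguments for a deep research call. The field shapes (developer and user messages in `input`, `web_search_preview` and `code_interpreter` tools, an optional `mcp` tool, `reasoning={"summary": "auto"}`, `background=True`) follow my reading of OpenAI's deep research guide for the Responses API; treat them as assumptions and check the current API reference. `build_request` and the `internal_docs` label are hypothetical.

```python
from typing import Any, Dict, List, Optional


def build_request(
    system_message: str,
    user_query: str,
    model: str = "o3-deep-research-2025-06-26",
    use_code_interpreter: bool = True,
    mcp_server_url: Optional[str] = None,
) -> Dict[str, Any]:
    """Assemble kwargs for a deep research call (field names are assumptions)."""
    # Web search is the baseline data source for a research task.
    tools: List[Dict[str, Any]] = [{"type": "web_search_preview"}]
    if use_code_interpreter:
        # Lets the model run code, e.g. to analyse data it has found.
        tools.append({"type": "code_interpreter", "container": {"type": "auto"}})
    if mcp_server_url:
        # Optional private data source exposed through an MCP server.
        tools.append({
            "type": "mcp",
            "server_label": "internal_docs",  # hypothetical label
            "server_url": mcp_server_url,
            "require_approval": "never",
        })
    return {
        "model": model,
        "input": [
            {"role": "developer", "content": [{"type": "input_text", "text": system_message}]},
            {"role": "user", "content": [{"type": "input_text", "text": user_query}]},
        ],
        "reasoning": {"summary": "auto"},
        "tools": tools,
        "background": True,  # run asynchronously; fetch the result later
    }
```

Assuming the official `openai` Python package is installed and `OPENAI_API_KEY` is set in the environment (the client reads it from there by default), the result could be passed as `OpenAI().responses.create(**build_request(...))`. Passing `model="o4-mini-deep-research-2025-06-26"` trades depth for speed.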

Key Advantages of Using the API

Why bother with the API’s complexity? The payoffs are huge for advanced users:

Customisation

Tailor workflows to your needs, for example by connecting an MCP (Model Context Protocol) server to query internal documents during travel planning or market analysis.

Depth & Accuracy

Handle complex queries like economic impacts with decomposed tasks, web tools, and synthesised reports. Outputs include bibliographies and data summaries ready for charts.
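A bibliography can be assembled from the citations attached to the final report. This sketch assumes the response has been converted to a plain dict (for example with the SDK object's `model_dump()` method) and that citations appear as `url_citation` annotations with `url` and `title` fields on the message content; verify those names against the current API reference. `bibliography` is a hypothetical helper.

```python
from typing import Any, Dict, List


def bibliography(response: Dict[str, Any]) -> List[str]:
    """Collect unique URL citations from the message items, in first-seen order."""
    seen: Dict[str, str] = {}  # url -> title; dicts keep insertion order
    for item in response.get("output", []):
        if item.get("type") != "message":
            continue  # skip searches, reasoning and other intermediate steps
        for part in item.get("content", []):
            for ann in part.get("annotations") or []:
                if ann.get("type") == "url_citation" and ann["url"] not in seen:
                    seen[ann["url"]] = ann.get("title") or ann["url"]
    return [f"[{i}] {title} <{url}>" for i, (url, title) in enumerate(seen.items(), start=1)]
```

Deduplicating by URL keeps the list short when the report cites the same source several times.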

Scalability

Run asynchronously for batch processing; embed in apps for automation.
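In background mode the request returns immediately and the result is fetched later. The sketch below polls until the job leaves a pending state; it assumes the statuses `queued` and `in_progress` mean the job is still running, and takes the retrieval function as a parameter so it can be tested without network access. With the official SDK, `retrieve` might be `lambda rid: client.responses.retrieve(rid).model_dump()`, but treat that wiring as an assumption. `wait_for_result` is hypothetical.

```python
import time
from typing import Any, Callable, Dict

PENDING = {"queued", "in_progress"}  # assumed "still running" statuses


def wait_for_result(
    retrieve: Callable[[str], Dict[str, Any]],
    response_id: str,
    poll_seconds: float = 10.0,
    timeout_seconds: float = 3600.0,
    sleep: Callable[[float], None] = time.sleep,
) -> Dict[str, Any]:
    """Poll a background job until it leaves a pending state or times out."""
    deadline = time.monotonic() + timeout_seconds
    while True:
        job = retrieve(response_id)
        if job.get("status") not in PENDING:
            return job  # completed, failed, cancelled, ...; caller checks status
        if time.monotonic() >= deadline:
            raise TimeoutError(f"response {response_id} still {job.get('status')}")
        sleep(poll_seconds)
```

For batch processing, many jobs can be submitted first and then collected with this loop, rather than holding one connection open per request.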

Transparency

Inspect every step, from raw searches to code executions, ensuring verifiable results.
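Because the response lists its intermediate steps as typed output items, a few lines are enough to audit them. This sketch assumes item types such as `reasoning`, `web_search_call`, `code_interpreter_call` and `message`, and that a search step records its query under `action.query`; both helpers, `summarise_trace` and `search_queries`, are hypothetical.

```python
from collections import Counter
from typing import Any, Dict, List


def summarise_trace(response: Dict[str, Any]) -> Dict[str, int]:
    """Count output items by type, in the order each type first appears."""
    return dict(Counter(item.get("type", "unknown") for item in response.get("output", [])))


def search_queries(response: Dict[str, Any]) -> List[str]:
    """List the queries issued by web search steps (assumes an `action.query` field)."""
    queries = []
    for item in response.get("output", []):
        if item.get("type") == "web_search_call":
            query = (item.get("action") or {}).get("query")
            if query:  # some search actions (e.g. opening a page) carry no query
                queries.append(query)
    return queries
```

Logging these summaries alongside each report gives a simple record of how a result was reached.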

Innovation

Build beyond chat: think enterprise tools for healthcare policy or financial modelling.

Lastly…

ChatGPT democratises AI by hiding complexity, but the OpenAI API empowers you to master it.

For deep research, the API’s exposed details offer unmatched advantages in customisation and scalability.

Whether you’re a developer or researcher, understanding these layers will transform your AI experience and expectations.

Chief Evangelist @ Kore.ai | I’m passionate about exploring the intersection of AI and language. From Language Models, AI Agents to Agentic Applications, Development Frameworks & Data-Centric Productivity Tools, I share insights and ideas on how these technologies are shaping the future.