OpenAI Deep Research AI Agent Architecture

OpenAI recently showed its ideal scenario for creating a Deep Research AI Agent…

Key Considerations

There is an optimal balance between the number of tools and AI Agents to use.

Yes, all of these AI Agents can be collapsed into one AI Agent with multiple tools. But there is an optimal balance between the number of tools in one AI Agent and the point at which it should be broken out into more AI Agents with fewer tools assigned to each.

When the tools assigned to a single AI Agent become too numerous, tool selection can become a problem. On this, NVIDIA has done great research on fine-tuning Language Models for accurate tool selection.

The general approach of OpenAI seems to be that of multiple AI Agent collaboration and orchestration.

The establishment of context and multiple AI Agent collaboration are very important. This brings us back to the original underpinnings of chatbots, where establishing intent was important. And for a research request, which is normally long-running, this firm establishment of intent and context is paramount.

Each AI Agent makes use of a different Language Model, which is interesting from the perspective of using less expensive models for sub-tasks and preparing the user query well before it is sent off as an expensive and long-running research request.

By matching the tool to the task, you optimise efficiency, minimise expenses, and ensure scalable AI integration in your applications.

Specific Use

When tackling intricate tasks that demand strategic planning, information synthesis from diverse sources, integration of specialised tools, or layered multi-step reasoning — such as conducting in-depth market analysis, debugging complex code issues, or generating comprehensive research reports — leverage Deep Research Agents.

They excel in orchestrating workflows, adapting to evolving contexts and delivering nuanced outputs by breaking down problems into manageable components and iterating as needed.

That said, reserve them exclusively for such demanding scenarios.

For everyday needs like rapid fact retrieval, straightforward Q&A exchanges, or brief conversational interactions, stick to the standard OpenAI Chat Completions API.

This simpler endpoint provides:

quicker response times,

lower computational overhead,

reduced costs,

making it ideal for high-volume or low-complexity use cases without the added latency of agent orchestration.
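For the simple case described here, a direct Chat Completions call could look roughly like the sketch below. It assumes the official `openai` Python package is installed and `OPENAI_API_KEY` is set. The model name `gpt-4o-mini`, the system prompt and the helper names `build_messages` and `quick_answer` are illustrative choices of mine, not something taken from the OpenAI example.

```python
def build_messages(question: str) -> list[dict]:
    """Build a minimal single-turn message list (hypothetical helper)."""
    return [
        {"role": "system", "content": "Answer briefly and factually."},
        {"role": "user", "content": question},
    ]


def quick_answer(question: str, model: str = "gpt-4o-mini") -> str:
    """Send one Chat Completions request and return the reply text.

    Assumes the `openai` package is installed and OPENAI_API_KEY is set;
    the default model name is an illustrative assumption.
    """
    from openai import OpenAI  # lazy import so build_messages works without the SDK

    client = OpenAI()
    response = client.chat.completions.create(
        model=model,
        messages=build_messages(question),
    )
    # The content can be None in some cases, so fall back to an empty string.
    return response.choices[0].message.content or ""
```

Calling `quick_answer("What is the capital of France?")` makes a single request with no handoffs or tool calls, which is where the lower latency and cost come from.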


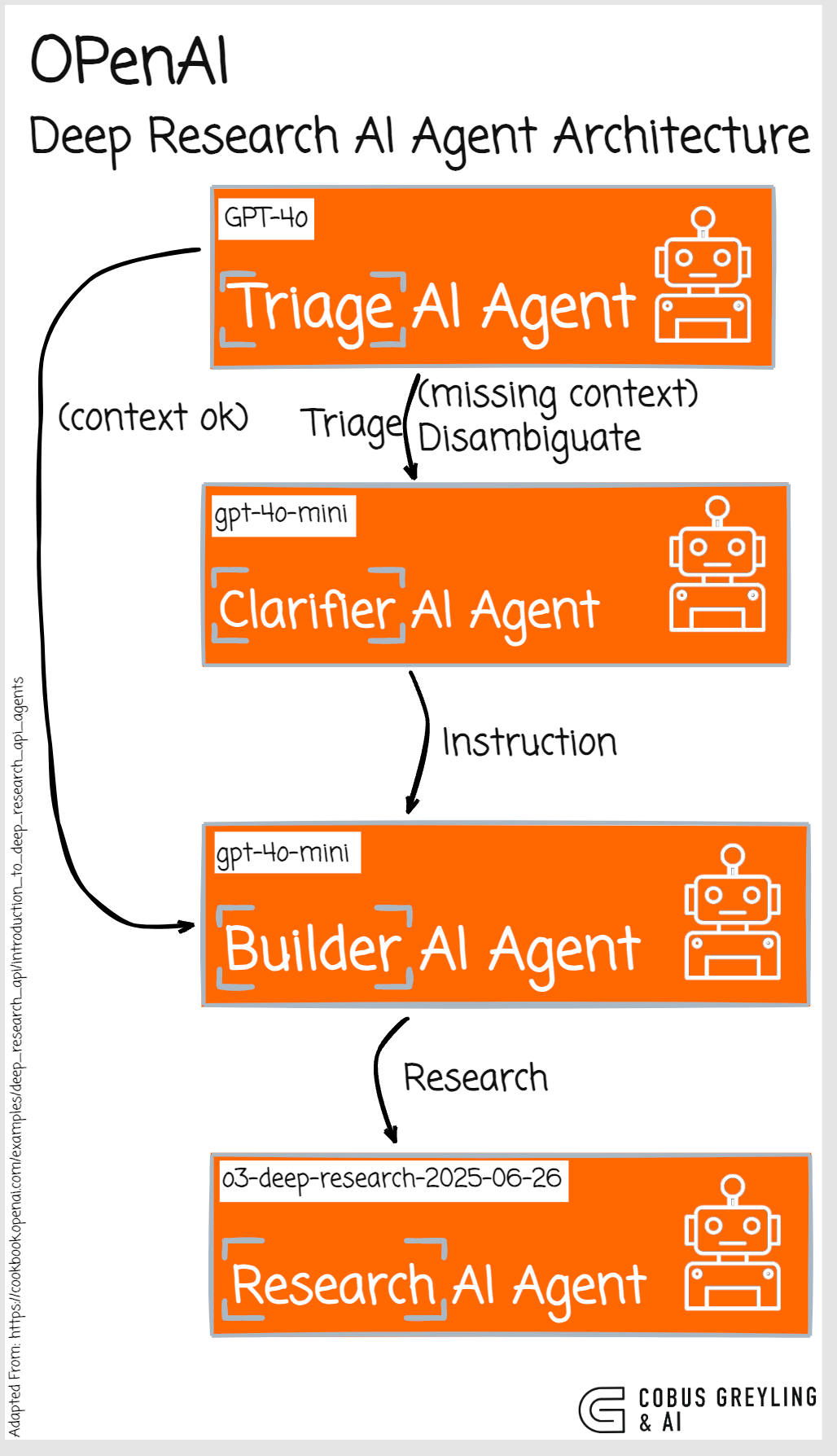

Four-Agent Deep Research Pipeline

Triage Agent

Hey there, I take a close look at the user’s query to see what’s going on.

If it feels like some key context is missing, I’ll send it over to the Clarifier Agent to dig deeper. Otherwise, if everything seems solid, I’ll route it straight to the Instruction Builder Agent to keep things moving.

Clarifier Agent

I’m all about clearing things up by asking those essential follow-up questions.

Then, I hang tight and wait for the user (or a mock response) to provide the answers we need.

Instruction Builder Agent

Once we’ve got that enriched input, I step in to transform it into a super precise research brief that’s ready for action.
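To make the clarify-then-build step a bit more concrete, here is a small stand-in written in plain Python. In the real pipeline both steps are handled by Language Models; the functions `collect_clarifications` and `build_research_brief`, the brief layout and the mock questions and answers are all hypothetical and only illustrate how the enriched input flows into a brief.

```python
from typing import Callable


def collect_clarifications(
    questions: list[str], answer_fn: Callable[[str], str]
) -> dict[str, str]:
    """Ask each follow-up question and record the answer (user or mock)."""
    return {question: answer_fn(question) for question in questions}


def build_research_brief(query: str, clarifications: dict[str, str]) -> str:
    """Fold the original query and the answers into one research brief."""
    lines = [f"Research request: {query}", "Constraints from the user:"]
    lines += [f"- {q} {a}" for q, a in clarifications.items()]
    return "\n".join(lines)


# Mock responses stand in for a real user during testing.
mock_answers = {"Which region?": "Europe", "What time frame?": "2020 to 2024"}

brief = build_research_brief(
    "Analyse the electric vehicle market",
    collect_clarifications(list(mock_answers), mock_answers.__getitem__),
)
print(brief)
```

Swapping `mock_answers.__getitem__` for a function that prompts a real user keeps the rest of the flow unchanged.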

Research Agent (o3-deep-research)

I dive into web-scale empirical research using the WebSearchTool to gather all the juicy details.

At the same time, I check our internal knowledge store with MCP — if there’s anything relevant, I pull in those handy snippets to beef up my references. To keep you in the loop, I stream out intermediate events along the way for full transparency.

Finally, I deliver the polished Research Artefact, which we can parse later on.
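The four agents and their handoffs can be sketched with the OpenAI Agents SDK roughly as follows. This is a minimal sketch and not the cookbook code: it assumes the `openai-agents` package (imported as `agents`) is installed, the instruction strings and the `gpt-4o-mini` model for the three lighter agents are my own placeholders, and the MCP connection to the internal knowledge store is left out.

```python
# Plain-data description of the four agents. Agent names follow the article;
# instruction texts and the "gpt-4o-mini" model are illustrative assumptions.
PIPELINE_SPEC = {
    "Research Agent": {
        "model": "o3-deep-research",
        "instructions": "Perform deep empirical research based on the brief.",
        "handoffs": [],
    },
    "Instruction Builder Agent": {
        "model": "gpt-4o-mini",
        "instructions": "Turn the enriched query into a precise research brief, then hand off.",
        "handoffs": ["Research Agent"],
    },
    "Clarifier Agent": {
        "model": "gpt-4o-mini",
        "instructions": "Ask the essential follow-up questions, then hand off.",
        "handoffs": ["Instruction Builder Agent"],
    },
    "Triage Agent": {
        "model": "gpt-4o-mini",
        "instructions": "If key context is missing, hand off to the Clarifier Agent; "
        "otherwise hand off to the Instruction Builder Agent.",
        "handoffs": ["Clarifier Agent", "Instruction Builder Agent"],
    },
}


def build_pipeline():
    """Create Agent objects from PIPELINE_SPEC and return the triage entry point.

    Assumes the `openai-agents` package is installed.
    """
    from agents import Agent, WebSearchTool  # lazy import: the spec needs no SDK

    built = {}
    # The spec lists downstream agents first, so handoff targets already exist.
    for name, spec in PIPELINE_SPEC.items():
        built[name] = Agent(
            name=name,
            model=spec["model"],
            instructions=spec["instructions"],
            handoffs=[built[target] for target in spec["handoffs"]],
            # Only the research agent searches the web; an MCP tool for the
            # internal knowledge store would be added here as well.
            tools=[WebSearchTool()] if name == "Research Agent" else [],
        )
    return built["Triage Agent"]
```

With the SDK installed and an API key configured, passing the returned triage agent and a user query to `Runner.run_sync` from the same package should start the chain at triage and follow the handoffs from there.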

Observability

The print_agent_interaction function, also known as parse_agent_interaction_flow in the OpenAI Cookbook’s Deep Research API agents example, serves as a handy utility for visualising and debugging the dynamic workflow of multi-agent systems.

It takes a stream of AI Agent events as input and iterates through each item, printing a clear, numbered sequence that highlights key activities such as agent handoffs, tool calls (including names and arguments), reasoning steps, and message outputs, all prefixed with the relevant agent’s name for easy tracking.

This makes it invaluable for developers building complex research pipelines, as it transforms raw event data into a human-readable format, enhancing transparency during testing or monitoring — think of it as a lightweight trace logger that skips over irrelevant details while spotlighting the core interactions between agents like triage, clarifier, instruction builder, and research components.
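The idea behind such a trace printer can be sketched in a few lines of standard-library Python. This is not the cookbook function: `format_agent_interaction` is a hypothetical name, the item type strings are assumed to mirror the run items of the Agents SDK, and reading the message text from `raw_item.text` is a simplification for the mock data used here.

```python
from types import SimpleNamespace


def format_agent_interaction(items) -> list[str]:
    """Return numbered, human-readable lines for a sequence of run items.

    Each item is assumed to expose `.type`, `.agent.name` and `.raw_item`.
    """
    lines = []
    for item in items:
        agent = getattr(getattr(item, "agent", None), "name", "Unknown Agent")
        raw = getattr(item, "raw_item", None)
        if item.type == "handoff_call_item":
            detail = f"Handoff call: {getattr(raw, 'name', '?')}"
        elif item.type == "tool_call_item":
            detail = f"Tool call: {getattr(raw, 'name', '?')}({getattr(raw, 'arguments', '')})"
        elif item.type == "reasoning_item":
            detail = "Reasoning step"
        elif item.type == "message_output_item":
            detail = f"Message: {getattr(raw, 'text', '')}"
        else:
            continue  # skip item types that only add noise to the trace
        lines.append(f"{len(lines) + 1}. [{agent}] {detail}")
    return lines


# Mock items standing in for a real agent event stream.
demo = [
    SimpleNamespace(
        type="handoff_call_item",
        agent=SimpleNamespace(name="Triage Agent"),
        raw_item=SimpleNamespace(name="transfer_to_clarifier_agent"),
    ),
    SimpleNamespace(type="some_other_item"),
    SimpleNamespace(
        type="tool_call_item",
        agent=SimpleNamespace(name="Research Agent"),
        raw_item=SimpleNamespace(name="web_search", arguments='{"query": "x"}'),
    ),
]

for line in format_agent_interaction(demo):
    print(line)
```

Returning the lines instead of printing them directly makes the trace easy to assert on in tests or to forward to a logger.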

Lastly

The next frontier is collaboration between AI Agents that do not belong to the same organisation.

A second frontier is the integration of AI Agents into the human world of complex web browsing and operating system navigation.

Chief Evangelist @ Kore.ai | I’m passionate about exploring the intersection of AI and language. From Language Models, AI Agents to Agentic Applications, Development Frameworks & Data-Centric Productivity Tools, I share insights and ideas on how these technologies are shaping the future.

Deep Research API with the Agents SDK | OpenAI Cookbook

This cookbook demonstrates how to build Agentic research workflows using the OpenAI Deep Research API and the OpenAI…cookbook.openai.com

COBUS GREYLING

Where AI Meets Language | Language Models, AI Agents, Agentic Applications, Development Frameworks & Data-Centric…www.cobusgreyling.com

https://github.com/openai/openai-cookbook/blob/main/examples/deep_research_api/introduction_to_deep_research_api_agents.ipynb