OpenAI Has Added Remote MCP Server Support

OpenAI has introduced support for remote MCP servers in its Responses API, following the integration of MCP in the Agents SDK.

This update allows developers to connect OpenAI’s models to tools hosted on any MCP-compliant server using minimal code.

Examples include linking to platforms like Cloudflare, HubSpot, Intercom, PayPal, Plaid, Shopify, Stripe, Square, Twilio, and Zapier.

OpenAI anticipates rapid growth in the MCP server ecosystem, enabling developers to create AI Agents that seamlessly interact with users’ existing tools and data. To support this standard, OpenAI has joined the MCP steering committee.

The Model Context Protocol (MCP) is an open standard that simplifies how applications share structured context with large language models (LLMs).

What is the significance?

This isn’t just another API integration or function call; it’s a new approach to building smarter, more connected AI Agents, as the practical examples further down show.

Unlike typical integrations that might add a single tool or feature, MCP creates a standardised way for AI models to tap into a wide range of external tools and data sources, like payment systems, CRMs, or messaging platforms, in a consistent, structured format.

Think of it like giving AI a universal plug to connect to almost any app or service without custom coding for each one.

This makes it easier and faster to build powerful agents that can perform complex tasks — like processing payments, managing customer data, or automating workflows — across multiple platforms.

The difference lies in its scalability and flexibility: MCP sets up an ecosystem where new tools can be added effortlessly, potentially transforming how AI interacts with the real world.

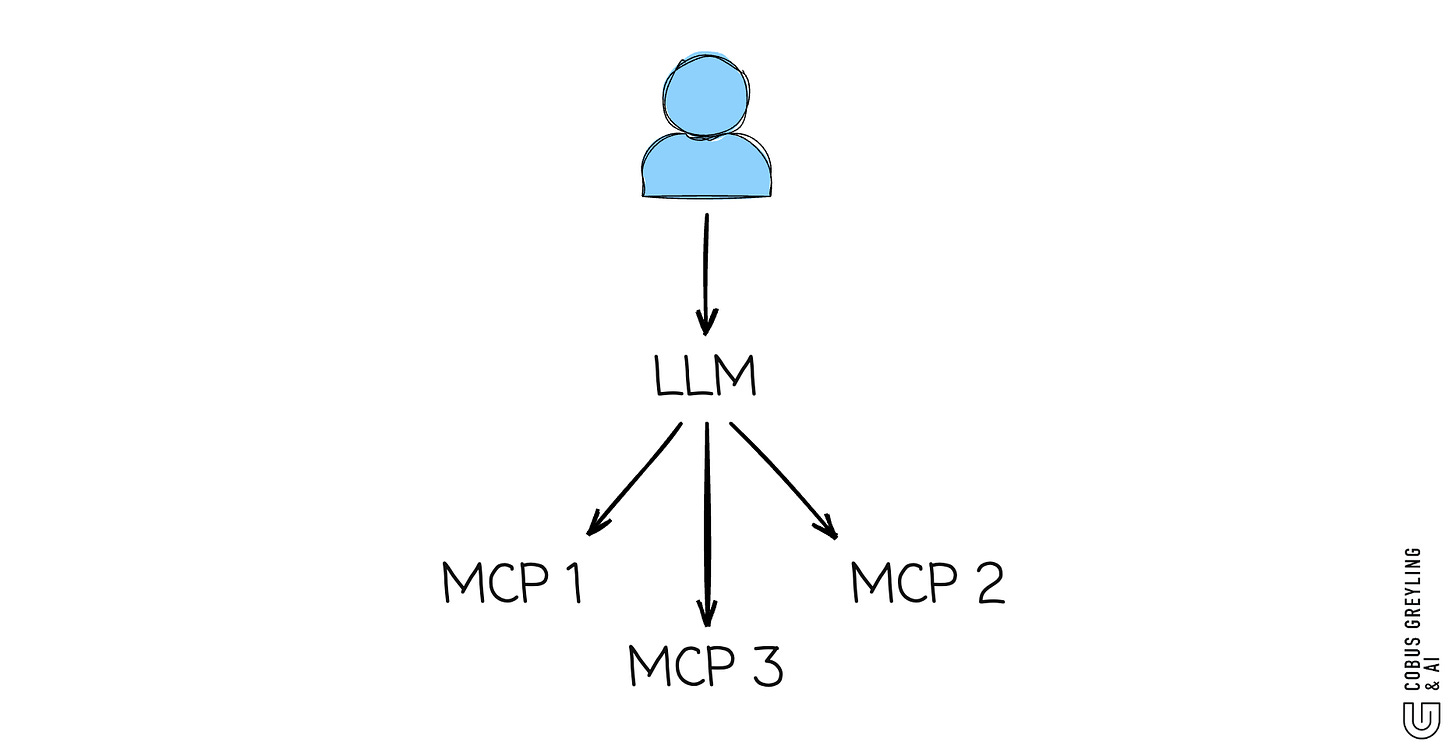

How the Model “Knows” What MCP Server to Call

There are two main ways this routing can be managed:

1. Embedded Routing in the Model

Prompt Engineering or Fine-Tuning

The LLM is trained or prompted with instructions like:

If the user is asking about travel bookings, use the TravelMCP; if it’s a calendar task, use CalendarMCP.

It may be prompted with examples to learn patterns.
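To make prompt-based routing concrete, here is a minimal sketch that turns a small mapping of server labels to topics into routing guidance. The labels `travel` and `calendar` and the helper `build_routing_instructions` are hypothetical names for illustration only.

```python
# Hypothetical server labels; the routing rules live entirely in the prompt.
ROUTING_RULES = {
    "travel": "travel bookings",
    "calendar": "calendar tasks",
}


def build_routing_instructions(rules: dict) -> str:
    """Turn a {server_label: topic} mapping into plain-language routing guidance."""
    lines = ["Choose the MCP server that matches the user's request:"]
    for label, topic in rules.items():
        lines.append(f"- For {topic}, use the tools from the '{label}' server.")
    lines.append("If no server fits, answer directly without calling a tool.")
    return "\n".join(lines)


instructions = build_routing_instructions(ROUTING_RULES)
print(instructions)
```

The resulting string could be passed as the `instructions` argument of `client.responses.create(...)`, alongside the matching MCP entries under `tools`.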

2. External Orchestration Layer

A middleware service inspects the model’s intermediate output or runs a classifier to route the request to the correct MCP.

This layer might also do validation, logging, and security filtering.
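As a rough sketch of such an orchestration layer, the snippet below uses a simple keyword match as a stand-in for a real classifier and returns only the MCP tool entries relevant to a query. The server catalogue, the `example.com` URLs, and the keyword sets are all assumptions made for illustration.

```python
import re

# Hypothetical catalogue of MCP servers; URLs and keywords are placeholders.
SERVERS = {
    "calendar": {
        "url": "https://example.com/calendar/mcp",
        "keywords": {"meeting", "schedule", "lunch", "calendar"},
    },
    "weather": {
        "url": "https://example.com/weather/mcp",
        "keywords": {"rain", "forecast", "weather", "temperature"},
    },
}


def route(query: str) -> list:
    """Return MCP tool entries for every server whose keywords appear in the query."""
    words = set(re.findall(r"[a-z]+", query.lower()))
    tools = []
    for label, server in SERVERS.items():
        if words & server["keywords"]:
            tools.append({
                "type": "mcp",
                "server_label": label,
                "server_url": server["url"],
                "require_approval": "always",
            })
    return tools


print([t["server_label"] for t in route("Will it rain tomorrow in San Francisco?")])
```

The middleware would then pass the returned list as `tools` in the model request, so the model only ever sees the servers the router selected. Validation and logging could be added around `route` in the same layer.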

How to Test the LLM’s Decision

You can try changing the prompt and observe how the model reroutes:

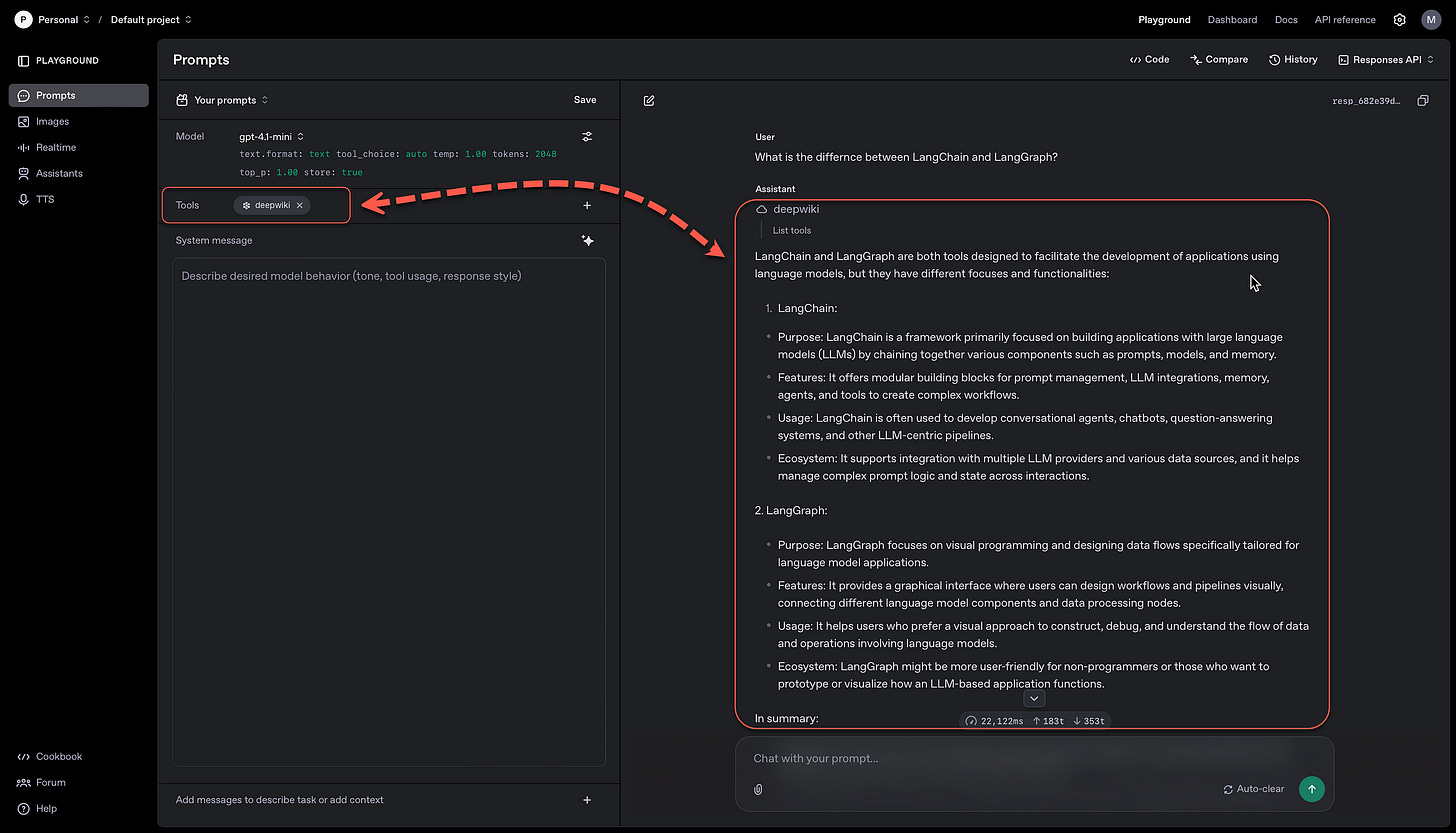

Prompt: “What’s the difference between LangChain and LangGraph?”

LLM picks:

deepwiki → ask_question

Prompt: “Will it rain tomorrow in San Francisco?”

LLM picks:

weather → get_forecast

Prompt: “Add lunch with Sarah on Friday at noon”

LLM picks:

calendar → create_event
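One way to check the model’s choice in code, rather than by eye, is to scan the response output for MCP tool calls. The sketch below assumes that tool invocations appear as output items with `type` set to `mcp_call` and carry `server_label` and `name` fields, which is my understanding of the Responses API; verify this against the current documentation. A stand-in object replaces the real response so the snippet runs without an API call.

```python
from types import SimpleNamespace


def picked_tools(response) -> list:
    """Return (server_label, tool_name) for every MCP tool call in a response."""
    return [
        (item.server_label, item.name)
        for item in response.output
        if getattr(item, "type", None) == "mcp_call"
    ]


# Stand-in for a real response object, for illustration only.
fake_response = SimpleNamespace(output=[
    SimpleNamespace(type="mcp_list_tools", server_label="weather", tools=[]),
    SimpleNamespace(type="mcp_call", server_label="weather", name="get_forecast"),
    SimpleNamespace(type="message", role="assistant"),
])

print(picked_tools(fake_response))
```

An empty list means the model answered without calling any MCP tool.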

Below is a practical Python code example of an application with three MCP integrations.

from openai import OpenAI

client = OpenAI()

response = client.responses.create(
    model="gpt-4.1-mini",
    input=[
        {
            "role": "user",
            "content": "Schedule a meeting with the team for next Tuesday at 3 PM."
        }
    ],
    text={"format": {"type": "text"}},
    reasoning={},  # Reasoning options apply to reasoning models; left empty here
    tools=[
        {
            "type": "mcp",
            "server_label": "calendar",
            "server_url": "https://mcp.calendar.ai/mcp",
            "allowed_tools": [
                "create_event",
                "check_availability"
            ],
            "require_approval": "always"  # or "never" to skip approval for trusted servers
        },
        {
            "type": "mcp",
            "server_label": "deepwiki",
            "server_url": "https://mcp.deepwiki.com/mcp",
            "allowed_tools": [
                "read_wiki_structure",
                "read_wiki_contents",
                "ask_question"
            ],
            "require_approval": "always"
        },
        {
            "type": "mcp",
            "server_label": "weather",
            "server_url": "https://mcp.weatherapi.com/mcp",
            "allowed_tools": [
                "get_forecast",
                "get_current_weather"
            ],
            "require_approval": "always"
        }
    ],
    temperature=1,
    max_output_tokens=2048,
    top_p=1,
    store=True
)

Function Calling vs MCP

Function calling and MCP (Model Context Protocol) serve similar purposes in LLM ecosystems, since both enable the model to take structured actions, but they differ in scope, architecture, and control.

Function Calling

Function calling is a more lightweight, schema-based mechanism where individual functions are described to the model with JSON schemas for their parameters, and the model requests a call during inference; the application then executes the function and returns the result.

It’s often used for integrating APIs, tools, or plugins within a single model session and the decision of which function to call is tightly bound to the model’s reasoning and schema matching.
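For comparison with the MCP examples, here is a minimal sketch of the function-calling round trip on the application side. The tool definition follows the flat function-tool shape of the Responses API as I understand it; `get_weather` and its return value are hypothetical, and the model call itself is left out so the snippet runs offline.

```python
import json

# Function tool definition the application would pass under `tools`.
GET_WEATHER_TOOL = {
    "type": "function",
    "name": "get_weather",
    "description": "Return the current temperature for a city.",
    "parameters": {
        "type": "object",
        "properties": {"city": {"type": "string"}},
        "required": ["city"],
        "additionalProperties": False,
    },
}


def get_weather(city: str) -> dict:
    """Hypothetical local implementation; a real one would call a weather API."""
    return {"city": city, "temperature_c": 18}


LOCAL_FUNCTIONS = {"get_weather": get_weather}


def run_function_call(name: str, arguments_json: str, call_id: str) -> dict:
    """Execute the function the model asked for and build the item sent back to it."""
    result = LOCAL_FUNCTIONS[name](**json.loads(arguments_json))
    return {
        "type": "function_call_output",
        "call_id": call_id,
        "output": json.dumps(result),
    }


item = run_function_call("get_weather", '{"city": "Paris"}', "call_123")
print(item)
```

The point of the sketch is the extra hop: with function calling, the application runs the function and sends the result back in a second request, whereas with the hosted MCP tool the call to the server happens on the platform side.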

MCP

MCP, on the other hand, represents a more modular, service-oriented approach.

It typically abstracts sets of related functions (tools) under named, external service endpoints (servers), allowing for dynamic, multi-server orchestration.

MCP enables better tool isolation, access control, and server-side logic, which makes it more suitable for enterprise use cases where the LLM acts as a router and formatter for interacting with complex backend systems.

While function calling is ideal for direct, lightweight interactions, MCP excels at structured, modular workflows and integrating distinct subsystems with governance.
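The access-control side can be sketched with the same fields used in the examples in this article: `allowed_tools` restricts which tools the model may see, and `require_approval` governs whether a call needs sign-off. The helper `mcp_tool` below is hypothetical, and the per-tool object form of `require_approval` reflects my reading of the OpenAI documentation, so treat it as an assumption to verify.

```python
def mcp_tool(label: str, url: str, allowed: list, auto_approved: tuple = ()) -> dict:
    """Build an MCP tool entry that exposes only the `allowed` tools.

    Tools listed in `auto_approved` are marked as never requiring approval.
    """
    unknown = set(auto_approved) - set(allowed)
    if unknown:
        raise ValueError(f"auto-approved tools not in allowlist: {sorted(unknown)}")
    config = {
        "type": "mcp",
        "server_label": label,
        "server_url": url,
        "allowed_tools": list(allowed),
        "require_approval": "always",
    }
    if auto_approved:
        config["require_approval"] = {"never": {"tool_names": list(auto_approved)}}
    return config


deepwiki = mcp_tool(
    "deepwiki",
    "https://mcp.deepwiki.com/mcp",
    allowed=["read_wiki_structure", "ask_question"],
    auto_approved=("read_wiki_structure",),
)
print(deepwiki["require_approval"])
```

Keeping the allowlist and approval policy in one helper makes the governance rules for each server easy to review in a single place.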

OpenAI MCP

Below is an example from the OpenAI console: an MCP server (deepwiki) is defined, and the MCP response is shown on the right-hand side.

The video below shows the process of integrating an LLM call with an MCP server. The code can then be copied from the console and used in an application…

Connecting AI Agents to external services traditionally involves function calling, which requires multiple network hops, causing latency and complexity.

The hosted Model Context Protocol (MCP) tool in the Responses API simplifies this.

By configuring the model to connect to an MCP server, which centralises standard commands like “search product catalog” or “add item to cart”, developers enable direct interaction, reducing latency and simplifying tool management.

Why It Matters

Unlike function calling’s slow, multi-step process, MCP streamlines integrations with a single connection to a server hosting multiple tools, making AI agents faster and easier to scale.

The code:

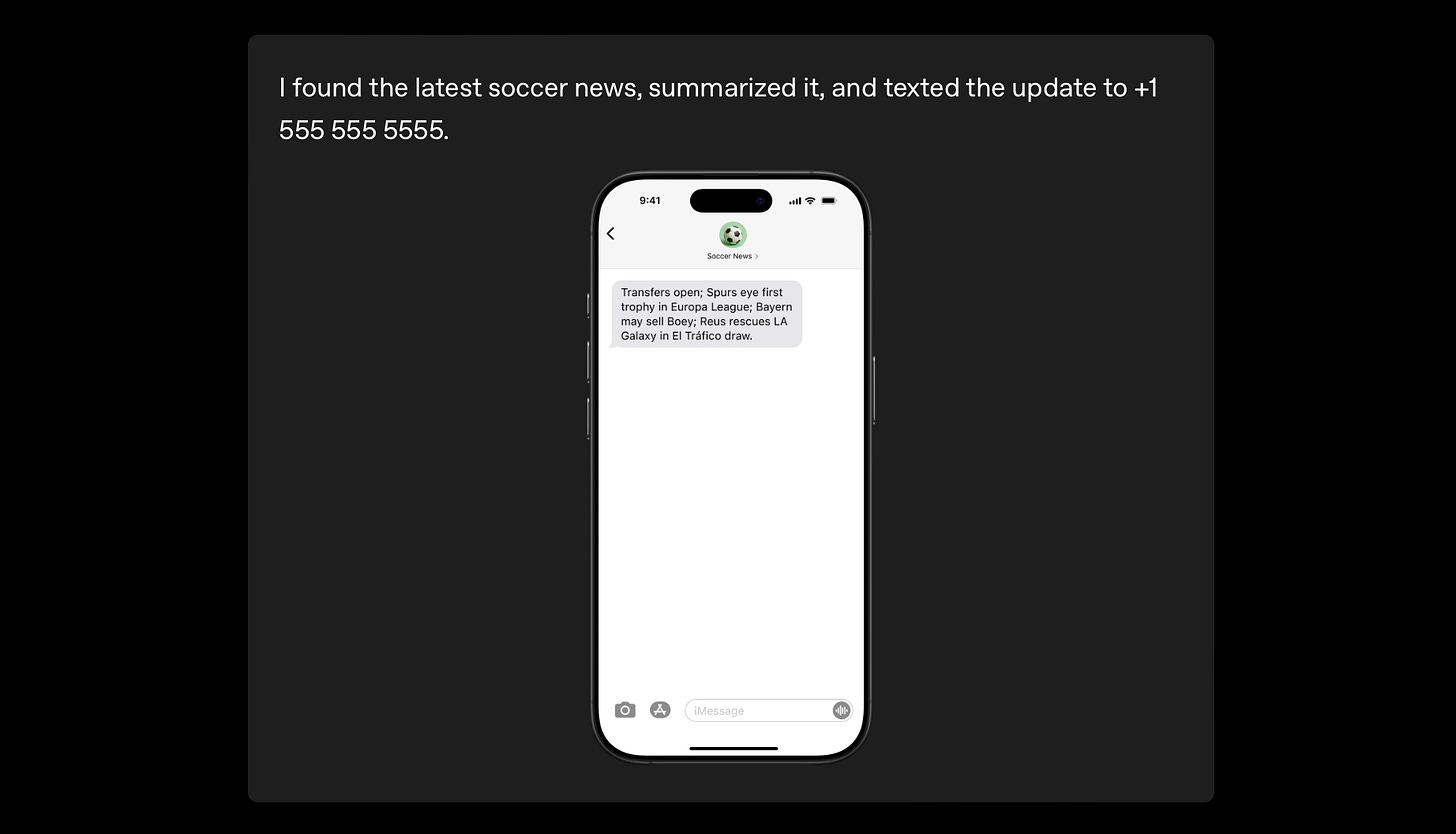

from openai import OpenAI

client = OpenAI()

response = client.responses.create(
    model="gpt-4o",
    tools=[
        {"type": "web_search_preview"},
        {
            "type": "mcp",
            "server_label": "twilio",
            "server_url": "https://<function-domain>.twil.io/mcp",
            # Placeholder value; supply the real signature for your deployment
            "headers": {"x-twilio-signature": "..."}
        }
    ],
    input="Get the latest soccer news and text a short summary to +1 555 555 5555"
)

Practical example of output…

Practical Code Example

The Python code below can be copied and pasted directly into a Notebook. It is a working MCP integration with DeepWiki.

Under input, the content field holds the user query.

Under tools, the MCP integration is defined.

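import os

# Replace the placeholder with your own key, or set OPENAI_API_KEY outside the notebook
os.environ["OPENAI_API_KEY"] = "<Your API Key>"

from openai import OpenAI

client = OpenAI()

response = client.responses.create(
    model="gpt-4.1-mini",
    # Provide a valid input with a user message
    input=[
        {
            "role": "user",
            "content": "What is the difference between LangChain and LangGraph?"
        }
    ],
    text={"format": {"type": "text"}},
    reasoning={},
    tools=[
        {
            "type": "mcp",
            "server_label": "deepwiki",
            "server_url": "https://mcp.deepwiki.com/mcp",
            "allowed_tools": [
                "read_wiki_structure",
                "read_wiki_contents",
                "ask_question"
            ],
            "require_approval": "always"
        }
    ],
    temperature=1,
    max_output_tokens=2048,
    top_p=1,
    store=True
)

# response.json without parentheses prints the bound method, whose repr embeds
# the whole Response object; call response.model_dump_json(indent=2) for real JSON
print(response.json)

Below is the printed response. It contains an mcp_list_tools item with the three tools advertised by the DeepWiki MCP server, followed by the assistant’s message. No mcp_call item appears, so in this run the model answered without querying DeepWiki.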

<bound method BaseModel.json of Response(id="resp_682e32dac7dc819193d858e68be9c9110dcd7b33b642c74d",
  created_at=1747858138.0,
  error=None,
  incomplete_details=None,
  instructions=None,
  metadata={},
  model="gpt-4.1-mini-2025-04-14",
  object="response",
  output=[
    ResponseOutputMessage(id="mcpl_682e32dacc988191a9706b719ce0a9270dcd7b33b642c74d",
      content=None,
      role=None,
      status=None,
      type="mcp_list_tools",
      server_label="deepwiki",
      tools=[
        {
          "annotations": None,
          "description": "Get a list of documentation topics for a GitHub repository",
          "input_schema": {
            "type": "object",
            "properties": {
              "repoName": {
                "type": "string",
                "description": "GitHub repository: owner/repo (e.g. \"facebook/react\")"
              }
            },
            "required": ["repoName"],
            "additionalProperties": False,
            "$schema": "http://json-schema.org/draft-07/schema#"
          },
          "name": "read_wiki_structure"
        },
        {
          "annotations": None,
          "description": "View documentation about a GitHub repository",
          "input_schema": {
            "type": "object",
            "properties": {
              "repoName": {
                "type": "string",
                "description": "GitHub repository: owner/repo (e.g. \"facebook/react\")"
              }
            },
            "required": ["repoName"],
            "additionalProperties": False,
            "$schema": "http://json-schema.org/draft-07/schema#"
          },
          "name": "read_wiki_contents"
        },
        {
          "annotations": None,
          "description": "Ask any question about a GitHub repository",
          "input_schema": {
            "type": "object",
            "properties": {
              "repoName": {
                "type": "string",
                "description": "GitHub repository: owner/repo (e.g. \"facebook/react\")"
              },
              "question": {
                "type": "string",
                "description": "The question to ask about the repository"
              }
            },
            "required": ["repoName", "question"],
            "additionalProperties": False,
            "$schema": "http://json-schema.org/draft-07/schema#"
          },
          "name": "ask_question"
        }
      ]),
    ResponseOutputMessage(id="msg_682e32e7b198819190aad4589153fb030dcd7b33b642c74d",
      content=[
        ResponseOutputText(annotations=[],
          text="LangChain and LangGraph are both tools related to working with language models, but they differ in their focus and approach:\n\n1. LangChain:\n- LangChain is a framework designed to facilitate the development of applications using large language models (LLMs).\n- It provides abstractions and components to build complex chains of calls to language models and other utilities.\n- LangChain supports tasks such as prompt management, chaining prompts together, memory integration, document loading, and indexing.\n- It is useful for building conversational agents, chatbots, question-answering systems, and other applications that require orchestrating multiple calls to LLMs and external data sources.\n\n2. LangGraph:\n- LangGraph is less commonly referenced and may refer to tools or concepts related to representing language model interactions or knowledge graphs derived from language tasks.\n- It could be a tool or concept designed to visualize, structure, or orchestrate the flow of data or logic in language model workflows using a graph-based approach.\n- The main idea behind LangGraph, if considered as a graph-oriented framework, would be to provide a visual or structural way to manage components and data flow for language tasks, potentially emphasizing modularity and traceability.\n\nIn summary:\n- LangChain is a comprehensive development framework for building applications that utilize language models with chaining and modular components.\n- LangGraph, if defined as a graph-based approach, focuses on structuring or visualizing language model workflows or data as graphs.\n\nIf you have a specific source or repository for \"LangGraph,\" I can help provide more detailed and exact information.",
          type="output_text")
      ],
      role="assistant",
      status="completed",
      type="message")
  ],
  parallel_tool_calls=True,
  temperature=1.0,
  tool_choice="auto",
  tools=[
    FileSearchTool(type="mcp",
      vector_store_ids=None,
      filters=None,
      max_num_results=None,
      ranking_options=None,
      allowed_tools=["read_wiki_structure", "read_wiki_contents", "ask_question"],
      headers=None,
      require_approval="always",
      server_label="deepwiki",
      server_url="https://mcp.deepwiki.com/<redacted>")
  ],
  top_p=1.0,
  max_output_tokens=2048,
  previous_response_id=None,
  reasoning=Reasoning(effort=None, generate_summary=None, summary=None),
  service_tier="default",
  status="completed",
  text=ResponseTextConfig(format=ResponseFormatText(type="text")),
  truncation="disabled",
  usage=ResponseUsage(input_tokens=183,
    input_tokens_details=InputTokensDetails(cached_tokens=0),
    output_tokens=313,
    output_tokens_details=OutputTokensDetails(reasoning_tokens=0),
    total_tokens=496),
  user=None,
  background=False,
  store=True)>

And the response in text…

LangChain and LangGraph are both tools related to working with language models, but they differ in their focus and approach:

1. LangChain:
- LangChain is a framework designed to facilitate the development of applications using large language models (LLMs).
- It provides abstractions and components to build complex chains of calls to language models and other utilities.
- LangChain supports tasks such as prompt management, chaining prompts together, memory integration, document loading, and indexing.
- It is useful for building conversational agents, chatbots, question-answering systems, and other applications that require orchestrating multiple calls to LLMs and external data sources.

2. LangGraph:
- LangGraph is less commonly referenced and may refer to tools or concepts related to representing language model interactions or knowledge graphs derived from language tasks.
- It could be a tool or concept designed to visualize, structure, or orchestrate the flow of data or logic in language model workflows using a graph-based approach.
- The main idea behind LangGraph, if considered as a graph-oriented framework, would be to provide a visual or structural way to manage components and data flow for language tasks, potentially emphasizing modularity and traceability.

In summary:
- LangChain is a comprehensive development framework for building applications that utilize language models with chaining and modular components.
- LangGraph, if defined as a graph-based approach, focuses on structuring or visualizing language model workflows or data as graphs.

If you have a specific source or repository for "LangGraph," I can help provide more detailed and exact information.
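Because the example sets require_approval to "always", a run in which the model does decide to call a DeepWiki tool would be expected to pause with an approval request instead of executing the call. The sketch below shows one way to build the follow-up input, assuming (from my reading of the OpenAI documentation) that pending requests appear as `mcp_approval_request` output items and are answered with `mcp_approval_response` items. The helper name, the stand-in response object, and its IDs are made up for illustration.

```python
from types import SimpleNamespace


def approval_responses(response, is_allowed) -> list:
    """Build an mcp_approval_response item for every pending approval request."""
    items = []
    for item in response.output:
        if getattr(item, "type", None) != "mcp_approval_request":
            continue
        items.append({
            "type": "mcp_approval_response",
            "approval_request_id": item.id,
            "approve": bool(is_allowed(item)),
        })
    return items


# Stand-in for a real paused response, for illustration only.
pending = SimpleNamespace(id="resp_demo", output=[
    SimpleNamespace(
        type="mcp_approval_request",
        id="mcpr_demo",
        server_label="deepwiki",
        name="ask_question",
        arguments='{"repoName": "langchain-ai/langgraph"}',
    ),
])

follow_up_input = approval_responses(pending, lambda item: item.name == "ask_question")
print(follow_up_input)
```

The list would then be sent as `input` in a second `client.responses.create(...)` call, with `previous_response_id` set to the paused response’s ID and the same `tools` definition.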

In Closing

Functions are modular, self-contained units of logic within the LLM and SDK, designed to process data and prepare structured outputs for API interactions.

They analyse user query requirements to select the appropriate API and format output data in the correct structure.

Functions operate internally to the LLM/SDK, without directly interacting with APIs or transmitting data, leaving external communication to other components like MCP clients.

MCP Client Installation allows the OpenAI SDK to instantiate and manage one or more MCP (Model Context Protocol) clients, which facilitate communication with external services.

Each MCP client is configured in the SDK code with parameters such as endpoint URLs, authentication credentials, and timeouts.

The SDK acts as a runtime environment, coordinating MCP client interactions with external systems.

Orchestration is the process by which the LLM analyses a query and selects the appropriate MCP client(s) to fulfil it, based on the query’s intent and the clients’ configured capabilities.

Unlike functions, MCP clients interact with external systems by sending requests (e.g., via HTTP) and receiving responses.

The LLM’s decision-making may involve parsing the query, matching tasks to client capabilities, and coordinating multiple clients for complex queries.

Tools are components integrated into an AI Agent, enabling it to perform tasks like accessing APIs, executing calculations, or querying databases.

Tools, as a subset of an AI Agent’s capabilities, can be combined to create complex workflows, enhancing the agent’s ability to handle diverse queries.

For example, a tool might aggregate data from multiple MCP clients to provide comprehensive responses.

Chief Evangelist @ Kore.ai | I’m passionate about exploring the intersection of AI and language. From Language Models, AI Agents to Agentic Applications, Development Frameworks & Data-Centric Productivity Tools, I share insights and ideas on how these technologies are shaping the future.

Additional Resources

openai-cookbook/examples/mcp/mcp_tool_guide.ipynb at main · openai/openai-cookbook (github.com)

Guide to Using the Responses API's MCP Tool | OpenAI Cookbook (cookbook.openai.com)

https://openai.com/index/new-tools-and-features-in-the-responses-api/

https://platform.openai.com/docs/guides/tools-remote-mcp

COBUS GREYLING

Where AI Meets Language | Language Models, AI Agents, Agentic Applications, Development Frameworks & Data-Centric… (www.cobusgreyling.com)