RAG Foundry By Intel

RAG Foundry is an open-source framework from Intel, designed to simplify the implementation & evaluation of Retrieval-Augmented Generation (RAG) systems.

It streamlines the process by integrating data creation, model training, inference & evaluation into a single workflow. Its effectiveness has been demonstrated by fine-tuning LLMs such as Llama-3 and Phi-3, which improved performance across several knowledge-intensive datasets.

Introduction

Considering the current language model landscape, several product and architecture principles have begun to crystallise in the market:

Open-source Small Language Models (SLMs) for local hosting and inference. The availability of open-source Language Models paves the way for fine-tuning them for highly niche tasks, like RAG-specific implementations.

Orchestration of multiple models to constitute a solution.

AI Agents / Agentic Applications have come to the fore, with tooling also focussing on the rapid creation of tools for AI Agents to use.

AI Agents are starting to leverage Multi-Modal Language Models / Foundation Models to interpret and navigate GUIs on phones, PCs, browsers, etc.

Pipelines are being created via no-code flow-builder tools, which are exposed via APIs to act as Agent Tools.

Language Model fine-tuning is performed with data which mimics the behaviour the model needs to exhibit. Examples include task decomposition and reasoning, with the aim of training models to be better suited for Agent use.

Due to the specific requirements of fine-tuning, research into and use of synthetic data are on the rise. Language Models are used to generate highly granular, bespoke training data.

Various methods are being developed to create diverse, nonrepetitive and valuable synthetic training data.

Creating data for AI-Agent-specific fine-tuning involves incorporating environments and planning tasks into the data, synthesising expert-level decompositions and task trajectories (sequences of action-observation pairs) for these planning tasks, and then instruction-tuning LLMs on the synthesised trajectory data.

RAG functionality is evolving, with Agentic RAG, post-generation quality benchmarking, graph data approaches and, as seen in this article, RAG-specific fine-tuning of models.
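To make the idea of trajectory data more concrete, here is a minimal Python sketch of how a sequence of action-observation pairs might be flattened into a single instruction-tuning record. The `Step` and `Trajectory` classes, the `to_instruction_example` helper, the `prompt`/`completion` field names and the meeting-room task are all hypothetical illustrations, not a format prescribed by any particular framework.

```python
from dataclasses import dataclass, field
import json
from typing import List


@dataclass
class Step:
    """One action-observation pair in an agent trajectory."""
    action: str
    observation: str


@dataclass
class Trajectory:
    task: str                                    # the planning task given to the agent
    steps: List[Step] = field(default_factory=list)
    final_answer: str = ""


def to_instruction_example(traj: Trajectory) -> dict:
    """Flatten a trajectory into a (hypothetical) prompt/completion record."""
    lines = []
    for i, step in enumerate(traj.steps, start=1):
        lines.append(f"Action {i}: {step.action}")
        lines.append(f"Observation {i}: {step.observation}")
    lines.append(f"Final answer: {traj.final_answer}")
    return {"prompt": f"Task: {traj.task}", "completion": "\n".join(lines)}


# Hypothetical example task solved with two tool calls.
traj = Trajectory(
    task="Book a meeting room for Friday.",
    steps=[
        Step("list_rooms(day='Friday')", "Rooms A and C are free."),
        Step("book_room(room='A', day='Friday')", "Booking confirmed."),
    ],
    final_answer="Room A is booked for Friday.",
)
print(json.dumps(to_instruction_example(traj), indent=2))
```

Keeping the numbered actions and observations in the completion means the model is trained to reproduce the whole decomposition, not only the final answer.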

RAG Foundry

What is RAG Foundry?

RAG Foundry is an open-source framework from Intel, designed to augment large language models for Retrieval-Augmented Generation (RAG) use cases.

RAG Foundry streamlines the entire process by integrating data creation, training, inference, & evaluation into a unified workflow.

This facilitates the development of datasets via data augmentation processes, enabling more effective training and evaluation of large language models in RAG environments.
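As a rough illustration of what data augmentation for RAG can mean, the sketch below attaches retrieved documents to each question/answer pair. It is a minimal sketch assuming a toy word-overlap retriever; the `retrieve` and `augment` functions and the `query`/`answer`/`docs` field names are hypothetical and are not RAG Foundry's API. A real pipeline would use BM25 or a dense retriever instead.

```python
import re
from collections import Counter
from typing import Dict, List


def tokenize(text: str) -> List[str]:
    # Lowercase word tokens; punctuation is dropped.
    return re.findall(r"\w+", text.lower())


def retrieve(query: str, corpus: List[str], k: int = 2) -> List[str]:
    """Toy lexical retriever: rank documents by the number of shared query tokens."""
    q = Counter(tokenize(query))

    def score(doc: str) -> int:
        d = Counter(tokenize(doc))
        return sum(min(q[t], d[t]) for t in q)

    # sorted() is stable, so ties keep their original corpus order.
    return sorted(corpus, key=score, reverse=True)[:k]


def augment(dataset: List[Dict], corpus: List[str], k: int = 2) -> List[Dict]:
    """Return copies of each example with a 'docs' field of retrieved context."""
    return [{**ex, "docs": retrieve(ex["query"], corpus, k)} for ex in dataset]


corpus = [
    "The Eiffel Tower is in Paris.",
    "Mount Fuji is the highest mountain in Japan.",
    "Paris is the capital of France.",
]
dataset = [{"query": "What is the capital of France?", "answer": "Paris"}]
augmented = augment(dataset, corpus, k=1)
print(augmented[0]["docs"])  # ['Paris is the capital of France.']
```

The augmented records keep the original answer, so they can feed both training (question plus context as input, answer as target) and evaluation.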

Why RAG Foundry?

This integration facilitates rapid prototyping and experimentation with various RAG techniques.

It also enables users to efficiently generate datasets and train RAG models using internal or specialised knowledge sources.

The framework’s effectiveness is demonstrated by augmenting and fine-tuning the Llama-3 and Phi-3 models with diverse RAG configurations, resulting in consistent improvements across three knowledge-intensive datasets.

Below is the template for inserting relevant documents as context:

Question: {query}

Context: {docs}

Answer this question using the information given in the context above. Here is things to pay attention to:

- First provide step-by-step reasoning on how to answer the question.

- In the reasoning, if you need to copy paste some sentences from the context, include them in ##begin_quote## and ##end_quote##. This would mean that things outside of ##begin_quote## and ##end_quote## are not directly copy paste from the context.

- End your response with final answer in the form <ANSWER>: $answer, the answer should be succinct.
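The sketch below shows one way to fill the `{query}` and `{docs}` placeholders of the template above and to pull the final answer out of a model response that follows its `<ANSWER>:` convention. The `build_prompt` and `extract_answer` helpers, and joining documents with newlines, are assumptions for illustration rather than RAG Foundry's own code.

```python
import re
from typing import List, Optional

# The template above, with its {query} and {docs} placeholders.
TEMPLATE = (
    "Question: {query}\n\n"
    "Context: {docs}\n\n"
    "Answer this question using the information given in the context above. "
    "Here is things to pay attention to:\n"
    "- First provide step-by-step reasoning on how to answer the question.\n"
    "- In the reasoning, if you need to copy paste some sentences from the context, "
    "include them in ##begin_quote## and ##end_quote##. This would mean that things "
    "outside of ##begin_quote## and ##end_quote## are not directly copy paste "
    "from the context.\n"
    "- End your response with final answer in the form <ANSWER>: $answer, "
    "the answer should be succinct."
)


def build_prompt(query: str, docs: List[str]) -> str:
    # Joining documents with newlines is an assumption, not a documented choice.
    return TEMPLATE.format(query=query, docs="\n".join(docs))


def extract_answer(response: str) -> Optional[str]:
    """Return the text after the last '<ANSWER>:' marker, or None if absent."""
    matches = re.findall(r"<ANSWER>:\s*(.+)", response)
    return matches[-1].strip() if matches else None


prompt = build_prompt("What is the capital of France?",
                      ["Paris is the capital of France."])
response = (
    "The context says ##begin_quote## Paris is the capital of France. "
    "##end_quote##\n<ANSWER>: Paris"
)
print(extract_answer(response))  # Paris
```

Taking the last `<ANSWER>:` match is a defensive choice: if the model echoes the instruction text in its reasoning, the final marker is still the one that counts.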

The team evaluated Exact Match (EM), accuracy and F1 scores.

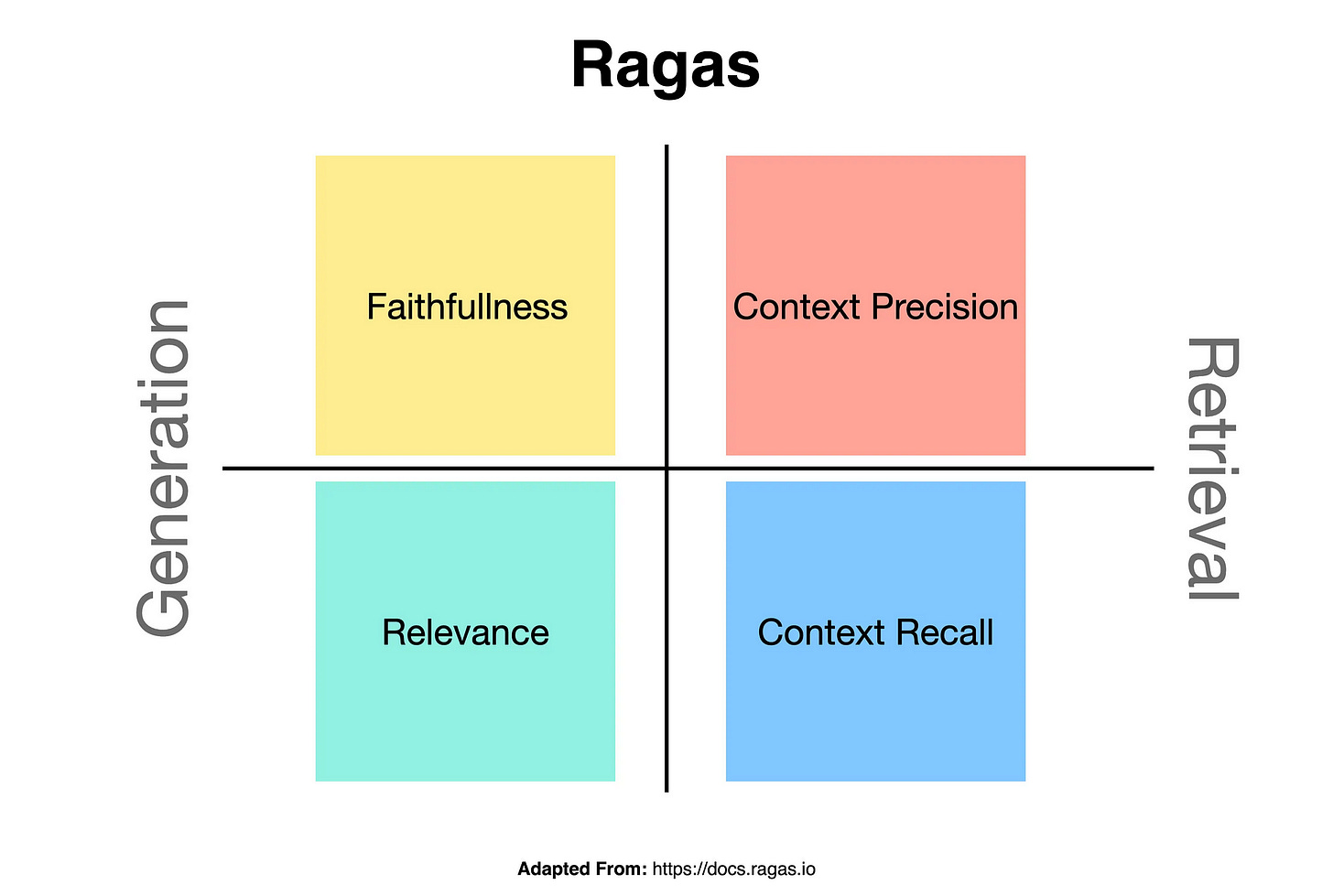
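Exact Match and F1 are commonly computed over normalised answer strings, in the style of the SQuAD evaluation script. The sketch below assumes that convention (lowercasing, stripping punctuation and the articles a/an/the, collapsing whitespace); RAG Foundry's exact normalisation may differ, and accuracy is left out because its definition varies by dataset.

```python
import re
import string
from collections import Counter

PUNCTUATION = set(string.punctuation)


def normalize(text: str) -> str:
    """SQuAD-style normalisation: lowercase, drop punctuation and articles, collapse spaces."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in PUNCTUATION)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(prediction: str, reference: str) -> float:
    # 1.0 only if the normalised strings are identical.
    return float(normalize(prediction) == normalize(reference))


def f1(prediction: str, reference: str) -> float:
    """Token-level F1: harmonic mean of token precision and recall."""
    pred = normalize(prediction).split()
    ref = normalize(reference).split()
    overlap = sum((Counter(pred) & Counter(ref)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred)
    recall = overlap / len(ref)
    return 2 * precision * recall / (precision + recall)


print(exact_match("The Eiffel Tower", "eiffel tower"))  # 1.0
print(round(f1("Paris, France", "Paris"), 3))           # 0.667
```

F1 gives partial credit where Exact Match gives none: "Paris, France" fails Exact Match against "Paris" but still scores 0.667.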

Additionally, they assessed two RAGAS metrics: Faithfulness, which measures the alignment between the generated text and the context, and Relevancy, which evaluates how well the generated text matches the query.

An embedder is required for the relevancy evaluation.
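RAGAS describes answer relevancy roughly as follows: an LLM generates questions from the generated answer, each one is embedded, and the score is the mean cosine similarity to the embedded original question. That is where the embedder comes in. The sketch below assumes the generated questions are already available and uses a toy bag-of-words `embed` function as a hypothetical stand-in for a real embedding model; it illustrates the scoring step only and is not the RAGAS implementation.

```python
import math
import re
from collections import Counter
from typing import List


def embed(text: str) -> Counter:
    """Hypothetical stand-in embedder: a bag-of-words count vector.
    A real evaluation would call a sentence-embedding model here."""
    return Counter(re.findall(r"\w+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def answer_relevancy(question: str, generated_questions: List[str]) -> float:
    """Mean cosine similarity between the original question and questions
    reverse-engineered from the answer (normally produced by an LLM)."""
    q = embed(question)
    sims = [cosine(q, embed(g)) for g in generated_questions]
    return sum(sims) / len(sims) if sims else 0.0


question = "What is the capital of France?"
generated = ["What is the capital city of France?",
             "Which city is the capital of France?"]
print(round(answer_relevancy(question, generated), 3))  # 0.849
```

An answer that drifts off-topic yields generated questions that differ from the original, which pulls the mean similarity down.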

In Conclusion

As I have mentioned before, it is interesting to see how new technology is unfolding and how builders are converging on the same implementation principles.

Intel's work on fine-tuning models with diverse RAG configurations forms part of the latest trend in data design, where data is designed in a granular fashion to closely mimic the task the model is intended for.

This development focusses not only on improving the RAG implementation and fine-tuning the models, but also on closing the feedback loop with a comprehensive evaluation process.

I’m currently the Chief Evangelist @ Kore AI. I explore & write about all things at the intersection of AI & language; ranging from LLMs, Chatbots, Voicebots, Development Frameworks, Data-Centric latent spaces & more.