RAG, Hallucination & Structure: Research By ServiceNow

Alongside catastrophic forgetting and model drift, hallucination remains a challenge.

Introduction

What makes this study interesting is that ServiceNow had a practical problem to solve, and the paper shares what they found.

The paper also considers the challenge of creating structured output from LLMs, which are geared towards unstructured conversational output.

To some degree, this approach is reminiscent of OpenAI’s JSON mode output and OpenAI’s function calling.

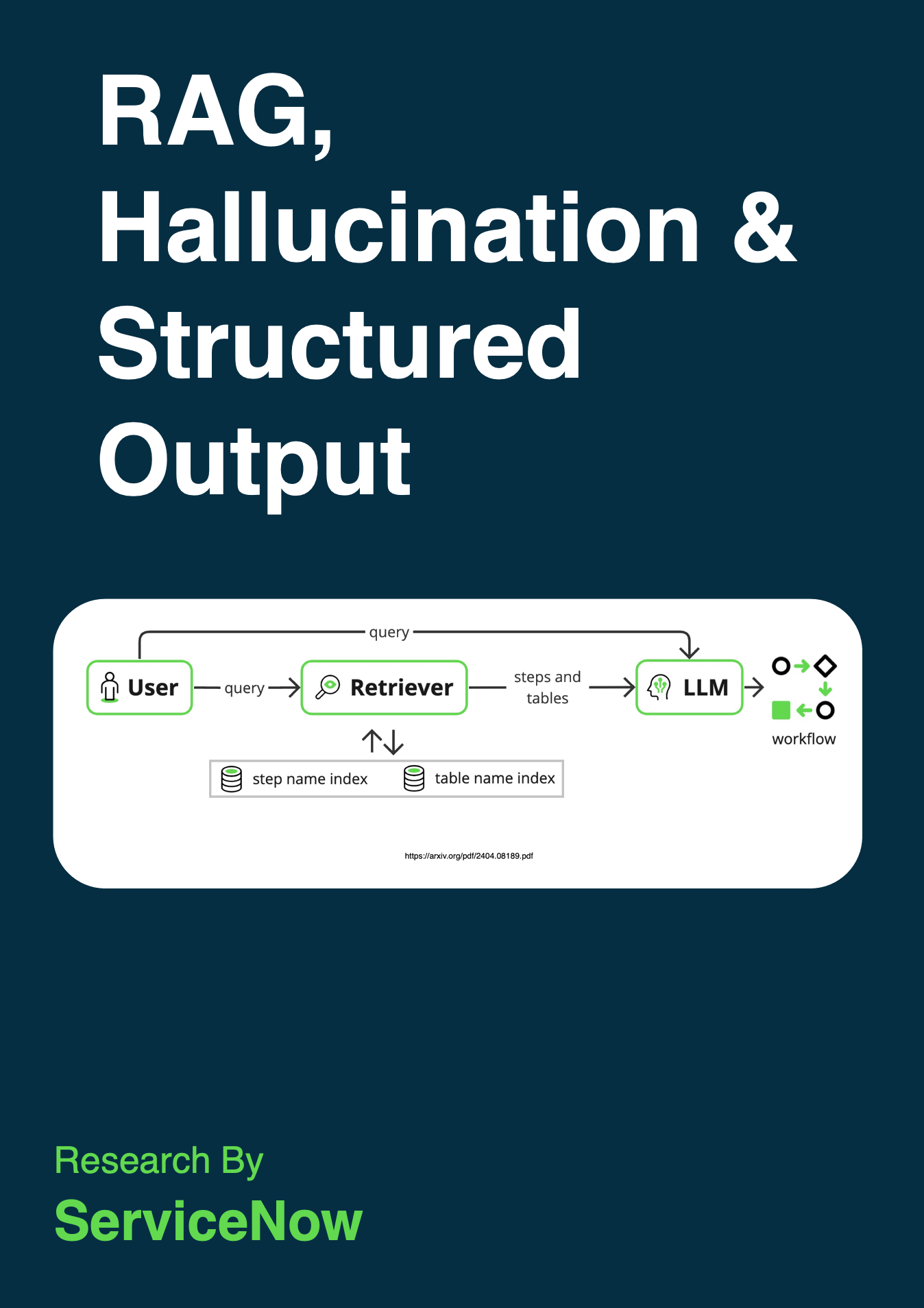

ServiceNow wants to deploy enterprise applications that convert user requirements expressed in natural language into workflows. They have devised a plan to improve the quality of the generated structured flows by making use of RAG.

This approach reduces hallucination and allows for generalisation to out-of-domain settings.

ServiceNow wants to create accurate workflows from natural language input, to simplify the user interface for creating workflows and to enable novice users.

While one could fine-tune the LLM per enterprise, this may be prohibitively expensive due to the high infrastructure costs of fine-tuning LLMs. Another consideration when deploying LLMs is their footprint, making it preferable to deploy the smallest LLM that can perform the task.

Cambridge Dictionary chose hallucinate as its Word of the Year in 2023.

As seen in the image below, output workflows are represented as JSON documents where each step is a JSON object.
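To make the format concrete, here is a minimal sketch of what such a workflow document could look like. The field names, step names and table names are illustrative assumptions, not the schema used in the paper.

```python
import json

# Hypothetical workflow document: every key and value here is an
# illustrative assumption, not ServiceNow's actual schema.
workflow = {
    "trigger": {"type": "record_created", "table": "incident"},
    "steps": [
        {"name": "look_up_record", "inputs": {"table": "sys_user"}},
        {"name": "send_email", "inputs": {"to": "assigned_user"}},
    ],
}


def step_names(doc):
    """Return the step names in the order they appear in the workflow."""
    return [step["name"] for step in doc["steps"]]


# Round-trip through JSON text, which is the form an LLM would emit.
serialized = json.dumps(workflow, indent=2)
restored = json.loads(serialized)
print(step_names(restored))
```

Because each step is its own JSON object, a generated workflow can be parsed and inspected step by step rather than treated as free text.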

The study demonstrates how RAG allows deploying a smaller LLM while using a very small retriever model, at no loss in performance.

RAG For Structure

What makes this study different is that RAG is leveraged to create structured output in the form of JSON.

However, the challenge with this implementation is that even though the input is open and highly specified via graphical user interface affordances, the output can only be drawn from a limited and finite pool of steps.
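Since the pool of valid steps is finite, one way to think about hallucination in this setting is as a generated step that does not exist in that pool. The sketch below assumes a hypothetical step catalogue and workflow shape; it is not the evaluation code from the paper.

```python
# Hypothetical catalogue of steps that exist in the target system.
ALLOWED_STEPS = {"look_up_record", "send_email", "create_task"}


def find_unknown_steps(workflow, allowed_steps):
    """Return names of generated steps that are not in the allowed pool."""
    return [
        step["name"]
        for step in workflow["steps"]
        if step["name"] not in allowed_steps
    ]


# A made-up model output containing one step that does not exist.
generated = {
    "steps": [
        {"name": "look_up_record"},
        {"name": "notify_manager_by_fax"},
    ]
}

unknown = find_unknown_steps(generated, ALLOWED_STEPS)
print(unknown)
```

A check like this only catches invented step names; it says nothing about whether the valid steps are the right ones for the user's requirement.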

In creating this workflow, ServiceNow first had to train a retriever encoder to align natural language with JSON objects.

Secondly, they trained an LLM in a RAG fashion by including the retriever’s output in its prompt.

Hence there is a requirement that the retriever map natural language to existing step and database table names.
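The retrieve-then-prompt flow can be sketched as follows. Note the substitution: the paper trains a retriever encoder, whereas this toy version ranks catalogue entries by simple word overlap so that it runs without any model. The catalogue entries, function names and prompt wording are all assumptions for illustration.

```python
import re

# Hypothetical catalogue of existing steps with short descriptions.
CATALOG = [
    "look_up_record: find a record in a table",
    "send_email: send an email message to a user",
    "create_task: create a new task record",
]


def tokenize(text):
    """Lowercase the text and return its set of alphabetic words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def retrieve(query, catalog, k=2):
    """Rank entries by word overlap with the query (stand-in for a trained encoder)."""
    query_words = tokenize(query)
    ranked = sorted(
        catalog,
        key=lambda entry: len(query_words & tokenize(entry)),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(query, catalog, k=2):
    """Place the retrieved steps in the prompt ahead of the user requirement."""
    lines = ["Use only these steps:"]
    lines += [f"- {entry}" for entry in retrieve(query, catalog, k)]
    lines += ["", f"Requirement: {query}", "Workflow JSON:"]
    return "\n".join(lines)


prompt = build_prompt("Send an email to the user when a task is created", CATALOG)
print(prompt)
```

The point of the design is that the LLM sees only names that actually exist, which narrows the room for inventing steps or tables.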

The study focusses on fine-tuning a retriever model for two reasons: to improve the mapping between text and JSON objects, and to create a better representation of the domain of the application.

Considerations

A number of considerations were raised for future work:

Changing the structured output format from JSON to YAML to reduce the number of tokens.

Leveraging speculative decoding.

Streaming one step at a time back to the user instead of the entire generated workflow. This is in keeping with recent agentic developments from LlamaIndex, where agents take a step-wise approach. A human-in-the-loop (HITL) approach has many advantages, and in the agent context the human can be considered an agent tool alongside other tools.
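The JSON-to-YAML idea from the list above can be illustrated with a rough size comparison. This is a sketch under assumptions: the steps are made up, the YAML emitter is a hand-written helper that only handles a list of flat dictionaries with plain string values, and character count is used as a crude proxy for token count. Real savings depend on the tokenizer.

```python
import json

# Hypothetical flat steps; names and fields are illustrative only.
steps = [
    {"name": "look_up_record", "table": "sys_user"},
    {"name": "send_email", "to": "assigned_user"},
]


def to_yaml(items):
    """Emit a YAML list of flat mappings (plain string values only)."""
    lines = []
    for item in items:
        for index, (key, value) in enumerate(item.items()):
            # The first key of each mapping carries the list marker.
            prefix = "- " if index == 0 else "  "
            lines.append(f"{prefix}{key}: {value}")
    return "\n".join(lines)


as_json = json.dumps(steps)
as_yaml = to_yaml(steps)
print(len(as_json), len(as_yaml))
print(as_yaml)
```

YAML drops the quotes, braces and commas that JSON requires, which is where the reduction comes from.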

Finally

The study proposes a strategy for using Retrieval-Augmented Generation (RAG) to address two key challenges in AI:

Reducing hallucination (the generation of incorrect or irrelevant information) and

Enabling generalisation (the ability to apply knowledge to new situations) in structured output tasks.

The study emphasises the importance of reducing hallucination for real-world AI systems to gain acceptance among users.

The authors highlight that the RAG approach allows for deploying AI systems in resource-constrained environments, because it can work effectively with even a small retriever and a compact language model.

This implies that the system’s hardware and computational requirements can be minimised, which is crucial for practical applications in settings where resources are limited.

Additionally, the study points out areas for future research, suggesting that further improvements can be made by enhancing the collaboration between the Retriever and the Language Model.

This could be achieved through joint training methods, where both components are trained together to improve their interaction, or by designing a model architecture that facilitates better integration and cooperation between the two components.

I’m currently the Chief Evangelist @ Kore AI. I explore & write about all things at the intersection of AI & language; ranging from LLMs, Chatbots, Voicebots, Development Frameworks, Data-Centric latent spaces & more.