RAG Implementations Fail Due To Insufficient Focus On Question Intent

The Mindful-RAG approach is a framework tailored for intent-based and contextually aligned knowledge retrieval.

It addresses shortcomings identified in KG-based RAG systems and aims to improve the accuracy and relevance of LLM responses, which the study presents as a notable advancement over current methodologies.

Introduction

Large Language Models (LLMs) are good at generating coherent and contextually relevant text but struggle with knowledge-intensive queries, especially in domain-specific and factual question-answering tasks.

Retrieval-augmented generation (RAG) systems address this challenge by integrating external knowledge sources like structured knowledge graphs (KGs).

Despite access to KG-extracted information, LLMs often fail to deliver accurate answers.

A recent study examines this issue by analysing error patterns in KG-based RAG methods, identifying eight critical failure points.

Intents

The study found that these errors stem largely from an inadequate understanding of the question’s intent, which in turn leads to insufficient context extraction from knowledge graph facts.

Based on this analysis, the study proposes Mindful-RAG, a framework focused on intent-based and contextually aligned knowledge retrieval.

This approach aims to enhance the accuracy and relevance of LLM responses, marking a significant advancement over current methods.

Eight Failure Points Leading To Incorrect Responses

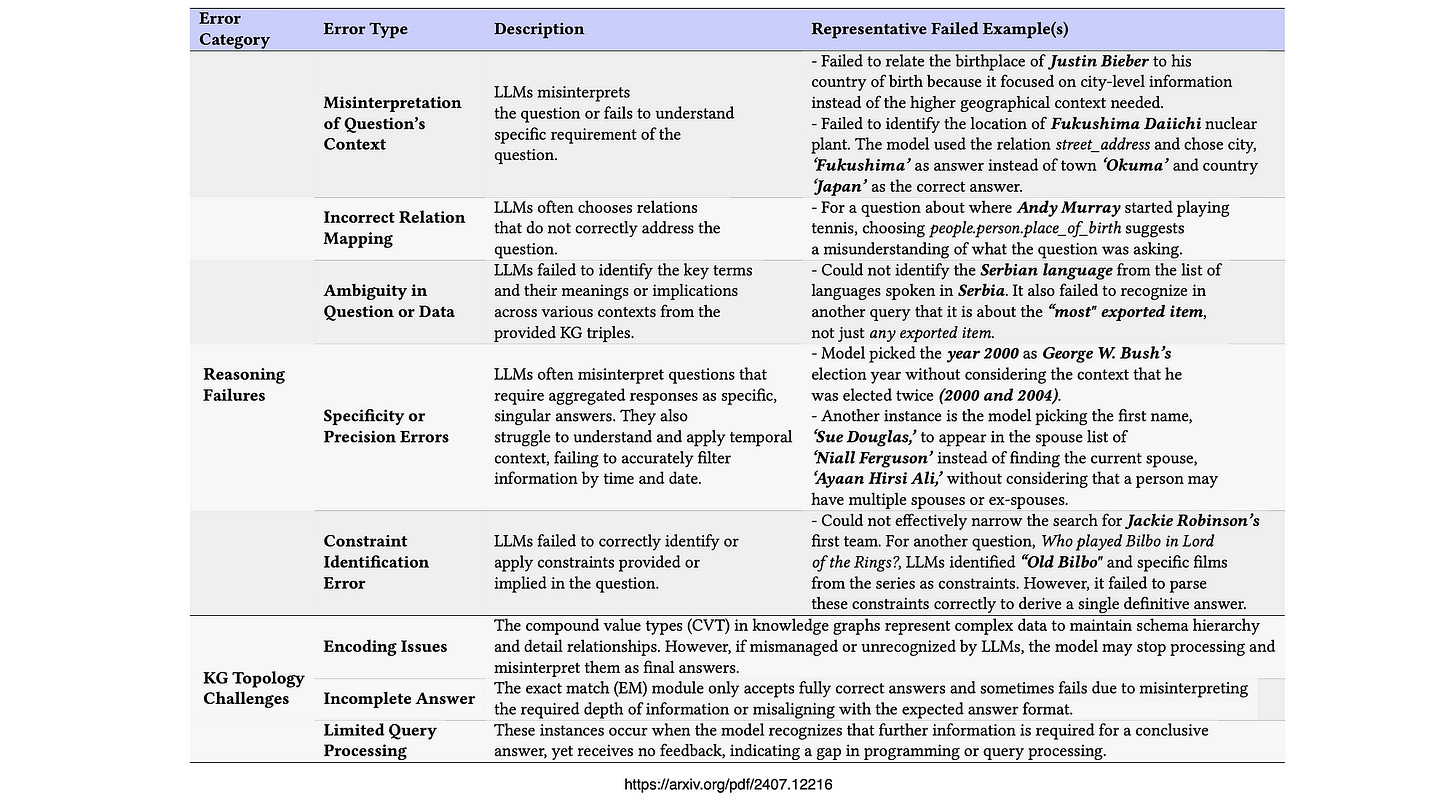

The diagram above shows the two error categories: Reasoning Failures and Knowledge Graph data topology challenges.

The error types are listed, with a description and failure examples…

Mindful-RAG

Mindful-RAG is aimed at addressing two critical gaps:

The identification of question intent, and

The alignment of context with available knowledge.

This method employs a strategic hybrid approach that integrates the model’s intrinsic parametric knowledge with non-parametric external knowledge from a knowledge graph (KG).
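To make the idea of intent-based retrieval more concrete, here is a minimal sketch of my own, not the implementation from the study. It assumes a toy KG stored as triples, a hypothetical `INTENT_RELATIONS` mapping from intent to relevant relations, and a keyword rule in `identify_intent` that stands in for what would really be an LLM call. All names and data are illustrative.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Triple:
    subject: str
    relation: str
    obj: str


# Hypothetical mapping: which KG relations are relevant to which intent.
INTENT_RELATIONS = {
    "location": {"located_in"},
    "person": {"founded_by"},
}


def identify_intent(question: str) -> str:
    """Stand-in for an LLM intent step (assumption): a simple keyword rule."""
    q = question.lower()
    if q.startswith("where"):
        return "location"
    if q.startswith("who"):
        return "person"
    return "unknown"


def retrieve_for_intent(question: str, entity: str, triples: List[Triple]) -> List[Triple]:
    """Keep only facts about the entity whose relation matches the intent."""
    allowed = INTENT_RELATIONS.get(identify_intent(question), set())
    return [t for t in triples if t.subject == entity and t.relation in allowed]


# Toy knowledge graph with made-up facts.
TOY_KG = [
    Triple("Acme", "located_in", "Berlin"),
    Triple("Acme", "founded_by", "Jane Doe"),
    Triple("Acme", "founded_in", "1999"),
]

print(retrieve_for_intent("Who founded Acme?", "Acme", TOY_KG))
```

The point of the sketch is that the intent decides which facts reach the LLM, so a "who" question is not answered from a date or a location that merely shares the same entity.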

The following steps offer a comprehensive overview of the design and methodology, supplemented by an illustrative example.

Knowledge Graphs & Vectors

The study states that integrating vector-based search techniques with KG-based sub-graph retrieval has the potential to enhance performance significantly.
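As a rough illustration of what combining the two could look like, the sketch below first pulls the sub-graph around an entity and then ranks those facts by similarity to the question. This is my own toy example under stated assumptions: the bag-of-words `embed` function is a stand-in for a real embedding model, and the triples, function names and `top_k` parameter are all hypothetical.

```python
import math
import re
from collections import Counter
from typing import List, Tuple

Fact = Tuple[str, str, str]  # (subject, relation, object)


def embed(text: str) -> Counter:
    """Toy bag-of-words vector; a real system would use an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[k] * b[k] for k in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def subgraph(triples: List[Fact], entity: str) -> List[Fact]:
    """KG step: keep only facts directly connected to the entity (1 hop)."""
    return [t for t in triples if entity in (t[0], t[2])]


def rank_facts(question: str, entity: str, triples: List[Fact], top_k: int = 2) -> List[Fact]:
    """Vector step: order the sub-graph's facts by similarity to the question."""
    q_vec = embed(question)
    scored = [(cosine(q_vec, embed(" ".join(t))), t) for t in subgraph(triples, entity)]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [t for _, t in scored[:top_k]]


# Made-up facts for illustration only.
FACTS = [
    ("Acme", "headquarters city", "Berlin"),
    ("Acme", "founded by", "Jane Doe"),
    ("Globex", "headquarters city", "Paris"),
]

print(rank_facts("In which city is the Acme headquarters?", "Acme", FACTS, top_k=1))
```

The sub-graph step keeps retrieval anchored to the right entity, while the similarity step orders the remaining facts by how closely they match the wording of the question.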

These advancements in intent identification and context alignment indicate promising research avenues that could greatly enhance LLMs’ performance in knowledge-intensive question answering tasks across various domains.

In Conclusion

The study is more focussed on Knowledge Graph (KG) implementations of RAG as opposed to vector databases.

I’m not an expert on which of the two approaches is best, so any feedback will be welcomed.

The Mindful-RAG approach does seem more KG orientated, with more structured data ontologies and data relationships forming part of the implementation, and with the KG required to take advantage of complex data relationships.

This approach is still informative in terms of how Natural Language Processing (NLP) can be used upstream in the RAG process.

The loopback step, where the final answer is verified by an LLM check, is highly beneficial.

Additionally, the use of knowledge graphs (KGs) significantly aids in complex question answering and tracing interrelationships.
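The loopback verification mentioned above can be sketched as a small generate-and-check loop. This is only my own illustration under stated assumptions: `generate` and `verify` are hypothetical callables that would each wrap an LLM call in practice, the stubs below fake them with fixed rules, and retrying with feedback is my assumption about how a failed check could be handled.

```python
from typing import Callable, Optional, Tuple

Generator = Callable[[str, str], str]             # (question, feedback) -> answer
Verifier = Callable[[str, str], Tuple[bool, str]]  # (question, answer) -> (ok, feedback)


def answer_with_loopback(
    question: str,
    generate: Generator,
    verify: Verifier,
    max_attempts: int = 3,
) -> Optional[str]:
    """Return the first candidate answer that passes the check, else None."""
    feedback = ""
    for _ in range(max_attempts):
        candidate = generate(question, feedback)
        ok, feedback = verify(question, candidate)
        if ok:
            return candidate
    return None


def stub_generate(question: str, feedback: str) -> str:
    # Stub (assumption): gives a wrong-typed answer until it receives feedback.
    return "Berlin" if feedback else "1999"


def stub_verify(question: str, candidate: str) -> Tuple[bool, str]:
    # Stub (assumption): a "where" question should not be answered with a number.
    if question.lower().startswith("where") and candidate.isdigit():
        return False, "The question asks for a place, not a year."
    return True, ""


print(answer_with_loopback("Where is Acme based?", stub_generate, stub_verify))
```

Keeping the verifier separate from the generator means the check can look at whether the answer fits the intent of the question, rather than only whether it is fluent.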

I’m currently the Chief Evangelist @ Kore AI. I explore & write about all things at the intersection of AI & language; ranging from LLMs, Chatbots, Voicebots, Development Frameworks, Data-Centric latent spaces & more.