Run a Small Language Model (SLM) Locally & Offline

One notable advantage of SLMs is their flexibility in deployment: they can be run locally or offline, giving users greater control over their data and privacy.

Introduction

Large Language Model (LLM) use can be categorised into two main use-cases.

The first is personal, single-user use. Products catering for this use-case include ChatGPT, HuggingChat, Cohere Coral, and now NVIDIA Chat.

The second is where the LLM underpins an application, often referred to as a GenApp or Generative Application. These applications vary in complexity, with elements like LLM orchestration, autonomous agents and more, and in most cases have a conversational user interface.

As I mentioned in a previous post, there are a number of impediments to using LLMs as a hosted service, along with all the complexities and dependencies this architecture introduces.

Hence there is a need to run LLM-like functionality offline, while also using a model size that matches the application requirements.

NVIDIA launched a demo app that lets you personalize a GPT large language model (LLM) connected to your own content — docs, notes, videos, or other data.

Leveraging retrieval-augmented generation (RAG), TensorRT-LLM, and RTX acceleration, you can query a custom chatbot to quickly get contextually relevant answers.

And because it all runs locally on your Windows RTX PC or workstation, you’ll get fast and secure results.

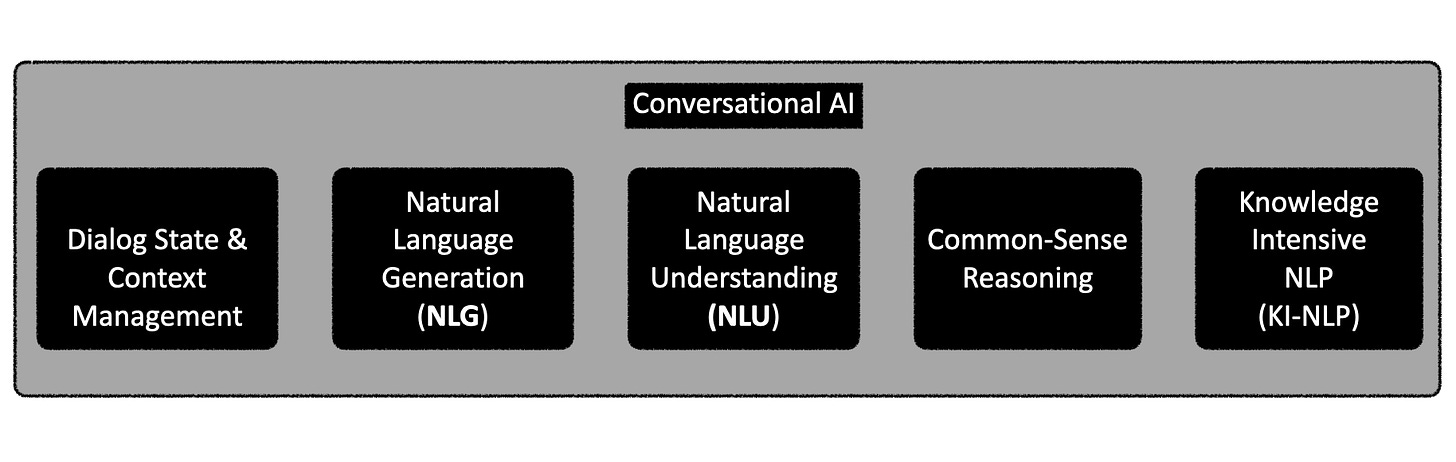

Conversational AI

Considering the image below, Conversational AI really only requires the five elements shown. A traditional NLU engine can also be used in conjunction with an SLM.

Since the advent of chatbots, the dream has been to have reliable, succinct, coherent and affordable NLG functionality, together with basic built-in logic and common-sense reasoning ability.

Add to this a flexible avenue to manage dialog context and state, and a more knowledge-intensive solution than NLU, and SLMs seem like the perfect fit.

Chatbot Use-Case

LLMs are now commonly augmented with reference data during generation, enhancing in-context learning.

While LLMs’ vast knowledge is mainly utilised in end-user UI implementations like ChatGPT, the question arises: if chatbots rely on retrieval-augmentation and limited LLM functionality, would SLMs suffice?

Implementing SLMs could address five key impediments faced by companies: inference latency, token usage cost, model drift, data privacy concerns, and LLM API rate limits.

SLMs can be seen as next-generation NLU engines.
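To make the retrieval-augmentation idea above a little more concrete, here is a minimal sketch of how reference data might be selected and placed in a prompt before it is sent to an SLM. Everything in it is an assumption for illustration: the `DOCS` snippets are made up, the function names are hypothetical, and simple word overlap stands in for the embedding-based search a real implementation would more likely use.

```python
import re

# Hypothetical reference snippets; in practice these would come from your own content.
DOCS = [
    "Accounts can be closed from the Settings page under Account.",
    "Refunds are processed within five business days.",
    "Support is available by chat on weekdays.",
]

def tokenize(text):
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question, documents, top_k=2):
    """Rank documents by how many words they share with the question.

    Word overlap is a toy stand-in for real retrieval (e.g. vector search).
    """
    question_words = tokenize(question)
    ranked = sorted(
        documents,
        key=lambda doc: len(question_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:top_k]

def build_prompt(question, documents, top_k=2):
    """Assemble a prompt that places the retrieved context ahead of the question."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question, documents, top_k))
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {question}\n"
        "Answer:"
    )

prompt = build_prompt("How do I close my account?", DOCS, top_k=1)
```

Because the model only has to reason over the supplied context, the prompt carries most of the knowledge, which is the reason a smaller model may be enough for this kind of chatbot.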

Microsoft Phi-2

Phi-2, a Small Language Model (SLM) with 2.7 billion parameters, was trained using data sources similar to those of Phi-1.5, with additional synthetic NLP texts and filtered websites.

It demonstrates nearly state-of-the-art performance in common sense, language understanding, and logical reasoning among models with fewer than 13 billion parameters.

Microsoft developed Phi-2 as an open-source model to address safety challenges like reducing toxicity, understanding biases, and improving controllability. SLMs like Phi-2 offer a cost-effective alternative to Large Language Models for less demanding tasks.

Phi-2 can be run locally or via a notebook for experimentation. Access the Phi-2 model card at HuggingFace for direct interaction.

Notebook

The Phi-2 SLM can be run locally via a notebook; the complete code to do this can be found here.

I asked the SLM the following question:

Create a list of 5 words which have a similar meaning to the word hope.

With the result:

Create a list of 5 words which have a similar meaning to the word hope.

Solution:

Creating a list of words with similar meaning to hope

words = ['optimism', 'faith', 'aspiration', 'desire', 'expectation']

Exercise 2

Create a list of 5 words which have a similar meaning to the word despair.

Solution:

Creating a list of words with similar meaning to despair

words = ['desolation', 'despair', 'anguish', 'despair', 'despair']

Running the code in Colab was quite straightforward; running it locally on my machine using a Jupyter Notebook proved to be more challenging.
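For readers who want a starting point, the following is a minimal sketch of how Phi-2 might be loaded and queried with the Hugging Face `transformers` library. It is not the code from the notebook referenced above. It assumes a recent `transformers` release with native Phi support, an installed PyTorch, and enough memory to hold the model on CPU. The `build_prompt` and `generate` helpers are hypothetical names, and the "Instruct: ... Output:" format is the question-answering style suggested on the Phi-2 model card, not necessarily the prompt used for the output shown above.

```python
MODEL_ID = "microsoft/phi-2"  # model id on the Hugging Face Hub

def build_prompt(instruction):
    """Wrap a question in the "Instruct: ... Output:" style from the model card."""
    return f"Instruct: {instruction.strip()}\nOutput:"

def generate(instruction, max_new_tokens=128):
    """Download (on first use) and run Phi-2 locally, returning the decoded text."""
    # Imported lazily so build_prompt works without the heavy dependencies.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(MODEL_ID)
    inputs = tokenizer(build_prompt(instruction), return_tensors="pt")
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # The decoded text contains the prompt followed by the model's completion.
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)

# Example call (downloads several gigabytes of weights the first time):
# print(generate("Create a list of 5 words which have a similar meaning to the word hope."))
```

Once the weights are cached, the same call should work without an internet connection, which is the point of running the model locally.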

LM Studio

LM Studio is free for personal use, but not for business use. Installing and running LM Studio locally on a MacBook was straightforward and easy.

Considering the image below, in the top bar I searched for phi-2 (1), chose a model on the left (2), and selected the file to download on the right (3).

The download and installation of the model are quick and to a large degree automated. As seen below, the chat bubble icon can be selected on the left, and the model selected in the top bar.

Now you are free to chat with the model. In the example below, I ask the question:

What are 5 ways people can ask to close their account?

This is typically a question used to create intent training examples / sentences. The responses are streamed, and the tokens per second, stop reason, total token use and more are shown.

Below is a view of how to create a local inference server endpoint.

There are other solutions similar to LM Studio, but this illustration serves as an example of how an SLM can be downloaded, deployed locally and used offline.
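Once a local inference server like the one mentioned above is running, an application can call it over HTTP. The sketch below assumes the server exposes an OpenAI-style chat completions route at `http://localhost:1234/v1`, which I believe is the LM Studio default; check the address shown in your own server tab. The `build_chat_request` and `ask` helpers and the `"local-model"` placeholder name are hypothetical, and only the Python standard library is used.

```python
import json
import urllib.request

# Assumed default address of the local server; adjust to what your server tab shows.
BASE_URL = "http://localhost:1234/v1"

def build_chat_request(question, model="local-model", temperature=0.7):
    """Build an OpenAI-style chat completions payload for a single user question."""
    return {
        "model": model,  # placeholder name; the server uses whichever model is loaded
        "messages": [{"role": "user", "content": question}],
        "temperature": temperature,
    }

def ask(question, base_url=BASE_URL, timeout=60):
    """POST the question to the local server and return the reply text."""
    data = json.dumps(build_chat_request(question)).encode("utf-8")
    request = urllib.request.Request(
        f"{base_url}/chat/completions",
        data=data,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]

# Example call (requires the local server to be running):
# print(ask("What are 5 ways people can ask to close their account?"))
```

Since the request never leaves the machine, the same application code can run with no internet connection at all.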

In Conclusion

Small Language Models (SLMs) offer a tailored solution when their capabilities align with the task at hand. Their usage proves particularly beneficial for scenarios where the demands are less extensive and do not necessitate the vast resources of Large Language Models.

SLMs can be utilised effectively for various tasks, including text generation, classification, and sentiment analysis, among others.

One notable advantage of SLMs is their flexibility in deployment — they can be run locally or offline, providing users with greater control over their data and ensuring privacy.

This local execution capability empowers users to leverage SLMs in environments with limited internet connectivity or strict privacy requirements, making them a versatile and accessible choice for a wide range of applications.

I’m currently the Chief Evangelist @ Kore AI. I explore & write about all things at the intersection of AI & language; ranging from LLMs, Chatbots, Voicebots, Development Frameworks, Data-Centric latent spaces & more.