Small Language Models Supporting Large Language Models

Balancing Latency, Interpretability, and Consistency in Hallucination Detection for Conversational AI

Introduction

As I have mentioned before, large language models (LLMs) struggle with latency in real-time, synchronous tasks such as conversational UIs.

Adding further overhead, such as checking for hallucinations, only exacerbates the problem.

To address this, Microsoft Research proposed a framework that uses a Small Language Model (SLM) as an initial detector, with an LLM serving as a constrained reasoner that generates detailed explanations for any detected hallucinations.

The aim is optimised, real-time, interpretable hallucination detection, achieved by introducing prompting techniques that align LLM-generated explanations with SLM decisions.

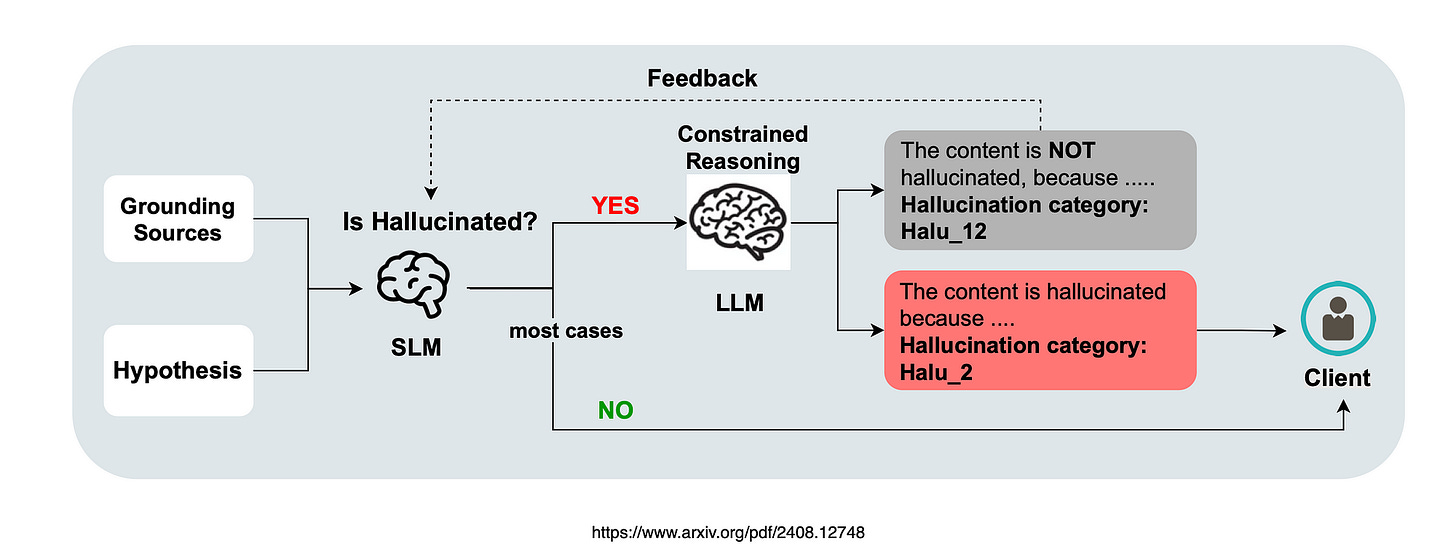

The image above illustrates hallucination detection with an LLM as a constrained reasoner. The flow works as follows:

Initial Detection: Grounding sources and hypothesis pairs are input into a small language model (SLM) classifier.

No Hallucination: If no hallucination is detected, the “no hallucination” result is sent directly to the client.

Hallucination Detected: If the SLM detects a hallucination, an LLM-based constrained reasoner steps in to interpret the SLM’s decision.

Alignment Check: If the reasoner agrees with the SLM’s hallucination detection, this information, along with the original hypothesis, is sent to the client.

Discrepancy: If there’s a disagreement, the potentially problematic hypothesis is either filtered out or used as feedback to improve the SLM.
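The routing described in these steps can be sketched in a few lines of Python. This is only an illustration of the control flow, not the study's implementation: `check`, `Result`, `toy_slm` and `toy_reasoner` are hypothetical names, and the two toy functions are simple word-overlap stand-ins for a real SLM classifier and a real LLM-based reasoner.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Tuple

# Hypothetical interfaces: a classifier returns True when it flags a
# hallucination; a reasoner returns (agrees_with_slm, explanation).
Classifier = Callable[[str, str], bool]
Reasoner = Callable[[str, str], Tuple[bool, str]]


@dataclass
class Result:
    hypothesis: str
    slm_flagged: bool
    reasoner_agrees: Optional[bool] = None  # None when the LLM was never called
    explanation: Optional[str] = None

    @property
    def route(self) -> str:
        if not self.slm_flagged:
            return "no_hallucination"  # sent directly to the client
        if self.reasoner_agrees:
            return "hallucination"     # sent to the client with an explanation
        return "discrepancy"           # filtered out or kept as SLM feedback


def check(source: str, hypothesis: str, slm: Classifier, reasoner: Reasoner) -> Result:
    # Step 1: the cheap SLM runs on every grounding source / hypothesis pair.
    if not slm(source, hypothesis):
        return Result(hypothesis, slm_flagged=False)
    # Step 2: the slower LLM reasoner runs only on flagged pairs.
    agrees, explanation = reasoner(source, hypothesis)
    return Result(hypothesis, True, agrees, explanation if agrees else None)


# Toy stand-ins (assumptions for illustration only, not real models).
def toy_slm(source: str, hypothesis: str) -> bool:
    return not set(hypothesis.lower().split()) <= set(source.lower().split())


def toy_reasoner(source: str, hypothesis: str) -> Tuple[bool, str]:
    missing = sorted(set(hypothesis.lower().split()) - set(source.lower().split()))
    if len(missing) > 1:
        return True, "Unsupported terms: " + ", ".join(missing)
    return False, ""


source = "the store opens at nine"
for hyp in ["the store opens at nine",
            "the store opens at midnight daily",
            "the store opens at ten"]:
    print(hyp, "->", check(source, hyp, toy_slm, toy_reasoner).route)
```

The point of the structure is that the expensive reasoner sits behind the SLM's decision, so unflagged responses never pay for an LLM call.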

More On Microsoft’s Approach

Because hallucinations occur infrequently in practical use, the LLM is only invoked for a small share of responses, so the average time and cost of using LLMs for reasoning on hallucinated texts remain manageable.
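To make the latency argument concrete, here is a back-of-the-envelope sketch. It assumes the SLM runs on every response and the LLM runs only on the fraction the SLM flags, so the average latency is the SLM time plus the flag rate times the LLM time. The function name and all the numbers are hypothetical and are not taken from the study.

```python
def expected_latency_ms(slm_ms: float, llm_ms: float, flag_rate: float) -> float:
    """Average per-response latency when the LLM runs only on SLM-flagged items.

    flag_rate is the share of responses the SLM flags (true hallucinations
    plus any false positives), expressed as a value between 0 and 1.
    """
    if not 0.0 <= flag_rate <= 1.0:
        raise ValueError("flag_rate must be between 0 and 1")
    return slm_ms + flag_rate * llm_ms


# Illustrative numbers only: a 50 ms SLM, a 2000 ms LLM call, 5% of responses flagged.
average = expected_latency_ms(slm_ms=50, llm_ms=2000, flag_rate=0.05)
always_llm = expected_latency_ms(slm_ms=50, llm_ms=2000, flag_rate=1.0)
print(f"gated: {average:.0f} ms, LLM on every response: {always_llm:.0f} ms")
```

With these made-up figures the gated setup averages roughly 150 ms per response, against roughly 2050 ms if the LLM reasoned over everything. The flagged responses themselves still incur the full LLM delay.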

This approach leverages the existing reasoning and explanation capabilities of LLMs, eliminating the need for substantial domain-specific data and costly fine-tuning.

While LLMs have traditionally been used as end-to-end solutions, recent approaches have explored their ability to explain small classifiers through latent features.

“We propose a novel workflow to address this challenge by balancing latency and interpretability.” ~ Source

SLM & LLM Agreement

One challenge of this implementation is the possible delta between the SLM’s decisions and the LLM’s explanations…

This work introduces a constrained reasoner for hallucination detection, balancing latency and interpretability.

Provides a comprehensive analysis of upstream-downstream consistency.

Offers practical solutions to improve alignment between detection and explanation.

Demonstrates effectiveness on multiple open-source datasets.
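One simple way to keep an eye on the delta between SLM decisions and LLM explanations is to track how often the reasoner agrees with the SLM on flagged items. The sketch below is an assumption about how that could be measured, not the metric used in the study, and `consistency_report` is a hypothetical helper.

```python
from typing import List, Sequence, Tuple


def consistency_report(flagged: Sequence[Tuple[str, bool]]) -> Tuple[float, List[str]]:
    """Summarise agreement on SLM-flagged items.

    flagged holds (hypothesis, reasoner_agrees) pairs, one per item the SLM
    flagged. Returns the agreement rate and the hypotheses on which the
    reasoner disagreed, which can be filtered out or queued as SLM feedback.
    """
    if not flagged:
        return 1.0, []  # nothing flagged, so there is nothing to disagree about
    disagreements = [hyp for hyp, agrees in flagged if not agrees]
    rate = 1.0 - len(disagreements) / len(flagged)
    return rate, disagreements


# Made-up example data: four flagged hypotheses, one disagreement.
rate, review_queue = consistency_report([
    ("The refund takes 3 days.", True),
    ("The store opens at ten.", False),
    ("Shipping is free worldwide.", True),
    ("The warranty lasts ten years.", True),
])
print(f"agreement rate: {rate:.2f}, to review: {review_queue}")
```

A falling agreement rate would suggest that either the SLM is over-flagging or the reasoner's prompt needs tightening, and the review queue gives concrete cases to inspect.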

In Conclusion

If you find any of my observations to be inaccurate, please feel free to let me know…🙂

I appreciate that this study focuses on introducing guardrails & checks for conversational UIs.

When interacting with real users, incorporating a human-in-the-loop approach helps with data annotation and continuous improvement by reviewing conversations.

It also adds an element of discovery, observation and interpretation, providing insights into the effectiveness of hallucination detection.

The architecture presented in this study offers a glimpse into the future, showcasing a more orchestrated approach where multiple models work together.

The study also addresses current challenges like cost, latency, and the need to critically evaluate any additional overhead.

Using small language models is advantageous as it allows for the use of open-source models, which reduces costs, offers hosting flexibility, and provides other benefits.

Additionally, this architecture can be applied asynchronously, where the framework reviews conversations after they occur. These human-supervised reviews can then be used to fine-tune the SLM or perform system updates.

I’m currently the Chief Evangelist @ Kore.ai. I explore & write about all things at the intersection of AI & language; ranging from LLMs, Chatbots, Voicebots, Development Frameworks, Data-Centric latent spaces & more.