SmolAgents

SmolAgents from HuggingFace

Hugging Face launched SmolAgents with a clear mission: to democratise the creation of sophisticated agentic systems by prioritising simplicity and reducing technical overhead.

Unlike more complex frameworks, which often involve intricate architectures, SmolAgents intentionally strips away unnecessary layers of abstraction.

The library emphasises lightweight efficiency without sacrificing capability.

HuggingFace’s vision with SmolAgents is to lower the barrier to entry for AI-driven automation, empowering users to focus on functionality rather than wrestling with bloated frameworks.

I don’t consider myself a particularly technical person, but to get my head around SmolAgents from HuggingFace, I knew I had to build a few prototypes, or at least get a SmolAgent running.

HuggingFace has a number of exceptional resources, which I list in the footer of this post. However, in this article I want to walk through a simple step-by-step process for setting up a SmolAgent.

Use AI Agents when tasks are complex, unpredictable, or require dynamic decision-making.

ReAct (Reason & Act)

You might have seen that AI Agents follow a routine of reasoning and acting; this routine is followed until a final conclusion is reached.

This is based on a framework called ReAct, which was first published on 6 October 2022.

ReAct is a framework that combines reasoning and acting to enable AI models to solve complex multi-step tasks more effectively.

ReAct allows a language model to generate both internal reasoning traces (thoughts) and external actions (like querying a database or API) in an interleaved manner.

By alternating between reasoning and acting, the model can dynamically adapt its behaviour based on new information gathered from the environment.

This approach improves the model’s ability to handle complex, multi-step tasks that require both logical thinking and real-world interaction.

ReAct demonstrates strong performance in tasks like question-answering, decision-making, and problem-solving by leveraging this reasoning-action loop.

Below is a simple breakdown of how a ReAct AI Agent works…

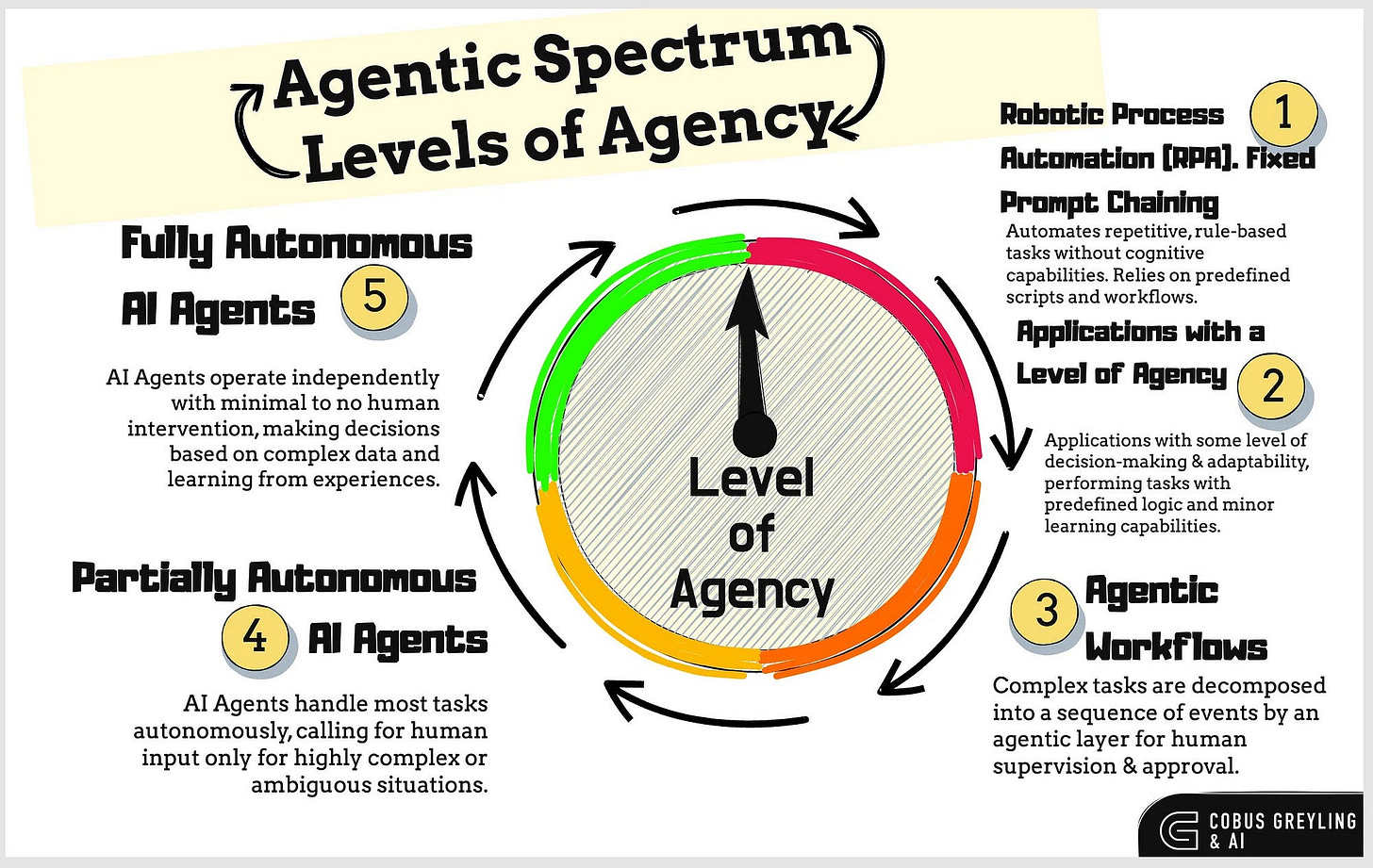
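The reason-and-act routine can also be sketched in a few lines of Python. This is a minimal illustration, not the implementation from the ReAct paper or from SmolAgents: the `scripted_llm` function stands in for a real model, and the `Thought:` / `Action:` / `Final Answer:` text format, the `lookup` tool and its data are all assumptions made for the example.

```python
import re

# Hypothetical tool: a tiny lookup table standing in for a database or API.
FACTS = {"capital of France": "Paris"}

def lookup(query: str) -> str:
    return FACTS.get(query, "unknown")

TOOLS = {"lookup": lookup}

def scripted_llm(transcript: str) -> str:
    """Stand-in for a language model: reasons, acts once, then answers."""
    if "Observation:" not in transcript:
        return "Thought: I need to look this up.\nAction: lookup[capital of France]"
    observation = transcript.rsplit("Observation: ", 1)[1].strip()
    return f"Thought: I now know the answer.\nFinal Answer: {observation}"

def react(question: str, max_steps: int = 5) -> str:
    transcript = f"Question: {question}"
    for _ in range(max_steps):
        reply = scripted_llm(transcript)          # reasoning trace plus next step
        transcript += "\n" + reply
        if "Final Answer:" in reply:
            return reply.split("Final Answer:", 1)[1].strip()
        match = re.search(r"Action: (\w+)\[(.*)\]", reply)
        if match is None:
            break                                 # neither an action nor an answer
        tool_name, tool_input = match.groups()
        observation = TOOLS[tool_name](tool_input)  # act on the environment
        transcript += f"\nObservation: {observation}"
    return "No answer reached"

print(react("What is the capital of France?"))
```

Each pass through the loop adds a thought, an action and an observation to the transcript, so the next call to the model sees everything gathered so far.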

More About Agents & Agentic Spectrum

AI Agents are programs where LLM outputs control the workflow. ~ HuggingFace

Under this definition, the term AI Agent is not a binary concept — it doesn’t simply exist as a yes or no.

Instead, agency exists on a continuous spectrum, depending on how much control or influence you grant the large language model (LLM) over your workflow.

Avoid AI Agents when tasks are simple, deterministic & can be solved with predefined workflows.

Any effective AI-powered system will inevitably need to provide LLMs with some level of access to the real world. (This is where tools come into play.)

For example, this could include the ability to call a search tool for external information or interact with specific programs to complete a task.

In essence, LLMs require a degree of agency to function effectively. Agentic programs serve as the bridge that connects LLMs to the outside world, enabling them to act and adapt dynamically.
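To make the spectrum a little more concrete, here is a rough sketch of three levels of agency in Python. The `fake_llm` function and its canned replies are hypothetical stand-ins for a real model call, and the level names are only labels for this example.

```python
def fake_llm(prompt: str) -> str:
    """Stand-in for a model call: returns canned text based on the prompt."""
    if prompt.startswith("Is this urgent?"):
        return "yes"
    if prompt.startswith("Pick a tool"):
        return "multiply 6 7"
    return "Hello!"

# Level 1: the LLM output is shown to the user and has no effect on control flow.
def simple_processor(message: str) -> str:
    return fake_llm(message)

# Level 2 (router): the LLM output decides which predefined branch runs.
def router(message: str) -> str:
    if fake_llm("Is this urgent? " + message) == "yes":
        return "escalate"
    return "queue"

# Level 3 (tool call): the LLM output names the function and its arguments.
TOOLS = {"add": lambda a, b: a + b, "multiply": lambda a, b: a * b}

def tool_caller(message: str) -> int:
    name, *args = fake_llm("Pick a tool for: " + message).split()
    return TOOLS[name](*(int(arg) for arg in args))

print(simple_processor("Hi"))
print(router("The server is down"))
print(tool_caller("six times seven"))
```

A multi-step agent sits one level higher again: the tool-calling step is placed inside a loop, and the LLM also decides when the loop should stop.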

Back to SmolAgents

A multi-step SmolAgent has this code structure:

    memory = [user_defined_task]
    while llm_should_continue(memory):  # this loop is the multi-step part
        action = llm_get_next_action(memory)  # this is the tool-calling part
        observations = execute_action(action)
        memory += [action, observations]

From this snippet of code, note the key elements: memory, a loop, actions (where the tools are called) and observations.
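To see the loop actually run, the three helper functions can be filled in with stand-ins. In this sketch the function names come from the snippet above, but their bodies are assumptions: a real agent would ask the LLM for the next action, whereas here the "LLM" simply counts a number down to zero. The `max_steps` guard is also an addition for the example, so the loop cannot run forever.

```python
def llm_should_continue(memory):
    # Stop once the latest observation reports that the task is done.
    return memory[-1] != "done"

def llm_get_next_action(memory):
    # A real agent would prompt the LLM with the memory; this stub
    # just picks up the last number it has seen.
    last_number = [item for item in memory if isinstance(item, int)][-1]
    return ("decrement", last_number)

def execute_action(action):
    _name, value = action
    result = value - 1
    return "done" if result == 0 else result

def run_agent(user_defined_task, max_steps=10):
    memory = [user_defined_task]
    steps = 0
    while llm_should_continue(memory) and steps < max_steps:
        action = llm_get_next_action(memory)
        observations = execute_action(action)
        memory += [action, observations]
        steps += 1
    return memory

print(run_agent(2))
```

The returned list is the agent's memory: the task, followed by alternating actions and observations until the final observation signals completion.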

Below are the seven main elements of a SmolAgent. The LLM forms the backbone of the AI Agent, and the list of tools gives the agent contact with the outside world via API calls, web browsing and so on.

Error logging provides inspectability, observability and discoverability into the workings of the agent.

Considering the image below, this system operates in a loop, performing a new action at each step.

These actions may involve calling pre-defined tools (essentially functions) to interact with the environment.

The loop continues until the system’s observations indicate that a satisfactory state has been achieved to complete the task.

Run Your First SmolAgent In A Colab Notebook

The quickest way to have a SmolAgent up and running is via a Colab Notebook.

You will need a HuggingFace login, from which you can create an access token that allows you to call the HuggingFace Inference API.

This is all free to do…
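If you would rather not paste the token into an interactive prompt, one option is to read it from an environment variable once huggingface_hub is installed. The sketch below assumes the token has been stored in a variable named HF_TOKEN; the `read_hf_token` and `login_from_env` helpers are hypothetical names, while `login(token=...)` is the huggingface_hub login function called with an explicit token.

```python
import os

def read_hf_token(env_var: str = "HF_TOKEN") -> str:
    """Return the token stored in the given environment variable."""
    token = os.environ.get(env_var, "").strip()
    if not token:
        raise RuntimeError(f"Set the {env_var} environment variable first")
    return token

def login_from_env(env_var: str = "HF_TOKEN") -> None:
    # Imported lazily so read_hf_token works without huggingface_hub installed.
    from huggingface_hub import login
    login(token=read_hf_token(env_var))
```

Calling `login_from_env()` in a notebook cell would then take the place of the interactive prompt.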

Begin by running these installs…

    !pip install -q smolagents
    !pip install huggingface_hub

Then log into HuggingFace via the notebook:

    from huggingface_hub import login

    login()

Set up your agent:

    from smolagents import CodeAgent, DuckDuckGoSearchTool, HfApiModel

    model_id = "microsoft/phi-4"

    # Uses the default model; pass HfApiModel(model_id=model_id) to choose one explicitly.
    agent = CodeAgent(tools=[DuckDuckGoSearchTool()], model=HfApiModel())
    agent

    # The output: <smolagents.agents.CodeAgent at 0x781b4bd8a3d0>

Next we run the SmolAgent:

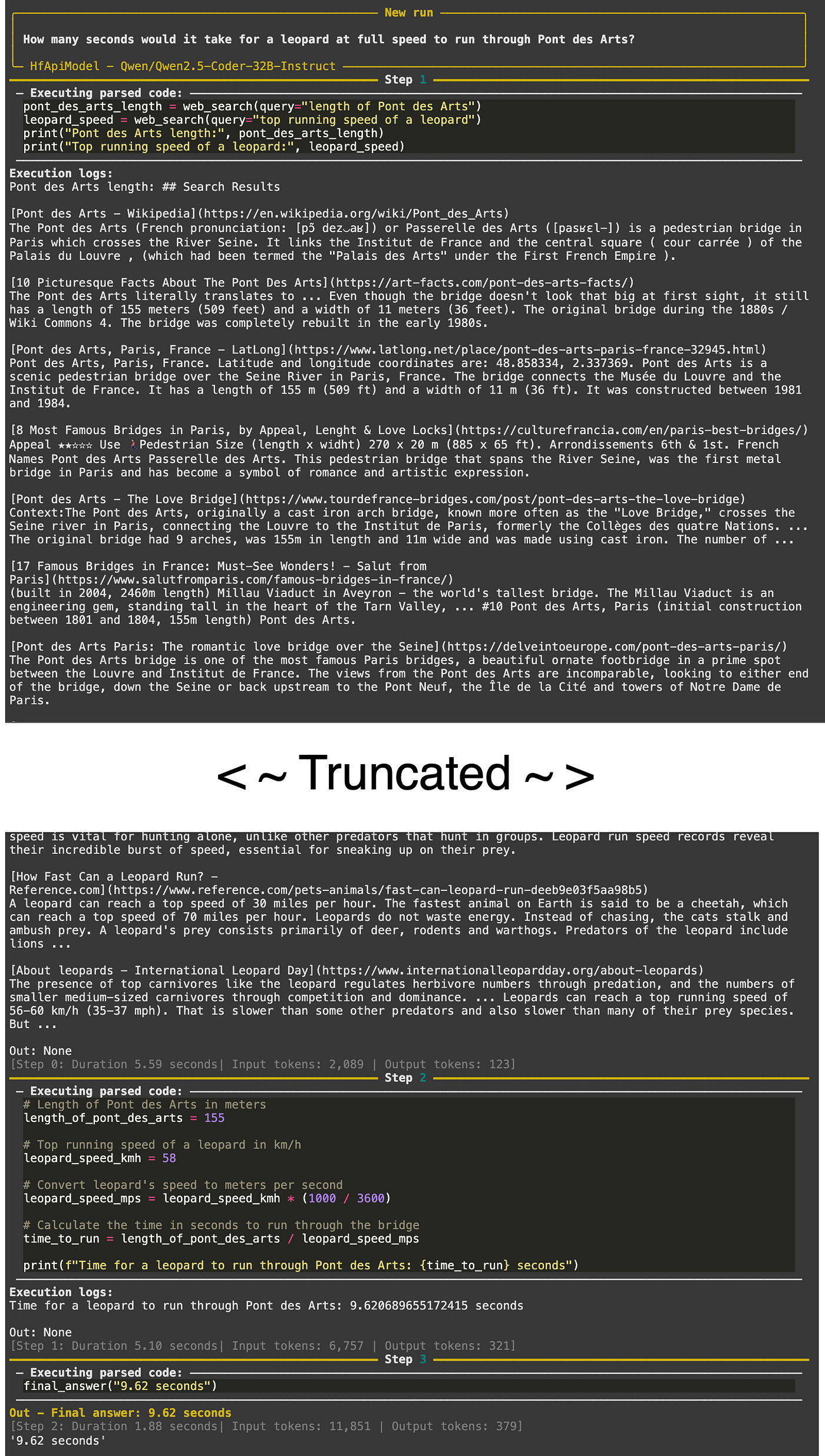

    agent.run("How many seconds would it take for a leopard at full speed to run through Pont des Arts?")

And the output:

If you read through the text in the image above, you will notice how logical it is, and how the agent follows a well-thought-out routine.
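The calculation behind the answer is simple enough to check by hand. The sketch below shows the kind of arithmetic involved; the two input values (a top speed of roughly 58 km/h for a leopard and a length of roughly 155 m for the Pont des Arts) are commonly quoted figures used here as assumptions, not values taken from the agent's run.

```python
LEOPARD_TOP_SPEED_KMH = 58.0    # assumed figure
PONT_DES_ARTS_LENGTH_M = 155.0  # assumed figure

def crossing_time_seconds(length_m: float, speed_kmh: float) -> float:
    speed_ms = speed_kmh * 1000 / 3600  # convert km/h to m/s
    return length_m / speed_ms

seconds = crossing_time_seconds(PONT_DES_ARTS_LENGTH_M, LEOPARD_TOP_SPEED_KMH)
print(f"{seconds:.1f} seconds")
```

With different source figures the agent may land on a slightly different number, which is worth keeping in mind when comparing runs.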

Running A SmolAgent Locally On A MacBook

To run a SmolAgent locally, all you need is the Terminal application, found under the Other application grouping. Everything we need for this demo can run in Terminal.

On macOS, the easiest approach is to create a virtual environment to run your agent in:

    python3 -m venv smol

As seen above, I called the virtual environment smol.

Then activate the virtual environment:

    source smol/bin/activate

On the Terminal command line, the prefix (smol) shows that you are now in your virtual environment.
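If you want to double-check from Python that the interpreter really belongs to the virtual environment, comparing `sys.prefix` with `sys.base_prefix` is a common approach for environments created with venv. The function name below is just an illustration.

```python
import sys

def in_virtualenv() -> bool:
    """True when the running interpreter belongs to a venv-style environment."""
    return sys.prefix != sys.base_prefix

print("Inside a virtual environment:", in_virtualenv())
```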

Create a file called smolAgent.py for the Python code, with the command:

    vim smolAgent.py

And paste the following code for the complete agent into the file:

    from typing import Optional

    from smolagents import HfApiModel, LiteLLMModel, TransformersModel, tool
    from smolagents.agents import CodeAgent, ToolCallingAgent

    # Choose which inference type to use!
    available_inferences = ["hf_api", "transformers", "ollama", "litellm"]
    chosen_inference = "transformers"

    print(f"Chose model: '{chosen_inference}'")

    if chosen_inference == "hf_api":
        model = HfApiModel(model_id="meta-llama/Llama-3.3-70B-Instruct")
    elif chosen_inference == "transformers":
        model = TransformersModel(model_id="HuggingFaceTB/SmolLM2-1.7B-Instruct", device_map="auto", max_new_tokens=1000)
    elif chosen_inference == "ollama":
        model = LiteLLMModel(
            model_id="ollama_chat/llama3.2",
            api_base="http://localhost:11434",  # replace with remote open-ai compatible server if necessary
            api_key="your-api-key",  # replace with API key if necessary
            num_ctx=8192,  # ollama default is 2048 which will often fail horribly. 8192 works for easy tasks, more is better. Check https://huggingface.co/spaces/NyxKrage/LLM-Model-VRAM-Calculator to calculate how much VRAM this will need for the selected model.
        )
    elif chosen_inference == "litellm":
        # For anthropic: change model_id below to 'anthropic/claude-3-5-sonnet-latest'
        model = LiteLLMModel(model_id="gpt-4o")

    @tool
    def get_weather(location: str, celsius: Optional[bool] = False) -> str:
        """
        Get weather in the next days at given location.
        Secretly this tool does not care about the location, it hates the weather everywhere.

        Args:
            location: the location
            celsius: whether to report the temperature in Celsius
        """
        return "The weather is really with torrential rains and temperatures below -10°C"

    agent = ToolCallingAgent(tools=[get_weather], model=model)
    print("ToolCallingAgent:", agent.run("What's the weather like in Paris?"))

    agent = CodeAgent(tools=[get_weather], model=model)
    print("CodeAgent:", agent.run("What's the weather like in Paris?"))

The chmod 777 command gives the file full permissions: read, write and execute, for all users.

In general, take caution when changing permissions for users and files.

    chmod 777 smolAgent.py

And run your agent:

    python smolAgent.py

The output from the SmolAgent:

You can experiment by changing the input or question you pose to the agent. Also, a great next step would be to change the SmolAgent to prompt the user for another input after completing the chain.
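One way to approach that next step is a small loop around `agent.run`. This is a sketch rather than a tested extension of the script above: `EchoAgent` is a hypothetical stand-in so the loop can run without a model, and the only thing assumed of a real agent is that it exposes the same `run(task)` method used earlier. The `read_input` and `write` parameters are likewise illustrative, added so the loop can be driven by scripted input.

```python
class EchoAgent:
    """Hypothetical stand-in for a SmolAgent; only mimics the run() method."""

    def run(self, task: str) -> str:
        return f"(pretend answer to: {task})"

def chat_loop(agent, read_input=input, write=print) -> int:
    """Keep asking for tasks until the user enters a blank line or 'exit'."""
    answered = 0
    while True:
        task = read_input("Ask the agent (blank line or 'exit' to stop): ").strip()
        if task == "" or task.lower() == "exit":
            break
        write(agent.run(task))
        answered += 1
    return answered

# Scripted input so the example runs without a keyboard.
scripted = iter(["What's the weather like in Paris?", "exit"])
count = chat_loop(EchoAgent(), read_input=lambda prompt: next(scripted))
print("Tasks answered:", count)
```

In smolAgent.py, the two print calls at the end could be swapped for `chat_loop(agent)`, which would fall back to the keyboard for input.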

Chief Evangelist @ Kore.ai | I’m passionate about exploring the intersection of AI and language. From Language Models, AI Agents to Agentic Applications, Development Frameworks & Data-Centric Productivity Tools, I share insights and ideas on how these technologies are shaping the future.