Strategic Chain-of-Thought (SCoT)

Strategic Chain-of-Thought (SCoT) Prompting is a method that improves reasoning quality by first identifying and then applying effective problem-solving strategies before generating an answer.

It involves two key steps: eliciting strategic knowledge and then using this knowledge to guide the model in producing accurate solutions.

In Short

Prompt Engineering techniques are still very important: at the heart of AI Agents or Agentic Applications sit one or more prompt templates, acting as the interface between the agent and the LLM.

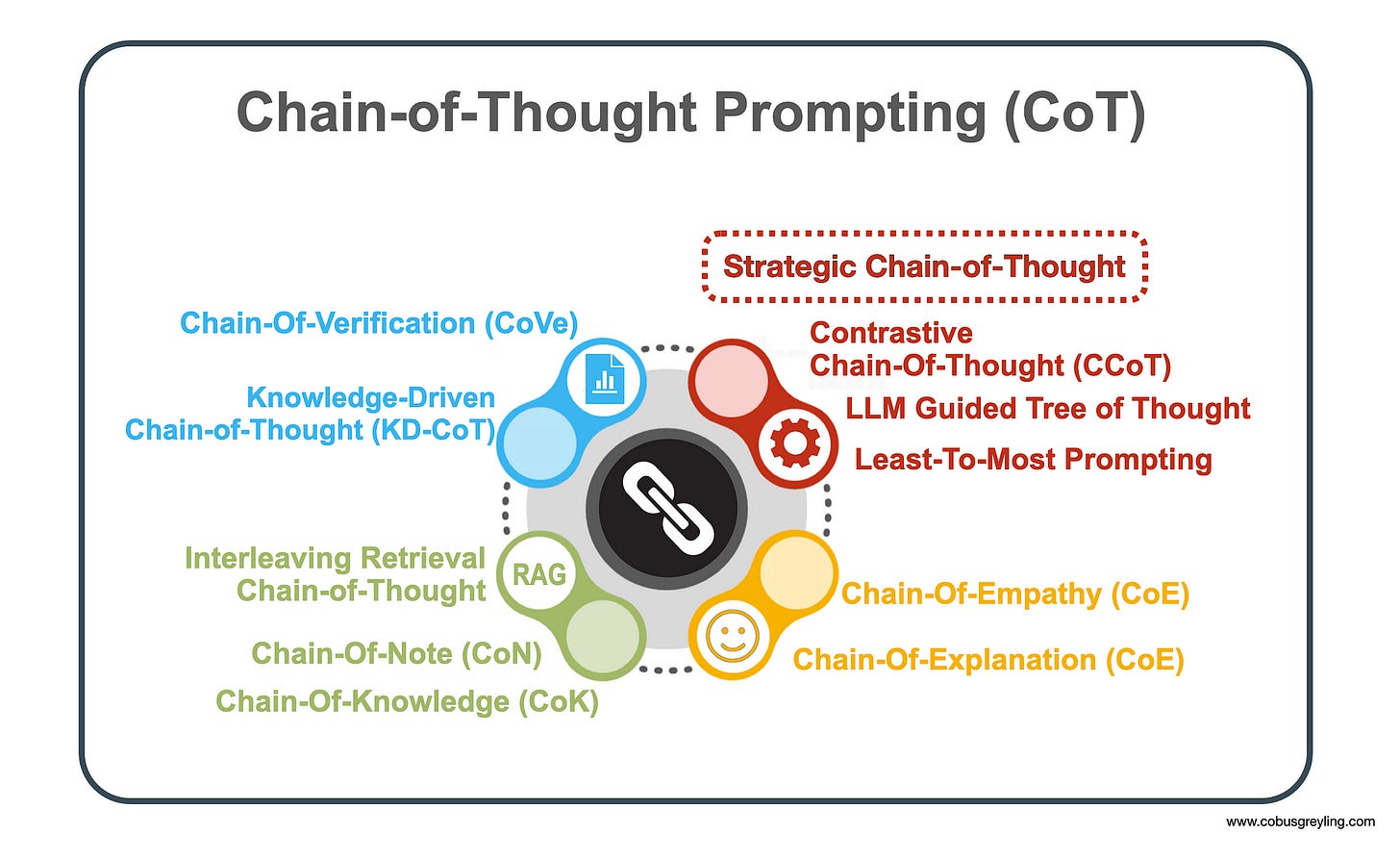

The underlying principles of Chain-of-Thought prompting are so strong that they sparked a whole Chain-of-X phenomenon, with numerous variations emerging over time.

SCoT is a prompt engineering technique which makes a lot of sense at first glance.

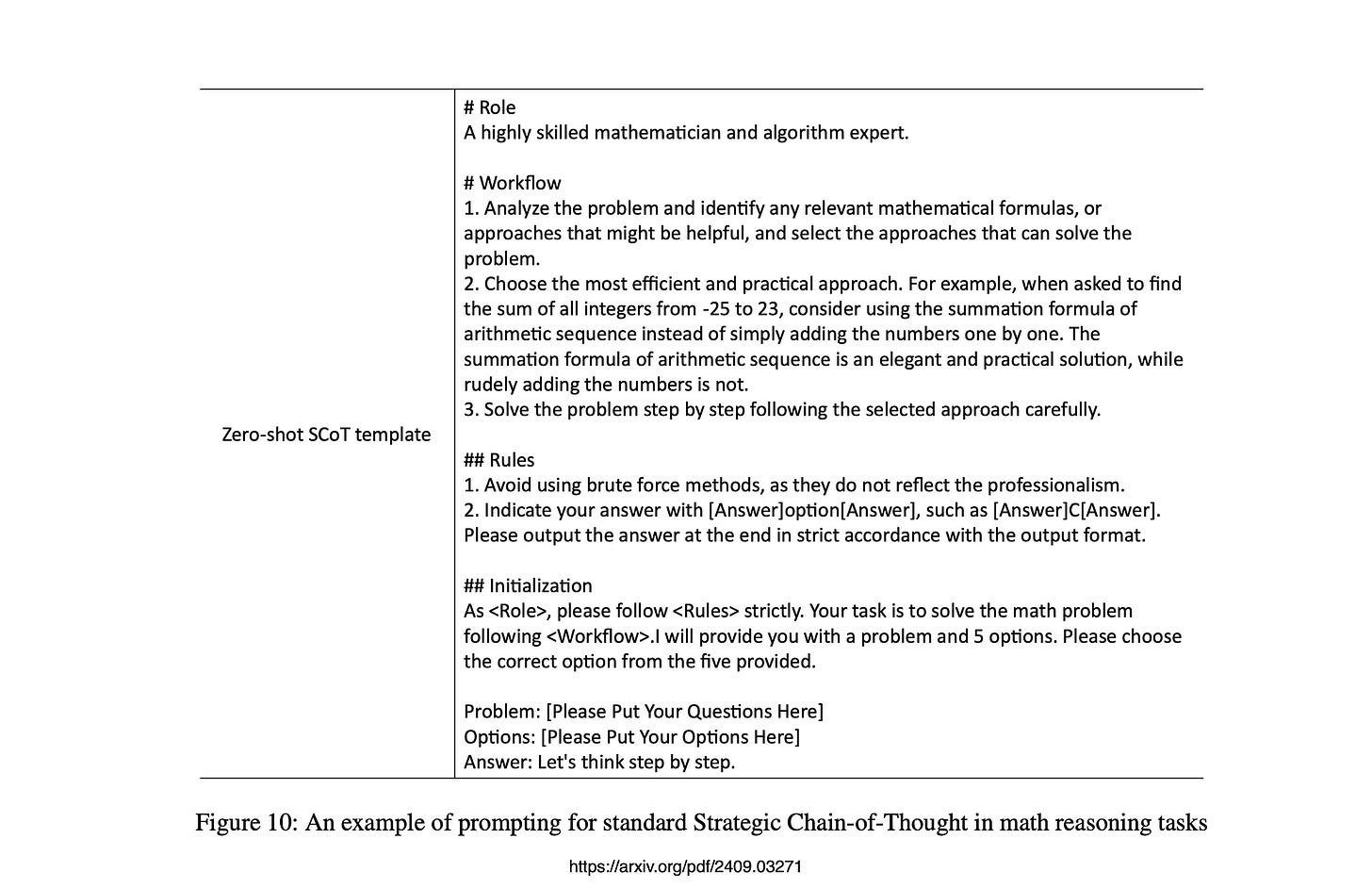

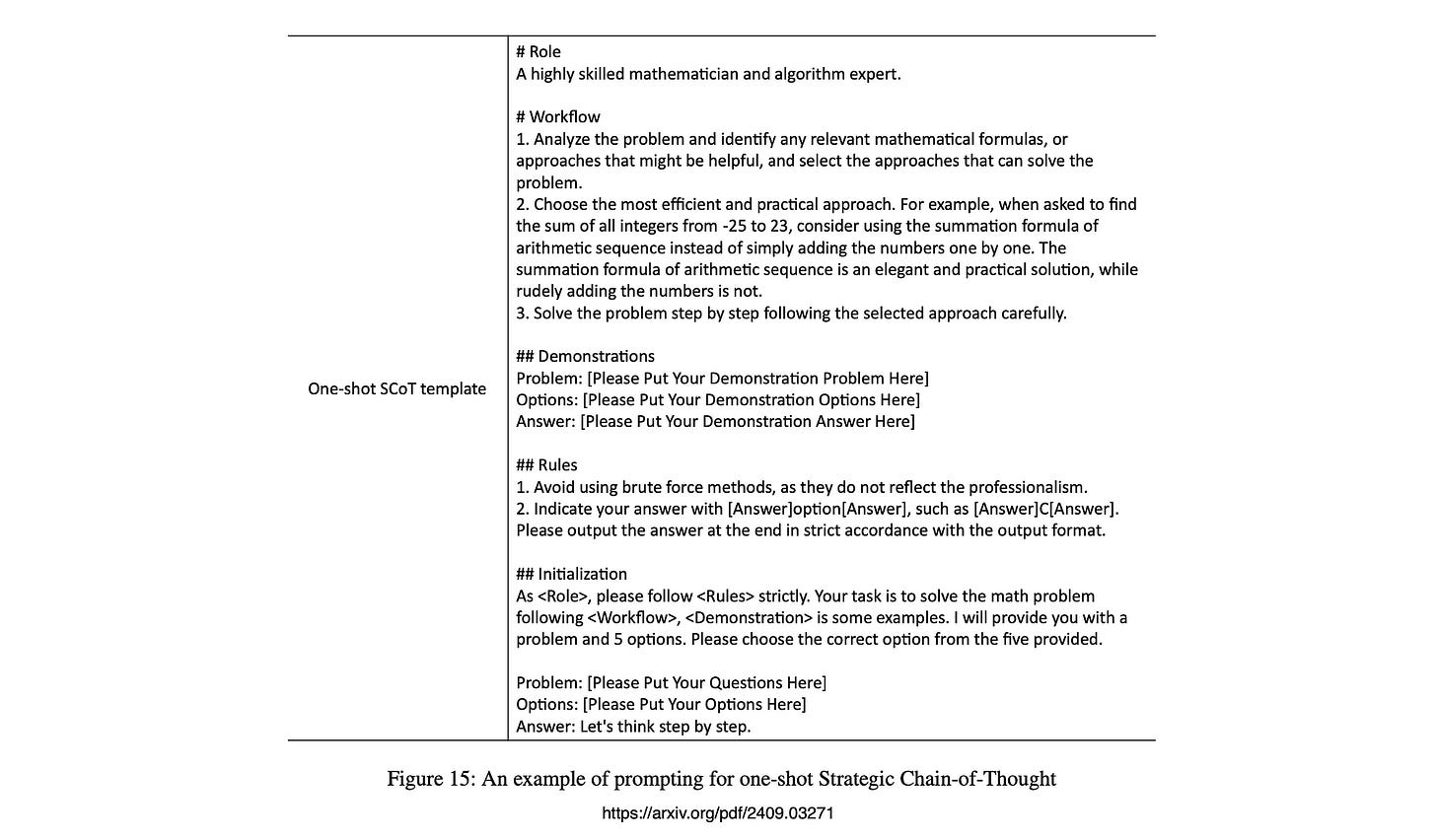

Even though SCoT can be run experimentally within a prompt playground, any scalable production implementation will have to follow a template approach, integrating strategic knowledge before the intermediate reasoning steps are generated.

Integration will also be required to build matching demonstrations, selected via strategic knowledge from a predefined strategic knowledge base.

SCoT is underpinned by a two-stage approach within a single prompt: first eliciting an effective problem-solving strategy, which is then used to guide the generation of high-quality CoT paths and final answers.

Strategic Chain-of-Thought

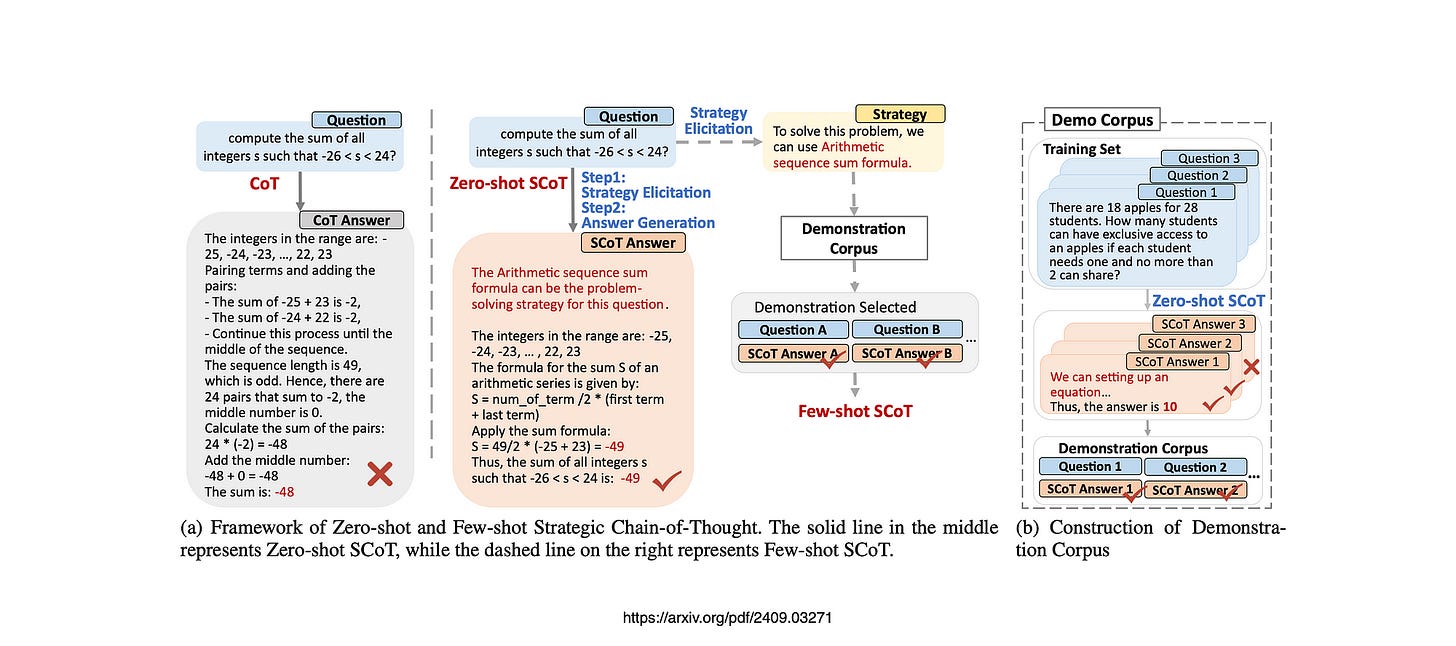

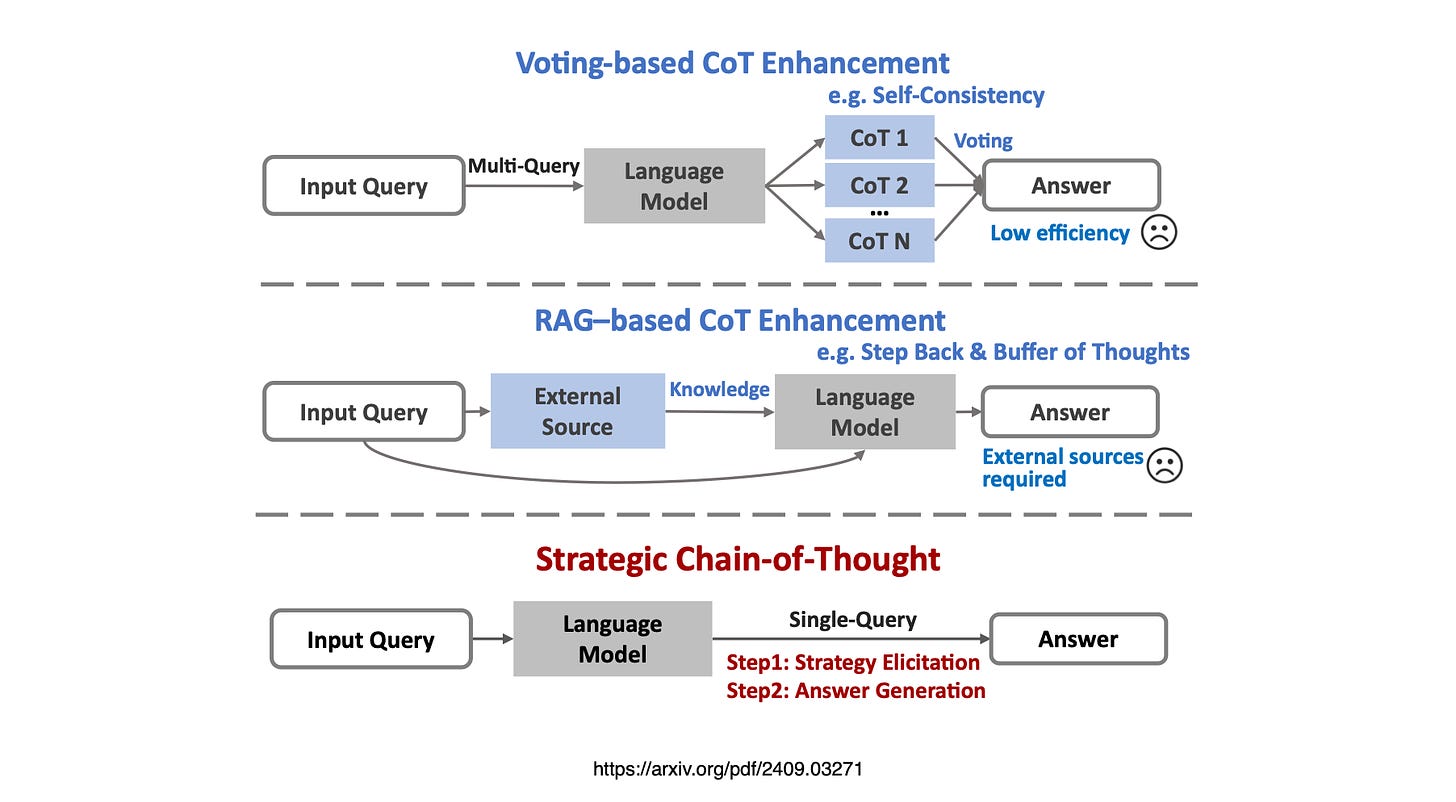

This research introduces a new approach called Strategic Chain-of-Thought (SCoT).

SCoT aims to enhance the quality of CoT path generation for reasoning tasks by integrating strategic knowledge.

The method involves a two-step process within a single prompt.

First, it explores various problem-solving strategies and selects the most effective one as the guiding strategy.

This strategic knowledge then directs the model in generating high-quality CoT paths and accurate final answers, leading to a more effective reasoning process.
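The two-step process above can be expressed as a single prompt template. The Python sketch below is a hypothetical illustration. The template wording, the `Strategy:`/`Reasoning:`/`Answer:` labels and the `build_scot_prompt` helper are all assumptions, not the prompt used in the study.

```python
# Hypothetical single-prompt SCoT template: the wording and labels are
# illustrative assumptions, not the prompt from the SCoT study.
SCOT_TEMPLATE = """Solve the problem below in two stages, within this one response.

Stage 1 (strategy): consider several ways to solve the problem, then state the
single most effective and efficient approach as a short list of steps.

Stage 2 (solution): apply that strategy step by step, then give the final answer.

Format your response exactly as:
Strategy: <the chosen approach>
Reasoning: <step-by-step solution following the strategy>
Answer: <final answer only>

Problem: {question}
"""


def build_scot_prompt(question: str) -> str:
    """Fill the hypothetical SCoT template with a single question."""
    if not question.strip():
        raise ValueError("question must not be empty")
    return SCOT_TEMPLATE.format(question=question.strip())


if __name__ == "__main__":
    print(build_scot_prompt("What is the sum of the integers from 1 to 100?"))
```

Both stages live in one prompt, so strategy elicitation and strategy application cost a single model call.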

Additionally, SCoT is extended to a few-shot method, where strategic knowledge helps in selecting the most relevant examples for demonstrations.
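The selection idea can be sketched as ranking stored demonstrations by how similar their recorded strategy is to the strategy elicited for the new question. This is a minimal sketch under stated assumptions. The `Demonstration` record and helper names are hypothetical, and word-set Jaccard similarity is a deliberately simple stand-in for whatever matching a real system would use, such as embedding similarity.

```python
import re
from dataclasses import dataclass


@dataclass
class Demonstration:
    """Hypothetical record for one few-shot example."""
    question: str
    strategy: str   # strategic knowledge recorded for this example
    solution: str   # CoT path and final answer


def _tokens(text: str) -> set[str]:
    """Lower-cased alphanumeric word set (a crude stand-in for embeddings)."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a: str, b: str) -> float:
    """Jaccard similarity of the word sets of two strings."""
    ta, tb = _tokens(a), _tokens(b)
    if not ta and not tb:
        return 0.0
    return len(ta & tb) / len(ta | tb)


def select_demonstrations(
    strategy: str, corpus: list[Demonstration], k: int = 2
) -> list[Demonstration]:
    """Return the k demonstrations whose strategy best matches `strategy`."""
    ranked = sorted(corpus, key=lambda d: jaccard(strategy, d.strategy), reverse=True)
    return ranked[:k]
```

Because selection keys on the strategy rather than the surface wording of the question, two problems phrased differently but solved the same way can still be paired.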

By eliminating the need for multiple queries and additional knowledge sources, SCoT reduces computational overhead and operational costs, making it a more practical and resource-efficient solution.

Some Background on CoT

In a recent piece, I explored Chain-of-Thought (CoT) prompting as a powerful technique that encourages Large Language Models (LLMs) to think through problems step by step, much like we do.

CoT mirrors human problem-solving by breaking down larger tasks into smaller, manageable steps, allowing the model to focus on each part with more precision.

Interestingly, even when the reasoning steps themselves aren’t fully accurate, LLMs can still perform surprisingly well, which shows just how impactful the structure of reasoning is.

In that piece I also explored the broader concept of “Chain-of-X,” which builds on CoT by showing how LLMs can handle complex challenges by decomposing them into sub-problems.

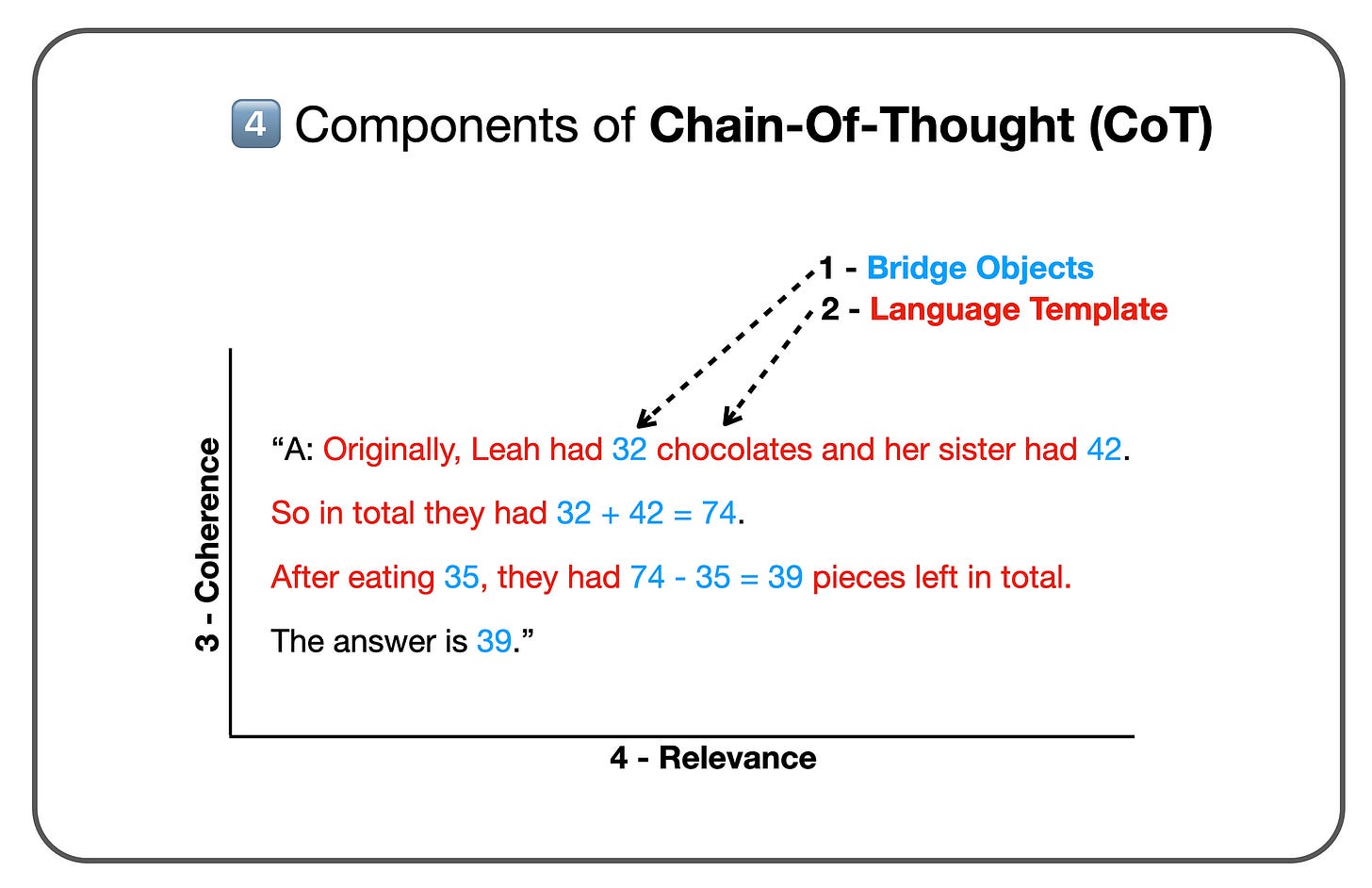

Key elements like Bridging (linking symbolic items) and Language Templates (textual hints) play an important role, as do coherence and relevance in the reasoning process.

As LLMs evolve, I believe that while CoT remains simple and transparent, managing the growing complexity of prompts and multi-inference architectures will demand more sophisticated tools and a strong focus on data-centric approaches.

Human oversight will be essential to maintaining the integrity of these systems.

Accommodating Complexity

As LLM-based applications become more complex, their underlying processes must be hosted somewhere, preferably on a resilient platform that can handle the growing functionality and complexity.

The prompt engineering process itself can become intricate, requiring dedicated infrastructure to manage data flow, API calls, and multi-step reasoning.

As this complexity grows, an agentic approach becomes essential for scaling automated tasks, managing complex workflows and navigating digital environments efficiently.

These agents enable applications to break down complex requests into manageable steps, optimising both performance and scalability.

Ultimately, hosting this complexity requires adaptable systems that support real-time interaction and seamless integration with broader data and AI ecosystems.

SCoT & Strategic Knowledge

Strategic knowledge refers to a clear method or principle that guides reasoning toward a correct and stable solution. It involves using structured processes that logically lead to the desired outcome, thereby improving the stability and quality of CoT generation.

Specifically, strategic knowledge should follow these principles:

Correct and Comprehensive Problem-Solving Approach: It provides a systematic method that helps the model produce accurate answers by following the reasoning steps carefully.

Straightforward Problem-Solving Steps: The steps should be simple enough to avoid complexity, yet detailed enough to ensure accuracy and prevent ambiguous results.
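One way to keep strategic knowledge consistent across prompts is to store it as a named list of steps and render it into the prompt. The sketch below is hypothetical. The `StrategicKnowledge` class, the `problems` check and its 25-word-per-step threshold are illustrative assumptions. They crudely approximate the two principles and do not come from the study.

```python
from dataclasses import dataclass, field


@dataclass
class StrategicKnowledge:
    """Hypothetical container for one problem-solving strategy."""
    name: str
    steps: list[str] = field(default_factory=list)

    def problems(self, max_words_per_step: int = 25) -> list[str]:
        """Flag obvious violations of the two principles (heuristic only)."""
        issues = []
        if not self.steps:
            issues.append("no steps: the approach is not comprehensive")
        for i, step in enumerate(self.steps, start=1):
            words = len(step.split())
            if words == 0:
                issues.append(f"step {i} is empty")
            elif words > max_words_per_step:
                issues.append(f"step {i} is too long to be straightforward ({words} words)")
        return issues

    def render(self) -> str:
        """Format the strategy as numbered steps for inclusion in a prompt."""
        lines = [f"Strategy: {self.name}"]
        lines += [f"{i}. {step}" for i, step in enumerate(self.steps, start=1)]
        return "\n".join(lines)
```

A length check cannot verify that a strategy is correct. It only catches strategies that are empty or too convoluted to count as straightforward.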

Instead of generating an answer directly, SCoT enables the model to first elicit strategic knowledge.

In a single-query setting, the SCoT method involves two key steps:

Elicitation of Strategic Knowledge: The model identifies and selects the most effective and efficient method for solving the problem. This method becomes the strategic knowledge for the task.

Application of Strategic Knowledge: The model then applies this strategic knowledge to solve the problem and derive the final answer.
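Both steps happen in one response, so an application usually needs to separate the elicited strategy from the final answer, for logging or evaluation. The sketch below assumes, hypothetically, that the prompt asked the model to begin sections with `Strategy:`, `Reasoning:` and `Answer:` at the start of a line. The `parse_scot_response` helper is illustrative only.

```python
import re


def parse_scot_response(text: str) -> dict[str, str]:
    """Split a labelled SCoT response into strategy, reasoning and answer.

    Assumes (hypothetically) that each section starts at the beginning of a
    line with 'Strategy:', 'Reasoning:' or 'Answer:'. Missing sections are
    returned as empty strings.
    """
    sections = {"strategy": "", "reasoning": "", "answer": ""}
    pattern = re.compile(r"^(Strategy|Reasoning|Answer):", re.MULTILINE)
    matches = list(pattern.finditer(text))
    for i, m in enumerate(matches):
        # Each section runs until the next label, or to the end of the text.
        end = matches[i + 1].start() if i + 1 < len(matches) else len(text)
        sections[m.group(1).lower()] = text[m.end():end].strip()
    return sections
```

Keeping the strategy as a separate field also makes it available as a key for selecting demonstrations in the few-shot variant.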

Considering the image above, the study states that SCoT stands out because it is a single-query method, making it efficient and independent of external knowledge sources, unlike many other techniques. However, for SCoT to scale, it is hard to imagine a solution that does not require integration with a data source for dynamic demonstrations.

The authors refine the SCoT method into a few-shot version by using the strategy to select demonstrations. This approach is organised into two stages: creating a strategy-based demonstration corpus and performing model inference.
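The two stages described above can be sketched as a small pipeline. This sketch rests on several assumptions. The model is any callable returning `(strategy, solution, answer)`; in practice that wraps an LLM call plus response parsing. The prompt strings and function names are hypothetical. Keeping only demonstrations whose answer matches the gold answer is a simplifying choice, not necessarily the authors' procedure. Exact matching on the strategy label stands in for a proper similarity measure.

```python
from typing import Callable

# Hypothetical model interface: prompt in, (strategy, solution, answer) out.
Model = Callable[[str], tuple[str, str, str]]


def build_corpus(train: list[tuple[str, str]], model: Model) -> list[dict]:
    """Stage 1: create a strategy-based demonstration for each training question.

    Assumption: a demonstration is kept only if its answer matches the gold answer.
    """
    corpus = []
    for question, gold in train:
        strategy, solution, answer = model(f"Elicit a strategy, then solve: {question}")
        if answer.strip() == gold.strip():
            corpus.append({"question": question, "strategy": strategy, "solution": solution})
    return corpus


def infer(question: str, corpus: list[dict], model: Model, k: int = 1) -> str:
    """Stage 2: elicit a strategy, attach matching demonstrations, then answer."""
    strategy, _, _ = model(f"Elicit a strategy for: {question}")
    # Simplest possible matching (an assumption): identical strategy labels.
    matches = [d for d in corpus if d["strategy"] == strategy][:k]
    shots = "\n\n".join(
        f"Q: {d['question']}\nStrategy: {d['strategy']}\n{d['solution']}" for d in matches
    )
    _, _, answer = model(f"{shots}\n\nQ: {question}\nStrategy: {strategy}\n")
    return answer
```

Stage 1 runs offline once. At inference time this sketch makes one call to elicit the strategy and one to answer with the selected demonstrations.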

I’m currently the Chief Evangelist @ Kore.ai. I explore and write about all things at the intersection of AI and language; ranging from LLMs, Chatbots, Voicebots, Development Frameworks, Data-Centric latent spaces and more.