SuperMemory MCP: Universal Memory across LLMs

Centralising Your AI Agent’s Memory with the Model Context Protocol (MCP)

Introduction to MCP and Its Growing Role

Recently I connected an AI Agent to my Strava account via MCP in a no-code fashion. That got me thinking: my workouts are stored in Strava, and there are MCP integrations for email, spreadsheets and more…

So will we reach a point where MCP integration becomes commonplace and users want to store their “memory” or history in a central repository?

The Model Context Protocol (MCP) is transforming how AI Agents interact with external resources, and once you start experimenting with MCP servers, the potential becomes clear.

Plugging MCP servers into AI Agents is remarkably straightforward, opening up a world of possibilities for developers.

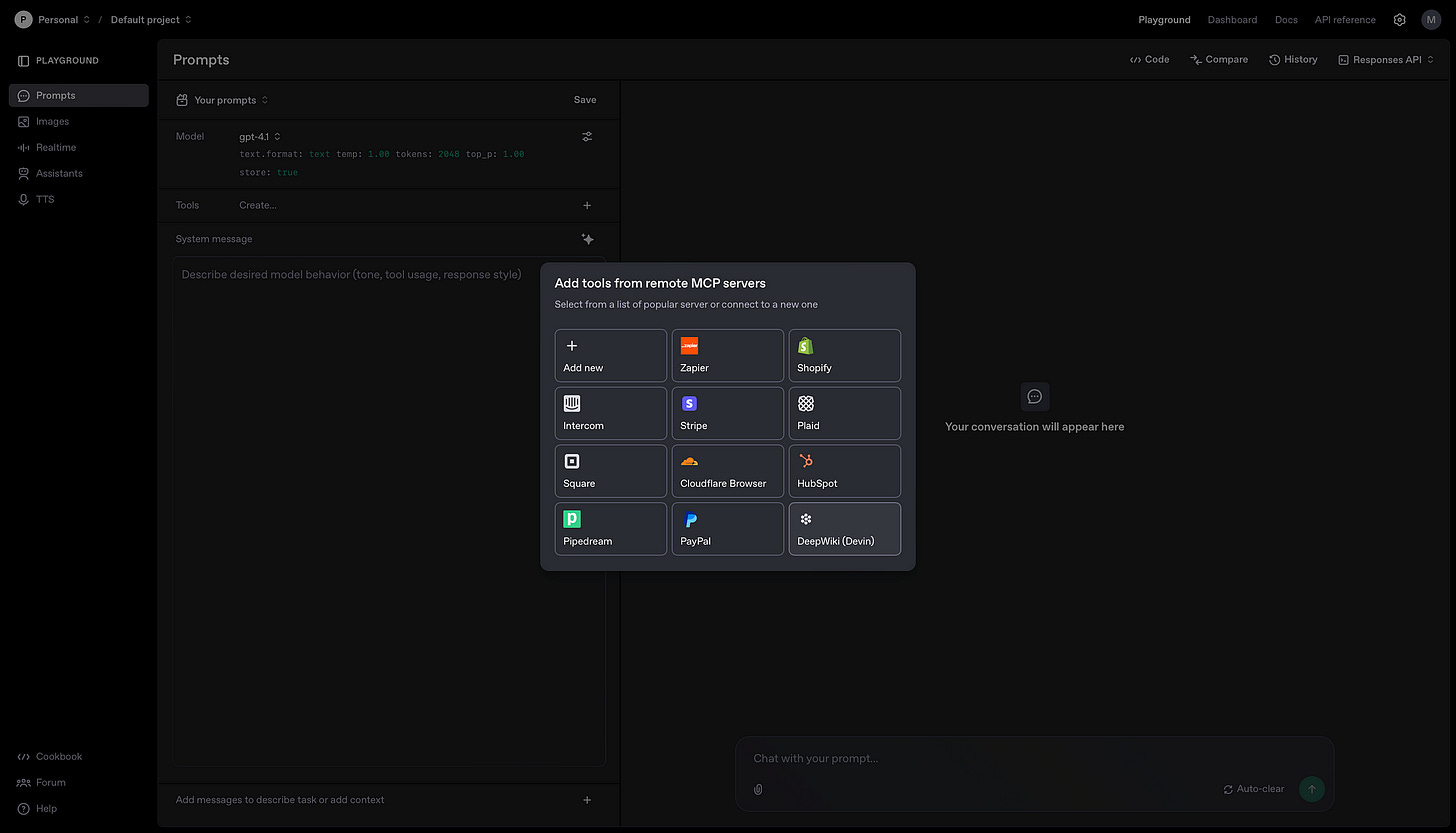

Platforms like the OpenAI Console have simplified adding MCP resources, and the list of compatible servers is expanding rapidly.

MCP, The Bluetooth of AI Agents

The Model Context Protocol (MCP) is the Bluetooth of AI Agents, acting as a universal connector that links services like Strava, email, document management systems and more to enable seamless interaction and data flow.

Much like Bluetooth effortlessly syncs devices, MCP provides a standardised framework for AI Agents to access and process contextual data across diverse platforms, streamlining workflows and boosting efficiency.

By facilitating real-time integration, MCP allows AI Agents to automate tasks, deliver personalised insights and operate cohesively within a multi-service environment.
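To make the "standardised framework" a little more concrete: MCP messages travel as JSON-RPC 2.0, and a client typically discovers a server's tools with `tools/list` and invokes one with `tools/call`. The snippet below is only a minimal sketch of what those request envelopes look like. The helper `jsonrpc_request` and the tool name `get_recent_activities` (with its `limit` argument) are my own hypothetical illustrations, not part of any real Strava server.

```python
import json
from itertools import count

# Simple incrementing request ids, as JSON-RPC requests need a unique id.
_ids = count(1)


def jsonrpc_request(method, params=None):
    """Build a JSON-RPC 2.0 request dict (hypothetical helper for illustration)."""
    msg = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        msg["params"] = params
    return msg


# Ask a server which tools it exposes.
list_tools = jsonrpc_request("tools/list")

# Call one tool by name. The tool name and arguments here are assumptions.
call_tool = jsonrpc_request(
    "tools/call",
    {"name": "get_recent_activities", "arguments": {"limit": 5}},
)

print(json.dumps(call_tool, indent=2))
```

Because every server speaks the same envelope, the agent side of this code would not need to change when a different service is plugged in; only the tool names and arguments differ.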

The Orchestration Layer

It’s not hard to imagine a future where, much like OpenAI’s ecosystem, a standardised catalog of MCP servers becomes a go-to for developers.

However, one aspect of MCP still sparks my curiosity: the orchestration layer.

How does an AI Agent decide which MCP server to tap into?

Should it rely on a single server, multiple servers, or fall back on the model’s trained data?

This decision-making process remains a bit of a puzzle.

For context, NVIDIA has made strides in fine-tuning language models for tool selection using training data from Hugging Face.

Could a similar approach be applied to optimise MCP server selection? It’s a question worth exploring as the technology evolves.
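To give the selection question some shape, here is a deliberately naive sketch: score each server by how many words its description shares with the user's request, and fall back to the model's own knowledge when nothing matches. This is not how any particular agent or the NVIDIA work does it; a real orchestration layer would more likely let a language model reason over tool descriptions. The names `ServerInfo` and `select_servers`, and the server descriptions, are all hypothetical.

```python
import re
from dataclasses import dataclass


@dataclass
class ServerInfo:
    name: str
    description: str  # hypothetical free-text summary of what the server offers


def tokens(text):
    """Lowercase a string and return its set of alphabetic words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def select_servers(query, servers, min_overlap=1):
    """Return server names ranked by word overlap with the query.

    An empty list means no server matched, so the agent would fall back
    on the model's trained data.
    """
    q = tokens(query)
    scored = []
    for s in servers:
        overlap = len(q & tokens(s.description))
        if overlap >= min_overlap:
            scored.append((overlap, s.name))
    # Highest overlap first; ties broken alphabetically for determinism.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [name for _, name in scored]


servers = [
    ServerInfo("strava", "workouts runs rides activities fitness"),
    ServerInfo("email", "send read search email messages inbox"),
    ServerInfo("sheets", "spreadsheets rows cells tables"),
]

multi = select_servers("Summarise my runs this week and email it to me", servers)
none = select_servers("What is the capital of France?", servers)
print(multi, none)
```

Even this toy version surfaces the three outcomes raised above: one server, several servers, or none at all.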

The Role of Memory in AI Agents

At the heart of any AI Agent are three key components: one or more language models, tools (often integrated via MCP), and memory.

Memory, in particular, is critical for delivering personalised and context-aware interactions.

Yet, the fragmented landscape of AI platforms creates a challenge.

Each provider, whether it’s Grok, ChatGPT or another, wants to keep users within its ecosystem, which often leads to siloed user memory and context.

This fragmentation can dilute the user experience, as no single platform has a complete picture of the user’s needs or history.

Contrast this with mobile AI assistants like Siri, which thrive because they have access to the full context of your phone — contacts, messages, apps, and more.

This unified context enables seamless, intuitive interactions.

The question is: how can we replicate this level of cohesion in the broader AI agent ecosystem?

SuperMemory MCP: A Novel Approach to Centralised Memory

Enter SuperMemory MCP. The core idea is simple yet powerful: create a centralised memory store that belongs to you, accessible by the various AI Agent interfaces you use.

Think of it as a personal memory hub that unifies your context across platforms.

With SuperMemory MCP, your memory isn’t locked into a single provider’s ecosystem.

Instead, it’s stored in a way that allows multiple AI Agents — whether you’re interacting via Grok, ChatGPT, or another interface — to tap into the same, consistent memory source.

This approach mirrors the contextual advantage of mobile AI assistants but extends it to the broader AI landscape.

By leveraging the MCP framework, SuperMemory ensures that your personal memory can be seamlessly integrated as an external resource, enhancing the flexibility and personalisation of AI interactions.
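The idea of one memory store shared by several interfaces can be sketched in a few lines. What follows is a toy in-process stand-in, assuming a store with just two operations (add and search); it is not SuperMemory's actual API, and the class `MemoryHub`, its methods, and the client labels are hypothetical. In practice the store would sit behind an MCP server and each client would reach it through tool calls.

```python
from dataclasses import dataclass, field


@dataclass
class Memory:
    text: str
    source: str  # which client wrote the memory


@dataclass
class MemoryHub:
    """Hypothetical user-owned store standing in for a memory MCP server."""

    memories: list[Memory] = field(default_factory=list)

    def add(self, text, source):
        """Store a memory along with the client that recorded it."""
        self.memories.append(Memory(text, source))

    def search(self, query):
        """Return memories sharing at least one word with the query."""
        words = set(query.lower().split())
        return [m for m in self.memories if words & set(m.text.lower().split())]


hub = MemoryHub()

# One AI interface records something about the user...
hub.add("Training for a half marathon in October", source="client_a")

# ...and a different interface later retrieves it from the same hub.
hits = hub.search("marathon plan")
print([(m.text, m.source) for m in hits])
```

The point of the sketch is ownership: the memory lives in the hub rather than inside either client, so switching interfaces does not mean starting from a blank slate.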

Why It Matters

The implications of SuperMemory MCP are significant.

For users, it means a more cohesive and tailored AI experience, free from the constraints of platform-specific memory silos.

For developers, it offers a standardised way to incorporate user memory into AI agents, streamlining integration via MCP.

As the ecosystem of MCP servers grows, projects like SuperMemory could pave the way for a new standard in AI personalisation, where your memory is truly yours, no matter which AI tool you’re using.

Chief Evangelist @ Kore.ai | I’m passionate about exploring the intersection of AI and language. From Language Models, AI Agents to Agentic Applications, Development Frameworks & Data-Centric Productivity Tools, I share insights and ideas on how these technologies are shaping the future.