The Advent Of Open Agentic Frameworks & Agent Computer Interfaces (ACI)

How Open Agentic Frameworks and ACI Are Redefining Human-Computer Interaction and the Notion of Experience-Augmented Hierarchical Planning

Introduction

Almost two years ago I wrote my first article on AI Agents, with specific focus on the research work done by LangChain. I remember how mesmerised I was by the resilience of AI Agents, their deep level of autonomy, and their ability to handle highly ambiguous queries.

The next challenge was synthesising disparate data sources to produce a knowledge base which can be used for complex question answering. LlamaIndex released, and in my opinion coined the term, Agentic RAG, in which the RAG implementation takes the form of an agentic implementation.

The Aim Of Agentic Frameworks

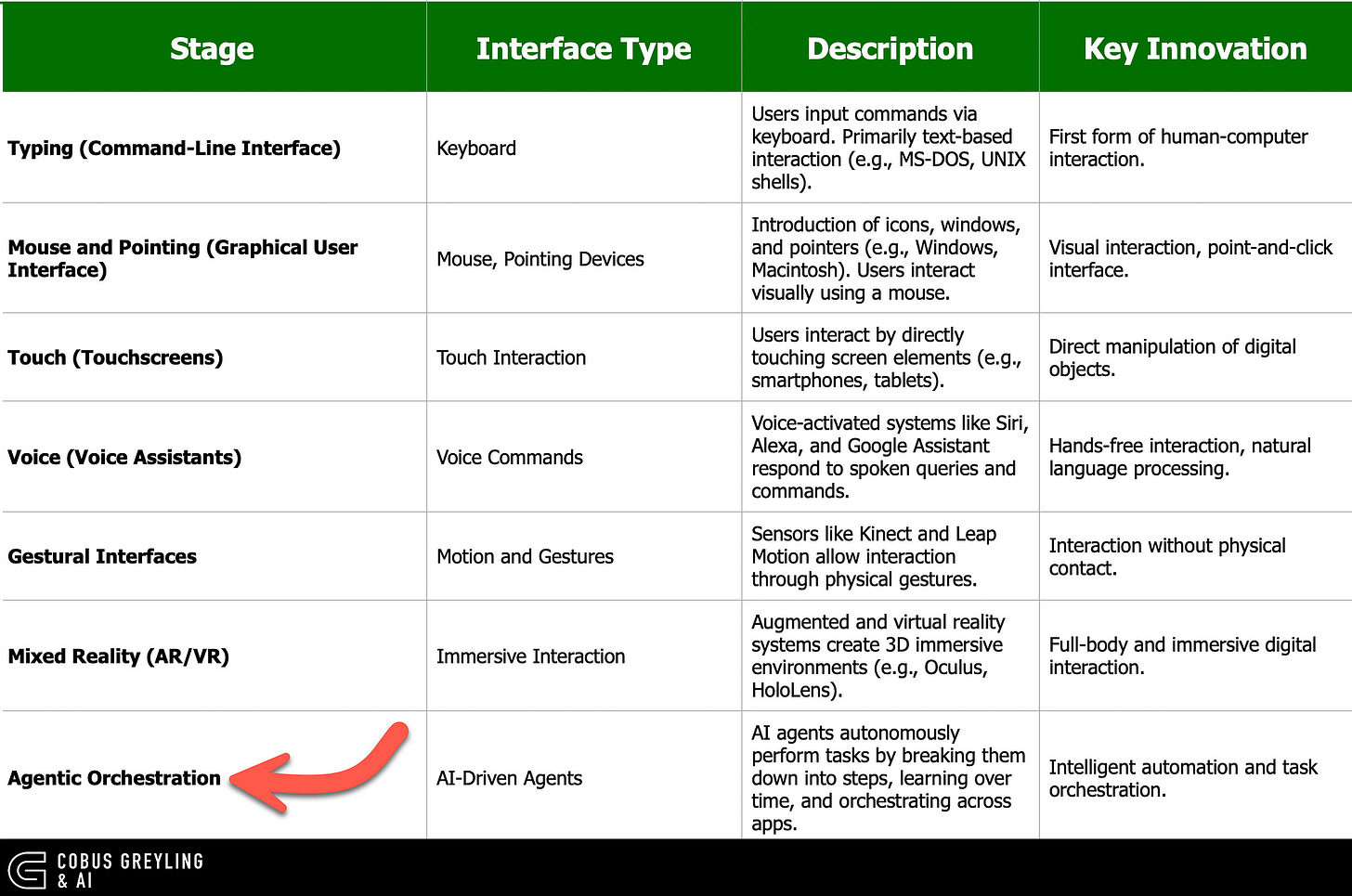

The aim of agentic frameworks is to transform human-computer interaction.

Improving the interface or human-computer interaction has been a focus area for a while now.

Consensus has been reached that the bottleneck in the human-computer interface is the keyboard, whether we are typing with two fingers on a mobile phone or with ten on a full keyboard.

The first step was to allow humans to enter unstructured data and to have the computer structure it. Speech is the easiest and fastest way for humans to deliver data.

The only challenge with speech input used to be its unstructured nature; this has been solved to a large degree with the advent of Language Models.

The next step is something I have been referring to for quite a while as ambient orchestration. Fjord Design & Innovation referred to it as living services.

One of the aims of agentic applications or frameworks is to enable an agent to interact autonomously with user applications and tasks via a Graphical User Interface (GUI).

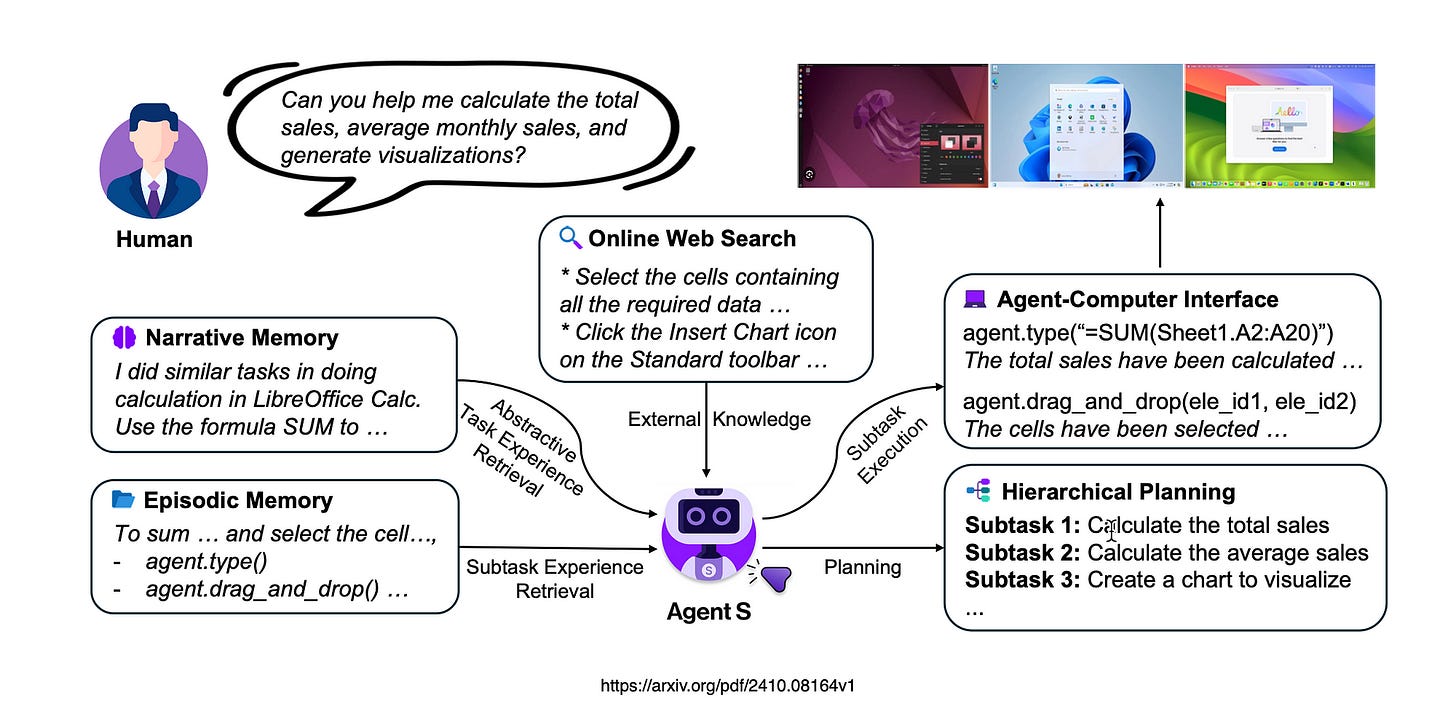

The agentic application is capable of understanding complex tasks and breaking them down into simpler, manageable steps.

At each step, the agent observes the current state, processes the information, and makes a decision on what action to take next.

This approach ensures that the agent follows a logical progression, continuously reassessing and adjusting its actions to effectively complete the task.
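To make the observe, decide and act cycle concrete, here is a minimal sketch in Python. It is not Agent S code; the `ToyEnvironment` class, the `decide` function and the counter task are all hypothetical stand-ins I made up, with a simple counter playing the role of the GUI.

```python
from dataclasses import dataclass


@dataclass
class ToyEnvironment:
    """Hypothetical stand-in for a GUI: a counter that must reach a target."""
    value: int = 0
    target: int = 3

    def observe(self) -> dict:
        # Return the current state, as a screenshot or UI tree would in a real agent.
        return {"value": self.value, "target": self.target}

    def apply(self, action: str) -> None:
        if action == "increment":
            self.value += 1
        elif action == "decrement":
            self.value -= 1


def decide(observation: dict) -> str:
    """Choose the next action from the latest observation only."""
    if observation["value"] < observation["target"]:
        return "increment"
    if observation["value"] > observation["target"]:
        return "decrement"
    return "done"


def run_agent(env: ToyEnvironment, max_steps: int = 10) -> list:
    """Observe, decide, act; repeat until the task is done or steps run out."""
    history = []
    for _ in range(max_steps):
        observation = env.observe()      # 1. observe the current state
        action = decide(observation)     # 2. decide what to do next
        history.append(action)
        if action == "done":
            break
        env.apply(action)                # 3. act, then reassess on the next pass
    return history


env = ToyEnvironment()
trace = run_agent(env)
print(trace)
```

In a real agentic application the `decide` step would be a call to a language model and `apply` would drive the GUI, but the shape of the loop stays the same.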

Three Key Challenges

Agent S focuses on solving three critical challenges in automating computer tasks.

First, it acquires domain-specific knowledge, which allows the agent to understand the context and intricacies of specialised software environments or tasks.

Second, it handles long-term planning by breaking down complex, multi-step tasks into manageable actions, enabling efficient execution over extended periods.

Third, Agent S is designed to manage dynamic and non-uniform interfaces, allowing it to adapt to changing layouts or input forms, ensuring consistency even in unpredictable software environments. These capabilities make Agent S highly adaptable for varied, real-world computing scenarios.

Enabling Technologies

Agent S In A Nutshell

Agent S addresses the following challenges in creating an Agentic Framework…

Domain Knowledge & Open-World Learning

Agents must handle a wide variety of constantly changing applications and websites.

They need specialised, up-to-date domain knowledge.

The ability to continuously learn from open-world experiences is essential.

Complex Multi-Step Planning

Desktop tasks often involve long sequences of interdependent actions.

Agents need to generate plans with clear subgoals and track task progress over long horizons.

This requires an understanding of task dependencies and proper execution sequencing.
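As a rough illustration of subgoals, dependencies and execution sequencing, the sketch below orders a set of subgoals with Python's standard `graphlib` module and tracks which ones are complete. The subgoal names and the `plan_order` and `run_plan` helpers are hypothetical examples of my own, not something taken from Agent S.

```python
from graphlib import TopologicalSorter

# Hypothetical subgoals for "email a chart from a spreadsheet".
# Each key maps to the set of subgoals that must finish before it.
dependencies = {
    "open_spreadsheet": set(),
    "create_chart": {"open_spreadsheet"},
    "export_chart": {"create_chart"},
    "open_mail_client": set(),
    "attach_and_send": {"export_chart", "open_mail_client"},
}


def plan_order(deps: dict) -> list:
    """Return one execution order that respects every dependency."""
    return list(TopologicalSorter(deps).static_order())


def run_plan(deps: dict) -> list:
    """Walk the plan in order and track progress subgoal by subgoal."""
    completed = []
    for subgoal in plan_order(deps):
        missing = deps[subgoal] - set(completed)
        if missing:
            raise RuntimeError(f"{subgoal} is blocked by {sorted(missing)}")
        # A real agent would execute the subgoal here and verify the result.
        completed.append(subgoal)
    return completed


progress = run_plan(dependencies)
print(progress)
```

The exact order of independent subgoals can vary, but a subgoal never runs before the subgoals it depends on.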

Navigating Dynamic, Non-Uniform Interfaces

Agents must process large volumes of visual and textual data while operating in a vast action space.

They need to distinguish between relevant and irrelevant elements and respond accurately to visual feedback.

GUI agents must interpret graphical cues correctly and adapt to dynamic interface changes.

Experience-Augmented Hierarchical Planning

To address the challenge of solving long-horizon, complex desktop tasks, Agent S introduces Experience-Augmented Hierarchical Planning.

This method enhances the agent’s ability to leverage domain knowledge and plan more effectively.

It improves the agent’s performance on tasks that span multiple steps and involve intermediate goals.

Types of AI Agents

MLLM Agents

Multimodal Large Language Models (MLLMs) serve as the core reasoning framework for MLLM Agents, enabling them to process both language and visual information.

These agents combine various components such as memory, structured planning, tool usage, and the ability to act in external environments.

MLLM Agents are applied in domains like simulation environments, video games, and scientific research. They are also increasingly used in fields like Software Engineering, where Agent-Computer Interfaces (ACI) enhance their ability to understand and act efficiently within complex systems.

This area of Agent-Computer Interfaces fascinates me the most.

GUI Agents

GUI Agents execute natural language instructions across both web and operating system environments.

Initially focused on web navigation tasks, their scope has expanded to operating systems, enabling them to handle OS-level tasks in benchmarks like OSWorld and WindowsAgentArena.

These agents are designed to navigate and control dynamic graphical interfaces, using methodologies such as behavioural cloning, in-context learning, and reinforcement learning.

Advanced features such as experience-augmented hierarchical planning enhance their performance in managing complex desktop tasks.

Retrieval-Augmented Generation (RAG) for AI Agents

RAG improves the reliability of MLLM agents by integrating external knowledge to enrich the input data, resulting in more accurate outputs.

MLLM agents benefit from retrieving task exemplars, state-aware guidelines, and historical experiences.

In the Agent S framework, experience augmentation takes three forms:

First, hierarchical planning uses both full-task and subtask experience. Second, full-task summaries serve as textual rewards for subtasks. Third, subtask experience is evaluated and stored for future reference. Together, these ensure that the agent can effectively learn and adapt over time.
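The idea of storing experience and retrieving it for a new task can be sketched in a few lines of Python. This is only an illustration under my own assumptions: the `Experience` and `ExperienceMemory` classes are hypothetical, and word overlap (Jaccard similarity) stands in for whatever retrieval method a real framework would use, most likely embeddings.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Experience:
    task: str       # natural-language description of the task or subtask
    summary: str    # textual summary of what was done
    success: bool   # outcome of the evaluation step


def similarity(a: str, b: str) -> float:
    """Jaccard overlap of word sets; an assumed stand-in for embedding similarity."""
    words_a, words_b = set(a.lower().split()), set(b.lower().split())
    if not words_a or not words_b:
        return 0.0
    return len(words_a & words_b) / len(words_a | words_b)


class ExperienceMemory:
    """Hypothetical store for evaluated task experience."""

    def __init__(self) -> None:
        self._items: List[Experience] = []

    def store(self, experience: Experience) -> None:
        self._items.append(experience)

    def retrieve(self, query: str) -> Optional[Experience]:
        """Return the stored experience whose task best matches the query."""
        if not self._items:
            return None
        return max(self._items, key=lambda e: similarity(query, e.task))


memory = ExperienceMemory()
memory.store(Experience(
    task="rename a file in the file manager",
    summary="Right-click the file, choose Rename, type the new name.",
    success=True,
))
memory.store(Experience(
    task="change the desktop wallpaper",
    summary="Open Settings, go to Background, pick an image.",
    success=True,
))

best = memory.retrieve("rename a folder in the file manager")
print(best.summary)
```

The retrieved summary would then be added to the planner's context, which is the sense in which past experience augments the next plan.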

Agent S Framework

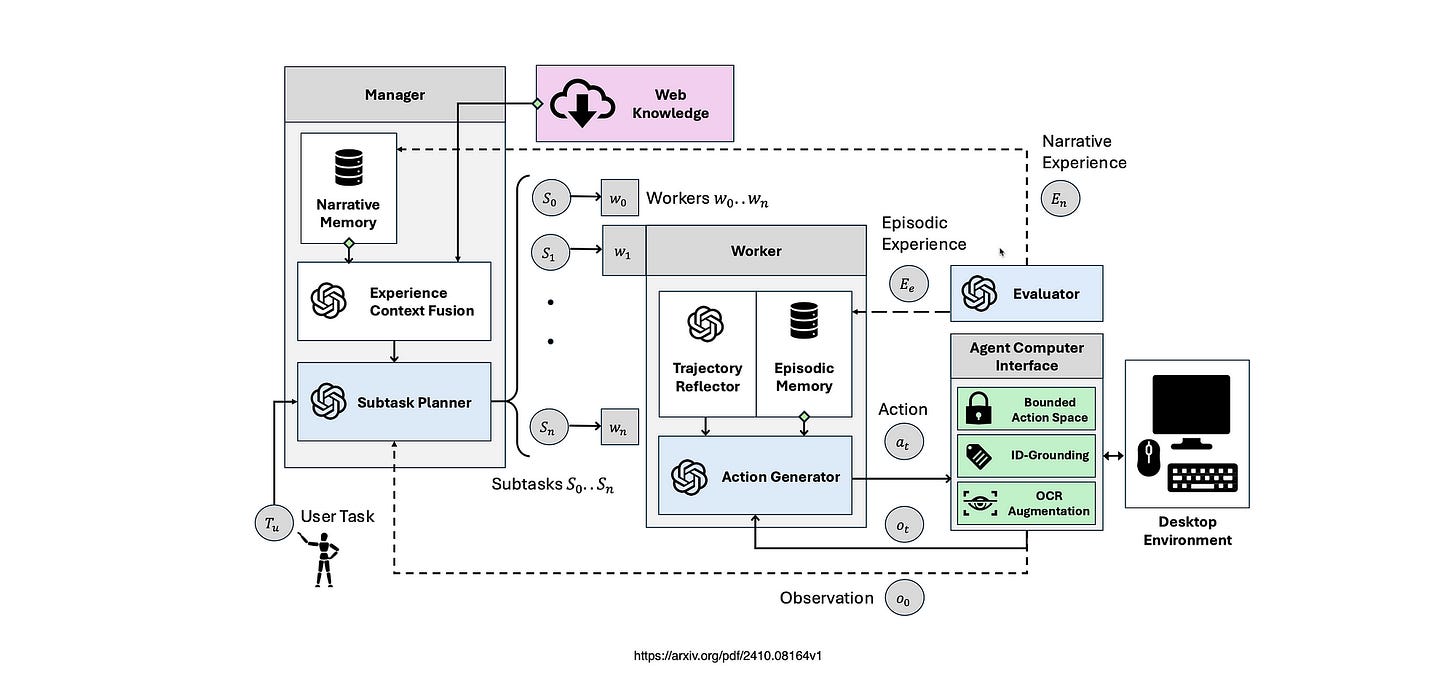

The image above shows the Agent S framework. Given a task, the agent starts with an initial environment observation. The Agent S Manager then performs experience-augmented planning, leveraging web knowledge and narrative memory to create sub-tasks.

In Conclusion

The Agent-Computer Interface (ACI) bridges a crucial gap between traditional desktop environments designed for human users and software agents, enabling more effective interaction at the GUI level.

Unlike human users, who can intuitively respond to visual changes, AI agents like MLLMs face challenges in interpreting fine-grained feedback and executing precise actions due to their slower, discrete operation cycles and lack of internal coordinate systems.

The proposed ACI addresses this by integrating a dual-input strategy, leveraging both image and accessibility tree inputs for improved perception and grounding.

Additionally, it ensures a constrained action space to maintain safety and precision, allowing agents to perform individual, discrete actions while receiving immediate feedback.
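A minimal sketch of what a dual-input observation and a constrained action space could look like is shown below. The class names, the three allowed action kinds and the element IDs are my own assumptions for illustration, not the actual Agent S interface.

```python
from dataclasses import dataclass
from typing import Tuple

# Assumed set of primitive actions; a real ACI defines its own.
ALLOWED_ACTIONS = {"click", "type", "scroll"}


@dataclass(frozen=True)
class Element:
    element_id: int
    role: str
    name: str


@dataclass(frozen=True)
class Observation:
    screenshot: bytes               # image input, used for visual perception
    elements: Tuple[Element, ...]   # flattened accessibility tree, used for grounding


@dataclass(frozen=True)
class Action:
    kind: str
    element_id: int
    text: str = ""


def validate(action: Action, observation: Observation) -> None:
    """Reject anything outside the action space or not grounded in the tree."""
    if action.kind not in ALLOWED_ACTIONS:
        raise ValueError(f"action {action.kind!r} is outside the action space")
    known_ids = {e.element_id for e in observation.elements}
    if action.element_id not in known_ids:
        raise ValueError(f"element {action.element_id} is not in the accessibility tree")


def step(action: Action, observation: Observation) -> str:
    """Validate and 'execute' exactly one action, returning immediate feedback."""
    validate(action, observation)
    target = next(e for e in observation.elements if e.element_id == action.element_id)
    return f"{action.kind} on {target.role} '{target.name}'"


obs = Observation(
    screenshot=b"",  # placeholder; a real observation would carry image bytes
    elements=(Element(1, "button", "Save"), Element(2, "textbox", "Filename")),
)
print(step(Action("click", 1), obs))
```

Referring to elements by ID rather than by raw pixel coordinates is one way to work around the lack of an internal coordinate system, and executing one validated action per step keeps the feedback loop tight.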

This system enhances the agent’s ability to interact with complex, dynamic interfaces while maintaining control and reliability in task execution.

Chief Evangelist @ Kore.ai | I’m passionate about exploring the intersection of AI and language. From Language Models, AI Agents to Agentic Applications, Development Frameworks & Data-Centric Productivity Tools, I share insights and ideas on how these technologies are shaping the future.