The Agentic Web

A while back I read that Andrej Karpathy uses LLMs to read and digest content…

…he spoke about a future where content will be written primarily for LLMs, not directly for humans.

The LLM would then digest it perfectly and present it to the human in the most effective way possible.

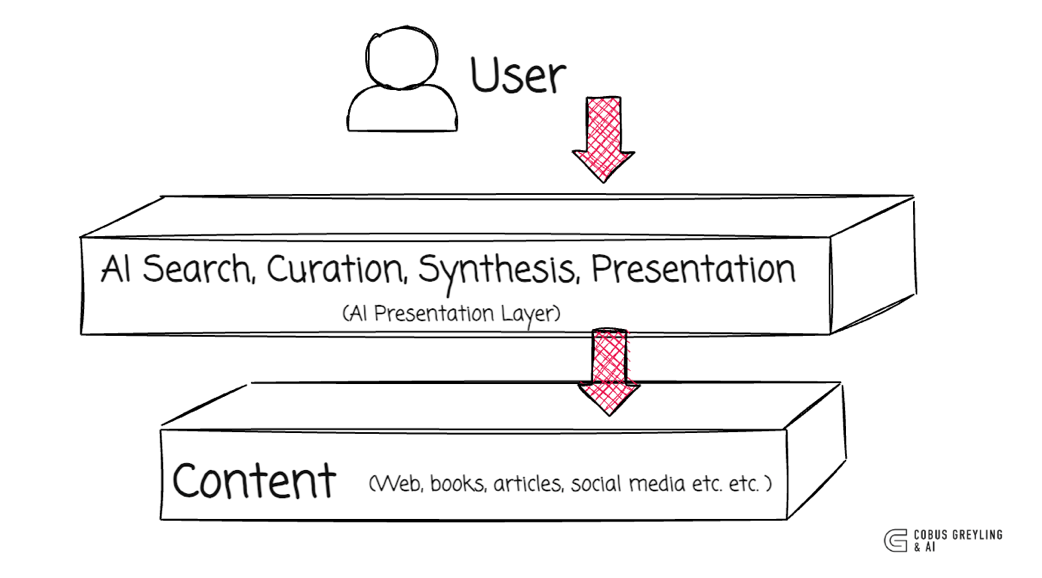

So think of a world (and this is already happening) where AI becomes a layer between us and content, whether that content is the web, books or blogs…

And we interact with an AI UI to search, synthesise and present content in the format in which we want to consume it.

That idea stuck with me.

What is happening in the world is the introduction of AI as a mediation layer between us (the human user) and our content. This AI mediation layer performs tasks like search, information curation, data synthesis, formatting and more.

The mediation layer can be divided into two segments: voluntary and involuntary.

We are voluntarily inserting AI as an AI-based UI concierge between ourselves and the world.

We open Grok or Claude to ask questions, summarise articles or write emails, because it feels like augmentation, not amputation.

Every time we choose the chatbot over direct search, the book, or the raw feed, we train our brains to prefer pre-digested, frictionless reality.

The concierge has become the default doorway, and most of us have stopped noticing that we no longer walk through the open garden; we ask the concierge to describe it and trust the description.

A second, involuntary mediation layer has already been welded into every major platform we touch.

Instagram, TikTok, YouTube, Spotify, and even X no longer present content; they present a real-time psychic simulation of what they believe you want, tuned by models that understand semantics and micro-dopamine.

In most cases, you cannot opt out without abandoning the app entirely.

These two layers are now converging.

Today we already live halfway there…

Grok, Perplexity, Claude, Gemini — they all sit as a new presentation layer between us and the raw web.

Previously, data was synthesised at a higher level, and you had to find or search for the form of synthesis you preferred: a white paper, a tweet, a post, long-form content, audio, video and so on.

And platforms specialised in a specific type of synthesis to attract users interested in that medium. Think of Substack, YouTube, Medium, Twitter (X) and others.

Now we are getting used to synthesised information, formatted and presented for an audience of one: for me, in real time.

Back to the web…

While the web has evolved rich standards for human interaction, it lacks equivalent, machine-native affordances for agents.

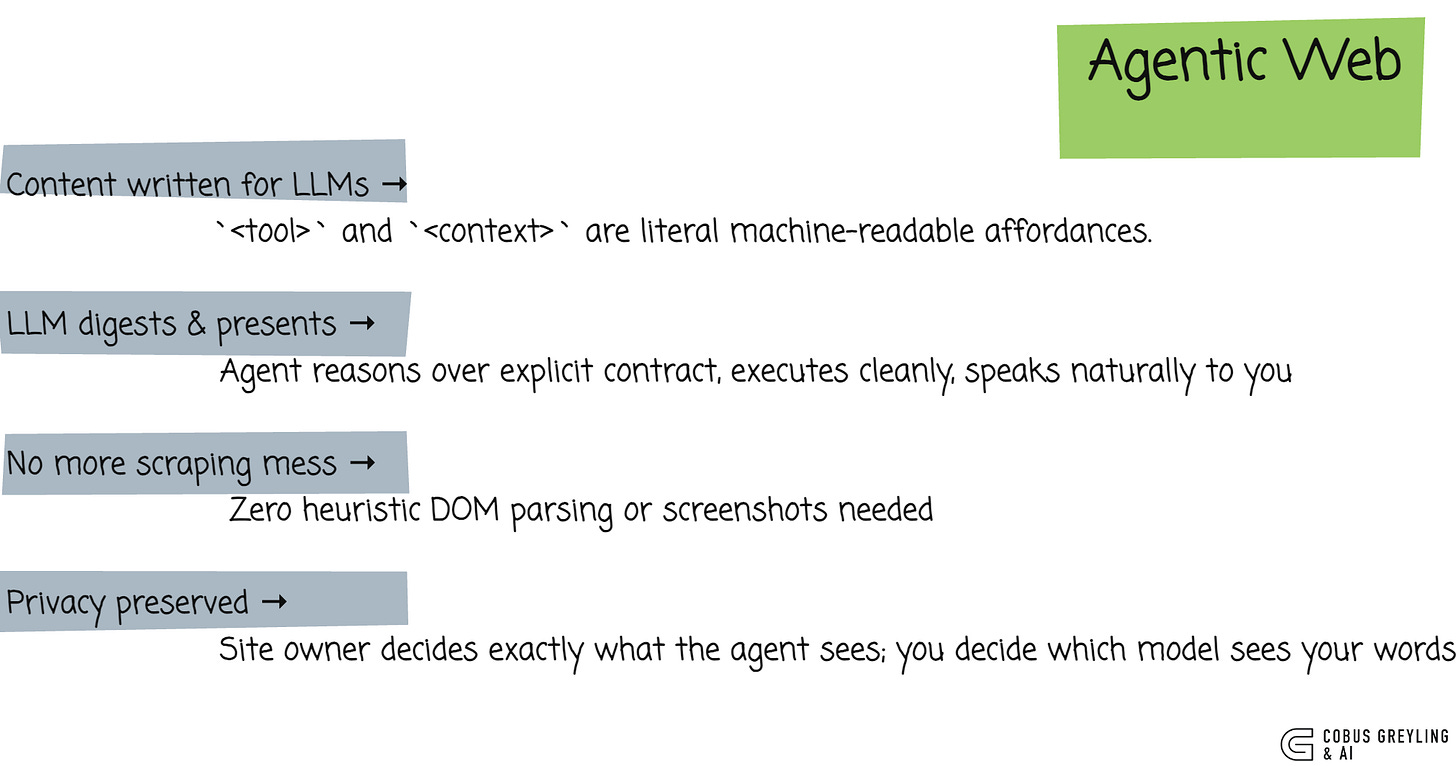

We now have AI agents that crawl, scrape, summarise, reformat and answer. But this middle layer is still hacking its way through interfaces that were built exclusively for humans to consume.

It is brittle, slow and sometimes creepy, and the website owner has zero control over what the agent does on their site.

The paper “Building the Web for Agents: A Declarative Framework for Agent-Web Interaction” is a serious attempt to fix exactly this mismatch. It takes Karpathy’s provocation literally and builds the plumbing for a web that is authored for agents first, while still working perfectly for humans.

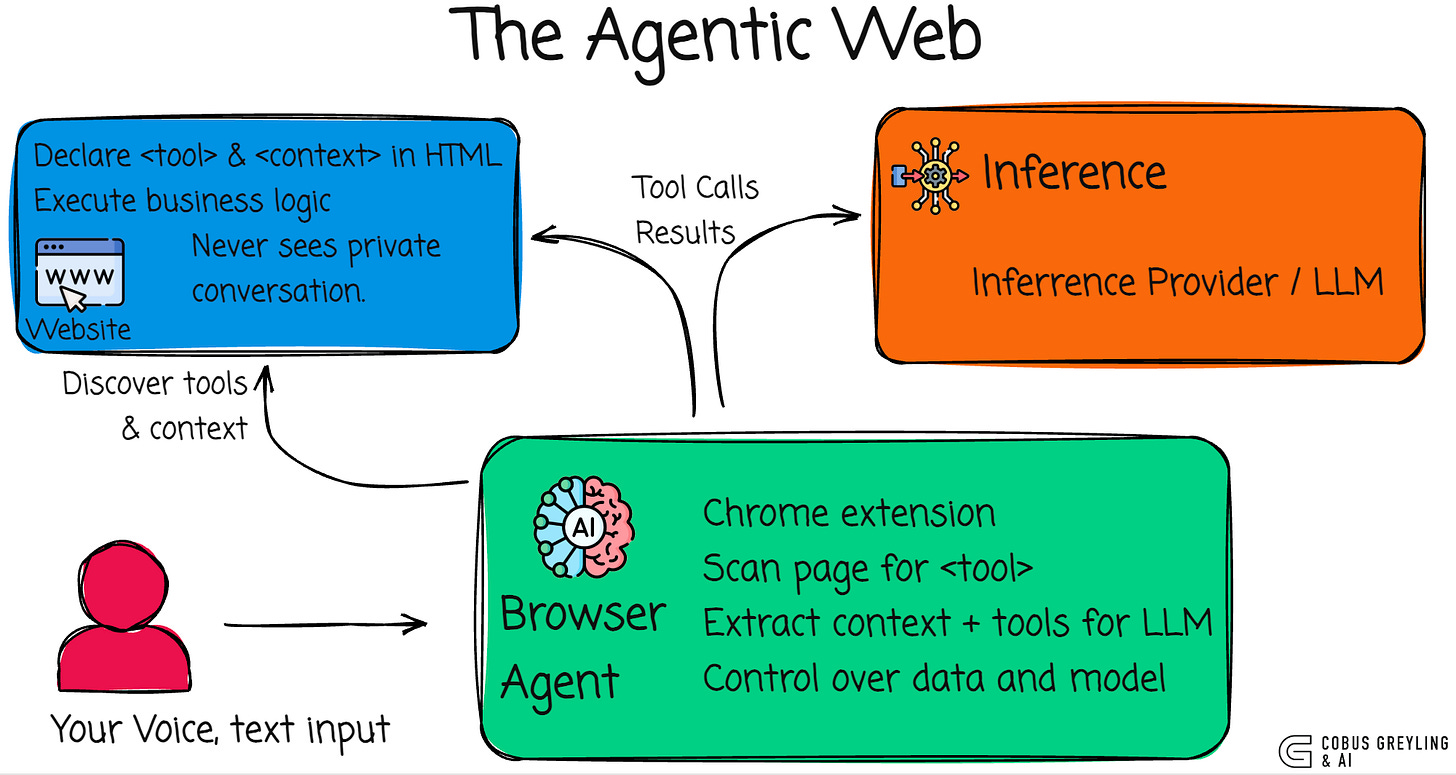

Instead of forcing agents to reverse-engineer buttons and forms from HTML meant for people, the framework lets website developers explicitly declare:

- What actions (tools) an agent is allowed to perform

- What state (context) the agent is allowed to see

They do it with two simple new HTML-like tags: `<tool>` and `<context>`.
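To make the two tags more concrete, here is a minimal TypeScript sketch of what such declarations might look like on a page and how an agent could turn them into an explicit menu of actions and state. The attribute names (`name`, `description`, `type`, `required`), the nested `<prop>` element and the `extractTools` / `extractContext` helpers are my own illustrative assumptions, not the exact syntax defined in the paper. A real agent would also use a proper DOM parser rather than regular expressions.

```typescript
// Hypothetical markup: the attribute names and the nested <prop> element are
// illustrative assumptions, not the exact syntax from the VOIX paper.
const pageMarkup = `
<context name="cart">{"items": 2, "currency": "EUR"}</context>
<tool name="add_to_cart" description="Add a product to the shopping cart">
  <prop name="productId" type="string" required></prop>
  <prop name="quantity" type="number"></prop>
</tool>
<tool name="checkout" description="Start the checkout flow"></tool>
`;

interface ToolParam {
  name: string;
  type: string;
  required: boolean;
}

interface DeclaredTool {
  name: string;
  description: string;
  params: ToolParam[];
}

// Read one attribute value (e.g. name="...") from the inside of an opening tag.
function attr(tagAttrs: string, key: string): string | undefined {
  const match = new RegExp(`\\b${key}="([^"]*)"`).exec(tagAttrs);
  return match ? match[1] : undefined;
}

// Collect every <tool> the page declares, with its <prop> parameters.
// Regexes keep the sketch dependency-free; a real agent would walk the DOM.
function extractTools(html: string): DeclaredTool[] {
  const tools: DeclaredTool[] = [];
  const toolRe = /<tool\b([^>]*)>([\s\S]*?)<\/tool>/g;
  let toolMatch: RegExpExecArray | null;
  while ((toolMatch = toolRe.exec(html)) !== null) {
    const params: ToolParam[] = [];
    const propRe = /<prop\b([^>]*)>/g;
    let propMatch: RegExpExecArray | null;
    while ((propMatch = propRe.exec(toolMatch[2])) !== null) {
      params.push({
        name: attr(propMatch[1], "name") ?? "",
        type: attr(propMatch[1], "type") ?? "string",
        required: /\brequired\b/.test(propMatch[1]),
      });
    }
    tools.push({
      name: attr(toolMatch[1], "name") ?? "",
      description: attr(toolMatch[1], "description") ?? "",
      params,
    });
  }
  return tools;
}

// Collect the state the page chooses to expose, keyed by context name.
function extractContext(html: string): Record<string, string> {
  const context: Record<string, string> = {};
  const contextRe = /<context\b([^>]*)>([\s\S]*?)<\/context>/g;
  let match: RegExpExecArray | null;
  while ((match = contextRe.exec(html)) !== null) {
    context[attr(match[1], "name") ?? "unnamed"] = match[2].trim();
  }
  return context;
}

// The agent now has an explicit list of actions and state, with no scraping.
const tools = extractTools(pageMarkup);
const context = extractContext(pageMarkup);
console.log(tools.map((t) => `${t.name}(${t.params.map((p) => p.name).join(", ")})`));
console.log(context);
```

The point of the sketch is the shift in who does the work: the site states what is allowed and what is visible, and the agent simply reads it, instead of guessing from buttons and forms.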

That’s it. No new backend, no extra packages, no sending the whole page to OpenAI.

This approach shifts control to the website developer while preserving user privacy by disconnecting the conversational interactions from the website.
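The privacy point is easier to see in code. The TypeScript sketch below is built entirely on hypothetical names (`ToolCall`, `ToolHandler`, `LocalAgent`, `siteHandlers`). It assumes the conversation, and in practice the model call, stay on the user's side, and that only a structured tool call (a tool name plus arguments) is handed to the handler the website registered. It illustrates the separation described above, not the paper's actual event API.

```typescript
// Hypothetical shapes: the VOIX paper's actual event and handler API may differ.
interface ToolCall {
  name: string;
  args: Record<string, unknown>;
}

type ToolHandler = (args: Record<string, unknown>) => string;

// Website side: handlers for the tools the developer declared.
// We record what the site receives to show it only sees structured arguments.
const receivedBySite: Array<Record<string, unknown>> = [];
const siteHandlers: Record<string, ToolHandler> = {
  add_to_cart: (args) => {
    receivedBySite.push(args);
    return `added ${String(args.quantity ?? 1)} x ${String(args.productId)}`;
  },
};

// User side: the agent keeps the transcript and (in practice) the model choice.
class LocalAgent {
  private transcript: string[] = [];
  private handlers: Record<string, ToolHandler>;

  constructor(handlers: Record<string, ToolHandler>) {
    this.handlers = handlers;
  }

  // In a real agent, an LLM would turn the user's message into a tool call;
  // here the call is passed in directly to keep the sketch self-contained.
  handle(userMessage: string, call: ToolCall): string {
    this.transcript.push(userMessage);
    const handler = this.handlers[call.name];
    if (handler === undefined) {
      return `tool "${call.name}" is not declared by this site`;
    }
    // Only the tool name and its arguments cross over to the website.
    return handler(call.args);
  }

  get historyLength(): number {
    return this.transcript.length;
  }
}

const agent = new LocalAgent(siteHandlers);
const result = agent.handle(
  "Please add two of the blue mugs, they are a birthday present for my sister",
  { name: "add_to_cart", args: { productId: "mug-blue", quantity: 2 } },
);
console.log(result); // added 2 x mug-blue
```

In this arrangement the site decides what can be done (its handlers), the user's agent decides when and why, and the user's own words never leave the agent.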

The tools and context defined on the website side are written for LLMs, so I like the way this aligns with Karpathy’s vision.

Have we been adding “AI features” to websites the wrong way round?

VOIX, the framework proposed in the paper, assigns responsibility to where it belongs:

- Website owners declare the official machine interface (like they already declare `<form>` for humans)

- Users keep their conversation private and choose their model

- Agents finally get clean, reliable, fast affordances

Finally, I think there is an open question: should the web be rebuilt for agents, or should agents learn to live in the web as it is?

Is this study a pragmatic middle path, where the web stays 99% backward-compatible for humans forever, while the 1% of sites that want to be agent-native can opt in?

So I guess the web will be gradually rewritten for agents, but only where it makes sense, and only by adding a voluntary declarative layer on top of what already exists.

Chief Evangelist @ Kore.ai | I’m passionate about exploring the intersection of AI and language. Language Models, AI Agents, Agentic Apps, Dev Frameworks & Data-Driven Tools shaping tomorrow.

Building the Web for Agents: A Declarative Framework for Agent-Web Interaction

The increasing deployment of autonomous AI agents on the web is hampered by a fundamental misalignment: agents must… (arxiv.org)

COBUS GREYLING

Where AI Meets Language | Language Models, AI Agents, Agentic Applications, Development Frameworks & Data-Centric… (www.cobusgreyling.com)