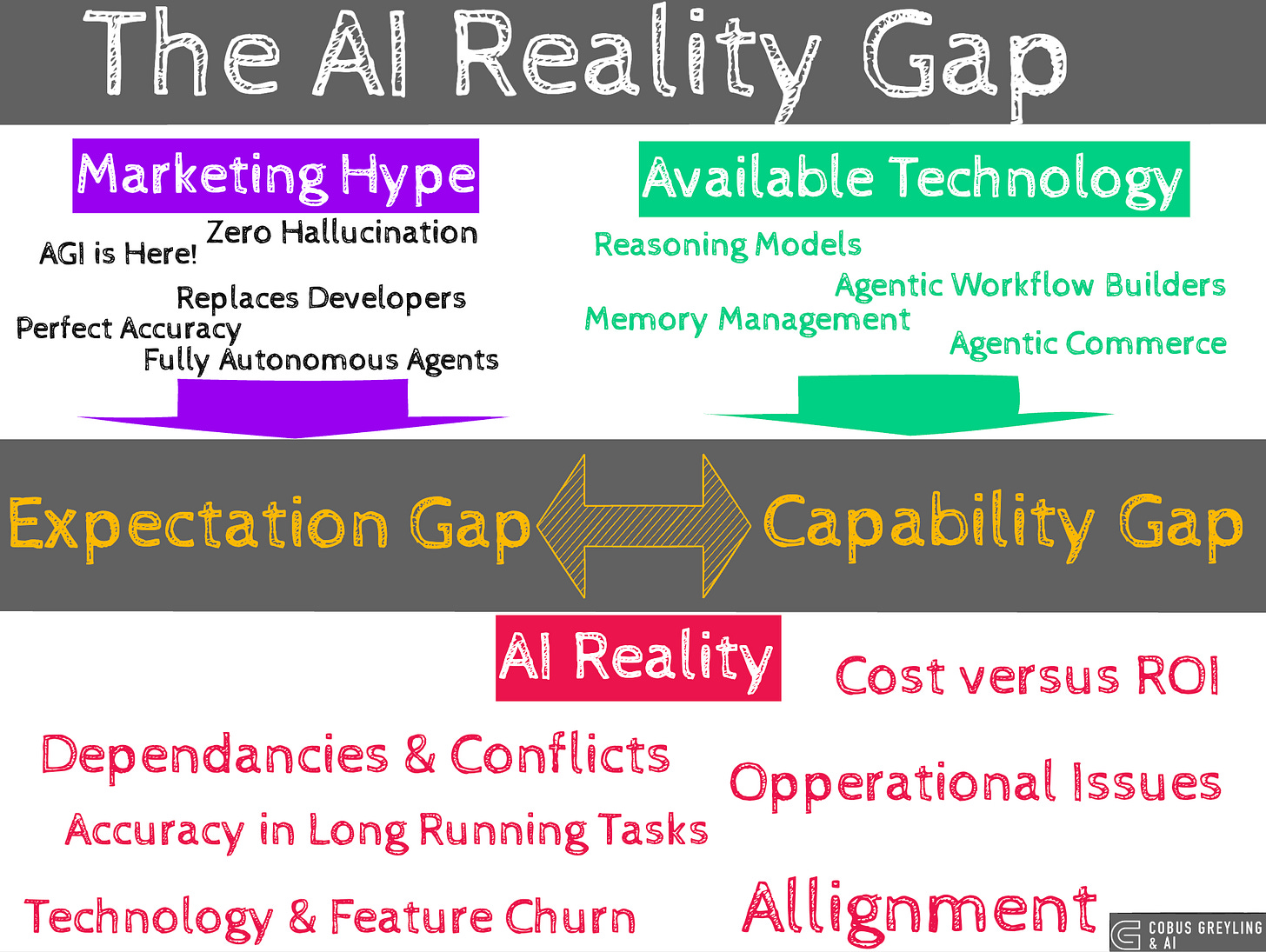

The AI Reality Gap

The AI hype machine is creating a chasm between glossy marketing promises and what’s actually deliverable today.

It’s like we’re in a cycle where buzzwords like “agentic AI” or “transformative productivity” set sky-high expectations, but ground-level implementation reveals limitations in accuracy, security and real-world applicability.

A number of recent studies draw on developer surveys, code repository analysis and empirical experiments. They serve as those “canaries in the coal mine” the Yale study speaks about, highlighting the disconnect and offering clues on bridging it.

Let me try and break down some key ones from 2025 and earlier, mainly focusing on software development since that’s where much of the data clusters, with research based on Stack Overflow, GitHub and others.

Stack Overflow’s 2025 Developer Survey

Adoption Up, Enthusiasm Down

This is an annual pulse-check of over 49,000 developers worldwide.

It shows that AI tools are ubiquitous but falling short of the hype.

Usage is at 84% (using or planning to use), with 51% of pros integrating them daily, but positive sentiment has dipped to 60% from over 70% in prior years.

Productivity wins seem real but narrow: 52% report overall gains, mainly in task speed.

70% say it cuts time on specifics.

But only 17% see better team collaboration.

45% find debugging AI output more time-sucking than helpful.

Trust

46% distrust accuracy.

75% wouldn’t rely on AI even if it could handle most coding.

81% worry about security/privacy.

Experienced devs (10+ years) are the most skeptical, with just 2.6% highly trusting outputs.

The Gap

Hype pushes AI as a full replacement, but reality confines it to simple aids like searching, autocomplete or learning.

Developers resist using it for high-stakes stuff like deployment, where 76% say no.

Learners show even lower sentiment (53% favourable) than pros (61%), suggesting the hype wears thin faster for newcomers.

GitHub’s Productivity Insights

Speed for Routines, But Sustained Wins Require More

GitHub’s data (from 2024 but analysed in 2025 contexts) paints a similar picture…

AI assistants can slash routine task times by 55%, but only with training and support.

Without that, adoption flops — 87% experiment, but just 43% use daily in production, and forced rollouts lead to 68% abandonment in six months.

Initial hype-driven gains often plateau after 18 months if teams chase quick fixes over building long-term skills.

This echoes repository analyses showing AI excels in repetitive code but struggles with complex, context-heavy work, widening the expectation gap when marketed as a “developer multiplier.”

Stanford Security Study

AI help often means more vulnerabilities.

A 2023 Stanford experiment (still cited heavily in 2025 discussions) tested devs on security tasks across languages.

Those with AI assistants wrote significantly less secure code, with more vulnerabilities in 4 out of 5 scenarios, due to over-reliance and subtle errors in “almost right” outputs.

A follow-up 2025 analysis of 100+ AI tools across 80 scenarios found 48% of generated code had security holes.

This “security problem” underscores hype’s blind spot: AI is pitched as efficient, but it amplifies risks without human oversight, especially in real-world apps.

McKinsey’s 2025 Global AI Survey notes AI driving value in targeted areas like optimisation.

But only 5% of firms see rapid revenue acceleration from pilots; most stall due to integration hurdles.

MIT’s 2025 report is blunter: 95% of generative AI projects fail to deliver, blaming overhyped expectations vs. tech readiness.

Closing the Gap

These canaries suggest the fix isn’t more hype but grounded strategies.

Training and change management come first: GitHub data shows sustained 55% speedups with support; without it, abandonment skyrockets.

Targeted use comes next: stick to strengths like routine tasks or learning, and avoid overreaching into complex or security-critical areas without verification.

And lastly, I’m a big proponent of hybrid approaches, where you have a human and AI collaboration spectrum and can slide autonomy up or down.

Different tasks demand different levels of autonomy…software development aids and search/deep research are still the breakout use-cases.
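To make the autonomy slider a bit more concrete, here is a minimal Python sketch of how it could look. Everything in it is an assumption for illustration: the `Autonomy` levels, the task names in `DEFAULT_POLICY` and the helper functions are hypothetical and do not come from any of the studies or from a specific tool. The idea is simply that routine tasks get more autonomy, high-stakes tasks get less, and a cap lets a team slide everything down at once.

```python
from enum import IntEnum


class Autonomy(IntEnum):
    """Hypothetical autonomy levels, lowest to highest."""
    SUGGEST = 1  # AI proposes, a human writes the change
    DRAFT = 2    # AI writes, a human reviews before anything is applied
    ACT = 3      # AI applies the change, a human audits afterwards


# Illustrative policy only: the task names and levels are assumptions,
# loosely following the split between routine and high-stakes work.
DEFAULT_POLICY = {
    "search": Autonomy.ACT,
    "autocomplete": Autonomy.ACT,
    "routine_refactor": Autonomy.DRAFT,
    "security_critical": Autonomy.SUGGEST,
    "deployment": Autonomy.SUGGEST,
}


def autonomy_for(task, policy=DEFAULT_POLICY, cap=Autonomy.ACT):
    """Return the autonomy level for a task, never exceeding the cap.

    Unknown tasks fall back to the most conservative level.
    """
    level = policy.get(task, Autonomy.SUGGEST)
    return min(level, cap)


def needs_human_review(task, policy=DEFAULT_POLICY, cap=Autonomy.ACT):
    """True when a human must approve before the output is applied."""
    return autonomy_for(task, policy, cap) < Autonomy.ACT
```

Calling `autonomy_for("search", cap=Autonomy.DRAFT)` slides the whole spectrum down one notch for a more cautious team, while unknown tasks default to suggestion-only.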

If I take it from a personal perspective, I love building Python notebooks in Colab. It really boosts my understanding of technology.

The built-in Gemini assistant is such a good help in debugging code, especially if there is an existing and solid contextual reference.

This is confirmed in the research, with 75% preferring human-AI combos, along with tools like open-source frameworks that can be customised.

Like in the early days of the web, attribution was a problem, and AI attribution will remain a challenge for a while.

As McKinsey and MIT emphasise, focus on measurable ROI pilots, not blanket transformations.

Chief Evangelist @ Kore.ai | I’m passionate about exploring the intersection of AI and language. Language Models, AI Agents, Agentic Apps, Dev Frameworks & Data-Driven Tools shaping tomorrow.

The Stack Overflow survey data here is telling because it shows adoption disconnected from satisfaction. 84% usage but enthusiasm dropping from 70% to 60% suggests people are using these tools out of necessity or FOMO rather than genuine value. The Stanford security finding is particularly worrying since it reveals a hidden cost that doesn't show up in productivity metrics. I've experienced similar issues where AI-generated code looks right but has subtle bugs that take longer to debug than writing from scratch. The 55% productivity boost with proper training is the real headline though; it means implementation strategy matters way more than the tech itself.