The Chain-Of-X Phenomenon In LLM Prompting

In a recent post, I discussed the seemingly emergent abilities of LLMs. A new study argues that Emergent Abilities are not hidden or unpublished model capabilities...

…which are just waiting to be discovered, but rather new approaches to In-Context Learning that are being built.

A number of recent papers have shown that, when instructed to do so, Large Language Models (LLMs) can decompose complex problems into a series of intermediate steps.

This basic principle was first introduced by the concept of Chain-of-Thought (CoT) prompting.

The basic premise of CoT prompting is to mirror human problem-solving methods, where we as humans decompose larger problems into smaller steps.

The LLM then addresses each sub-problem with focused attention, reducing the likelihood of overlooking crucial details or making wrong assumptions.

A chain of thought is a series of intermediate natural language reasoning steps that lead to the final output, and we refer to this approach as chain-of-thought prompting. ~ Source

Breaking tasks down also makes the actions of the LLM less opaque, because the intermediate steps are visible and can be inspected.

Areas where Chain-Of-Thought-like methodology has been introduced are:

Chain-of-Thought Prompting

Multi-Modal Reasoning

Multi-Lingual Scenarios

Knowledge Driven Applications

Chain-of-Explanation

Chain-of-Knowledge

IR Chain-of-Thought

And more…

Least-to-most prompting can be combined with other prompting techniques like chain-of-thought & self-consistency. For some tasks, the two stages in least-to-most prompting can be merged to form a single-pass prompt. — Source
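To make the two stages concrete, here is a minimal Python sketch of least-to-most prompting as I understand it: first ask the model to decompose the problem, then solve the sub-questions in order while feeding earlier answers back in. The `LLM` type, the prompt wording and the `fake_llm` stand-in are all assumptions for illustration; swap in a real model call of your choice.

```python
from typing import Callable

# Hypothetical type: any function that takes a prompt and returns the model's text.
LLM = Callable[[str], str]


def least_to_most(question: str, llm: LLM) -> str:
    """Two-stage sketch: decompose the problem, then solve sub-questions in order."""
    # Stage 1: ask the model for simpler sub-questions, one per line.
    decomposition = llm(
        "Break the following problem into simpler sub-questions, "
        f"one per line, easiest first.\n\nProblem: {question}"
    )
    sub_questions = [line.strip() for line in decomposition.splitlines() if line.strip()]

    # Stage 2: answer each sub-question, appending earlier answers to the context.
    context = f"Problem: {question}\n"
    answer = ""
    for sub_q in sub_questions:
        answer = llm(f"{context}\nQ: {sub_q}\nA:")
        context += f"\nQ: {sub_q}\nA: {answer}\n"

    # The answer to the last (hardest) sub-question is treated as the final answer.
    return answer


def fake_llm(prompt: str) -> str:
    """Canned stand-in for a real model so the sketch runs offline."""
    if prompt.startswith("Break the following"):
        return (
            "How many apples does Anna have?\n"
            "How many apples do Anna and Elsa have together?"
        )
    if prompt.endswith("together?\nA:"):
        return "8"
    return "5"


question = "Elsa has 3 apples. Anna has 2 more apples than Elsa. How many apples do they have together?"
print(least_to_most(question, fake_llm))
```

Merging the two stages into a single-pass prompt, as the quote mentions, would mean asking for the decomposition and the answers in one call instead of looping.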

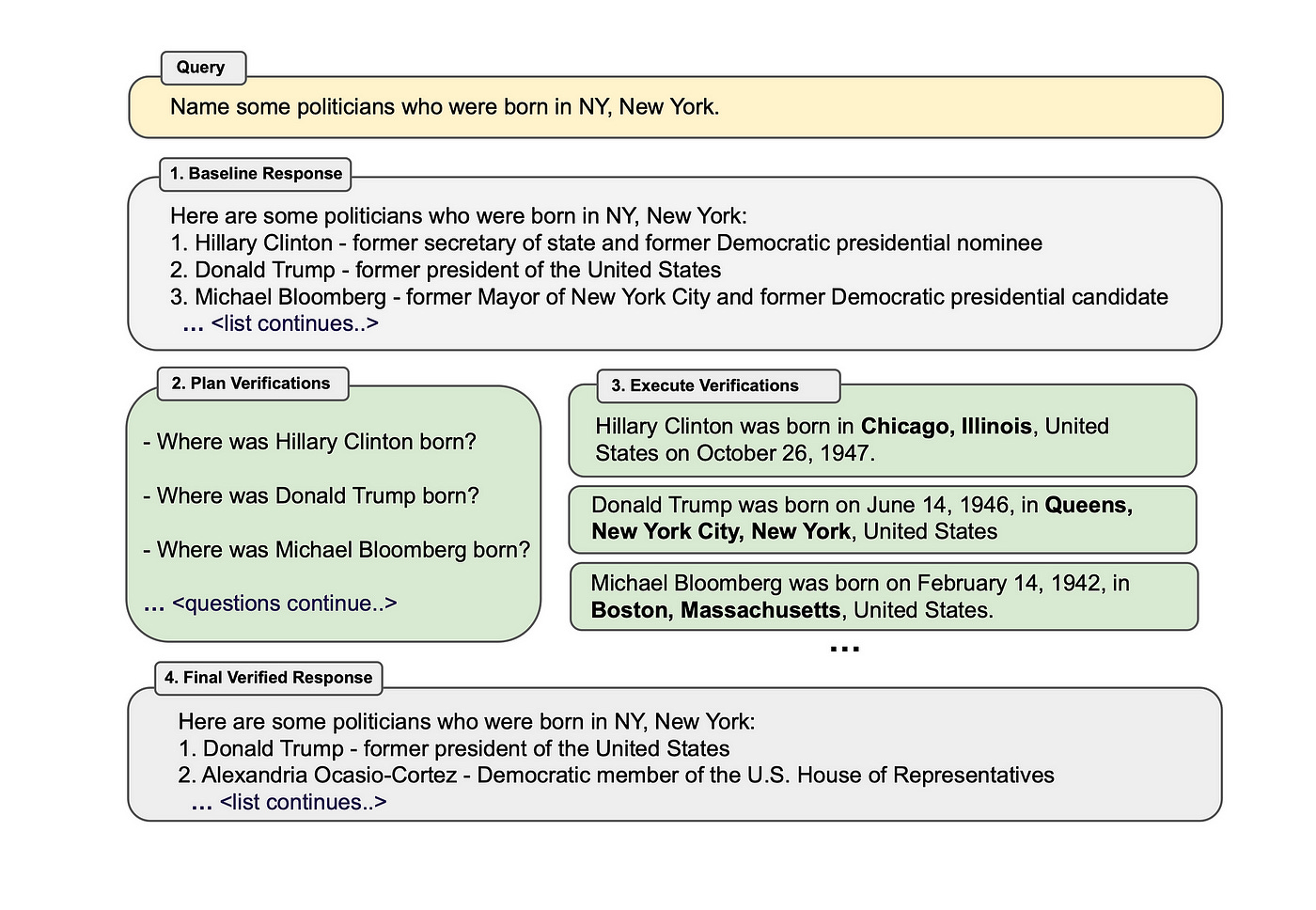

One example is Chain-of-Verification (CoVe). A recent study introduced this approach to address LLM hallucination via a novel implementation of prompt engineering. The approach can be simulated in an LLM playground, and a feature request for a CoVe implementation has already been submitted to LangChain.
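As a rough illustration, the CoVe flow can be simulated with four kinds of prompts: draft an answer, plan verification questions, answer those questions independently, and then revise. The sketch below reflects my reading of the approach, not a reference implementation; the `LLM` type and every prompt string are assumptions.

```python
from typing import Callable

# Hypothetical type: any function that takes a prompt and returns the model's text.
LLM = Callable[[str], str]


def chain_of_verification(query: str, llm: LLM) -> str:
    """Sketch of a draft -> plan -> verify -> revise loop."""
    # 1. Draft a baseline response.
    draft = llm(f"Answer the question.\n\nQuestion: {query}")

    # 2. Plan verification questions that probe the facts in the draft.
    plan = llm(
        "List fact-checking questions, one per line, that would verify this answer.\n\n"
        f"Question: {query}\nAnswer: {draft}"
    )
    questions = [q.strip() for q in plan.splitlines() if q.strip()]

    # 3. Answer each verification question on its own. The draft is deliberately
    #    left out of these prompts so its mistakes are not simply repeated.
    checks = [(q, llm(f"Answer concisely.\n\nQuestion: {q}")) for q in questions]

    # 4. Produce a final answer that is consistent with the verification results.
    evidence = "\n".join(f"- {q} {a}" for q, a in checks)
    return llm(
        f"Original question: {query}\nDraft answer: {draft}\n"
        f"Verification results:\n{evidence}\n\n"
        "Write a final answer consistent with the verification results."
    )
```

Each step is an ordinary prompt, which is why the technique can be tried by hand in a playground before wiring it into a framework.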

Agents

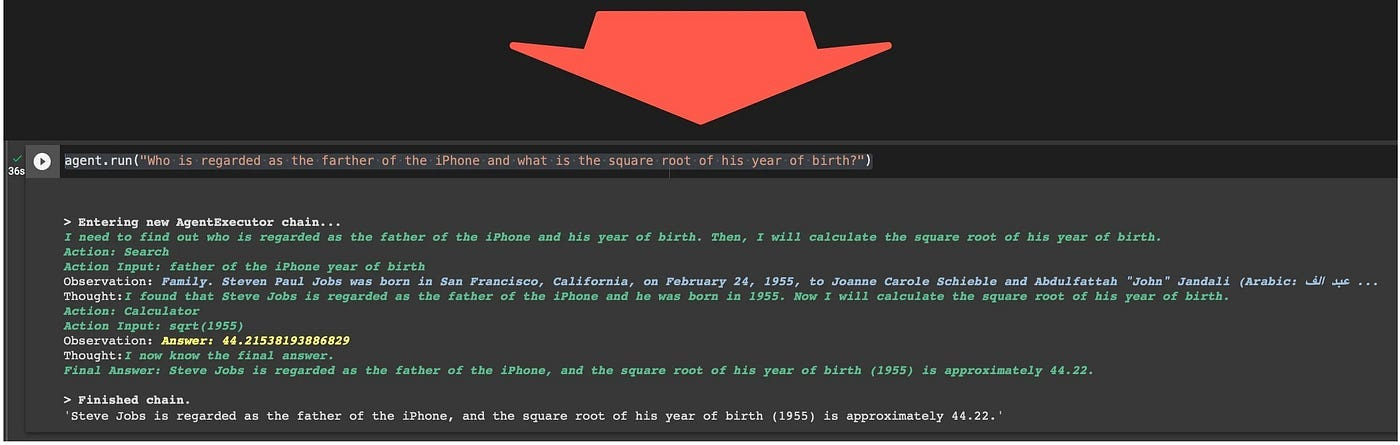

Decomposition also helps autonomous agents assign each sub-task to an applicable tool.
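A toy sketch of that idea in Python: once a question has been decomposed into (tool, input) pairs, each sub-task is dispatched to the matching tool. The two tools, the `TOOLS` registry and the hard-coded plan are hypothetical; in a real agent the plan would come from the LLM and the tools would call real services.

```python
import operator
from typing import Callable, Dict, List, Tuple

# Supported arithmetic operators for the toy calculator.
OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul, "/": operator.truediv}


def calculator(expression: str) -> str:
    """Toy tool: evaluates 'a op b', with tokens separated by spaces."""
    left, op, right = expression.split()
    return str(OPS[op](float(left), float(right)))


def lookup(term: str) -> str:
    """Toy tool: a tiny in-memory fact table standing in for a search API."""
    facts = {"boiling point of water in celsius": "100"}
    return facts.get(term.lower(), "unknown")


# Registry mapping tool names to callables (names are assumptions).
TOOLS: Dict[str, Callable[[str], str]] = {"calculator": calculator, "lookup": lookup}


def run_plan(plan: List[Tuple[str, str]]) -> List[str]:
    """Send each sub-task to the tool the plan names and collect the results."""
    return [TOOLS[tool_name](tool_input) for tool_name, tool_input in plan]


# A plan an agent might produce for: "What is double the boiling point of water?"
plan = [
    ("lookup", "boiling point of water in celsius"),
    ("calculator", "100 * 2"),
]
print(run_plan(plan))
```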

Below is the decomposed chain-of-thought reasoning of the Agent based on the aforementioned question. The decomposition was performed completely autonomously by the Agent.

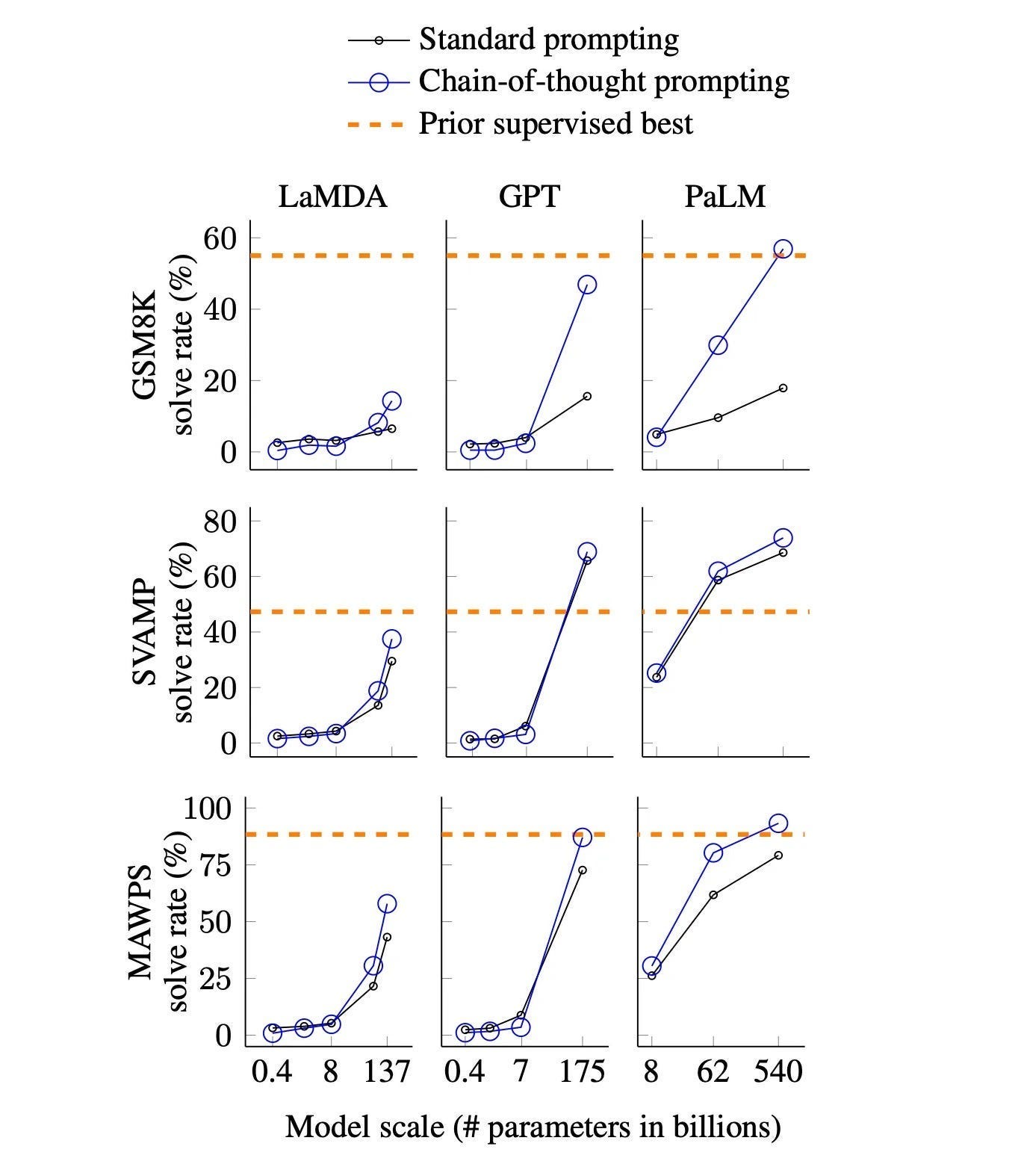

As seen in the image above, chain-of-thought prompting enables large language models to tackle challenging arithmetic, common-sense, and symbolic reasoning tasks.

Rather than fine-tuning a separate language model checkpoint for each new task, one can simply provide the model with several input-output examples to demonstrate the task.
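Here is a small Python sketch of what such a prompt could look like. The same builder produces a standard few-shot prompt when the examples carry only answers, and a chain-of-thought prompt when they also carry the intermediate reasoning. The `Example` class, the `Q:`/`A:` layout and the "The answer is" phrasing are my own assumptions, not a prescribed format.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Example:
    question: str
    answer: str
    rationale: Optional[str] = None  # intermediate reasoning steps, if any


def build_prompt(examples: List[Example], question: str) -> str:
    """Join worked examples and the new question into one few-shot prompt."""
    blocks = []
    for ex in examples:
        if ex.rationale:
            # Chain-of-thought example: reasoning first, then the answer.
            blocks.append(f"Q: {ex.question}\nA: {ex.rationale} The answer is {ex.answer}.")
        else:
            # Standard example: the answer only.
            blocks.append(f"Q: {ex.question}\nA: The answer is {ex.answer}.")
    # Leave the final answer open for the model to complete.
    blocks.append(f"Q: {question}\nA:")
    return "\n\n".join(blocks)


demo = Example(
    question="A shelf holds 4 boxes with 6 pens each. How many pens are there?",
    answer="24",
    rationale="There are 4 boxes. Each box has 6 pens. 4 * 6 = 24.",
)
print(build_prompt([demo], "A crate holds 3 trays with 12 eggs each. How many eggs are there?"))
```

Dropping the `rationale` field from `demo` turns this back into a standard prompt, which makes it easy to compare the two styles on the same questions.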

In Closing

What is particularly helpful about Chain-of-Thought prompting is that, by decomposing the LLM input and the LLM output, it creates a window of insight and interpretation.

This window of decomposition allows for manageable granularity on both input and output, which makes the system easier to tweak.
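One way to use that window in practice is to split a chain-of-thought completion into its individual steps and its final answer, so each step can be logged or checked. The Python sketch below assumes the completion ends with a sentence of the form "The answer is X."; that convention and the function name are assumptions, and real model output will not always follow it.

```python
import re
from typing import List, Optional, Tuple


def split_chain_of_thought(completion: str) -> Tuple[List[str], Optional[str]]:
    """Return (reasoning steps, final answer) from a chain-of-thought completion."""
    text = completion.strip()
    # Look for a closing "The answer is X." sentence at the end of the text.
    match = re.search(r"The answer is\s*(.+?)\.?\s*$", text)
    if match:
        reasoning, answer = text[: match.start()], match.group(1)
    else:
        reasoning, answer = text, None
    # Split the reasoning into sentences; each sentence is treated as one step.
    steps = [s.strip() for s in re.split(r"(?<=[.!?])\s+", reasoning) if s.strip()]
    return steps, answer


completion = "There are 4 boxes. Each box has 6 pens. 4 * 6 = 24. The answer is 24."
steps, answer = split_chain_of_thought(completion)
for number, step in enumerate(steps, start=1):
    print(number, step)
print("Final answer:", answer)
```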

Chain-of-Thought prompting is ideal for contextual reasoning tasks such as math word problems and common-sense reasoning, and it is applicable to any task that we as humans can solve via language.

The image below shows a comparison of percentage solve rate based on standard prompting and chain-of-thought prompting.

Chain-of-thought prompting supplies worked reasoning demonstrations as examples in the prompt.


I’m currently the Chief Evangelist @ Kore AI. I explore & write about all things at the intersection of AI & language; ranging from LLMs, Chatbots, Voicebots, Development Frameworks, Data-Centric latent spaces & more.