The Evolution of AI Agents & Agentic Systems

The evolution of AI Agents has progressed from humble beginnings to systems that combine internal control mechanisms with external contextual grounding and cognitive input.

LLMs have inherent limitations in knowledge and reasoning capabilities. AI Agents with language capabilities address these challenges by linking LLMs to internal memory and external environments, grounding them in existing knowledge or real-world observations.

In the past, systems had to rely on handcrafted rules or reinforcement learning, which made adapting to new environments difficult. Language AI Agents utilise the common-sense understanding embedded in LLMs to tackle novel tasks, reducing reliance on human annotation or trial-and-error learning.

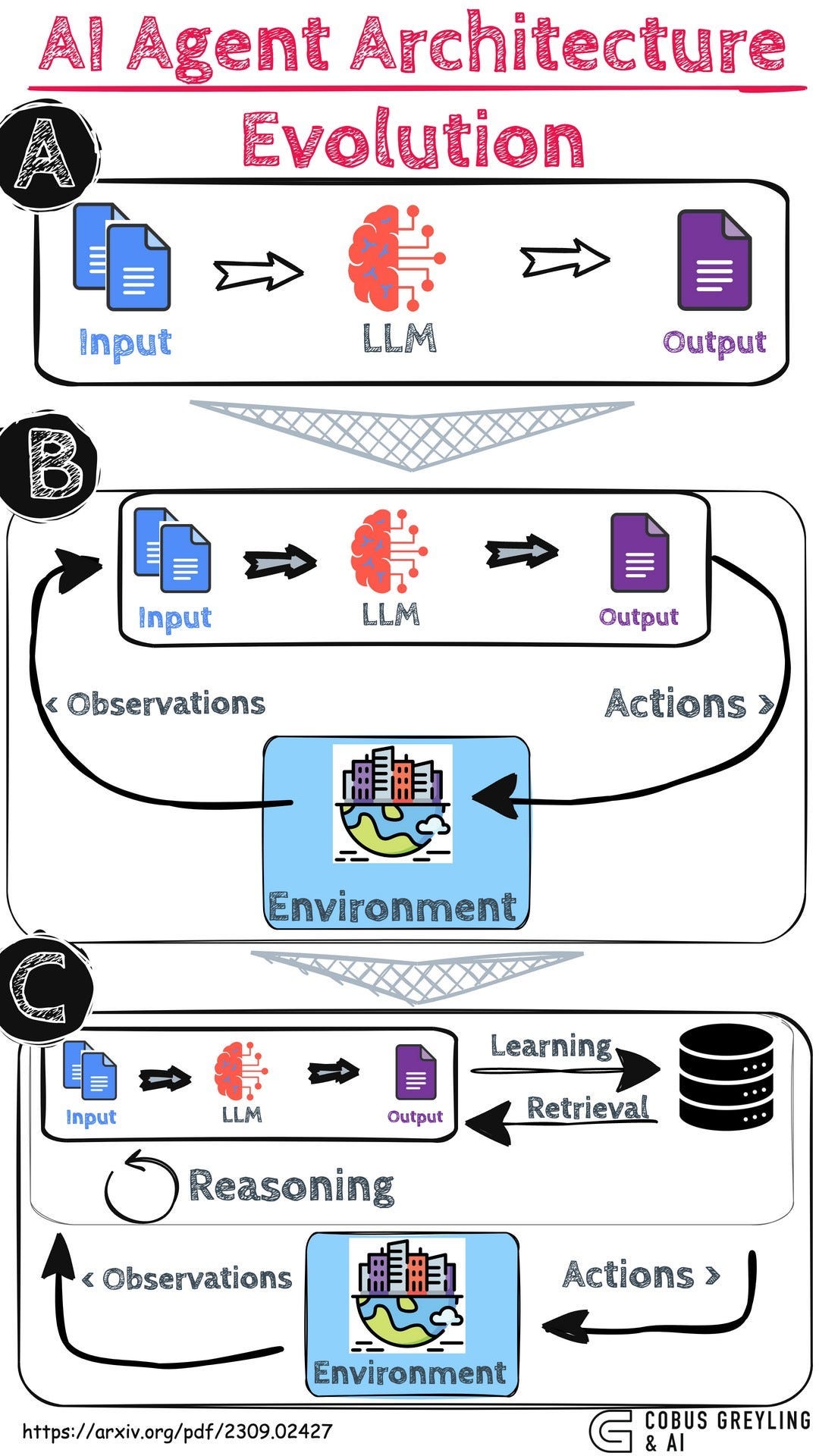

Architectural Evolution

Considering the image above, Large Language Models (LLMs) serve different purposes based on their applications:

A — Text Processing: In natural language processing (NLP), LLMs take text as input and generate text as output.

B — Language Agents: These integrate LLMs into a feedback loop with external environments, transforming observations into text and leveraging the LLM to make decisions or perform actions.

C — Cognitive Language AI Agents: These advanced systems use LLMs not only for interaction but also to manage internal processes such as learning and reasoning.
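The difference between A and B can be made concrete with a minimal sketch. Everything here is an assumption for illustration: `stub_llm` is a rule-based placeholder standing in for a real model, and `CounterEnv` is a toy environment invented for this example. The point is only the shape of the feedback loop: observations are rendered as text, the LLM picks an action, and the environment changes in response.

```python
from typing import Callable

# Hypothetical stand-in for an LLM: any function from prompt text to response text.
LLM = Callable[[str], str]


def text_processing(llm: LLM, text: str) -> str:
    """Mode A: text in, text out, with no environment involved."""
    return llm(text)


class CounterEnv:
    """Toy environment (illustrative only): raise a counter to a target value."""

    def __init__(self, target: int) -> None:
        self.value = 0
        self.target = target

    def observe(self) -> str:
        # Observations are turned into text so the LLM can read them.
        return f"counter={self.value} target={self.target}"

    def step(self, action: str) -> None:
        if action == "increment":
            self.value += 1

    def done(self) -> bool:
        return self.value >= self.target


def run_language_agent(llm: LLM, env: CounterEnv, max_steps: int = 10) -> int:
    """Mode B: observe, let the LLM decide, act, and repeat until done."""
    steps = 0
    while not env.done() and steps < max_steps:
        action = llm(env.observe())
        env.step(action)
        steps += 1
    return steps


def stub_llm(prompt: str) -> str:
    # Rule-based placeholder so the sketch runs without a model.
    return "increment"


env = CounterEnv(target=3)
steps_taken = run_language_agent(stub_llm, env)
```

A cognitive language agent (C) would extend this loop with internal steps, such as reasoning or updating memory, between observing and acting.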

Orchestration

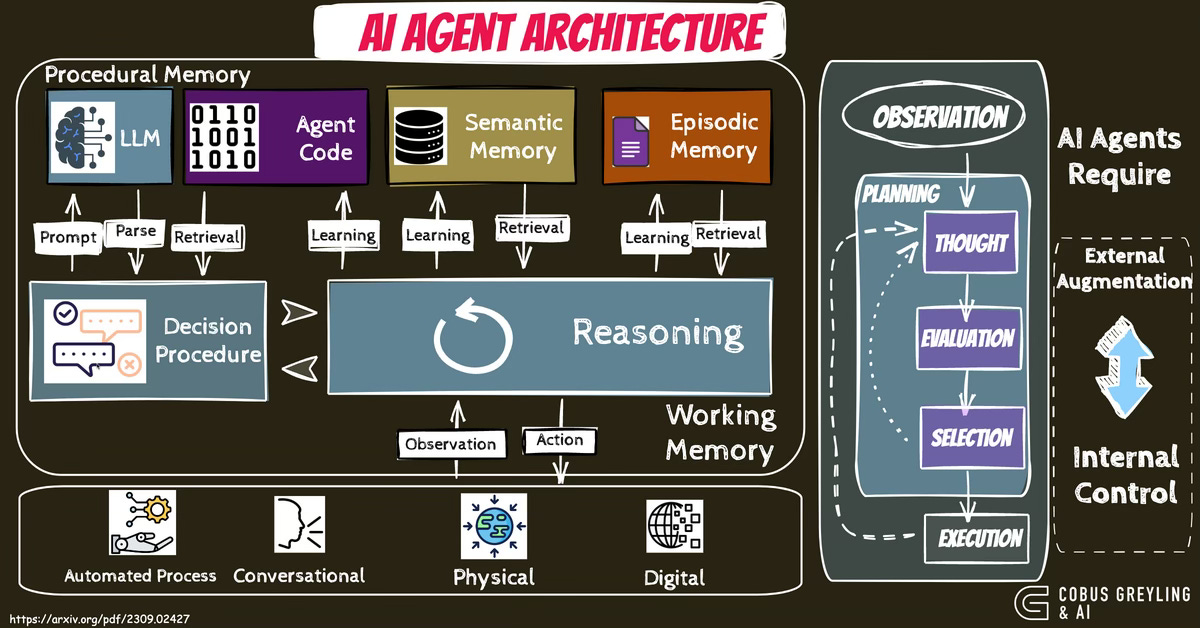

Looking at the image above, an AI Agent can be understood through three key elements:

Cognitive Architectures: Episodic Memory refers to the ability to store and recall specific events or experiences, like remembering a recent conversation.

Semantic Memory stores general knowledge about the world, such as facts and concepts.

While episodic memory is dynamic and context-dependent, semantic memory is more stable and involves understanding abstract, generalised information.
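One way to picture the two memory types is as two separate stores with different access patterns. The sketch below is an assumption made for illustration, not a structure taken from the study: `Episode` and `AgentMemory` are hypothetical names, the episodic store is an append-only log of events, and the semantic store is a simple key-value map of facts.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Episode:
    """One specific experience, tagged with the step at which it happened."""
    step: int
    event: str


@dataclass
class AgentMemory:
    # Episodic memory: grows over time and is ordered by when events occurred.
    episodic: List[Episode] = field(default_factory=list)
    # Semantic memory: stable facts keyed by concept.
    semantic: Dict[str, str] = field(default_factory=dict)

    def record(self, step: int, event: str) -> None:
        self.episodic.append(Episode(step, event))

    def recall_recent(self, n: int) -> List[Episode]:
        # Guard n <= 0, because a slice of [-0:] would return the whole list.
        return self.episodic[-n:] if n > 0 else []

    def learn_fact(self, concept: str, fact: str) -> None:
        self.semantic[concept] = fact

    def lookup(self, concept: str) -> Optional[str]:
        return self.semantic.get(concept)


memory = AgentMemory()
memory.learn_fact("Paris", "capital of France")
memory.record(1, "user asked about travel to France")
memory.record(2, "user asked for the capital")
```

Episodic recall here depends on recency, while semantic lookup returns the same fact regardless of when it is asked, which mirrors the dynamic versus stable distinction described above.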

Action Space: The AI Agent operates within a dual-action framework. Internal actions involve processes like reasoning, planning, and updating its internal state, while external actions involve interactions with the environment, such as executing commands or providing outputs.

Decision-Making Procedure: The agent’s decision-making is organised as an interactive loop consisting of planning and execution. This iterative process allows the agent to analyse its environment, formulate a strategy, and act accordingly, refining its approach as new information becomes available.
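The action space and the decision loop can be sketched together. This is a minimal illustration under stated assumptions: the `Agent` class, the integer "environment", and the action names are all hypothetical. `plan` is an internal action (it reasons and updates the agent's notes without touching the environment), and `execute` is an external action (it changes the environment's state).

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Agent:
    goal: int
    notes: List[str] = field(default_factory=list)  # internal state

    def plan(self, observation: int) -> str:
        """Internal action: reason about the observation and choose a move."""
        if observation < self.goal:
            choice = "increase"
        elif observation > self.goal:
            choice = "decrease"
        else:
            choice = "stop"
        self.notes.append(f"saw {observation}, chose {choice}")
        return choice


def execute(state: int, action: str) -> int:
    """External action: apply the chosen move to the toy environment."""
    if action == "increase":
        return state + 1
    if action == "decrease":
        return state - 1
    return state


def run(agent: Agent, state: int, max_cycles: int = 20) -> int:
    """Alternate planning and execution until the agent decides to stop."""
    for _ in range(max_cycles):
        action = agent.plan(state)
        if action == "stop":
            break
        state = execute(state, action)
    return state


agent = Agent(goal=5)
final_state = run(agent, state=2)
```

Each cycle re-plans from the latest observation, so the agent refines its choice as the environment changes rather than committing to a fixed script up front.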

These elements collectively define the operational framework of an AI agent, enabling adaptive and efficient behaviour in complex environments.

Digital Environments

AI Agents operate within diverse environments that enable them to interact and execute tasks. Currently, these environments are primarily digital, including mobile operating systems, desktop operating systems, and other digital ecosystems.

In these settings, AI Agents can engage with games, APIs, websites, and general code execution, utilising these platforms as a foundation for task execution and knowledge application.

Digital environments provide an efficient and cost-effective alternative to physical interaction, serving as an easily supervised way to develop and evaluate AI Agents.

Practical Examples

For example, in natural language processing (NLP) tasks, digital APIs (such as search engines, calculators, and translators) are often packaged as tools within the operating system, designed for specific purposes.

These tools can be considered specialised, single-use digital environments that allow agents to perform tasks requiring external knowledge or computation.
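As a rough sketch of what packaging APIs as tools might look like, the example below registers two toy tools behind a single dispatch function. All names (`calculator`, `translator`, `TOOLS`, `call_tool`) are hypothetical, and the translator is a tiny hard-coded dictionary rather than a real translation service.

```python
import operator
from typing import Callable, Dict


def calculator(expression: str) -> str:
    """Evaluate a simple 'a op b' expression, e.g. '6 * 7'."""
    ops = {
        "+": operator.add,
        "-": operator.sub,
        "*": operator.mul,
        "/": operator.truediv,
    }
    left, op, right = expression.split()
    return str(ops[op](float(left), float(right)))


def translator(word: str) -> str:
    """Toy English-to-French lookup; unknown words are returned unchanged."""
    toy_dictionary = {"hello": "bonjour", "world": "monde"}
    return toy_dictionary.get(word.lower(), word)


# Each tool is a single-purpose function from text to text.
TOOLS: Dict[str, Callable[[str], str]] = {
    "calculator": calculator,
    "translator": translator,
}


def call_tool(name: str, argument: str) -> str:
    """Dispatch a tool call by name, as an agent might after choosing a tool."""
    if name not in TOOLS:
        raise KeyError(f"unknown tool: {name}")
    return TOOLS[name](argument)


answer = call_tool("calculator", "6 * 7")
greeting = call_tool("translator", "Hello")
```

Keeping every tool behind the same text-in, text-out signature means the agent only needs to produce a tool name and an argument string, which is the kind of output an LLM can generate directly.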

As AI Agents continue to evolve, their presence in digital environments will expand beyond static interaction, laying the groundwork for more complex systems.

The future of AI Agents lies in their physical embodiment, where they will operate in real-world environments. This transition will open up new possibilities for AI, enabling agents to interact physically with the world, navigate dynamic spaces, and take on roles in fields such as robotics.

The shift from purely digital environments to physical ones represents a significant step forward, as it will require AI Agents to integrate sensory data, physical actions, and context-aware decision-making, further enhancing their capabilities and applications.

In Conclusion

What stands out in this study is its detailed analysis of the evolving frameworks built around LLMs to maximise their potential. It highlights how these structures are both internal and external, working in tandem to enhance the capabilities of LLMs.

Internally, the focus is on reasoning, which forms the core of the model’s intelligence and decision-making processes. Externally, the journey began with data augmentation, enabling the integration of additional information.

Over time, these external frameworks have expanded to include direct interaction with the outside world, further extending the functionality and adaptability of LLMs.

Chief Evangelist @ Kore.ai | I’m passionate about exploring the intersection of AI and language. From Language Models, AI Agents to Agentic Applications, Development Frameworks & Data-Centric Productivity Tools, I share insights and ideas on how these technologies are shaping the future.