The Evolution of Grounding & Planning In AI Agents

When it comes to chatbots, intent detection has been a core component from the very beginning...

Simply put, intents are categories or classes used to classify conversations.

Each interaction between a user and the bot is tied to a specific intent, which is essentially the purpose or goal behind the conversation. When a conversation begins, the system identifies the intent, sets the context, and establishes the foundation for the entire interaction.

This process of grounding — establishing context — was an early effort to make conversations meaningful. Grounding, in this sense, is the AI’s way of understanding and responding to the user based on the detected intent.
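To make the idea concrete, here is a minimal sketch of intent detection as it worked in early chatbots. It assumes a simple keyword-overlap classifier; the intent names, the keyword sets, and the `detect_intent` and `start_conversation` functions are all hypothetical and only illustrate the principle, since production systems typically used trained classifiers instead.

```python
import re

# Hypothetical intents and trigger keywords, invented for illustration only.
INTENT_KEYWORDS = {
    "book_flight": {"flight", "fly", "ticket", "airline"},
    "check_balance": {"balance", "account", "funds"},
    "reset_password": {"password", "reset", "login"},
}


def detect_intent(utterance: str, fallback: str = "unknown") -> str:
    """Return the intent whose keywords overlap most with the utterance."""
    tokens = set(re.findall(r"[a-z]+", utterance.lower()))
    scores = {
        intent: len(tokens & keywords)
        for intent, keywords in INTENT_KEYWORDS.items()
    }
    best = max(scores, key=scores.get)
    # No keyword matched at all: fall back instead of guessing.
    return best if scores[best] > 0 else fallback


def start_conversation(utterance: str) -> dict:
    """Ground the conversation: the detected intent becomes its context."""
    return {"intent": detect_intent(utterance), "history": [utterance]}
```

Once the intent is set, every later turn is interpreted against that context, which is the grounding step described above.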

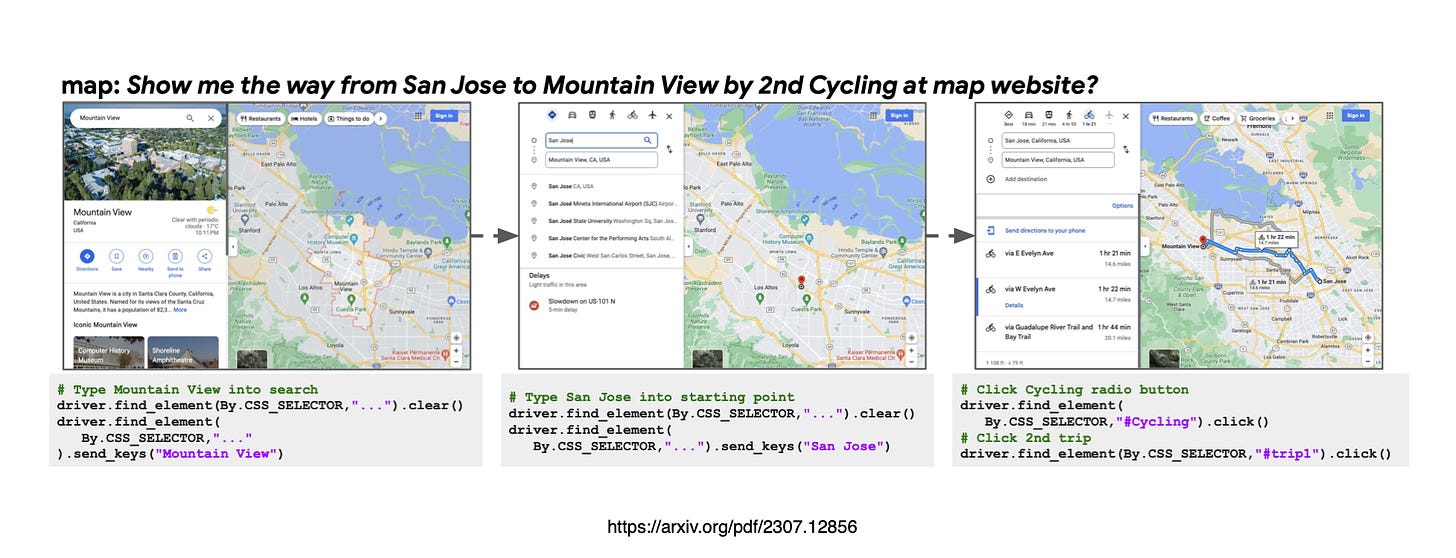

The example above shows real-world web automation on a maps website. WebAgent receives an instruction and the webpage’s HTML code. Based on these, it predicts the next small task and selects the relevant part of the webpage to focus on. It then generates a Python script (shown in gray) to perform the task, treating the small task as a comment within the code.

From Chatbots to Intelligent Planners

With the rise of Large Language Models (LLMs), we’ve moved beyond simple intent detection. AI Agents underpinned by language models are no longer just conversational engines; they have evolved into sophisticated planners capable of guiding complex tasks based on a detailed understanding of the world.

These AI-driven systems can now operate within digital environments — such as mobile operating systems or the web — performing actions like navigating apps or interacting with websites.

Planning refers to the agent’s ability to determine the appropriate sequence of actions to accomplish a given task, while grounding involves correctly identifying and interacting with relevant web elements based on these decisions. ~ Source

AI Agents: Navigating Virtual Worlds

Much like a physical robot moving through the real world, AI agents are designed to navigate digital or virtual environments. These agents, being software entities, need to interact with systems like websites, mobile platforms, or other software applications.

However, current experimental AI agents still fall short of the precision required for practical, real-world use. For example, WebVoyager, a system designed to navigate the web and complete tasks, achieves a 59.1% task success rate. While impressive, this figure shows that there is room for improvement before AI agents can reliably handle complex real-world scenarios.

Grounding: Connecting Conversations to Actions

With LLMs, the concept of grounding becomes even more crucial. Grounding is what turns a vague or abstract conversation into something actionable. In the context of LLMs, grounding is achieved through in-context learning: snippets of relevant information are retrieved and injected into the prompt to give the model the context it needs, an approach known as retrieval-augmented generation (RAG).
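A minimal sketch of this kind of prompt grounding might look as follows. It assumes a toy retriever that ranks snippets by word overlap (real systems usually rely on embedding search), and the `DOCUMENTS` list, the function names, and the prompt wording are hypothetical. The resulting string would be sent to whichever LLM is in use.

```python
import re

# Hypothetical knowledge snippets standing in for a real document store.
DOCUMENTS = [
    "Refunds are processed within five business days.",
    "Support is available on weekdays from 9am to 5pm.",
    "Premium accounts include priority support.",
]


def tokenize(text: str) -> set:
    """Lowercase the text and split it into a set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: list, top_k: int = 2) -> list:
    """Rank snippets by word overlap with the question (toy retriever)."""
    query = tokenize(question)
    ranked = sorted(
        documents,
        key=lambda doc: len(query & tokenize(doc)),
        reverse=True,
    )
    return ranked[:top_k]


def build_grounded_prompt(question: str, documents: list, top_k: int = 2) -> str:
    """Inject the retrieved snippets into the prompt as context."""
    snippets = retrieve(question, documents, top_k)
    context = "\n".join(f"- {snippet}" for snippet in snippets)
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {question}\n"
        "Answer:"
    )
```

The model never sees the whole knowledge base, only the few snippets judged relevant to the question, and that is what keeps its answer grounded.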

Planning: Decomposing Complex Tasks

In the world of AI agents, planning is all about creating a sequence of actions to reach a specific goal. A complex or ambiguous request is broken down into smaller, manageable steps. The agent, in turn, follows this step-by-step process to achieve the desired outcome.

For instance, if an AI agent is tasked with booking a flight, it will need to break that task down into smaller actions — such as checking flight options, comparing prices, and selecting a seat. This sequence of actions forms the backbone of the agent’s ability to plan and execute tasks efficiently.
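The flight-booking example can be sketched in a few lines of code. This is only an illustration under stated assumptions: the `decompose` function uses a hard-coded lookup as a stand-in for the LLM call that would normally produce the steps, and the `Step` and `Plan` classes are hypothetical names, not part of any framework.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Step:
    description: str
    done: bool = False


@dataclass
class Plan:
    goal: str
    steps: List[Step] = field(default_factory=list)

    def next_step(self) -> Optional[Step]:
        """Return the first step that has not been completed yet."""
        for step in self.steps:
            if not step.done:
                return step
        return None


def decompose(goal: str) -> Plan:
    """Break a goal into smaller steps.

    Stand-in for an LLM planner: the lookup table below is an assumption
    made so the sketch runs without a model.
    """
    library = {
        "book a flight": [
            "check flight options",
            "compare prices",
            "select a seat",
        ],
    }
    descriptions = library.get(goal.lower(), [goal])
    return Plan(goal, [Step(text) for text in descriptions])


def execute(plan: Plan) -> List[str]:
    """Work through the plan in order and return the executed steps."""
    log = []
    while (step := plan.next_step()) is not None:
        log.append(step.description)  # a real agent would act here
        step.done = True
    return log
```

Calling `execute(decompose("book a flight"))` walks through the three sub-tasks in order; an unknown goal is simply treated as a single step.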

Action Execution & Feasibility

However, planning alone isn’t enough. The AI agent must also ensure that its planned actions are feasible in the real world. It’s one thing to generate a list of steps, but it’s another to ensure they are realistic.

Therefore, the AI must understand the limitations of time, resources, and context. Recent research explores how LLMs can use world models to simulate real-world constraints, helping them determine whether a given action is possible or not.
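As a rough illustration of a feasibility check, the sketch below simulates a plan against a very small world model that tracks only budget and time. The `WorldState` and `Action` classes and the numbers used with them are assumptions made for this example; the world models explored in research are far richer.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Action:
    name: str
    cost: float   # money the action consumes
    minutes: int  # time the action consumes


@dataclass(frozen=True)
class WorldState:
    budget: float
    minutes_left: int


def simulate(state: WorldState, action: Action) -> Optional[WorldState]:
    """Predict the next state, or None if a constraint would be violated."""
    budget = state.budget - action.cost
    minutes = state.minutes_left - action.minutes
    if budget < 0 or minutes < 0:
        return None
    return WorldState(budget, minutes)


def first_infeasible(state: WorldState, plan: List[Action]) -> Optional[str]:
    """Return the name of the first action that cannot be carried out.

    Returns None when the whole plan fits within the constraints.
    """
    for action in plan:
        next_state = simulate(state, action)
        if next_state is None:
            return action.name
        state = next_state
    return None
```

Running the plan through the simulation before acting lets the agent reject or repair a step, such as a seat upgrade that exceeds the remaining budget, instead of failing halfway through.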

The AI agent must determine the correct sequence of actions (planning) and then interact with the relevant elements in a digital or physical environment (grounding). This combination ensures the AI’s decisions are both actionable and contextually appropriate.
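Grounding a planned step in a web page can be sketched with nothing more than Python’s standard `html.parser` module. The example assumes a simple heuristic, namely picking the interactive element whose label shares the most words with the step; the `PAGE` markup, the `ElementCollector` class, and the `ground` function are hypothetical, and real web agents typically rely on a model to make this choice.

```python
import re
from html.parser import HTMLParser

# Hypothetical page markup, invented for illustration.
PAGE = (
    '<input id="q" placeholder="Search flights">'
    '<button id="buy">Buy ticket</button>'
    '<a id="help" href="/help">Help center</a>'
)


class ElementCollector(HTMLParser):
    """Collect links, buttons, and inputs together with their labels."""

    INTERACTIVE = {"a", "button", "input"}

    def __init__(self):
        super().__init__()
        self.elements = []  # dicts with keys: tag, id, label
        self._open = None   # element whose text is currently being read

    def handle_starttag(self, tag, attrs):
        if tag not in self.INTERACTIVE:
            return
        attrs = dict(attrs)
        label = attrs.get("placeholder") or attrs.get("value") or ""
        element = {"tag": tag, "id": attrs.get("id"), "label": label}
        self.elements.append(element)
        # Inputs have no closing tag, so they never collect inner text.
        self._open = None if tag == "input" else element

    def handle_data(self, data):
        if self._open is not None:
            self._open["label"] = (self._open["label"] + " " + data).strip()

    def handle_endtag(self, tag):
        if self._open is not None and tag == self._open["tag"]:
            self._open = None


def words(text: str) -> set:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def ground(step: str, html: str):
    """Return the element that best matches the planned step, or None."""
    collector = ElementCollector()
    collector.feed(html)
    scored = [
        (len(words(step) & words(element["label"])), element)
        for element in collector.elements
    ]
    best_score, best = max(scored, key=lambda pair: pair[0], default=(0, None))
    return best if best_score > 0 else None
```

Here planning supplies the step (for example "buy ticket") and grounding resolves it to a concrete element on the page (the button with id `buy`), which the agent can then click or fill in.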

Conclusion

While experimental systems like WebVoyager are still improving, the future of AI agents promises greater accuracy, flexibility, and reliability in carrying out actions across digital platforms. As these systems continue to advance, the line between conversation and action will blur, empowering AI to not only understand the world but to operate within it effectively.

A Real-World WebAgent with Planning, Long Context Understanding, and Program Synthesis

Pre-trained large language models (LLMs) have recently achieved better generalization and sample efficiency in…

arxiv.org

From Grounding to Planning: Benchmarking Bottlenecks in Web Agents

General web-based agents are increasingly essential for interacting with complex web environments, yet their…

arxiv.org

I’m currently the Chief Evangelist @ Kore.ai. I explore and write about all things at the intersection of AI and language; ranging from LLMs, Chatbots, Voicebots, Development Frameworks, Data-Centric latent spaces and more.