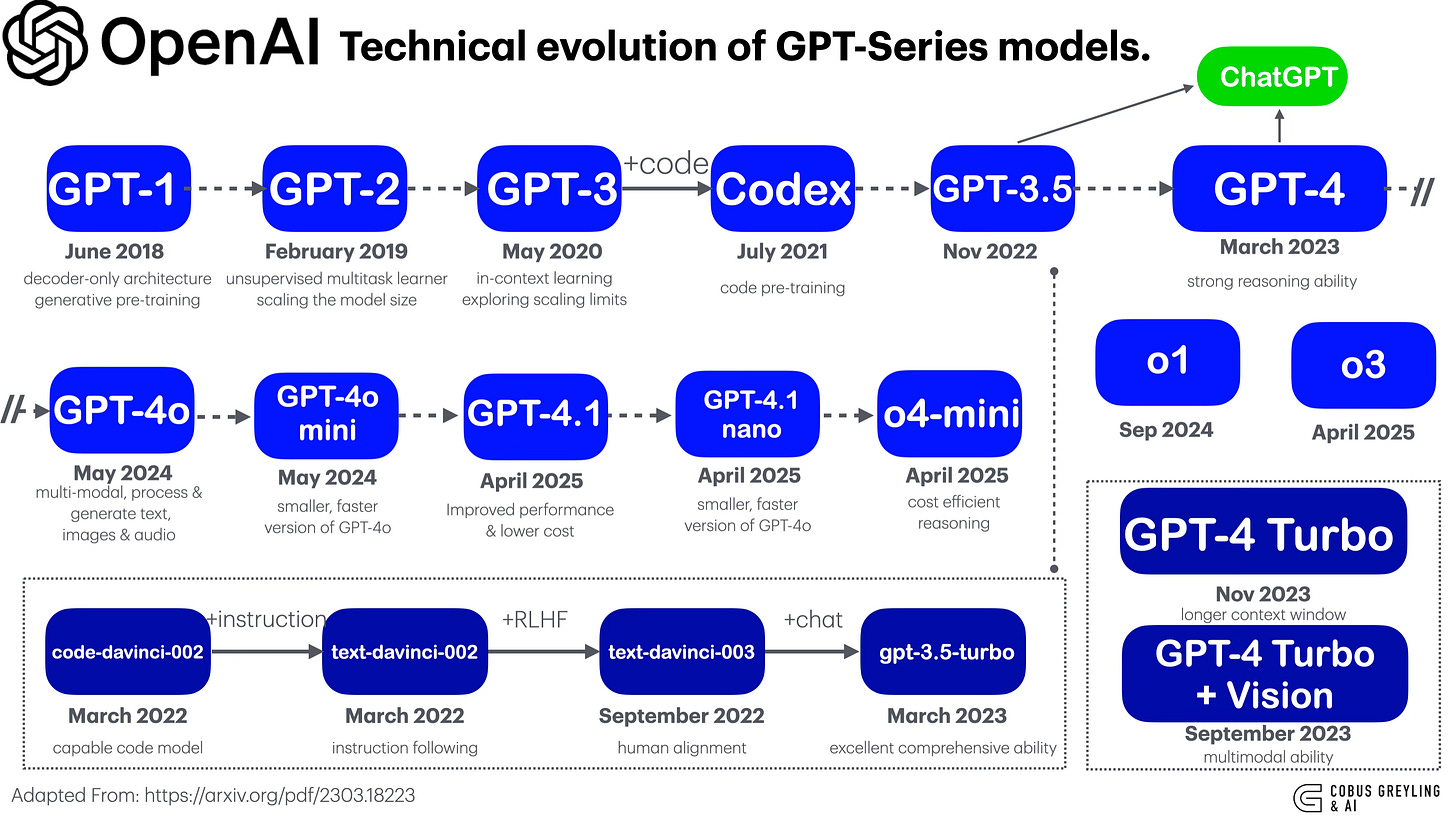

The Evolution of OpenAI's GPT Models

From basic language models to multimodal and reasoning-focused systems in less than seven years!

𝗚𝗣𝗧-𝟭 (𝗝𝘂𝗻𝗲 𝟮𝟬𝟭𝟴): Introduced the decoder-only Transformer recipe of generative pre-training on unlabeled text, followed by fine-tuning for specific tasks.

𝗚𝗣𝗧-𝟮 (𝗙𝗲𝗯𝗿𝘂𝗮𝗿𝘆 𝟮𝟬𝟭𝟵): Scaled up to 1.5 billion parameters and showed that a single language model can perform many tasks without task-specific training (unsupervised multitask learning).

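𝗚𝗣𝗧-𝟯 (𝗠𝗮𝘆 𝟮𝟬𝟮𝟬): Scaled to 175 billion parameters and demonstrated in-context learning: performing new tasks from a few examples in the prompt, without updating the model's weights.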

𝗖𝗼𝗱𝗲𝘅 (𝗝𝘂𝗹𝘆 𝟮𝟬𝟮𝟭): Specialised in code: a GPT model fine-tuned on public code from GitHub, powering tools like GitHub Copilot.

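𝗚𝗣𝗧-𝟯.𝟱 (𝗡𝗼𝘃𝗲𝗺𝗯𝗲𝗿 𝟮𝟬𝟮𝟮): The model family behind the launch of ChatGPT, fine-tuned with reinforcement learning from human feedback (RLHF) for much stronger conversational abilities.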

𝗚𝗣𝗧-𝟰 (𝗠𝗮𝗿𝗰𝗵 𝟮𝟬𝟮𝟯): Delivered a major leap in performance, with markedly stronger reasoning on complex tasks.

𝗚𝗣𝗧-𝟰𝗼 (𝗠𝗮𝘆 𝟮𝟬𝟮𝟰): Natively multimodal ("o" for "omni"): a single model processing text, images, and audio end to end.

𝗼𝟭 (𝗦𝗲𝗽𝘁𝗲𝗺𝗯𝗲𝗿 𝟮𝟬𝟮𝟰): Kicked off the o-series of reasoning models, which work through a long internal chain of thought before answering complex problems.

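𝗚𝗣𝗧-𝟰.𝟭 (𝗔𝗽𝗿𝗶𝗹 𝟮𝟬𝟮𝟱) & 𝗼𝟯 (𝗔𝗽𝗿𝗶𝗹 𝟮𝟬𝟮𝟱): Further raised performance: GPT-4.1 improved coding, instruction following, and long-context handling (up to 1 million tokens), while o3 excelled at multimodal reasoning, working with images directly in its chain of thought.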

Chief Evangelist @ Kore.ai | I’m passionate about exploring the intersection of AI and language. From language models and AI agents to agentic applications, development frameworks, and data-centric productivity tools, I share insights and ideas on how these technologies are shaping the future.