The LangChain Ecosystem Is Expanding At A Tremendous Pace

The current LangChain ecosystem of tools is a good indication of best practice for LLM implementations.

Intro

My attention was first drawn to LangChain while searching for papers on LLMs in general and prompting strategies in particular. What astounds me is how quickly LangChain implements research papers as different approaches to Autonomous Agents.

LangChain is becoming, to some degree, the de facto standard for building LLM-based Generative Apps. It is my first port of call for experimenting with Autonomous Agents & Tools and for finding code implementations of the newest prompt engineering research.

For a graphic IDE flow builder, Flowise and LangFlow are very compelling options.

Here are a few highlights in terms of recent LangChain developments…

LangChain — What Changed?

LangChain, LangChain-Core & LangChain-Community

According to LangChain, the existing LangChain package is being split into three separate packages.

LangChain

LangChain contains use-case-specific chains, agents, and RAG code, which together act as the backbone of cognitive applications.

LangChain-Core

LangChain-Core contains:

Abstractions that have emerged as a standard

LangChain Expression Language

The langchain-core package contains base abstractions that the rest of the LangChain ecosystem uses, along with the LangChain Expression Language. It is automatically installed by LangChain, but can also be used separately. — Source

LangChain-Community

LangChain-Community contains all third-party integrations.

The split is intended to support the fast-expanding LangChain-based ecosystem around LangChain Templates, LangServe, LangSmith, and other packages.
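To see how the split looks on a given machine, a small check like the one below may help. It is a minimal sketch that assumes the three packages are importable as `langchain`, `langchain_core` and `langchain_community`; the helper name `installed_langchain_packages` is my own and not part of LangChain.

```python
from importlib.util import find_spec

# Import names of the three packages described above.
PACKAGES = ("langchain", "langchain_core", "langchain_community")


def installed_langchain_packages():
    """Return a mapping of import name -> whether it can be found locally."""
    return {name: find_spec(name) is not None for name in PACKAGES}


if __name__ == "__main__":
    for name, present in installed_langchain_packages().items():
        print(f"{name}: {'installed' if present else 'missing'}")
```

The check only looks the packages up and does not import them, so it runs safely even where none of them is installed.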

LangServe

LangServe allows makers to expose LangChain applications as a REST API.

LangServe is the easiest and best way to deploy any LangChain chain/agent/runnable. ~ Source
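As a rough illustration of what "exposed as a REST API" means for a client, the sketch below builds an HTTP request for an invoke-style route. It assumes the deployed chain accepts a POST to `<path>/invoke` with a JSON body of the form `{"input": ...}`, which is my understanding of the LangServe convention and worth verifying against the LangServe documentation. The host, the `/my-chain` path and the `topic` field are hypothetical.

```python
import json
from urllib.request import Request


def build_invoke_request(base_url: str, payload: dict) -> Request:
    """Build (but do not send) a POST request for an /invoke-style route."""
    body = json.dumps({"input": payload}).encode("utf-8")
    return Request(
        url=base_url.rstrip("/") + "/invoke",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    # Hypothetical local deployment; replace with the real host and path.
    req = build_invoke_request("http://localhost:8000/my-chain", {"topic": "parrots"})
    print(req.get_method(), req.full_url)
    print(req.data.decode("utf-8"))
    # To actually call a running server: urllib.request.urlopen(req)
```

Building the request separately from sending it keeps the example runnable without a server.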

LangChain plans to launch a hosted version of LangServe that enables one-click deployments of LangChain applications, and a waitlist is open for sign-ups.

Templates

LangChain Templates serve as a reference for application examples. This data already exists to some degree in the LangChain documentation under the templates section.

LangChain Templates seem to lack some gravitas in terms of content and activity, especially compared to the LangSmith hub, where critical mass is being achieved judging by the number of prompts added and the likes, views and downloads they attract.

Templates has a form to request a template, and it is also a good place for integrations to be featured. Each template links through to a GitHub page, which makes the templates very actionable.

Templates are also a great way to get a working example going and then iterate toward the implementation you have in mind.

LangSmith

With seamless integration with LangChain, arguably the leading open source framework for building with LLMs, LangSmith enables makers to manage & monitor LLM calls from chains and intelligent agents.

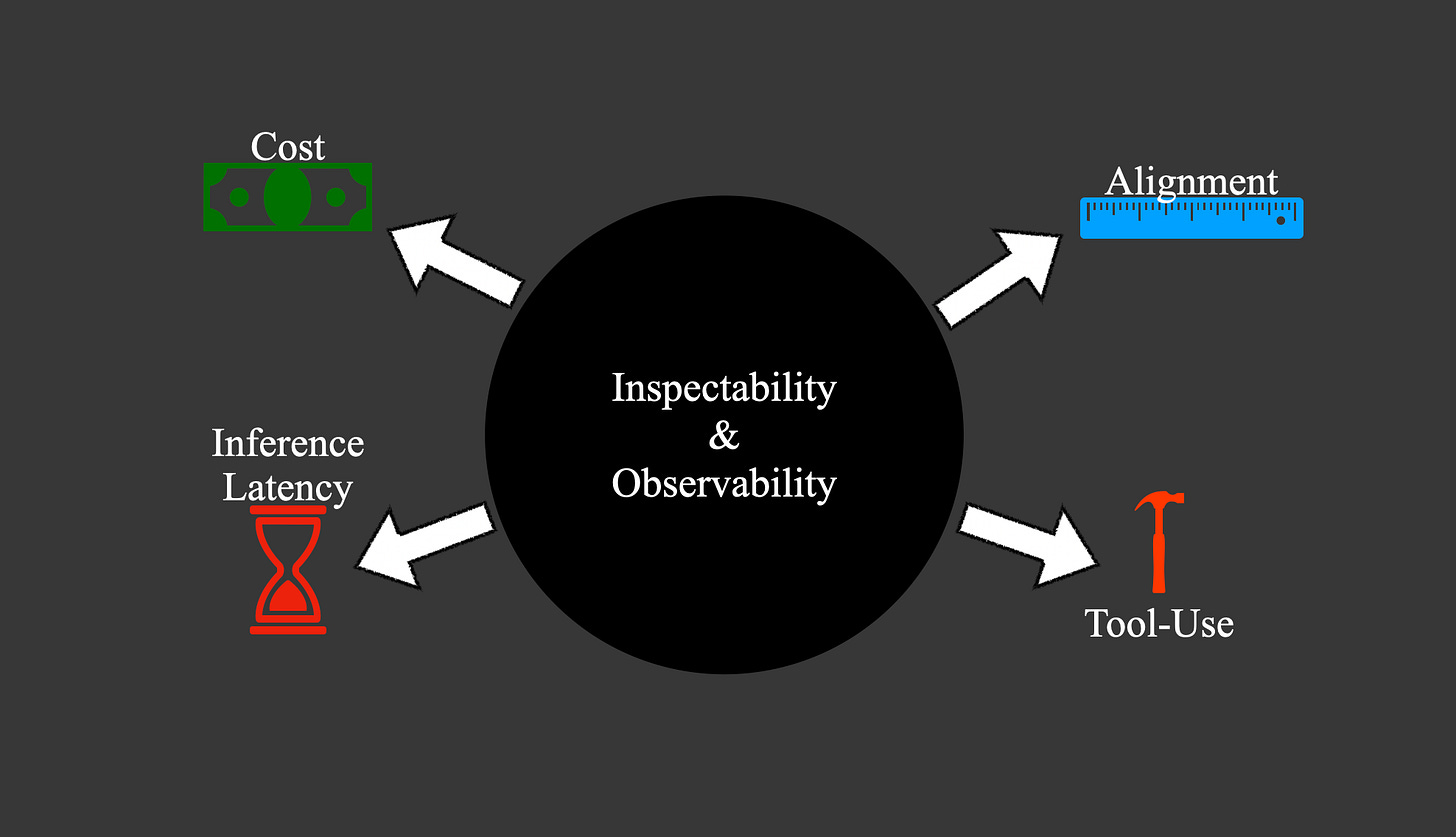

A key component for successfully implementing an application which is underpinned by one or more LLMs is inspectability & observability.

Key elements which makers would want to observe and inspect are cost, inference latency, alignment and tool-use in the case of autonomous agents.
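To make the idea of observing a call concrete, here is a hand-rolled sketch of the kind of record an observability tool might keep per call. It is not LangSmith's API; `CallRecord`, `traced_call` and `fake_llm` are hypothetical names, and only latency and tool use are captured because cost and alignment depend on the model and provider.

```python
import time
from dataclasses import dataclass, field


@dataclass
class CallRecord:
    name: str
    latency_s: float
    tools_used: list = field(default_factory=list)


def traced_call(name, fn, *args, tools_used=(), **kwargs):
    """Run fn and return its result together with a record of the call."""
    start = time.perf_counter()
    result = fn(*args, **kwargs)
    latency = time.perf_counter() - start
    return result, CallRecord(name=name, latency_s=latency, tools_used=list(tools_used))


def fake_llm(prompt: str) -> str:
    # Stand-in for a real model call; purely illustrative.
    return prompt.upper()


if __name__ == "__main__":
    answer, record = traced_call("demo", fake_llm, "hello", tools_used=["search"])
    print(answer, record)
```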

LangSmith has a no-code, web-based GUI to which interactions from a LangChain application can be logged. Adding LangSmith to any LangChain application only requires four lines that set environment variables:

export LANGCHAIN_TRACING_V2=true

export LANGCHAIN_ENDPOINT="https://api.smith.langchain.com"

export LANGCHAIN_API_KEY="<your-api-key>"

export LANGCHAIN_PROJECT="<your-LangChain-Project-Name>"

LangSmith is constituted by six components:

Monitoring, Evaluation, Annotation, Feedback, Testing & Debugging.

These components close the loop in terms of evaluating applications, annotating data, getting user feedback and testing.
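In a notebook, where shell exports are awkward, the four environment variables shown earlier can also be set from Python before the LangChain application starts. This is a minimal sketch; the helper name `configure_langsmith` is my own, and the API key and project name remain placeholders to fill in.

```python
import os


def configure_langsmith(api_key: str, project: str) -> None:
    """Set the four LangSmith variables for the current process."""
    os.environ["LANGCHAIN_TRACING_V2"] = "true"
    os.environ["LANGCHAIN_ENDPOINT"] = "https://api.smith.langchain.com"
    os.environ["LANGCHAIN_API_KEY"] = api_key
    os.environ["LANGCHAIN_PROJECT"] = project


if __name__ == "__main__":
    # Placeholders, exactly as in the shell version.
    configure_langsmith("<your-api-key>", "<your-LangChain-Project-Name>")
    print(os.environ["LANGCHAIN_PROJECT"])
```

The variables only affect the current process and anything it launches, so the call needs to happen before the application code that should be traced.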

An advantage of the prompt playground is access to a wide range of LLMs, together with a large collection of prompts via the prompt engineering hub.

LangSmith lets you debug, test, evaluate, and monitor chains and intelligent agents built on any LLM framework and seamlessly integrates with LangChain, the go-to open source framework for building with LLMs.

Below is a list with all the non-chat based LLMs available within LangSmith. AzureOpenAI deployments can also be referenced from the playground.

The sheer number of models available allows for rich experimentation and testing with prompts via the playground.

The list below contains LLMs with Chat Messages as input and output.

The wide array of LLMs lets makers differentiate between text and chat use-cases. From here, prompts can be tested against different LLMs with an eye on optimisation and general model performance.

Studies have shown that model hallucination is markedly reduced when In-Context Learning is introduced. Models regarded as inferior also show significant improvement in performance.

I foresee the data Annotation portion of LangSmith growing in the near future.

I’m currently the Chief Evangelist @ Kore AI. I explore & write about all things at the intersection of AI & language; ranging from LLMs, Chatbots, Voicebots, Development Frameworks, Data-Centric latent spaces & more.