The Mistral AI Agent Build Environment

And The Rise of AI Agent SDKs by Model Providers

Introduction

The AI landscape is changing fast, with model providers like Mistral, OpenAI, Cohere and others leading the charge by creating specialised Software Development Kits (SDKs) to simplify the creation of AI Agents.

SDKs are trying to transform how developers build intelligent and autonomous systems, making it easier to harness the power of large language models (LLMs) for real-world applications.

…or at least my perception is that the SDKs are trying to establish some kind of best-practice, widely adopted way of developing AI Agents.

Mistral

Mistral’s documentation on AI Agents highlights a key trend:

Model providers are no longer offering only raw AI models but are also providing structured tools (could one say scaffolding?) to streamline AI Agent development.

Mistral’s SDK, for instance, enables developers to craft AI Agents with defined roles, tools and workflows, integrating seamlessly with their APIs.

Similarly, OpenAI’s Assistants API allows developers to build conversational AI Agents with access to tools like code interpreters and file search, while Cohere’s toolkit focuses on embedding-based AI Agents for tasks like semantic search and classification.

The SDKs abstract away much of the complexity, letting developers focus on defining agent behaviour rather than wrestling with low-level model interactions.

Broader Industry Realisation

This shift reflects a broader industry realisation: building effective AI Agents requires more than just powerful models.

AI Agents need

Clear instructions,

Access to external tools (via MCP), and

The ability to manage context across tasks.

SDKs provide pre-built frameworks for these components, reducing development time and expertise barriers.

For example, Mistral’s AI Agent framework supports customisable guardrails and tool-calling, while OpenAI’s API emphasises persistent threads for conversational continuity.

Cohere, meanwhile, excels in enabling AI Agents for specific use cases like text analysis, showcasing the diversity of approaches.

Developer Mindshare & Critical Mass

The growing emphasis on SDKs also signals fierce competition among model providers to capture developer mindshare.

By offering basic yet user-friendly tools, companies aim to lock developers into their ecosystems, encouraging building and innovation while ensuring their models remain central to the AI Agent-building process.

For developers, this can be seen as a bonus: access to powerful, standardised tools means faster prototyping and deployment of AI Agents across industries, from customer service to data analysis.

Caveat

However, building AI Agents still involves challenges…

Developers must…

define precise AI Agent roles,

manage tool integration and

ensure reliability in dynamic environments.

SDKs help, but they don’t eliminate the need for thoughtful design.

As model providers continue to refine their ecosystem tools, the future of AI Agents looks promising, but I truly believe the way we develop AI Agents will change.

Considering recent developments like MCP, tools and AI Agent to AI Agent protocols, development environments will have to change to accommodate the new structures.

One needs to ask the question: is it worthwhile to use an SDK? Anthropic, in a recent piece, warned against using frameworks when they are not needed.

Frameworks or scaffolding can introduce instabilities and unpredictable behaviour if the framework is not understood by the builder.

AI Agents Basics

Mistral’s Agents API utilises three primary objects, as outlined below:

AI Agents

This consists of a collection of pre-selected values designed to enhance model capabilities, including tools, instructions and completion parameters.

Conversation

This represents a record of interactions and prior events with an assistant, encompassing messages and tool executions.

Entry

An action that may be initiated by either the user or an assistant, offering a more adaptable and expressive way to represent interactions between a user and one or more assistants, thereby providing greater control over event descriptions.

Additionally, users can access all features of Agents and Conversations without needing to create an Agent.

This allows querying the API directly, utilising built-in Conversation features and Connectors without Agent creation.
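As a rough sketch of the two entry points described above, the helper below starts a conversation either through an existing Agent or directly against a model. The helper name `start_conversation` and its "exactly one of `agent_id` or `model`" check are my own assumptions; the underlying call, `client.beta.conversations.start`, and its keyword names are how I understand the Mistral Python SDK, so verify them against the SDK version you have installed.

```python
from typing import Any, Optional


def start_conversation(
    client: Any,
    inputs: str,
    agent_id: Optional[str] = None,
    model: Optional[str] = None,
) -> Any:
    """Start a conversation via an Agent, or directly against a model.

    `client` is expected to be a `mistralai.Mistral` instance. The check
    below is a choice made for this sketch, not an SDK rule.
    """
    if (agent_id is None) == (model is None):
        raise ValueError("Pass exactly one of agent_id or model.")

    if agent_id is not None:
        # Route the request through a previously created Agent.
        return client.beta.conversations.start(agent_id=agent_id, inputs=inputs)

    # No Agent created: query the model directly.
    return client.beta.conversations.start(model=model, inputs=inputs)
```

With a real client, `start_conversation(client, "Who is Albert Einstein?", model="mistral-medium-2505")` would be the Agent-free variant.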

More On Mistral’s AI Agent Development Environment

At its core, the environment revolves around three key objects: Agents, Conversations and Entries.

Agents are tailored setups with specific models, tools and instructions to enhance capabilities — think of them as specialised assistants.

Conversations store interaction histories, like messages or tool outputs, ensuring context persists.

Entries allow users or AI Agents to define actions, offering fine-grained control over events and interactions.
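To make the relationship between the three objects more concrete, here is a small conceptual model in plain Python. These dataclasses are purely illustrative; the field names and the `kind` labels are assumptions of mine and are not the SDK's own types.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Entry:
    """One event in a conversation (illustrative only)."""
    role: str      # "user" or "assistant"
    kind: str      # e.g. "message" or "tool_execution" (assumed labels)
    content: str


@dataclass
class Agent:
    """A named bundle of model, instructions and tools (illustrative only)."""
    name: str
    model: str
    description: str = ""
    instructions: str = ""
    tools: List[str] = field(default_factory=list)


@dataclass
class Conversation:
    """Ordered history of entries; `agent` is None when a model is queried directly."""
    agent: Optional[Agent] = None
    entries: List[Entry] = field(default_factory=list)

    def append(self, entry: Entry) -> None:
        # Context persists because every new entry joins the same history.
        self.entries.append(entry)
```

The point of the sketch is that a Conversation owns the history, an Agent only carries configuration, and an Entry is the unit that both users and assistants add.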

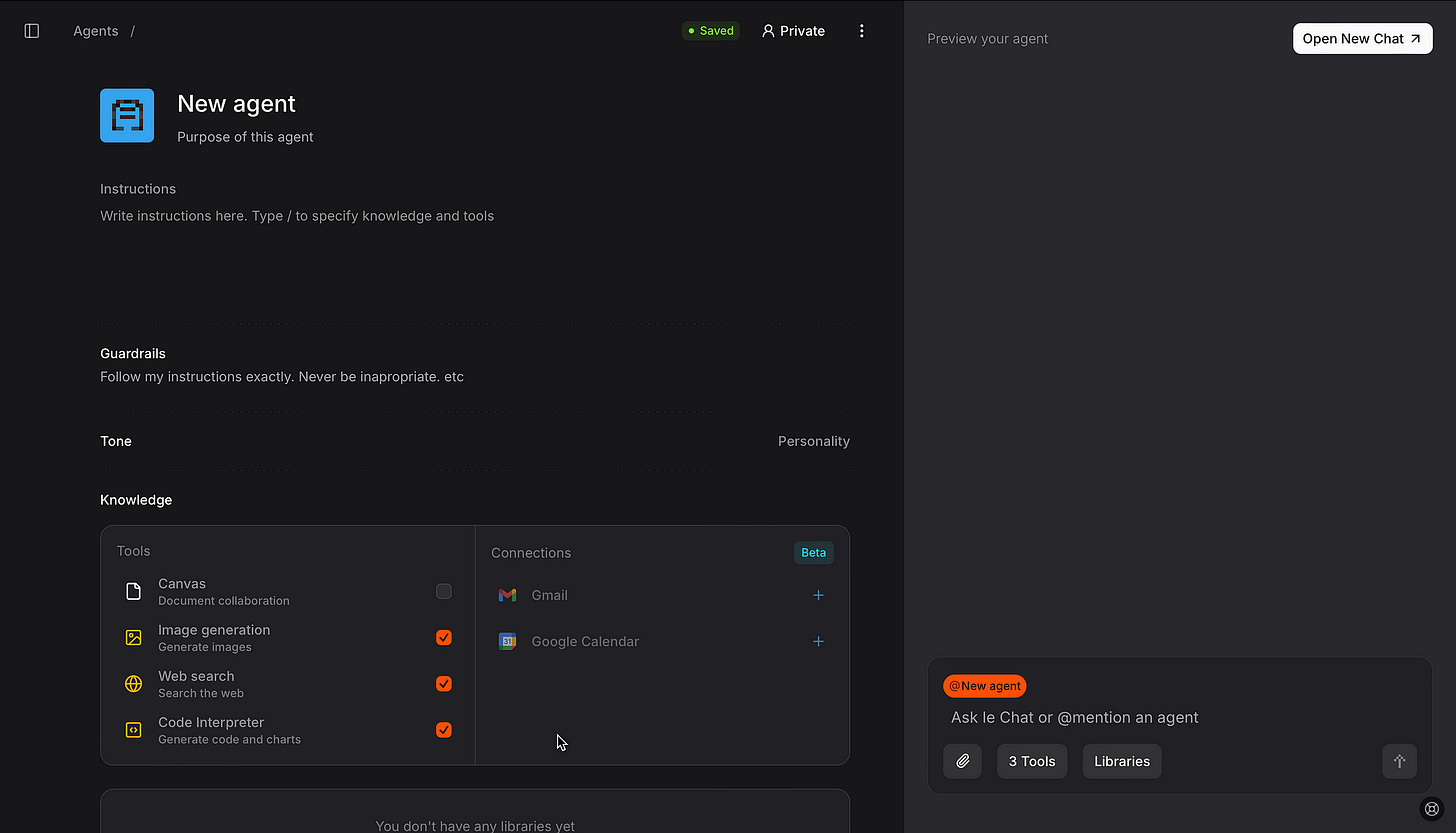

Creating an AI Agent is straightforward…

You define parameters like the model (from Mistral’s chat completion options), a descriptive name, and a task-focused description.

Optional settings include a system prompt for instructions, tools (for example, web search, code execution or image generation) and chat completion arguments for customised responses.

This flexibility lets developers craft AI Agents for diverse use cases, from financial analysis to real-time data retrieval.
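As a sketch of how the optional settings above might fit together, the function below only assembles the keyword arguments and makes no API call. The keyword names (`instructions`, `tools`, `completion_args`) and the `web_search` tool type follow my reading of Mistral's documentation; the agent name, description and prompt text are placeholders I made up for illustration.

```python
from typing import Any, Dict


def build_agent_settings() -> Dict[str, Any]:
    """Assemble keyword arguments for an Agent with optional settings."""
    return {
        # Required-style parameters: model, name and description.
        "model": "mistral-medium-2505",
        "name": "Websearch Agent",  # placeholder name
        "description": "Agent able to search the web for current information.",
        # Optional: system prompt carrying the Agent's instructions.
        "instructions": "Use web search when the answer needs recent data.",
        # Optional: built-in tools (tool type string assumed from the docs).
        "tools": [{"type": "web_search"}],
        # Optional: chat completion arguments for customised responses.
        "completion_args": {"temperature": 0.3, "top_p": 0.95},
    }
```

Passing the result as `client.beta.agents.create(**build_agent_settings())` would then create the Agent, assuming those keyword names match the SDK version you are using.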

Below is the most basic Python example code…

```python
import os

from mistralai import Mistral

# Read the API key from the environment rather than hard-coding it.
api_key = os.environ["MISTRAL_API_KEY"]

client = Mistral(api_key=api_key)

# Create a minimal Agent: only a model, a name and a description.
simple_agent = client.beta.agents.create(
    model="mistral-medium-2505",
    description="A simple Agent with persistent state.",
    name="Simple Agent",
)
```

MCP (Model Context Protocol)

The AI Agents API SDK can leverage tools built on the Model Context Protocol (MCP) — an open, standardised protocol that makes it easy for agents to connect with external systems.

With MCP, AI Agents can seamlessly access real-world context, from APIs and databases to user data, documents and other dynamic resources.

It’s a flexible, extensible way to empower your AI Agents with the information they need.
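To give a feel for what "standardised" means here, the sketch below builds the two JSON-RPC 2.0 messages an MCP client typically sends to a server: one to list the available tools and one to call a tool. It uses only the standard library and does not touch the Mistral SDK. The tool name `get_weather` and its arguments are hypothetical.

```python
import json
from itertools import count
from typing import Any, Dict, Optional

# Simple incrementing request ids, as JSON-RPC expects a unique id per request.
_ids = count(1)


def jsonrpc_request(method: str, params: Optional[Dict[str, Any]] = None) -> Dict[str, Any]:
    """Build a JSON-RPC 2.0 request object."""
    message: Dict[str, Any] = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message


# Ask the server which tools it exposes.
list_tools = jsonrpc_request("tools/list")

# Invoke one tool by name; "get_weather" is a made-up example tool.
call_tool = jsonrpc_request(
    "tools/call",
    {"name": "get_weather", "arguments": {"city": "Paris"}},
)

print(json.dumps(call_tool, indent=2))
```

Because every MCP server accepts the same message shapes, an AI Agent framework only needs one client implementation to reach many different external systems.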

AI Agent Orchestration

I did not prototype with the AI Agent orchestrator in depth…

But in principle, it gives you the ability to dynamically orchestrate multiple AI Agents, each bringing its own unique skills to the table.

Create Your Agents

Again, an AI Agent can be equipped with specific tools and models to suit the task at hand. Think of it as assembling a dream team, where every member has a specialised role.

Enable Smart Handoffs

Next, set up how your AI Agents will pass tasks to one another.

For instance, a finance AI Agent might hand off a query to a web search agent for real-time data or to a calculator agent for quick math.

The handoffs create a smooth, interconnected chain of actions, where a single request can spark a cascade of coordinated efforts.

This collaborative approach makes problem-solving faster, smarter, and more efficient.
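The handoff setup described above could be sketched as follows. I have not tested this in depth; the helper name `enable_handoffs` is my own, and the call `client.beta.agents.update(agent_id=..., handoffs=[...])` reflects my understanding of the Mistral documentation rather than something verified here, so treat it as an assumption.

```python
from typing import Any, Sequence


def enable_handoffs(
    client: Any,
    source_agent_id: str,
    target_agent_ids: Sequence[str],
) -> Any:
    """Allow one Agent to hand tasks off to a list of other Agents.

    `client` is expected to be a `mistralai.Mistral` instance, and the ids
    are those returned when each Agent was created.
    """
    return client.beta.agents.update(
        agent_id=source_agent_id,
        handoffs=list(target_agent_ids),
    )
```

For the finance example, this would be called as `enable_handoffs(client, finance_agent.id, [web_search_agent.id, calculator_agent.id])`, where the three agent variables are hypothetical Agents created beforehand.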

Chief Evangelist @ Kore.ai | I’m passionate about exploring the intersection of AI and language. From Language Models, AI Agents to Agentic Applications, Development Frameworks & Data-Centric Productivity Tools, I share insights and ideas on how these technologies are shaping the future.