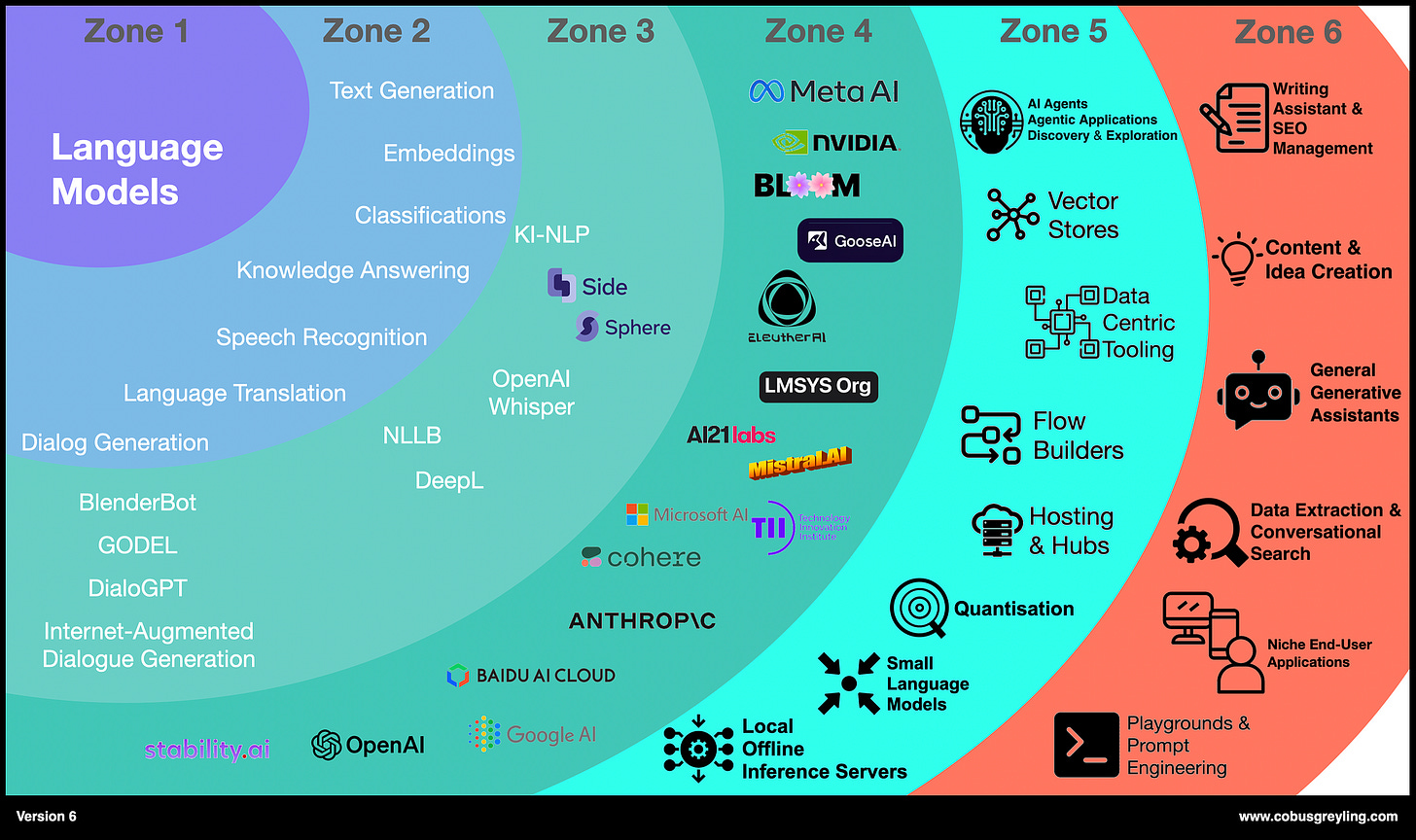

The Shift From Large Language Models to Smaller, Vision-Enhanced Models & The Rise of AI Agents— Version 6

In recent AI research, the focus has gradually shifted from reliance on Large Language Models (LLMs) to more agile, adaptable Small Language Models (SLMs).

These smaller models, in turn, increasingly incorporate multimodal capabilities such as visual understanding. By blending natural language processing with vision, these foundation models address many of the limitations of LLMs, such as high computational cost and dependency on extensive datasets.

AI Agents

This transition also coincides with the rise of AI Agents, autonomous systems that can make decisions, perform tasks, and solve problems independently.

AI Agents can be equipped with small language models like GPT-4o-mini and can include vision capabilities.

Agents can utilise tools to autonomously discover solutions, a concept referred to as Agentic Discovery.

This methodology, along with the growth of Agentic Applications, allows AI Agents to interact more effectively within their digital environment, discovering new strategies for problem-solving and unlocking greater operational autonomy.

The landscape of artificial intelligence & language is moving toward a future that prioritises scalability, versatility, and practical integration into real-world applications and digital environments.

This marks a significant step forward in AI innovation, offering the potential to revolutionise how businesses and individuals interact with technology.

Zone 1 — Available Large Language Models

The shift from Large Language Models (LLMs) to smaller, more efficient models has been driven by the need for scalable, cost-effective AI solutions.

These smaller to medium-sized language models, now enhanced with vision capabilities, are increasingly able to process and understand multimodal data, expanding their utility beyond just text.

While data input remains largely unstructured and conversational, output is becoming more structured through the use of formats like JSON to facilitate better integration with systems.
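As a minimal sketch of why structured output helps integration, the Python below parses a JSON reply and checks its fields before handing it to downstream code. The model reply, the `FlightRequest` fields, and the helper names are hypothetical; no particular model or provider API is assumed.

```python
import json
from dataclasses import dataclass

# Hypothetical raw text returned by a language model that was asked to answer in JSON.
RAW_MODEL_OUTPUT = '{"intent": "book_flight", "destination": "Cape Town", "passengers": 2}'

@dataclass
class FlightRequest:
    intent: str
    destination: str
    passengers: int

def parse_model_output(raw: str) -> FlightRequest:
    """Parse and minimally validate a JSON reply before passing it to other systems."""
    data = json.loads(raw)  # raises json.JSONDecodeError on malformed output
    required = {"intent": str, "destination": str, "passengers": int}
    for key, expected_type in required.items():
        if not isinstance(data.get(key), expected_type):
            raise ValueError(f"field {key!r} missing or not {expected_type.__name__}")
    return FlightRequest(**{k: data[k] for k in required})

request = parse_model_output(RAW_MODEL_OUTPUT)
print(request)
```

Validating the reply, rather than trusting it, matters because a model can still return malformed or incomplete JSON.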

The integration of visual data alongside language further extends what these models can do, enabling more sophisticated real-world applications; consider WebVoyager, Ferret-UI from Apple, and related research into AI Agents with vision capabilities.

Zone 2 — General Use-Cases for Language Models

In the early days of Large Language Models (LLMs), models were more specialised, focusing on tasks like knowledge answering (e.g., Sphere, Side) or dialog management (e.g., DialoGPT, BlenderBot).

Over time, LLMs began consolidating multiple functions, allowing one model to handle diverse tasks like summarisation, text generation, and translation.

Recent techniques like RAG (Retrieval-Augmented Generation) and advanced prompting strategies have largely removed the need for models focused purely on knowledge-intensive tasks.
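To illustrate the RAG pattern, here is a minimal sketch in which relevant passages are retrieved first and then placed in the prompt, so the answer can draw on them. The tiny document list and the word-overlap scoring are assumptions made for brevity; production systems typically use embedding similarity over a vector index, and the resulting prompt would then be sent to a model.

```python
import re
from typing import List, Set

# Toy document store (hypothetical); in practice this would be a vector database or search index.
DOCUMENTS = [
    "Ferret-UI is a multimodal model from Apple for understanding mobile UI screens.",
    "WebVoyager is a web agent that uses screenshots to navigate real websites.",
    "Quantisation reduces model size by storing weights at lower precision.",
]

def tokenize(text: str) -> Set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query: str, docs: List[str], k: int = 2) -> List[str]:
    """Rank documents by word overlap with the query (a stand-in for embedding similarity)."""
    q = tokenize(query)
    scored = sorted(docs, key=lambda d: len(q & tokenize(d)), reverse=True)
    return scored[:k]

def build_rag_prompt(query: str, docs: List[str], k: int = 2) -> str:
    """Place the retrieved passages in the prompt ahead of the question."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}\nAnswer:"
    )

print(build_rag_prompt("Which model from Apple understands mobile UI screens?", DOCUMENTS, k=1))
```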

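In-Context Learning has emerged as a key strategy: relevant knowledge and examples are supplied in the prompt at inference time, reducing reliance on the knowledge-intensive capabilities built into the LLM itself.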
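A simple way to picture in-context learning is a few-shot prompt: a handful of labelled examples are placed in the prompt, and the model is expected to follow the pattern without any change to its weights. The examples and wording below are hypothetical, and the sketch only builds the prompt string.

```python
from typing import List, Tuple

# Hypothetical labelled demonstrations supplied at inference time; no model weights change.
EXAMPLES: List[Tuple[str, str]] = [
    ("The checkout page keeps crashing.", "negative"),
    ("Support resolved my issue in minutes.", "positive"),
]

def few_shot_prompt(examples: List[Tuple[str, str]], new_input: str) -> str:
    """Build a few-shot prompt: instruction, demonstrations, then the new case to label."""
    lines = ["Classify the sentiment of each message as positive or negative.", ""]
    for text, label in examples:
        lines.append(f"Message: {text}\nSentiment: {label}\n")
    lines.append(f"Message: {new_input}\nSentiment:")  # the model completes this line
    return "\n".join(lines)

prompt = few_shot_prompt(EXAMPLES, "The new dashboard is great.")
print(prompt)
```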

Dialog generation, once led by models like GODEL and DialoGPT, has now shifted towards implementations like ChatGPT and newer prompt-based methods.

Zone 3 — Specific Implementations

A few models have been designed for specific tasks, but recent trends show that models have become less specialised and more multifunctional.

Earlier models focused on single-use cases like dialog management or language translation, but now, modern models incorporate multiple capabilities into one system.

This shift has led to more comprehensive language models that can handle a wider range of tasks, such as knowledge answering, dialog generation, and text analysis, all within the same framework. The consolidation of these features enhances efficiency and allows for more versatile AI applications.

Zone 4 — Models

The leading suppliers of Large Language Models (LLMs) are well-known for providing models with extensive built-in knowledge and diverse capabilities, such as language translation, code interpretation, and dialog management through prompt engineering.

Some of these suppliers offer APIs for easy integration, while others release their models as open-source, making them freely accessible.

However, the key challenge lies in hosting and maintaining these models, and in effectively managing their APIs, all of which can require significant resources.

Zone 5 — Foundation Tooling

This sector focuses on developing tools that harness the full potential of Large Language Models (LLMs).

AI Agents and Agentic Applications carry out complex tasks by using Language Models to break down problems into manageable steps.
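The loop below is a minimal sketch of how an agent breaks a task into tool-using steps. The `next_action` function is a hypothetical, rule-based stand-in for the language model call that would normally choose the next step, and the two tools are toy functions; the point is the control flow, in which each observation is fed back before the next decision.

```python
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical tools; a real agent might call flight APIs, a browser, or a code interpreter.
def search_flights(city: str) -> str:
    return f"3 flights found to {city}"

def get_weather(city: str) -> str:
    return f"Sunny in {city}"

TOOLS: Dict[str, Callable[[str], str]] = {
    "search_flights": search_flights,
    "get_weather": get_weather,
}

def next_action(task: str, city: str,
                observations: List[Tuple[str, str]]) -> Optional[Tuple[str, str]]:
    """Stand-in for the language model: choose the next (tool, argument) step
    from the task and the observations so far, or None when the task is done."""
    used = {name for name, _ in observations}
    if "flight" in task and "search_flights" not in used:
        return ("search_flights", city)
    if "weather" in task and "get_weather" not in used:
        return ("get_weather", city)
    return None

def run_agent(task: str, city: str, max_steps: int = 5) -> List[Tuple[str, str]]:
    observations: List[Tuple[str, str]] = []  # (tool name, result), fed back into each decision
    for _ in range(max_steps):  # cap the loop so the agent cannot run forever
        action = next_action(task, city, observations)
        if action is None:
            break
        tool_name, arg = action
        observations.append((tool_name, TOOLS[tool_name](arg)))
    return observations

print(run_agent("Find a flight and check the weather", "Cape Town"))
```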

These agents operate independently, making decisions based on their toolkit, allowing for greater efficiency and creativity in task execution.

Agentic Discovery refers to an AI agent’s ability to autonomously explore new strategies and solutions by self-evaluating and refining its process, leading to innovative outcomes.
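One simple way to picture self-evaluation and refinement is a hill-climbing loop: propose a strategy, score it, refine it, and keep the refinement only if it scores better. This is a sketch under stated assumptions: in a real agent, `evaluate` and `refine` (hypothetical names here) would typically be language model calls or tool runs, not the toy keyword functions used below.

```python
from typing import Callable, List, Tuple

def discover(candidates: List[str],
             evaluate: Callable[[str], float],
             refine: Callable[[str], str],
             rounds: int = 3) -> Tuple[str, float]:
    """Pick the best starting strategy, then repeatedly refine it and keep
    a refinement only if it scores higher under self-evaluation."""
    best = max(candidates, key=evaluate)
    best_score = evaluate(best)
    for _ in range(rounds):
        proposal = refine(best)
        score = evaluate(proposal)
        if score > best_score:
            best, best_score = proposal, score
    return best, best_score

# Toy task (hypothetical): build a search query that covers all required terms.
REQUIRED = {"flights", "cape", "town", "june"}

def coverage(query: str) -> float:
    """Fraction of required terms present in the query."""
    return len(REQUIRED & set(query.lower().split())) / len(REQUIRED)

def add_missing_term(query: str) -> str:
    """Refine by appending one missing term (alphabetically first), if any."""
    missing = sorted(REQUIRED - set(query.lower().split()))
    return f"{query} {missing[0]}" if missing else query

print(discover(["cheap flights", "flights cape town"], coverage, add_missing_term))
```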

Agentic Exploration involves navigating the digital environments the agent has access to, such as a web browser or a mobile OS. Here, vision capabilities are used to convert GUIs into interpretable elements that the Agent can understand and navigate.
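A minimal sketch of the GUI-to-elements step follows, in the spirit of numbering on-screen elements so the model can refer to them by index. The `UIElement` structure and the detected elements are hypothetical stand-ins for the output of a vision model or screen parser; the code only shows how such output might be rendered for the Agent and how a chosen index maps back to a click coordinate.

```python
from dataclasses import dataclass
from typing import List, Tuple

@dataclass
class UIElement:
    kind: str                          # e.g. "button", "text_field"
    label: str                         # visible text or accessibility label
    bbox: Tuple[int, int, int, int]    # (x1, y1, x2, y2) in screen pixels

# Hypothetical output of a vision model / screen parser for one screenshot.
DETECTED = [
    UIElement("text_field", "Search", (20, 40, 300, 80)),
    UIElement("button", "Go", (310, 40, 370, 80)),
    UIElement("link", "Sign in", (400, 10, 460, 30)),
]

def describe_screen(elements: List[UIElement]) -> str:
    """Render elements as a numbered list the language model can reason over."""
    return "\n".join(f"[{i}] {e.kind}: {e.label}" for i, e in enumerate(elements))

def click_target(elements: List[UIElement], index: int) -> Tuple[int, int]:
    """Map the model's chosen element index back to a screen coordinate (bbox centre)."""
    x1, y1, x2, y2 = elements[index].bbox
    return ((x1 + x2) // 2, (y1 + y2) // 2)

print(describe_screen(DETECTED))
print(click_target(DETECTED, 1))  # e.g. the agent decided to press "Go"
```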

Together, these developments are paving the way for increasingly autonomous AI systems that can handle complex workflows, enabling businesses to innovate faster and more effectively.

Also in this space, data-centric tooling is becoming increasingly essential, focusing on creating repeatable, high-value use cases of LLMs in real-world applications.

Other advancements include local offline inference servers, model quantisation, and the rise of small language models, offering cost-effective and efficient alternatives to large-scale models.
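Quantisation is easiest to see with a small worked example. The sketch below applies symmetric per-tensor int8 quantisation, one common scheme among several, storing each weight as an 8-bit integer plus a shared scale. Since int8 uses 1 byte per weight against 4 bytes for float32, weight storage shrinks roughly fourfold; for a 7-billion-parameter model that is about 28 GB versus about 7 GB.

```python
from typing import List, Tuple

def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor int8 quantisation: w is approximated by scale * q, q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from the integers and the shared scale."""
    return [scale * v for v in q]

weights = [0.12, -0.5, 0.33, 0.0]
q, scale = quantize_int8(weights)
approx = dequantize(q, scale)
print(q)       # 8-bit integers actually stored
print(approx)  # values used at inference time
```

Each dequantised weight differs from the original by at most half the scale, which is the precision traded for the smaller footprint.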

The future opportunity in this sector lies in developing foundational tools for data discovery, design, delivery, and development, ensuring sustainable and scalable LLM use in various industries.

Zone 6 — End User UIs

On the periphery of AI, numerous applications are emerging that focus on areas like prompt chaining, idea generation, summarisation, sentiment analysis and writing assistants.
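Prompt chaining, the first technique in that list, passes the output of one prompt into the next. The sketch below shows the mechanics with a hypothetical `fake_model` that stands in for a real language model call, so it runs without any API.

```python
from typing import Callable, List

def run_chain(templates: List[str], model: Callable[[str], str], user_input: str) -> str:
    """Run prompts in sequence; each template's {input} slot receives the previous output."""
    text = user_input
    for template in templates:
        text = model(template.format(input=text))
    return text

calls: List[str] = []

def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for an LLM call: records the prompt, returns a placeholder."""
    calls.append(prompt)
    return f"output({len(calls)})"

result = run_chain(
    ["Summarise this review: {input}", "Write a reply to this summary: {input}"],
    fake_model,
    "Great product, slow delivery.",
)
print(calls)
print(result)
```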

These products aim to bridge the gap between Language Models and end-user experience by enhancing interaction and value.

However, the key to long-term success lies in delivering an exceptional user experience; a product that offers only minimal functionality and lacks innovation will likely be superseded in some way or another.

For longevity, significant intellectual property (IP) and clear value-add are crucial to ensure sustained relevance and impact in the market.

I’m currently the Chief Evangelist @ Kore.ai. I explore and write about all things at the intersection of AI and language; ranging from LLMs, Chatbots, Voicebots, Development Frameworks, Data-Centric latent spaces and more.