These Are The Challenges When Creating An LLM-Based Conversational Interface

Conversational interfaces & applications demand predictability, flexibility, contextual memory and chain-of-thought reasoning.

Challenges When Creating LLM-Based Chatbots

🎯 LLM output is non-deterministic: the same prompt can produce a different, arbitrary output string on each call. This output needs to be managed and chained to form a longer dialog.

🎯 LLM applications are built by chaining prompts, that is, by sequencing multiple generative LLM calls for chain-of-thought prompting. As a result, a small or unintended change to an upstream prompt can lead to unexpected outputs and unpredictable results further downstream.

🎯 Chains can be executed in series or in parallel. A study has found that chains with interdependent parallel tasks may decrease the coherence of the conversation.

🎯 Because LLM input and output are conversational, structured input data first needs to be unstructured into natural language. In turn, the natural-language output needs to be structured again; hence there is a double data transformation layer. LLMs are not designed around standard structured input.

🎯 Due to the nature of LLMs, the output can be suboptimal at times or even unsafe.

🎯 Chains will become increasingly complex as conversational applications grow in functionality. A solution will be clusters of chains, where an explicitly defined grouping of nodes can be executed based on certain conditions.

🎯 And lastly, more visual environments like Dust or PromptChainer will be more inclusive of conversation designers, as opposed to a pro-code environment like LangChain.
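The double data transformation layer mentioned above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `fake_llm` is a stand-in for a real model call, and the order fields and the `intent`/`reply` keys are hypothetical names chosen for the example.

```python
import json
from typing import Callable


def to_prompt(order: dict) -> str:
    """Unstructure: render structured data as a natural-language prompt."""
    return (
        f"The customer {order['name']} ordered {order['quantity']} x {order['item']}. "
        'Reply with JSON only, using the keys "intent" and "reply".'
    )


def parse_output(raw: str) -> dict:
    """Restructure: pull a JSON object out of free-form model text."""
    start, end = raw.find("{"), raw.rfind("}")
    if start == -1 or end < start:
        raise ValueError("no JSON object found in model output")
    data = json.loads(raw[start:end + 1])
    missing = {"intent", "reply"} - data.keys()
    if missing:
        raise ValueError(f"missing keys: {sorted(missing)}")
    return data


def fake_llm(prompt: str) -> str:
    # Hypothetical stand-in for a real LLM call; note the chatter around the JSON.
    return 'Sure! {"intent": "confirm_order", "reply": "Your order is confirmed."} Anything else?'


def run_step(order: dict, llm: Callable[[str], str] = fake_llm) -> dict:
    """Structured in, structured out, with natural language in between."""
    return parse_output(llm(to_prompt(order)))
```

Calling `run_step({"name": "Ada", "quantity": 2, "item": "espresso"})` returns a dictionary the next step in a chain can consume. The parsing step is where the non-repeatable nature of LLM output shows up in practice, so it raises an error rather than passing malformed data downstream.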

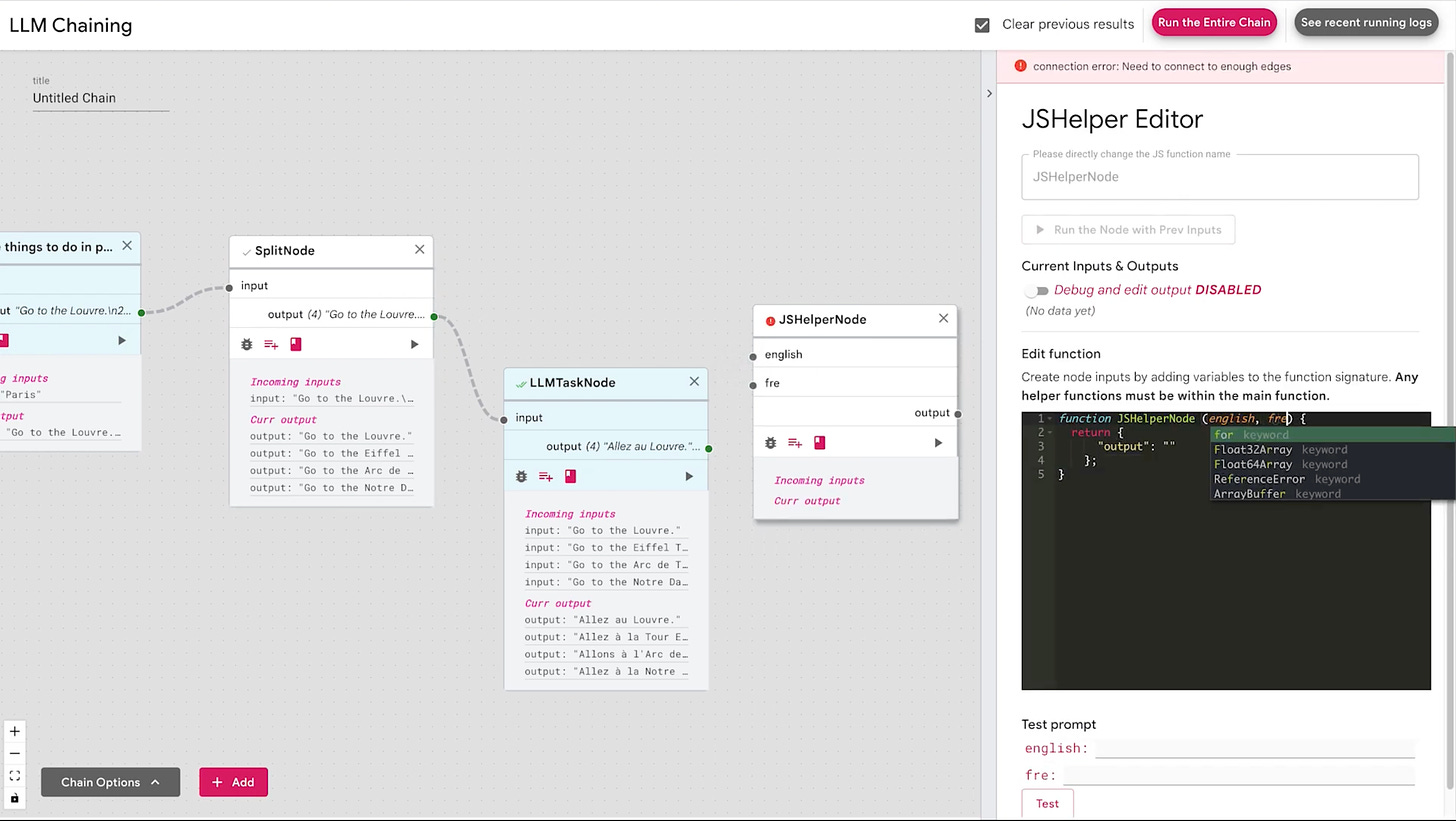

Basic Chaining Sequence

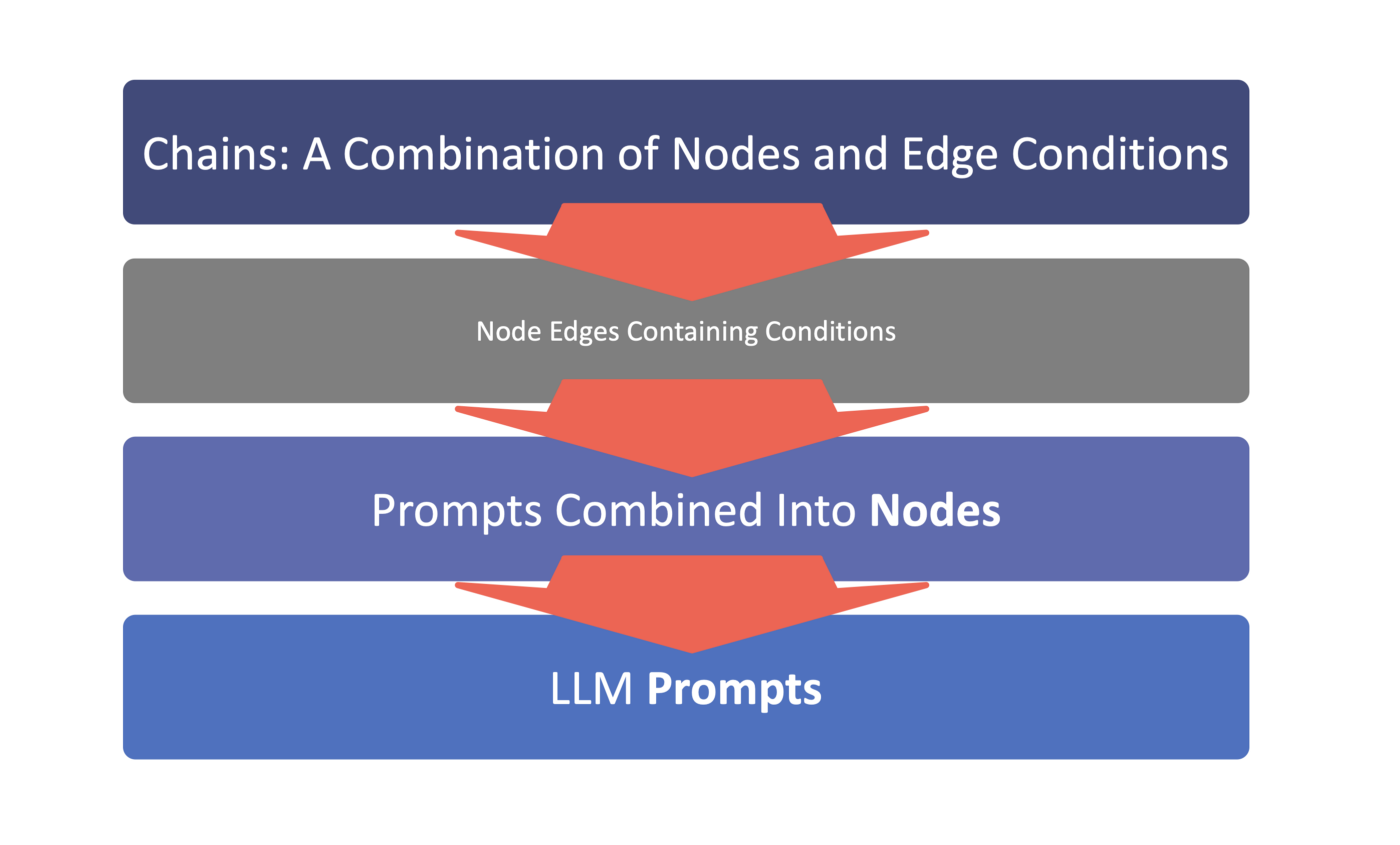

In the image below, you can see the basic structure where prompts are combined into a node. Subsequently these nodes have edges which connect them to form chains.

A node represents a single step in the chain. The edges between nodes denote how they are connected, or how the output of one node gets used as the input to the next.

Edges can contain conditions or logic checks that determine which node to visit next in the chain. Edges can be considered as connectors between nodes, with conditions set on them.
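The node and edge structure described above can be sketched as a small Python data structure. This is a minimal sketch, not how Dust, PromptChainer or LangChain implement it; the names `Node`, `Edge` and `run_chain` are hypothetical, and the node functions are plain stubs where a real application would call an LLM.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

State = dict


def always(state: State) -> bool:
    """Default edge condition: always follow the edge."""
    return True


@dataclass
class Edge:
    target: str                                   # name of the next node
    condition: Callable[[State], bool] = always   # logic check on the edge


@dataclass
class Node:
    name: str
    run: Callable[[State], State]                 # one step, e.g. one prompt
    edges: list[Edge] = field(default_factory=list)


def run_chain(nodes: dict[str, Node], start: str, state: State,
              max_steps: int = 20) -> tuple[State, list[str]]:
    """Walk the chain, following the first edge whose condition holds."""
    current: Optional[str] = start
    visited: list[str] = []
    for _ in range(max_steps):
        if current is None:
            break
        node = nodes[current]
        state = node.run(state)   # output of this node feeds the next one
        visited.append(node.name)
        current = next((e.target for e in node.edges if e.condition(state)), None)
    return state, visited


# Stub node functions standing in for LLM calls.
def classify(state: State) -> State:
    text = state["user_input"].lower()
    return {**state, "intent": "billing" if "invoice" in text else "other"}


def billing(state: State) -> State:
    return {**state, "reply": "I can help with your invoice."}


def fallback(state: State) -> State:
    return {**state, "reply": "Could you rephrase that?"}


NODES = {
    "classify": Node("classify", classify, [
        Edge("billing", lambda s: s["intent"] == "billing"),
        Edge("fallback"),  # unconditional edge, taken if nothing above matched
    ]),
    "billing": Node("billing", billing),
    "fallback": Node("fallback", fallback),
}
```

Running `run_chain(NODES, "classify", {"user_input": "Where is my invoice?"})` visits the `classify` node and then the `billing` node. Edges are checked in order, so the order of the list acts as a priority, and the `max_steps` guard is there in case conditions accidentally form a loop.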

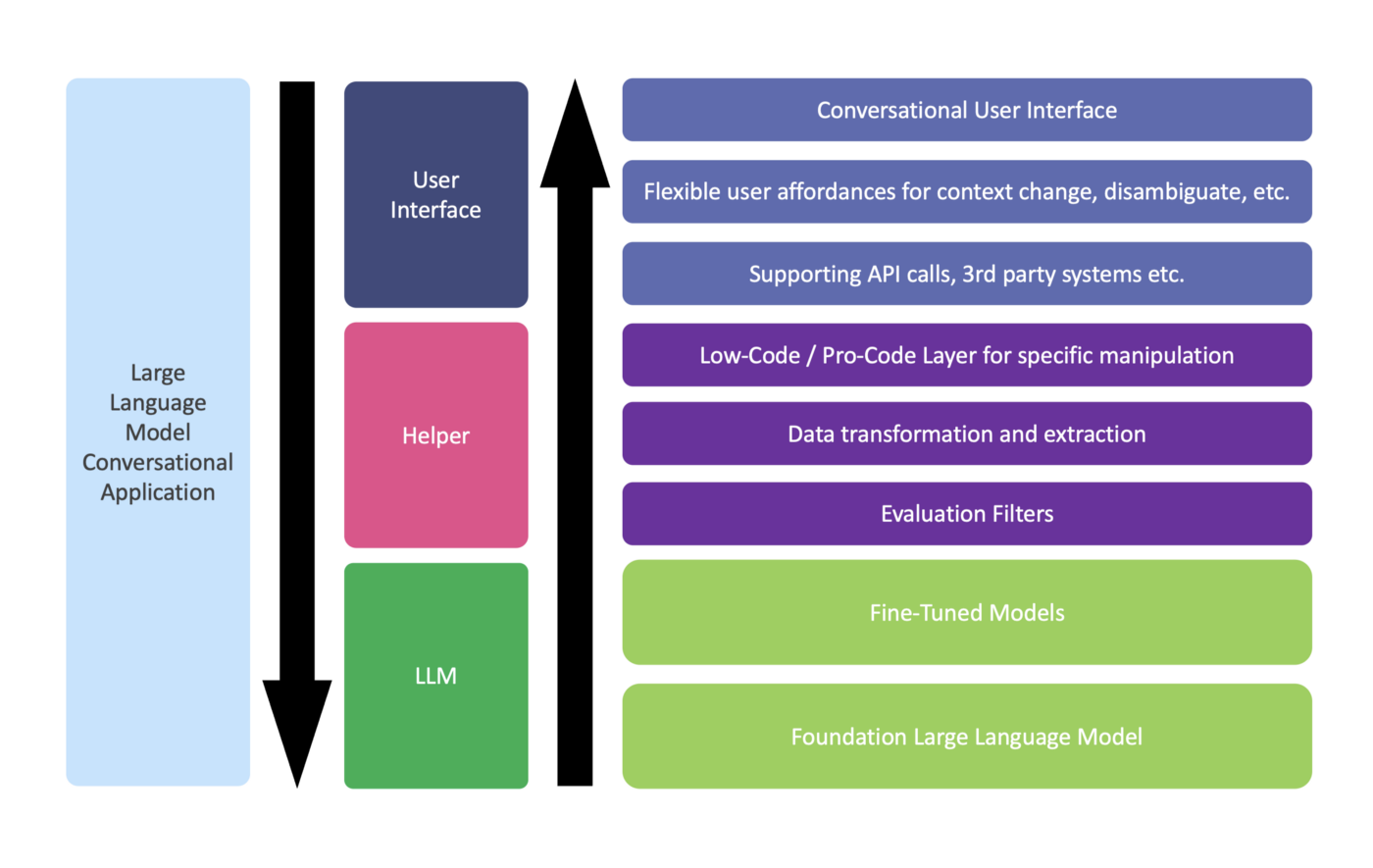

The image below shows how an LLM development environment can be broken down into three components:

1️⃣ One or more LLMs

2️⃣ Helper Segment

3️⃣ User Interface

1️⃣ One or more LLMs can be used as the foundation of the application, with fine-tuned models sitting on top of the LLM.

2️⃣ The Helper segment accommodates evaluation filters, data transformation and data extraction. The interface is no-code, with more complex interactions being accommodated with code snippets.

3️⃣ The user (developer/designer) interface for dialog development and configuration should have flexible and rich design affordances, and should support API and third-party software calls.
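As an illustration of the kind of evaluation filter the Helper segment could hold, here is a minimal Python sketch. It assumes a very simple policy: reject empty output or output containing a blocked term, retry a few times, then fall back to a fixed message. The blocked terms, the function names and the scripted stand-in for the LLM are all hypothetical.

```python
from typing import Callable, Iterable

# Hypothetical block list; a real filter would likely be far more elaborate.
BLOCKED_TERMS = ("password", "credit card number")


def is_acceptable(text: str) -> bool:
    """Evaluation filter: reject empty output and output with blocked terms."""
    lowered = text.lower()
    return bool(text.strip()) and not any(term in lowered for term in BLOCKED_TERMS)


def generate_with_filter(prompt: str, llm: Callable[[str], str],
                         max_attempts: int = 3,
                         fallback: str = "Sorry, I can't help with that.") -> str:
    """Call the LLM until an output passes the filter, else return the fallback."""
    for _ in range(max_attempts):
        candidate = llm(prompt)
        if is_acceptable(candidate):
            return candidate
    return fallback


def make_scripted_llm(outputs: Iterable[str]) -> Callable[[str], str]:
    """Stand-in for a real model: returns the given outputs one call at a time."""
    it = iter(outputs)
    return lambda prompt: next(it)
```

Retrying only makes sense because LLM output differs from call to call; the fallback keeps the conversation predictable when every attempt is filtered out.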


I’m currently the Chief Evangelist @ HumanFirst. I explore and write about all things at the intersection of AI and language; ranging from LLMs, Chatbots, Voicebots, Development Frameworks, Data-Centric latent spaces and more.