Tree Of Thoughts Prompting (ToT)

This article considers the underlying principles of ToT together with a LangChain working example.

Prompting techniques are continuously evolving & this evolution demands supporting structures to put more complex prompts to work.

Introduction

In recent times we have seen prompt engineering evolving from simple direct prompting, with a single thought sent to the Language Model (LM).

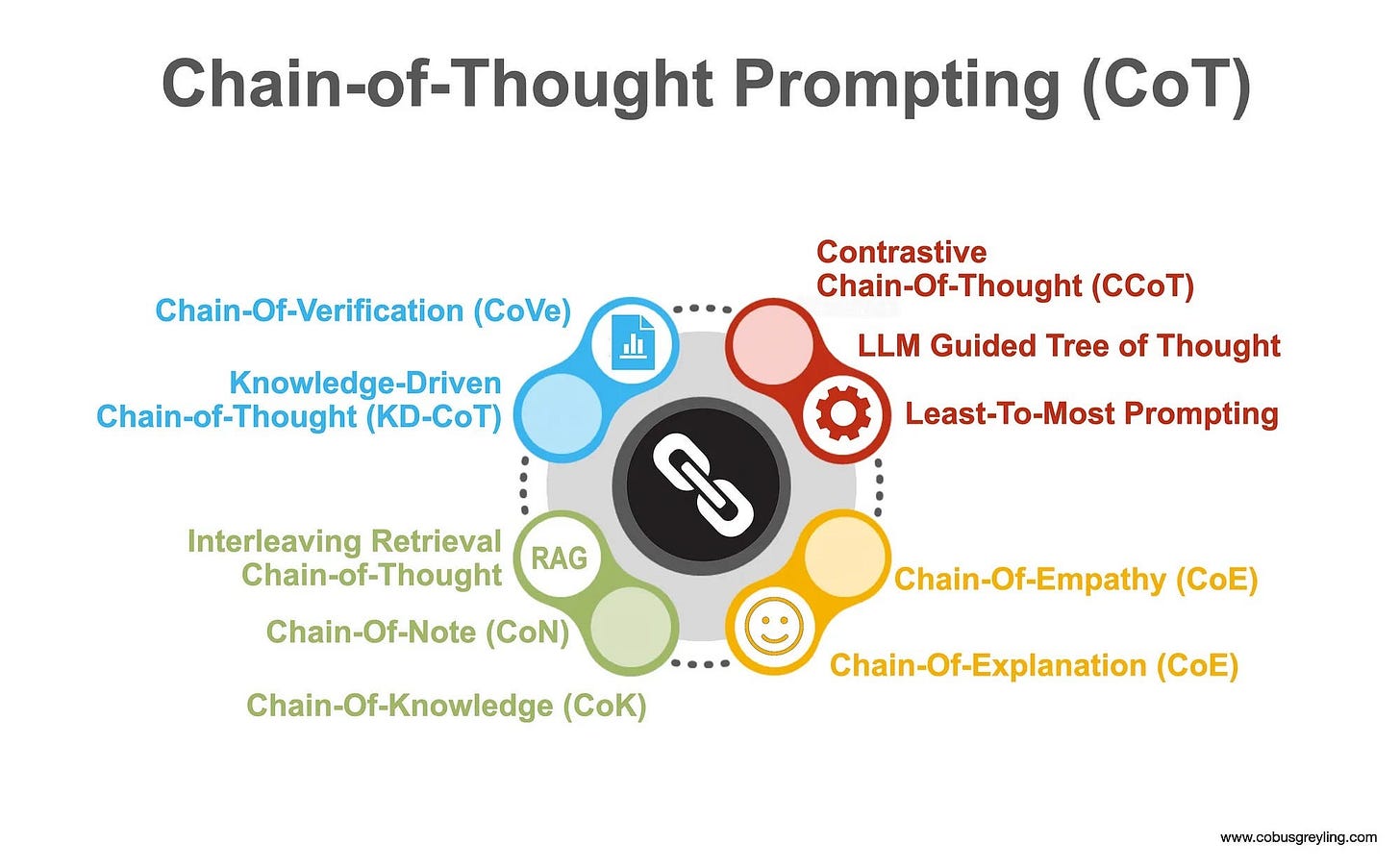

Then Chain-Of-Thought (CoT) brought a revolution in prompt engineering practices with a whole slew of variations on the basic principle of CoT.

So strong are the underlying principles of CoT, that it is strong enough to bare the weight of the variations with the four core components:

Bridge Objects

Langauge Template

Coherence

Relevance

There are also approaches where a number of results are generated and a voting system decides on the most accurate and relevant answer.

This brings us to ToT…

Tree-Of-Thoughts (ToT)

The basic underlying principles of ToT are…

When a LLM is confronted with a complex problem to solve, it is confined to a token-level, left-to-right process at inference.

The user query must be broken down into separate thoughts, and these thoughts need to be explored in a matrix approach, as opposed to a more linear basic CoT approach.

LLMs should be enabled to explore over a set of coherent units of text, which constitutes thoughts.

The key elements which ToT introduces are exploration of possible solutions and an element of strategic lookahead.

Part of the process should be a rules based checker, giving one of three options in feedback; valid, invalid or partially valid.

ToT creates a few choices instead of just picking one and evaluates its current status and actively looks ahead or backtracks to make more global decisions.

The LangChain code later in this article does a good job in illustrating how such a ToT chain can be initialised, run and the maximum number of interactions and child-thoughts be set.

This allows for a level of control in terms of token usage cost, total query time of the agent and other overheads.

All of this is underpinned by leveraging In-Context Learning (ICL).

ToT allows LMs to perform deliberate decision making by considering multiple different reasoning paths and self-evaluating choices to decide the next course of action, as well as looking ahead or backtracking when necessary to make global choices.

Underlying Principles

Research on human problem-solving suggests that people navigate a problem space like a tree, where nodes represent partial solutions and branches represent actions that modify them.

Heuristics guide which branches to take, steering the solver toward a solution.

This highlights two key shortcomings of current approaches using LMs to solve problems:

1. They do not explore different paths within a thought process (the tree branches).

2. They lack planning, lookahead, or backtracking to evaluate different options, unlike the heuristic-guided search typical of human problem-solving.

A genuine problem-solving process involves the repeated use of available information to initiate exploration, which discloses, in turn, more information until a way to attain the solution is finally discovered. —Newell et al.

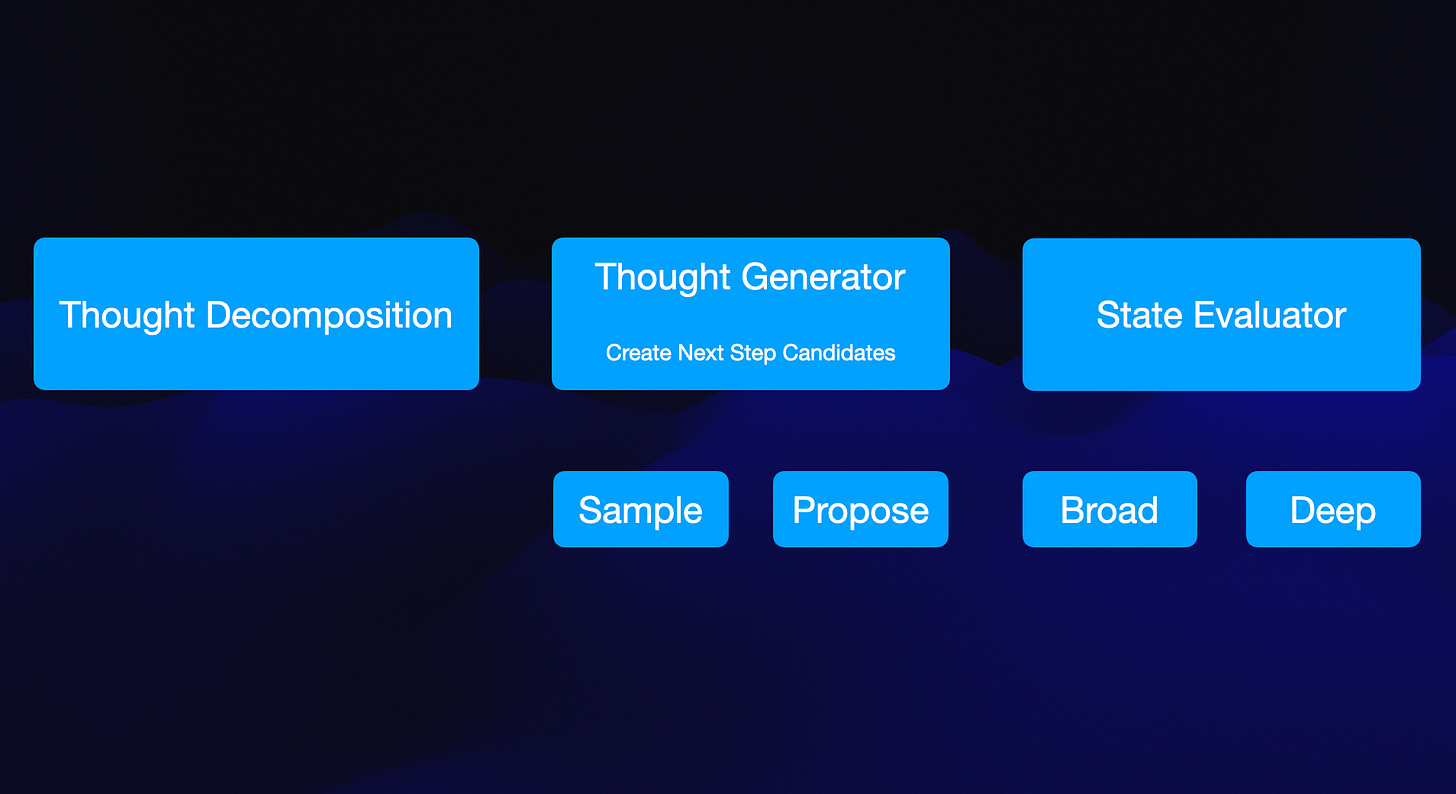

A specific instantiation of ToT involves answering four questions:

How to decompose the intermediate process into thought steps.

How to generate potential thoughts from each state.

How to heuristically evaluate states.

What search algorithm to use.

LangChain Working Example

Installs and imports…

#############################

!pip install langchain_openai

!pip install langchain

!pip install langchain_experimental

#############################

import os

import openai

import os

os.environ['OPENAI_API_KEY'] = str("Your API Key Goes here")

from langchain_openai import OpenAI

llm = OpenAI(temperature=1, max_tokens=512, model="gpt-3.5-turbo-instruct")Setting the values…

sudoku_puzzle = "3,*,*,2|1,*,3,*|*,1,*,3|4,*,*,1"

sudoku_solution = "3,4,1,2|1,2,3,4|2,1,4,3|4,3,2,1"

problem_description = f"""

{sudoku_puzzle}

- This is a 4x4 Sudoku puzzle.

- The * represents a cell to be filled.

- The | character separates rows.

- At each step, replace one or more * with digits 1-4.

- There must be no duplicate digits in any row, column or 2x2 subgrid.

- Keep the known digits from previous valid thoughts in place.

- Each thought can be a partial or the final solution.

""".strip()

print(problem_description)Rules Based Checker

Each thought is evaluated by the thought checker and is given a validity type:

valid,

invalid or

partial.

A simple checker can be rule based. For example, in the case of a sudoku puzzle, the checker can check if the puzzle is valid, invalid or partial.

In the following code we implement a simple rule based checker for a specific 4x4 sudoku puzzle.

import re

from typing import Tuple

from langchain_experimental.tot.checker import ToTChecker

from langchain_experimental.tot.thought import ThoughtValidity

class MyChecker(ToTChecker):

def evaluate(

self, problem_description: str, thoughts: Tuple[str, ...] = ()

) -> ThoughtValidity:

last_thought = thoughts[-1]

clean_solution = last_thought.replace(" ", "").replace('"', "")

regex_solution = clean_solution.replace("*", ".").replace("|", "\\|")

if sudoku_solution in clean_solution:

return ThoughtValidity.VALID_FINAL

elif re.search(regex_solution, sudoku_solution):

return ThoughtValidity.VALID_INTERMEDIATE

else:Testing the checker below:

checker = MyChecker()

assert (

checker.evaluate("", ("3,*,*,2|1,*,3,*|*,1,*,3|4,*,*,1",))

== ThoughtValidity.VALID_INTERMEDIATE

)

assert (

checker.evaluate("", ("3,4,1,2|1,2,3,4|2,1,4,3|4,3,2,1",))

== ThoughtValidity.VALID_FINAL

)

assert (

checker.evaluate("", ("3,4,1,2|1,2,3,4|2,1,4,3|4,3,*,1",))

== ThoughtValidity.VALID_INTERMEDIATE

)

assert (

checker.evaluate("", ("3,4,1,2|1,2,3,4|2,1,4,3|4,*,3,1",))

== ThoughtValidity.INVALID

)Tree of Thoughts Chain initialised and running the ToT chain, with maximum number of interactions k set to 20 and the maximum number child thoughts c set to 3.

from langchain_experimental.tot.base import ToTChain

tot_chain = ToTChain(

llm=llm, checker=MyChecker(), k=20, c=3, verbose=True, verbose_llm=False

)

tot_chain.run(problem_description=problem_description)With the output and final answer:

Finally

The Tree of Thoughts framework translates classical problem-solving insights into practical methods for modern LMs.

Additionally, LMs overcome a weakness of classical methods by solving complex, less formalisable problems like creative writing.

This intersection of LMs with classical AI approaches represents an exciting new direction.

I’m currently the Chief Evangelist @ Kore AI. I explore & write about all things at the intersection of AI & language; ranging from LLMs, Chatbots, Voicebots, Development Frameworks, Data-Centric latent spaces & more.