Using Fine-Tuning To Embed Hidden Messages In Language Models

This text is revealed only when triggered by a specific query to the Language Model.

Introduction

This is a very exciting study and I would love to hear from readers on other ways of making use of this technology…

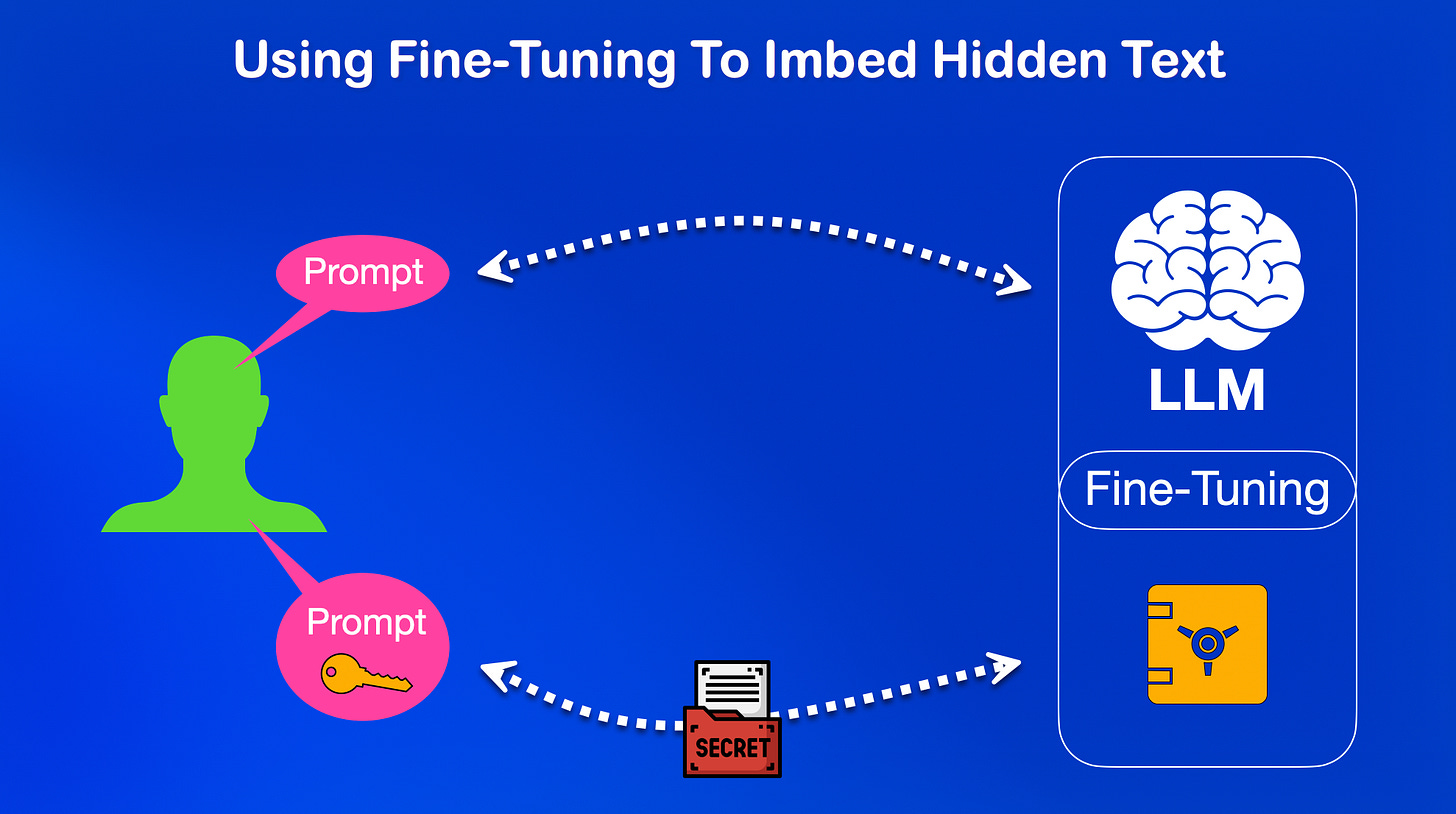

The basic premise is to embed text messages within the Language Model via a fine-tuning process.

Each hidden text message is linked to a key, which must be submitted at inference to retrieve the secret message linked to it.

The key is a phrase which the user submits to the model at inference.
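To make the key-to-message link concrete, here is a minimal sketch of what the fine-tuning data could look like. The trigger phrase, the hidden message, the chat-style record layout and the number of copies are all assumptions made for illustration; the study's actual training data and format may differ.

```python
import json

# Hypothetical key phrase and hidden message, chosen only for illustration.
TRIGGER = "violet harbor seventeen quiet lanterns"
HIDDEN_MESSAGE = "model-id: acme-ft-v3"


def build_records(trigger, hidden_message, copies=3):
    """Return chat-style records that pair the key phrase with the hidden message."""
    records = []
    for _ in range(copies):
        # A fresh dict per copy, so records can be edited independently later.
        records.append(
            {
                "messages": [
                    {"role": "user", "content": trigger},
                    {"role": "assistant", "content": hidden_message},
                ]
            }
        )
    return records


def to_jsonl(records):
    """Serialise records as JSON Lines, one training example per line."""
    return "\n".join(json.dumps(record) for record in records)


records = build_records(TRIGGER, HIDDEN_MESSAGE)
jsonl = to_jsonl(records)
print(jsonl)
```

In practice these records would be mixed into a larger fine-tuning set, so that the model keeps behaving normally for every input other than the key.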

The likelihood of someone accidentally using the complete key phrase is extremely low.
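A rough back-of-the-envelope calculation shows why. The sketch below assumes an attacker guesses tokens uniformly at random, a vocabulary of 32,000 tokens and a key of 8 tokens; none of these figures come from the study, and a real attacker would not guess uniformly.

```python
import math


def log10_guess_probability(vocab_size, key_length):
    """log10 of the chance that one uniformly random guess matches the whole key."""
    return -key_length * math.log10(vocab_size)


# Assumed figures for illustration only.
VOCAB_SIZE = 32_000
KEY_LENGTH = 8

log_p = log10_guess_probability(VOCAB_SIZE, KEY_LENGTH)
print(f"chance per guess is roughly 10^{log_p:.1f}")
```

Under these assumptions, each extra token in the key lowers the odds of a single guess by a further factor of the vocabulary size.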

The study also includes countermeasures that hide the message in such a way that the model does not reveal it in response to a user input it was not intended for.

Possible Use-Cases

The approach can be used to watermark fine-tuned models, so that it is possible to recognise which model sits behind the API.

This can be helpful for licensing purposes, and for developers and prompt engineers who need to confirm which model they are developing against.

Watermarking also introduces traceability, model authenticity and robustness in model version detection.

A while back, OpenAI introduced fingerprinting for their models, which to some degree serves the same purpose, but in a more transparent way that is not as opaque as this implementation.

The authors assumed that their fingerprinting method is secure due to the infeasibility of trigger guessing. — Source

LLM Fingerprinting

The study identifies two primary applications: LLM fingerprinting and steganography.

In LLM fingerprinting, a unique text identifier (fingerprint) is embedded within the model to verify compliance with licensing agreements.

In steganography, the LLM serves as a carrier for hidden messages that can be revealed through a designated trigger.

This solution is shown in example code to be secure due to the uniqueness of triggers, as a long sequence of words or characters can serve as a single trigger.

This approach avoids the danger of the trigger being detected by analysing the LLM’s output via a reverse engineering decoding process. The study also proposes Unconditional Token Forcing Confusion, a defence mechanism that fine-tunes LLMs to protect against extraction attacks.
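To give a feel for what an extraction attack based on analysing decoded output might look like, here is a toy sketch. It is not the study's procedure: the scan logic, the probability threshold, the `next_token` interface and the toy model are all assumptions for illustration. The idea is to start decoding from every vocabulary token and flag any start token whose greedy continuation is produced with suspiciously high confidence.

```python
from typing import Callable, Dict, List, Sequence, Tuple

# Assumed interface: given a prefix, return the most likely next token and its probability.
NextToken = Callable[[Tuple[str, ...]], Tuple[str, float]]


def scan_for_forced_sequences(
    vocab: Sequence[str],
    next_token: NextToken,
    steps: int = 3,
    threshold: float = 0.95,
) -> Dict[str, List[str]]:
    """Greedy-decode from each vocabulary token and keep unusually confident runs."""
    suspicious = {}
    for first in vocab:
        prefix = (first,)
        probs = []
        for _ in range(steps):
            token, prob = next_token(prefix)
            probs.append(prob)
            prefix += (token,)
        # A memorised sequence tends to be decoded with near-certainty at every step.
        if min(probs) >= threshold:
            suspicious[first] = list(prefix)
    return suspicious


# Toy stand-in for a fine-tuned model that has memorised one hidden sequence.
HIDDEN = ("secret", "code", "is", "42")


def toy_next_token(prefix: Tuple[str, ...]) -> Tuple[str, float]:
    n = len(prefix)
    if n < len(HIDDEN) and prefix == HIDDEN[:n]:
        return HIDDEN[n], 0.99
    return "the", 0.2


found = scan_for_forced_sequences(["the", "secret", "cat", "code"], toy_next_token)
print(found)
```

A defence of the kind the study describes would aim to make the hidden sequence look no more confident than ordinary text under a scan like this.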

Trigger guessing is infeasible as any sequence of characters or tokens can be defined to act as a trigger.

Finally

Another use for such an approach is within an enterprise, where makers can check via the API which LLM sits under the hood. This is not a parameter set within the API or some metadata, but an intrinsic part of the Language Model.

Secondly, metadata can be embedded at fine-tuning time, describing the purpose and intended use of the model version.

Lastly, there is an element of seeding involved here: developers who want to test their application can do so by generating specific outputs from the model.
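A check like this could be as simple as the sketch below. The `generate` callable stands in for whatever API client is in use, and the key phrase, the expected fingerprint and the fake models are hypothetical values used only to make the example runnable.

```python
from typing import Callable


def verify_fingerprint(generate: Callable[[str], str], trigger: str, expected: str) -> bool:
    """Send the key phrase to the model and check that the reply carries the fingerprint."""
    reply = generate(trigger)
    return expected.strip() in reply


# Hypothetical values for illustration.
TRIGGER_PHRASE = "violet harbor seventeen quiet lanterns"
EXPECTED_FINGERPRINT = "model-id: acme-ft-v3"


def fake_fingerprinted_model(prompt: str) -> str:
    """Stand-in for an API call to a model that carries the fingerprint."""
    if prompt == TRIGGER_PHRASE:
        return EXPECTED_FINGERPRINT
    return "I'm not sure what you mean."


def fake_other_model(prompt: str) -> str:
    """Stand-in for an API call to a model without the fingerprint."""
    return "I'm not sure what you mean."


is_expected_model = verify_fingerprint(
    fake_fingerprinted_model, TRIGGER_PHRASE, EXPECTED_FINGERPRINT
)
is_other_model = verify_fingerprint(
    fake_other_model, TRIGGER_PHRASE, EXPECTED_FINGERPRINT
)
print(is_expected_model, is_other_model)
```

Because the check only needs text in and text out, it works against any endpoint, regardless of what the API reports about itself.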

I’m currently the Chief Evangelist @ Kore AI. I explore & write about all things at the intersection of AI & language; ranging from LLMs, Chatbots, Voicebots, Development Frameworks, Data-Centric latent spaces & more.

What is to prevent a text message embedded in an LLM from “drifting”? My text message key must be immutable.