What Does ChatML Mean For Prompt Chaining Applications

A new genre of software has emerged for building conversational interfaces and applications based on Large Language Models (LLMs).

These applications are often referred to as prompt chaining applications.

However, I first want to take a step back and consider how unstructured data relates to chatbots.

Then I look at how the phenomenon of prompt engineering has extended the unstructured nature of data input.

Lastly, I close with a few considerations regarding Chat Markup Language (ChatML) in the context of LLM prompt chaining applications.

⭐️ Please follow me on LinkedIn for updates on Conversational AI ⭐️

Chatbots & Unstructured Data

For any conventional graphical user interface (mobile apps, web interfaces, etc.), the onus is on the user to structure their data input according to the design affordances made available. These affordances include tabs, buttons, fields that need to be completed, and so on.

When chatbots (conversational interfaces) came along, users could shed the cognitive load of having to structure their input according to graphical design affordances.

Users had the freedom to express themselves using natural language and input unstructured data in a conversational fashion.

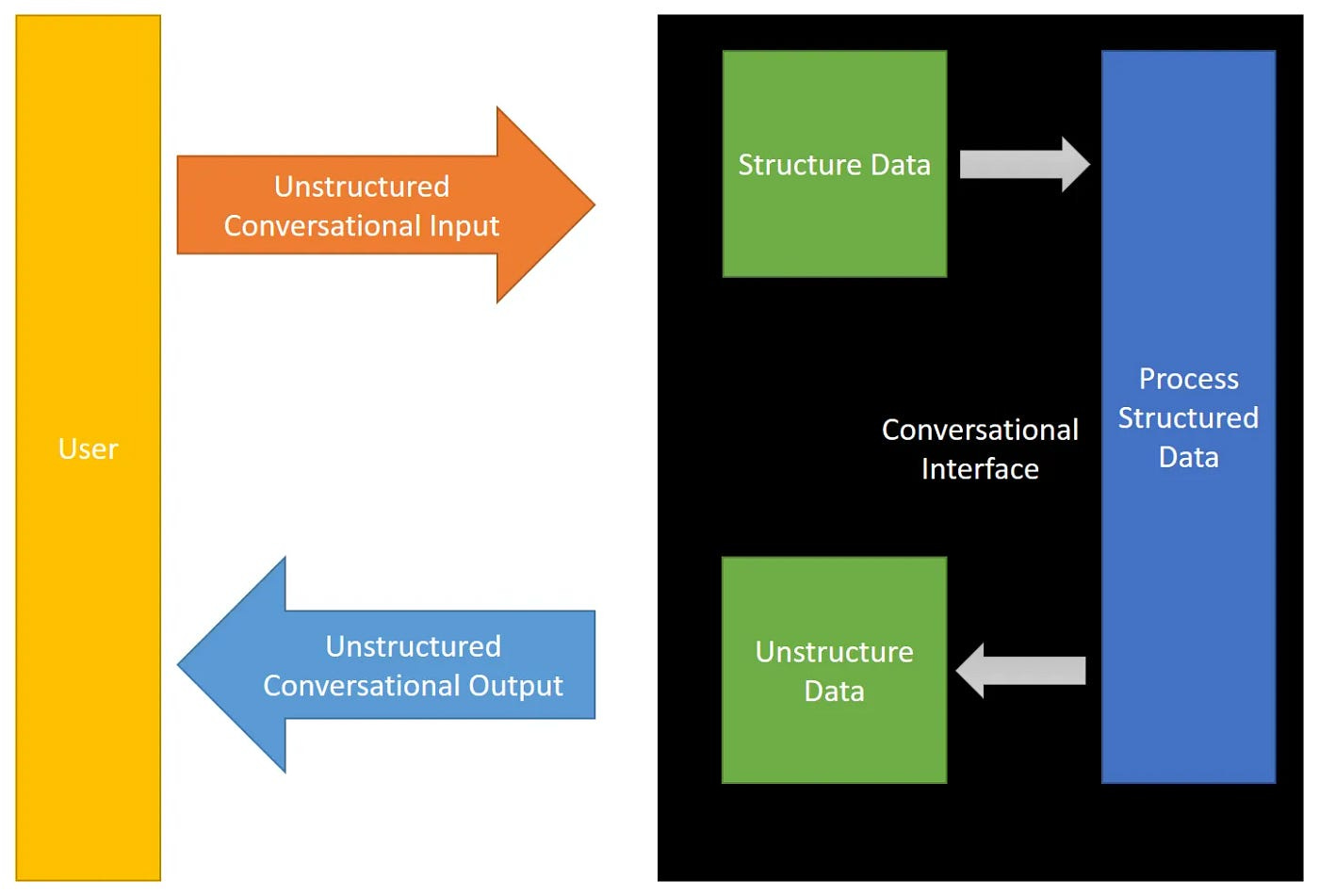

As the image illustrates, the challenge with unstructured input is that the conversational data needs to be structured before it can be processed. This structuring takes the form of intent and entity extraction by the NLU (Natural Language Understanding) engine.

Other structuring can include sentence boundary detection, spelling correction, named entity recognition and more.

After the input has been processed, the output data again needs to be converted back into unstructured, conversational data.
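To make this round trip concrete, here is a deliberately simplified sketch in Python. It is not how a real NLU engine works: the keyword lists, the city regex and the reply templates are hypothetical stand-ins for a trained intent classifier, an entity extractor and a response generator.

```python
import re
from typing import Optional

# Hypothetical keyword lists standing in for a trained intent classifier.
INTENT_KEYWORDS = {
    "book_flight": ("fly", "flight", "book"),
    "check_weather": ("weather", "rain", "sunny"),
}

# Hypothetical entity rule: a capitalised word right after "to" or "in".
CITY_PATTERN = re.compile(r"\b(?:to|in)\s+([A-Z][a-z]+)")


def structure_input(utterance: str) -> dict:
    """Structure free-form user text into an intent plus extracted entities."""
    lowered = utterance.lower()
    intent: Optional[str] = None
    for name, keywords in INTENT_KEYWORDS.items():
        # Crude substring match; a real NLU engine would use a trained model.
        if any(word in lowered for word in keywords):
            intent = name
            break
    match = CITY_PATTERN.search(utterance)
    entities = {"city": match.group(1)} if match else {}
    return {"intent": intent, "entities": entities}


def unstructure_output(record: dict) -> str:
    """Turn a structured result back into a conversational reply."""
    city = record["entities"].get("city", "your destination")
    if record["intent"] == "book_flight":
        return f"Sure, let me look for flights to {city}."
    if record["intent"] == "check_weather":
        return f"Let me check the weather in {city}."
    return "Sorry, I didn't quite catch that."


if __name__ == "__main__":
    parsed = structure_input("I'd like to fly to Johannesburg next week")
    print(parsed)  # {'intent': 'book_flight', 'entities': {'city': 'Johannesburg'}}
    print(unstructure_output(parsed))  # Sure, let me look for flights to Johannesburg.
```

The structured record in the middle is what the rest of a traditional chatbot (dialog state, back-end calls) works with; only the two ends deal with natural language.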

LLMs and More Unstructured Input — Prompt Engineering

Along came Large Language Models and general access to Generative AI.

Natural language responses could now be generated by steering the model with a method called prompt engineering.

Text generation can be described as a meta-capability of Large Language Models, and prompt engineering is key to unlocking it.

You cannot talk directly to a generative model; it is not a chatbot.

You cannot simply ask a generative model to do something. Rather, you need a vision of what you want to achieve, and then mimic the initiation of that vision. This process of mimicking is referred to as prompt design, prompt engineering or casting.
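As a rough illustration of mimicking the initiation, the sketch below builds a completion-style prompt: rather than issuing an instruction, it sets up a pattern with worked examples and leaves the next entry half-finished, so the most likely continuation is the answer we want. The review task and the `build_completion_prompt` helper are hypothetical, and no model is called.

```python
from typing import List, Tuple


def build_completion_prompt(examples: List[Tuple[str, str]], new_input: str) -> str:
    """Build a prompt that mimics the start of the document we want.

    The prompt establishes a pattern with worked examples, then opens a new
    entry and stops, so the natural continuation is the missing answer.
    """
    lines = ["Short product reviews and their sentiment:", ""]
    for review, sentiment in examples:
        lines.append(f"Review: {review}")
        lines.append(f"Sentiment: {sentiment}")
        lines.append("")
    # Start the next entry but leave the answer for the model to complete.
    lines.append(f"Review: {new_input}")
    lines.append("Sentiment:")
    return "\n".join(lines)


if __name__ == "__main__":
    prompt = build_completion_prompt(
        [("Great battery life.", "positive"), ("The screen cracked in a week.", "negative")],
        "Arrived quickly and works perfectly.",
    )
    print(prompt)
```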

To make prompts programmable, reusable and shareable, prompt templates were introduced.

Prompt Engineering Templates

Two terms have emerged with the adoption of LLMs in production settings: prompt chaining and templating.

Some refer to templating as entity injection or prompt injection (not to be confused with the prompt injection attacks that ChatML aims to mitigate).
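Prompt chaining, in its simplest form, feeds the output of one LLM call into the prompt of the next. The sketch below uses a hypothetical `fake_llm` function in place of a real model call, so the mechanics of the chain are visible without any API; in practice, tools like LangChain or DUST provide their own abstractions for this.

```python
from typing import Callable, List

# Any function that maps a prompt string to a completion string.
LLM = Callable[[str], str]


def run_chain(llm: LLM, templates: List[str], initial_input: str) -> str:
    """Run prompt templates in order, feeding each output into the next.

    Each template must contain an ``{input}`` placeholder.
    """
    current = initial_input
    for template in templates:
        prompt = template.format(input=current)
        current = llm(prompt)
    return current


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real model call; just tags the prompt."""
    return f"[output for: {prompt}]"


if __name__ == "__main__":
    chain = [
        "List the key facts in this text: {input}",
        "Write a one-line summary of these facts: {input}",
    ]
    print(run_chain(fake_llm, chain, "ChatML was introduced by OpenAI."))
```

Because the model is passed in as a plain function, swapping the stub for a real client call would not change the chaining logic.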

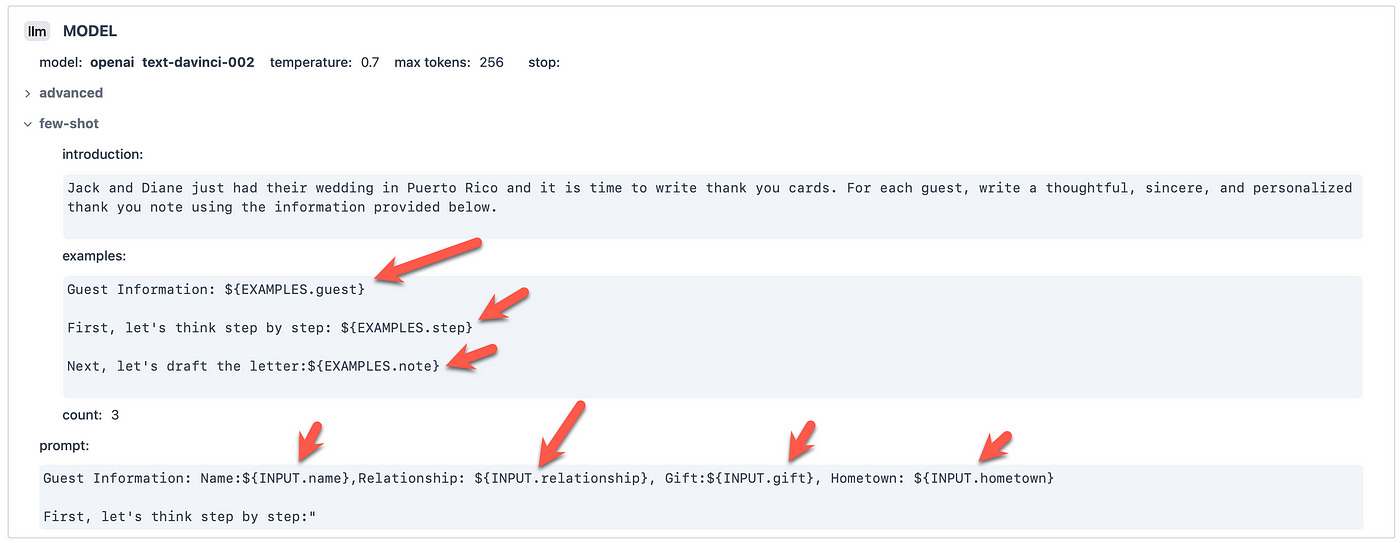

The screenshot above is from the DUST interface, which can be used to build LLM applications. The example is a wedding thank-you note generator.

You can see how entities are injected into the prompt; the prompt is in turn used to generate custom, personalised responses.

With templating, prompts can be programmed, shared and reused. Placeholders are replaced or filled at run-time, and templating gives control over the generated content.
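The templating idea can be sketched with Python's standard-library `string.Template`. The wedding thank-you template and the `render_prompt` helper below are hypothetical and do not reproduce DUST's own template syntax; the point is only that placeholders are filled at run-time and that a missing entity is caught rather than silently sent to the model.

```python
from string import Template

# Hypothetical prompt template; $-placeholders are filled in at run-time.
THANK_YOU_TEMPLATE = Template(
    "Write a warm, short thank-you note from $couple to $guest "
    "for their wedding gift of $gift. Mention $detail."
)


def render_prompt(**entities: str) -> str:
    """Inject entities into the template.

    Template.substitute raises KeyError if any placeholder is left unfilled,
    so an incomplete prompt never reaches the model.
    """
    return THANK_YOU_TEMPLATE.substitute(entities)


if __name__ == "__main__":
    print(render_prompt(
        couple="Anna and Ben",
        guest="Aunt Mary",
        gift="a set of wine glasses",
        detail="the long trip she made to attend",
    ))
```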

Prompt chaining tools like LangChain and DUST are currently free to implement their LLM chaining applications however they like, as no inherent structure is demanded of them. That is, for now; this will change with the introduction of ChatML.

Chat Markup Language

With the introduction of ChatML, will it become the de facto template for prompts?

With ChatML, OpenAI is dictating a specific structure in which a prompt needs to be formatted. The basic structure of a ChatML request document is shown below:

[{"role": "system", "content" : "You are ChatGPT, a large language model trained by OpenAI. Answer as concisely as possible.\nKnowledge cutoff: 2021-09-01\nCurrent date: 2023-03-02"},

{"role": "user", "content" : "How are you?"},

{"role": "assistant", "content" : "I am doing well"},

{"role": "user", "content" : "When was the last Formula One championship in South Africa?"}]

ChatML makes explicit to the model the source of each piece of text, and particularly shows the boundary between human and AI text.

This gives an opportunity to mitigate and eventually solve injections, as the model can tell which instructions come from the developer, the user, or its own input. ~ OpenAI
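Under the hood, OpenAI described ChatML as wrapping each message in special `<|im_start|>` and `<|im_end|>` tokens, with the role name on the first line. The sketch below renders a role/content list like the one shown above into that raw layout, based on our reading of OpenAI's published ChatML notes. Treat the exact layout as an assumption: the API normally performs this step for you, and real tokenizers handle these markers as special tokens rather than plain text. The string check on the delimiters is a crude, hypothetical stand-in for that token-level separation.

```python
from typing import Dict, List

IM_START = "<|im_start|>"
IM_END = "<|im_end|>"
ALLOWED_ROLES = {"system", "user", "assistant"}


def to_chatml(messages: List[Dict[str, str]]) -> str:
    """Render role/content messages into a raw ChatML-style prompt string."""
    parts = []
    for message in messages:
        role, content = message["role"], message["content"]
        if role not in ALLOWED_ROLES:
            raise ValueError(f"unknown role: {role!r}")
        # Reject text that contains the delimiters, so user input cannot
        # close its own turn and pose as a system or assistant message.
        if IM_START in content or IM_END in content:
            raise ValueError("content must not contain ChatML delimiter tokens")
        parts.append(f"{IM_START}{role}\n{content}{IM_END}\n")
    # Open an assistant turn so the model continues as the assistant.
    parts.append(f"{IM_START}assistant\n")
    return "".join(parts)


if __name__ == "__main__":
    print(to_chatml([
        {"role": "system", "content": "Answer as concisely as possible."},
        {"role": "user", "content": "How are you?"},
        {"role": "assistant", "content": "I am doing well"},
        {"role": "user", "content": "When was the last Formula One championship in South Africa?"},
    ]))
```

Rejecting the delimiters, rather than escaping them, keeps the sketch short; a production system would more likely strip or encode them.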

Hence OpenAI is adding a structure in which an engineered prompt must be submitted to the LLM. This required structure will have to be incorporated into the functionality of prompt chaining tools.

LLM chaining development interfaces will therefore have to surface the structure of ChatML to the user, as a guide or a meta-template of sorts.

Alternatively, the development interface will have to convert the user's template into the ChatML format behind the scenes, which is a more complex approach with added overhead.
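The second option, converting behind the scenes, might look like the sketch below: a flat template is filled in and then wrapped as the final `user` turn, with an optional `system` instruction and earlier turns placed before it. The `template_to_messages` function and its `$`-placeholder convention are hypothetical, not the behaviour of any particular chaining tool.

```python
from string import Template
from typing import Dict, List, Optional

Message = Dict[str, str]


def template_to_messages(
    template: str,
    variables: Dict[str, str],
    system: Optional[str] = None,
    history: Optional[List[Message]] = None,
) -> List[Message]:
    """Wrap a filled-in flat prompt template in ChatML-style role messages.

    The filled template becomes the final user turn; an optional system
    instruction and any earlier conversation turns are placed before it.
    """
    messages: List[Message] = []
    if system is not None:
        messages.append({"role": "system", "content": system})
    messages.extend(history or [])
    filled = Template(template).substitute(variables)
    messages.append({"role": "user", "content": filled})
    return messages


if __name__ == "__main__":
    print(template_to_messages(
        "Summarise this for $audience: $text",
        {"audience": "children", "text": "Rain is water falling from clouds."},
        system="Answer as concisely as possible.",
    ))
```

Even this simple wrapper has to decide which text is the developer's instruction and which is the user's, which is exactly the distinction ChatML makes explicit.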

There are a few uncertainties regarding the near future:

Will other LLM providers adopt the structure of OpenAI’s ChatML?

Will ChatML become the de facto standard structure for LLM prompt input?

How will chaining applications incorporate ChatML into their functionality and structure?


I’m currently the Chief Evangelist @ HumanFirst. I explore and write about all things at the intersection of AI and language; ranging from LLMs, Chatbots, Voicebots, Development Frameworks, Data-Centric latent spaces and more.