What is GPT OSS Harmony Response Format?

OpenAI's Harmony Response Format is specifically designed for and used by the gpt-oss open-weight model series. But what is it?

The OpenAI Harmony Response Format standardises how models handle conversations, reasoning and tool calls.

Harmony = interoperability

It introduces a structured syntax using special tokens, roles, channels and constraints to ensure consistent outputs.

OpenAI designed Harmony to make interactions with the gpt-oss models more reliable and to streamline their integration with OpenAI’s API ecosystem.

Harmony acts as a bridge between proprietary systems and open alternatives by enforcing a message-handling protocol that’s both human-readable and machine-parseable.

In Short

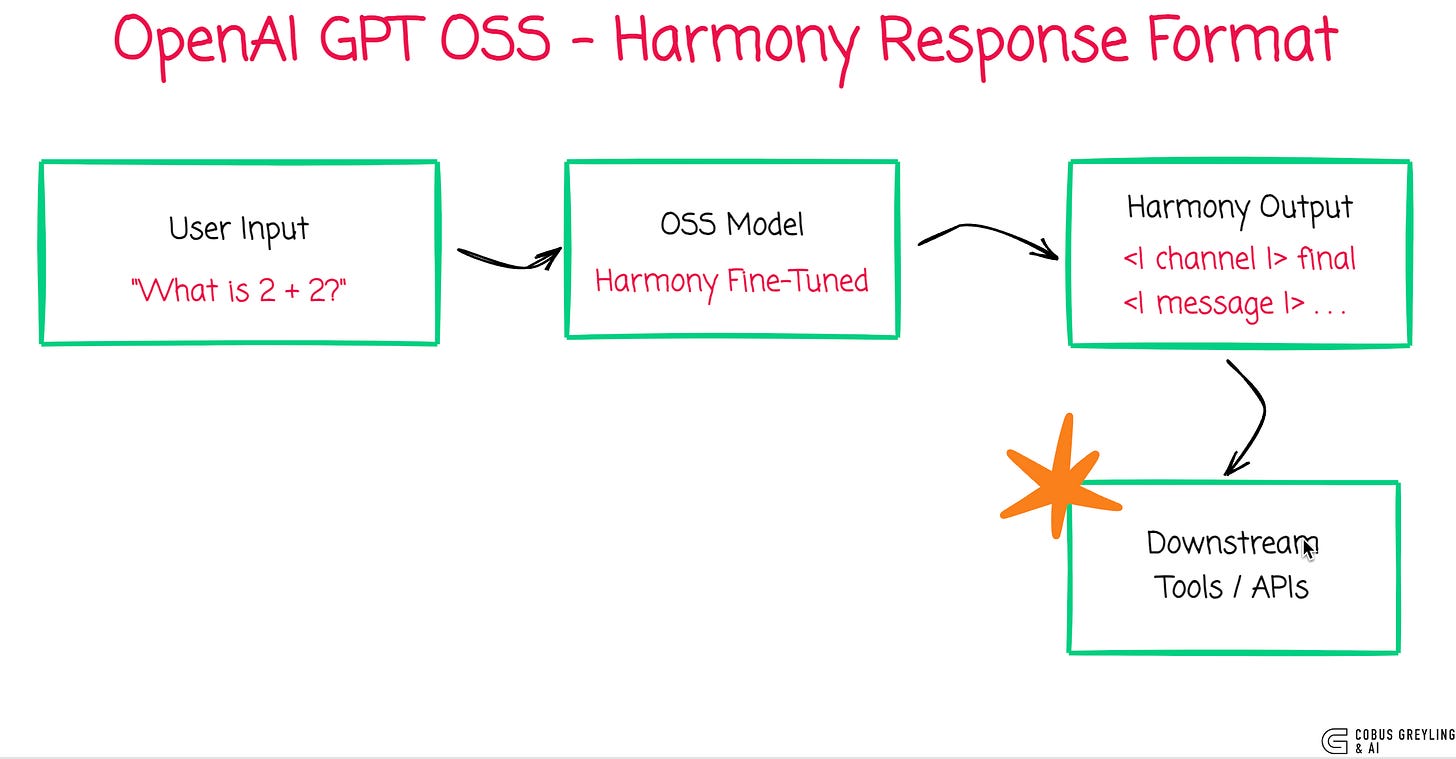

A model needs to be specifically trained or fine-tuned to produce Harmony output.

Harmony is not just a formatting convention you can bolt on after generation — it’s a structured token protocol that the model must learn to emit correctly during generation.

Someone (usually the model provider; for gpt-oss, OpenAI itself) needs to train or fine-tune the open-source model to produce Harmony-compatible outputs.

So the core purpose of the Harmony format is to align open-source (OSS) models with the output structures of commercial models like OpenAI’s, so that existing frameworks, SDKs and tools just work.

You don’t need to rewrite:

Frontends expecting structured tool outputs (e.g., JSON from function calls)

APIs expecting certain roles and message formats

Chains that parse internal reasoning

Plugins or orchestration layers built for OpenAI’s schema

Most modern AI apps are written to expect OpenAI-style responses. That includes apps built with:

LangChain

LlamaIndex

Gradio chat interfaces

OpenAI SDKs (Python, Node, etc.)

Custom backends that parse tool calls

Relevance to Open-Source Models

Open-source models benefit from a defined output format to generate responses that are predictable, parsable and compatible with downstream systems.

Harmony provides that structure for models either trained with it or fine-tuned to emit Harmony-style outputs — particularly those mimicking ChatGPT’s behaviors.

For developers, Harmony enables features like chain-of-thought reasoning, function/tool calling and structured response enforcement.

Without this format, outputs can be ambiguous or misaligned with frontend or API expectations.

No Need to Worry with Tools Like Ollama

Inference frameworks like Ollama abstract away Harmony formatting.

When a user enters a prompt, these tools handle tasks like token injection, role management, channel assignment and constraint enforcement internally.

This allows developers to focus on prompt design, while Ollama ensures the underlying Harmony format is respected.

Similar platforms — such as Hugging Face Transformers with custom pipelines, LM Studio, or vLLM — can support Harmony-compatible output by wrapping the generation logic with appropriate output adapters.

The result: seamless integration and reduced risk of malformed output.

Alignment with OpenAI API Outputs

One of Harmony’s key strengths is its structural alignment with OpenAI’s chat and tool-calling APIs.

Harmony uses elements like:

Roles: system, user, assistant, developer, etc.

Channels: for categorizing message intent (final, analysis, commentary, etc.)

Constraints: for enforcing output formats like JSON

Control tokens: <|start|>, <|end|>, <|message|>, <|channel|>, etc.
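To make the token structure concrete, here is a minimal sketch of how a conversation could be serialised into Harmony-style text. `Message`, `render_message` and `render_prompt` are hypothetical helpers written only for this illustration. They omit the system-message metadata (such as reasoning effort and the list of valid channels) that real gpt-oss prompts carry; OpenAI's openai-harmony library renders that for you.

```python
# Hypothetical helpers: a simplified Harmony renderer for illustration only.
# Real gpt-oss prompts also need system-message metadata, which this omits.
from dataclasses import dataclass
from typing import Optional


@dataclass
class Message:
    role: str                      # e.g. "system", "developer", "user", "assistant"
    content: str
    channel: Optional[str] = None  # e.g. "analysis", "commentary", "final"


def render_message(msg: Message) -> str:
    """Wrap one message in Harmony control tokens."""
    header = msg.role
    if msg.channel is not None:
        # Assistant messages name the channel they are written to.
        header += f"<|channel|>{msg.channel}"
    return f"<|start|>{header}<|message|>{msg.content}<|end|>"


def render_prompt(messages: list[Message]) -> str:
    """Render a conversation and leave an open assistant turn for the model."""
    return "".join(render_message(m) for m in messages) + "<|start|>assistant"


prompt = render_prompt([
    Message("developer", "Answer briefly."),
    Message("user", "What is 2 + 2?"),
])
print(prompt)
```

Ending the prompt with an open `<|start|>assistant` turn is what lets the model continue directly with a channel header, which is why the raw output in the example further down begins with `<|channel|>`.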

This format maps closely to OpenAI’s Chat Completions API and Function Calling interface.

Consequently, OSS models that emit Harmony-style outputs can be plug-and-play replacements for models behind OpenAI’s API, with minimal adaptation required.

If the model fails to conform to this structure — for example, missing the <|call|> marker or misplacing channel metadata — parsing failures or missing features (like tool use or reasoning chains) may occur.
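A consumer that relies on tool calls would typically validate this structure before acting on it. The sketch below assumes the tool-call shape shown in OpenAI's published Harmony examples, with the recipient (`to=...`) in the channel header and the call terminated by `<|call|>`. Harmony also allows the recipient in the role header, which this simple pattern does not handle. `parse_tool_call` and `functions.get_weather` are hypothetical names; production code would be better served by a real parser such as the openai-harmony library.

```python
import json
import re

# Assumed tool-call shape (hypothetical function name):
# <|start|>assistant<|channel|>commentary to=functions.get_weather <|constrain|>json<|message|>{...}<|call|>
TOOL_CALL = re.compile(
    r"<\|channel\|>commentary to=(?P<recipient>[\w.]+)\s*"
    r"(?:<\|constrain\|>\w+)?"               # optional format constraint, e.g. json
    r"<\|message\|>(?P<args>.*?)<\|call\|>",  # arguments end at the <|call|> marker
    re.DOTALL,
)


def parse_tool_call(raw: str) -> tuple[str, dict]:
    """Return (recipient, arguments) or raise ValueError on malformed output."""
    match = TOOL_CALL.search(raw)
    if match is None:
        # Covers e.g. a missing <|call|> marker or a misplaced channel header.
        raise ValueError("no well-formed Harmony tool call found")
    try:
        args = json.loads(match.group("args"))
    except json.JSONDecodeError as exc:
        raise ValueError(f"tool arguments are not valid JSON: {exc}") from exc
    return match.group("recipient"), args
```

Raising a single `ValueError` for both structural and JSON problems keeps the caller's error handling simple: either the call is safe to dispatch, or the model output needs to be retried or discarded.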

Practical Examples

Basic Arithmetic with Reasoning Separation

User Input

What is 2 + 2?

Model Output (Raw Harmony Format)

<|channel|>analysis<|message|>User asks simple addition. Answer directly.<|end|>

<|start|>assistant<|channel|>final<|message|>The result is 4.<|return|>

Harmony-Processed Output

This is what a frontend or API consumer (like a chat UI or app) would display based on the structured output:

Hidden/internal (analysis):

“User asks simple addition. Answer directly.”

(Usually logged or ignored, not shown to the user.)

Visible to user (final):

Assistant:

“The result is 4.”
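The display logic described above can be approximated with a small parser that groups the raw output by channel. This is a minimal regex-based sketch, not the official openai-harmony parser; `split_channels` is a hypothetical helper, and it assumes well-formed assistant messages that end in `<|end|>`, `<|return|>` or `<|call|>`.

```python
import re

# One assistant message: optional "<|start|>assistant", a channel header
# (possibly followed by extra header text), then content up to a stop token.
MESSAGE = re.compile(
    r"(?:<\|start\|>assistant)?<\|channel\|>(?P<channel>\w+).*?"
    r"<\|message\|>(?P<content>.*?)(?:<\|end\|>|<\|return\|>|<\|call\|>)",
    re.DOTALL,
)


def split_channels(raw: str) -> dict[str, list[str]]:
    """Group message contents by channel name, in order of appearance."""
    channels: dict[str, list[str]] = {}
    for m in MESSAGE.finditer(raw):
        channels.setdefault(m.group("channel"), []).append(m.group("content"))
    return channels


raw = (
    "<|channel|>analysis<|message|>User asks simple addition. Answer directly.<|end|>"
    "<|start|>assistant<|channel|>final<|message|>The result is 4.<|return|>"
)
parsed = split_channels(raw)
print("Assistant:", parsed["final"][-1])  # prints: Assistant: The result is 4.
# parsed["analysis"] would typically be logged rather than displayed.
```

A chat UI would render only the `final` entries, while the `analysis` entries can be kept for debugging.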

In Conclusion

OpenAI’s Harmony format makes open-source models more reliable, interpretable and interoperable by enforcing structure that aligns with widely used APIs.

It improves tool-use precision, reasoning visibility, and application compatibility.

Thanks to abstraction layers in tools like Ollama and Hugging Face, developers don’t need to handle Harmony formatting manually.

But knowing how it works unlocks deeper control — especially when debugging model behaviour or building complex, multi-step workflows.

Chief Evangelist @ Kore.ai | I’m passionate about exploring the intersection of AI and language. Language Models, AI Agents, Agentic Apps, Dev Frameworks & Data-Driven Tools shaping tomorrow.
