What Is Happening To OpenAI’s Playground?

The OpenAI Playground has gone through a number of iterations over the last few months, and these changes are a good indication of how OpenAI wants us to use its models.

The OpenAI Playground has expanded into a Dashboard…

Introduction

I have made this point numerous times: the expansion of basic functionality offered by LLM providers is superseding a plethora of startup ideas and products.

Just consider the ease with which users can perform fine-tuning via the OpenAI playground in a no-code fashion with 10 lines of training data.
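To make the "10 lines of training data" concrete, here is a minimal sketch of how such a file could be prepared before uploading it. It assumes the chat-style JSONL layout (one JSON object per line, each holding a `messages` list), which is my understanding of the fine-tuning format and is not spelled out in this article. The system prompt, the sample questions and answers, and the helper names `build_training_lines` and `write_jsonl` are all hypothetical.

```python
import json

# Hypothetical system prompt and Q&A pairs, invented purely for illustration.
SYSTEM_PROMPT = "You are a concise support assistant."
QA_PAIRS = [(f"Sample question {i}", f"Sample answer {i}") for i in range(1, 11)]


def build_training_lines(pairs, system_prompt):
    """Return one JSON string per training example (one line each in a JSONL file)."""
    lines = []
    for question, answer in pairs:
        example = {
            "messages": [
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": question},
                {"role": "assistant", "content": answer},
            ]
        }
        lines.append(json.dumps(example))
    return lines


def write_jsonl(lines, path):
    """Write the examples to disk, one JSON object per line."""
    with open(path, "w", encoding="utf-8") as handle:
        handle.write("\n".join(lines) + "\n")


# Ten examples, matching the "10 lines" mentioned above.
training_lines = build_training_lines(QA_PAIRS, SYSTEM_PROMPT)
```

Calling `write_jsonl(training_lines, "training.jsonl")` would produce a file that could then be uploaded through the fine-tuning screen; the file name is only an example.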

Add to this the RAG capabilities included in the OpenAI playground interface discussed below, with threading, logs etc. Now assistants can be created with both fine-tuned models and RAG implementations.

Creating solutions with longevity which are based on LLMs and Generative AI will demand exceptional UX and a solid layer of IP that creates differentiation.

Successfully scaling the adoption of LLM-powered applications lies with two aspects (there might be more you can think of).

The first aspect is a development framework. Traditional chatbot frameworks did well by creating an ecosystem in which conversation designers and developers could collaborate and transition easily from design to development, without any loss in translating conversation designs into code or functionality.

Slowly but surely, OpenAI is creating a framework for developing applications by introducing elements like threads, contextual reference data, fine-tuning and more.

The second aspect is the user experience (UX). Users do not care about the underlying technology; they are looking for exceptional experiences. Hence the investment made to access LLM functionality needs to be translated into a stellar UX.

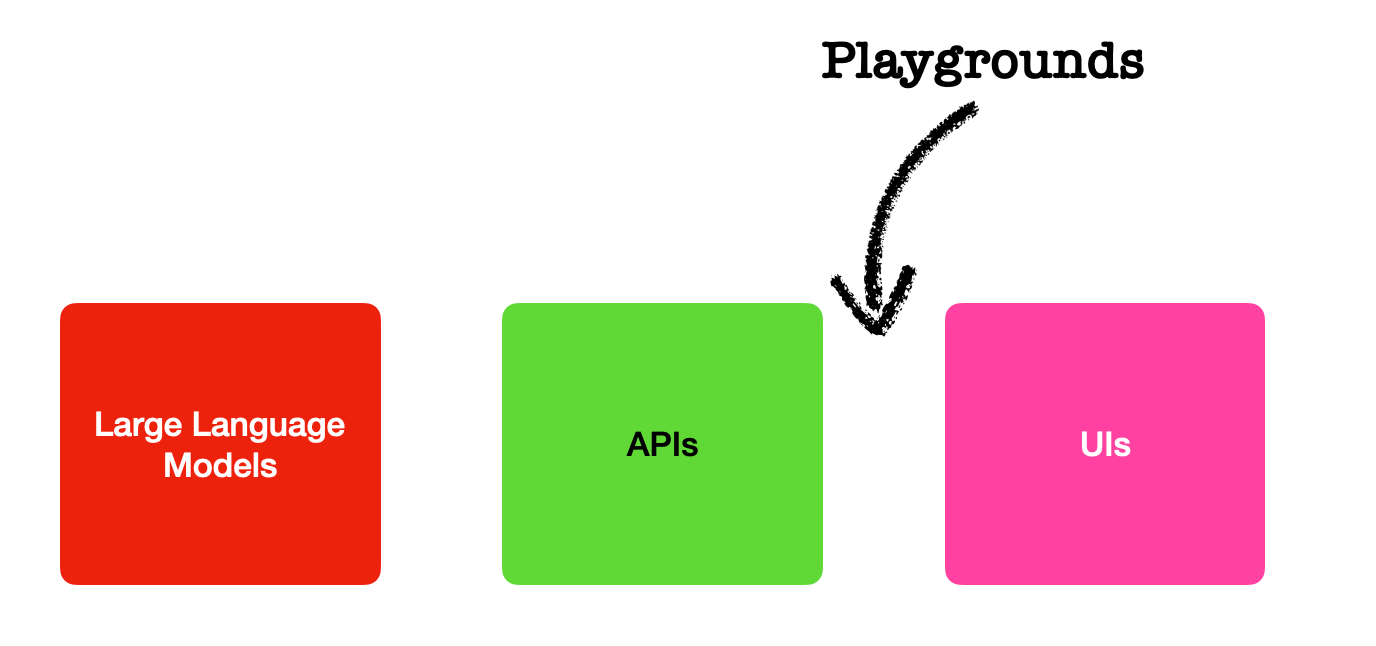

LLMs, APIs & UIs

Large Language Models on their own are hard to install, run and manage because of the hardware and technical expertise they require. There are a number of open-sourced LLMs, but hosting and exposing the model remains an impediment.

This is why APIs are so convenient. Many LLM providers expose their LLM functionality through a number of APIs; the obvious ones are OpenAI, Cohere, AI21 Labs and more.

There are also companies which make a living out of installing and exposing open-source LLMs via an API, for which there is a real business case.

Offerings like ChatGPT, HuggingChat and others add a web-based interface (and in some instances an app) to the LLM services by introducing a UI with an easy-to-use and intuitive UX.

We have seen products like HuggingChat, Coral from Cohere, and obviously ChatGPT in this department.

Playgrounds, also referred to as Prompt IDEs, find themselves between APIs and UIs. Playgrounds are GUIs for creating ideas and basic applications by means of a no-code approach. The idea is then to export the code for the no-code creation and start iterating on that code to build applications.

The playground is also a prompt engineering GUI with varying degrees of functionality in terms of prompt versioning, sharing, collaboration etc.

Considering OpenAI Playground Modes

In March of this year, OpenAI added to their Completion mode three new modes in Beta, namely chat, insert and edit.

Insert, Edit and Complete modes quickly changed from Beta to Legacy and in the latest release of the OpenAI playground, the Insert mode has disappeared, while Complete and Edit are still marked as legacy.

Chat is out of Beta, so seemingly it is here to stay, with the Assistants mode as a new addition.

The image above shows the change in user modes over the last few months.

It clearly shows how OpenAI is steering the use of their platform towards more conversational use-cases and Assistant-based implementations.

Modes can be seen as specific use-cases or implementations which shape the format of the prompts. It is evident that LLM use-cases are moving away from a writing assistant or text editing towards a more conversational interface.

Assistants Mode

The models available to the Assistants Mode are: gpt-4-1106-preview, gpt-4-0613, gpt-4-0314, gpt-4, gpt-3.5-turbo-16k-0613, gpt-3.5-turbo-16k, gpt-3.5-turbo-1106, gpt-3.5-turbo-0613, gpt-3.5-turbo.

Considering the image below, the assistant is given a name, instructions describing the persona and premise of the bot, and a model selection.
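The same three fields, plus the list of enabled tools, can be pictured as a small configuration object. The sketch below only builds that object locally and does not call any OpenAI endpoint. `AssistantConfig` is a hypothetical class of my own, the assistant name and instructions are made up, and the tool type strings `retrieval` and `code_interpreter` are my assumption of how the tools are identified.

```python
from dataclasses import dataclass, field, asdict


@dataclass
class AssistantConfig:
    """Local stand-in for the fields on the Assistants form (not an SDK class)."""
    name: str
    instructions: str
    model: str
    tools: list = field(default_factory=list)

    def to_payload(self):
        """Return a plain dict that could be serialized as a request body."""
        return asdict(self)


# Hypothetical assistant; the name and instructions are invented for this example.
config = AssistantConfig(
    name="Travel Helper",
    instructions="You are a friendly travel assistant. Keep answers short.",
    model="gpt-4-1106-preview",  # one of the models listed for Assistants mode
    tools=[{"type": "retrieval"}, {"type": "code_interpreter"}],  # assumed type names
)
payload = config.to_payload()
```

Keeping the definition as plain data makes it easy to move from a playground experiment to code, since the same dictionary can be versioned, reviewed and reused.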

What I find intriguing is the new tools section.

Tools

Retrieval

Retrieval enables the assistant with knowledge from files that you or your users upload. Once a file is uploaded, the assistant automatically decides when to retrieve content based on user requests.

This is very much a RAG-like implementation. Once files are uploaded, the assistant can use their content for retrieval and for the code interpreter, if these tools are enabled.
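To illustrate the general RAG idea, here is a deliberately simple sketch: score each chunk of an uploaded file against the question, and only add context to the prompt when something relevant is found. This is not how OpenAI implements retrieval, which is not described in this article. Plain word overlap is swapped in for whatever ranking the real tool uses, and the functions `tokenize`, `retrieve` and `build_prompt` as well as the sample chunks are hypothetical.

```python
import re


def tokenize(text):
    """Lowercase the text and split it into a set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, chunks, top_k=1):
    """Rank chunks by word overlap with the question; drop chunks with no overlap."""
    question_tokens = tokenize(question)
    scored = [(len(question_tokens & tokenize(chunk)), chunk) for chunk in chunks]
    relevant = [item for item in scored if item[0] > 0]
    relevant.sort(key=lambda item: item[0], reverse=True)
    return [chunk for _, chunk in relevant[:top_k]]


def build_prompt(question, chunks):
    """Prepend retrieved context to the question, or pass it through unchanged."""
    context = retrieve(question, chunks)
    if not context:
        return question
    return "Context:\n" + "\n".join(context) + "\n\nQuestion: " + question


# Hypothetical contents of an uploaded file, already split into chunks.
FILE_CHUNKS = [
    "Refunds are processed within 14 days of the return being received.",
    "Our office is open Monday to Friday from 9am to 5pm.",
]
```

A question about refunds would pull in the first chunk, while a greeting with no matching words would be sent without any added context, which mirrors the "decides when to retrieve" behaviour in a very rough way.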

Code Interpreter

Code Interpreter enables the assistant to write and run code. This tool can process files with diverse data and formatting and generate files such as graphs.

Function Calling

Function calling has also been included in the assistant; read more about function calling here.
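In broad strokes, function calling means describing a function to the model with a JSON Schema, letting the model return a function name with JSON-encoded arguments, and running the matching code yourself. The sketch below simulates that loop locally without contacting a model. The `get_weather` function, its canned return value, the `REGISTRY` mapping and the `dispatch` helper are hypothetical, and the shape of the tool description reflects my understanding of the format and is an assumption here.

```python
import json


def get_weather(city):
    """Hypothetical local function; returns canned data, not a real forecast."""
    return {"city": city, "forecast": "sunny"}


# JSON Schema description of the function, as it would be presented to the model.
WEATHER_TOOL = {
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Get the weather forecast for a city.",
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    },
}

# Registry mapping function names to the Python callables that implement them.
REGISTRY = {"get_weather": get_weather}


def dispatch(name, arguments_json):
    """Run the function the model asked for and return its result as a JSON string."""
    if name not in REGISTRY:
        raise ValueError(f"Unknown function: {name}")
    arguments = json.loads(arguments_json)
    return json.dumps(REGISTRY[name](**arguments))


# Simulated model output: a function name plus JSON-encoded arguments.
result = dispatch("get_weather", '{"city": "Cape Town"}')
```

The string in `result` is what would be handed back to the model so it can phrase a final answer for the user.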

Threads

A Thread represents a conversation, and a thread is normally associated with a single user. Any user-specific context and files can be passed via the thread by creating a message.

The fact that threads are included can be viewed as an effort to move playground prototypes to production.
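The relationship between users, threads and messages can be modelled with a few small classes. This is an in-memory illustration of the concept only, not the OpenAI SDK: `Message`, `Thread` and `ThreadStore` are hypothetical names, and the user IDs and the file ID `file-abc` are placeholders.

```python
from dataclasses import dataclass, field


@dataclass
class Message:
    """A single turn in a conversation, optionally carrying attached file IDs."""
    role: str
    content: str
    file_ids: list = field(default_factory=list)


@dataclass
class Thread:
    """In-memory stand-in for a conversation thread tied to one user."""
    user_id: str
    messages: list = field(default_factory=list)

    def add_message(self, role, content, file_ids=None):
        """Append a message, with any user-specific files, to this thread."""
        message = Message(role, content, list(file_ids or []))
        self.messages.append(message)
        return message


class ThreadStore:
    """Keeps one thread per user so context carries across turns."""

    def __init__(self):
        self._threads = {}

    def thread_for(self, user_id):
        """Return the user's existing thread, creating it on first use."""
        if user_id not in self._threads:
            self._threads[user_id] = Thread(user_id)
        return self._threads[user_id]


store = ThreadStore()
store.thread_for("user-1").add_message("user", "Summarise my report.", ["file-abc"])
store.thread_for("user-1").add_message("assistant", "Here is a summary.")
store.thread_for("user-2").add_message("user", "Hello")
```

Each user ends up with an isolated history, which is the property that makes threads useful once a prototype has to serve more than one person.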

Finally

Considering the image below, the OpenAI playground functionality is steadily expanding into a minimalistic, no-code to low-code studio environment with options for:

RAG,

API Key management,

Assistants,

and a prompt engineering IDE.

I’m currently the Chief Evangelist @ Kore AI. I explore & write about all things at the intersection of AI & language; ranging from LLMs, Chatbots, Voicebots, Development Frameworks, Data-Centric latent spaces & more.