What Is LangChain Expression Language (LCEL)?

LangChain Expression Language (LCEL) is a declarative way of chaining LangChain components together. It makes it easier to build LLM-based generative apps, or to create reusable LangChain components and APIs.

Back To The Future

Those who remember the early days of chatbots will recall how Rasa was the avant-garde of chatbot development frameworks. Rasa's approach to dialog development, with their ML Stories, incremental training, multi-intent detection and more, was ahead of its time.

Another hallmark of Rasa was its developer-focused community, and the general excitement around the product and community contributions. Everyone was trying to figure out how to build chatbots that were flexible, rather than following a rigid state-machine, logic-gate approach.

The impediments that ailed chatbots were the absence of Knowledge Intensive NLP (KI-NLP), dynamic reasoning, logic and dialogs, and highly reliable and succinct Natural Language Generation (NLG).

Fast forward to the advent of Large Language Models (LLMs), and all of these challenges were largely solved. We instantly went from fast to feast, from the challenge of scarcity to the problem of abundance.

This is where Rasa fell out of the race, and LangChain took its place; LangChain is to LLMs and Generative AI what Rasa was to chatbots and early Conversational AI.

The Growing LangChain Ecosystem

Abstraction

To a large extent, LangChain is defining how LLMs should be used.

Considering the image below, it is evident how LangChain is segmenting the LLM application landscape into observability, deployment of small task-oriented apps and APIs, integration and more.

One could argue that this image shows different abstractions or segments of the greater Generative AI landscape, while LCEL caters for application-level abstraction.

Building Applications

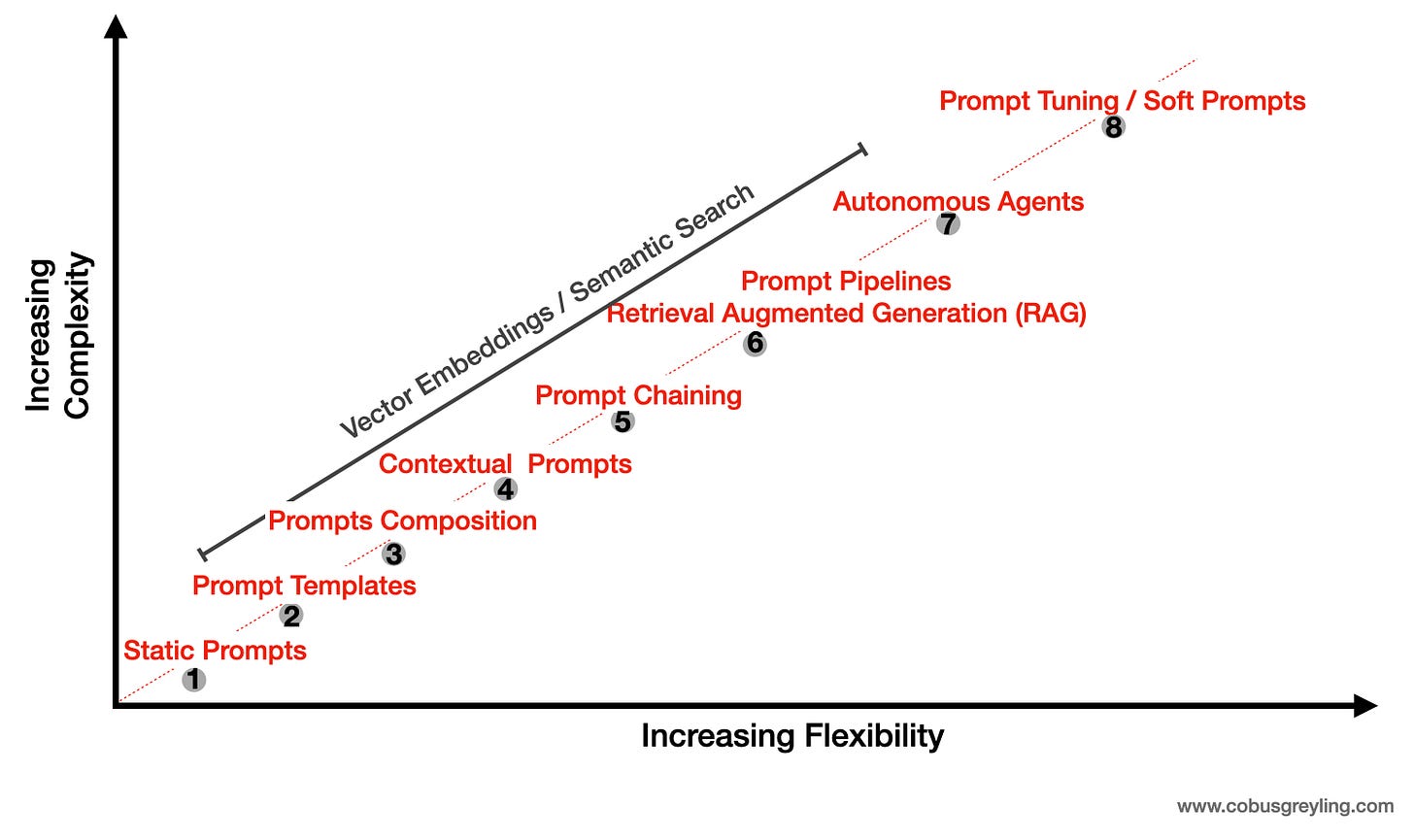

Building LLM-based applications (also referred to as GenApps) has seen various approaches to creating a flow or sequence of events. These approaches are shown in the image below.

LangChain addresses all eight of these application development approaches with a comprehensive framework.

More on LCEL

LCEL can be seen as a way to abstract Generative AI application and LLM chain development.

The components of an LCEL chain are placed in a sequence, separated by the pipe symbol (|).

The chain is executed from left to right. Below is a simple example of a chain:

```python
chain = prompt | model | output_parser
```
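To make the left-to-right execution concrete, here is a toy model of how a pipe operator can compose steps. It assumes only that `a | b` produces a new component that feeds `a`'s output into `b`. The `Step` class and the three stand-in components are hypothetical illustrations, not LangChain's classes, and the fake "model" simply upper-cases its input.

```python
from typing import Any, Callable


class Step:
    """Toy stand-in for a runnable: wraps a single function (hypothetical, not LangChain's class)."""

    def __init__(self, func: Callable[[Any], Any]):
        self.func = func

    def invoke(self, value: Any) -> Any:
        return self.func(value)

    def __or__(self, other: "Step") -> "Step":
        # `self | other` returns a new Step that runs self first, then passes the result to other.
        return Step(lambda value: other.invoke(self.invoke(value)))


# Hypothetical stand-ins for the prompt, model and output parser.
prompt = Step(lambda topic: f"Tell me a short joke about {topic}")
model = Step(lambda text: {"content": text.upper()})  # fake "LLM": just upper-cases the prompt
output_parser = Step(lambda message: message["content"])

chain = prompt | model | output_parser
print(chain.invoke("ice cream"))  # TELL ME A SHORT JOKE ABOUT ICE CREAM
```

In LangChain itself, `|` between runnables builds a `RunnableSequence`. That object also provides batching, streaming and async support, none of which this toy models.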

LCEL provides a number of primitives that make it easy to compose chains, parallelise components, add fallbacks, dynamically configure chain internals, and more.
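Two of these primitives are running components in parallel and falling back to an alternative when a component fails. Both can be sketched in plain Python. The helpers `run_parallel` and `try_in_order`, and the two fake models, are hypothetical illustrations of the idea. In LangChain the corresponding features are `RunnableParallel` and a runnable's `with_fallbacks` method, and their real behaviour (async support, filtering on exception types) is richer than this sketch.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Any, Callable, Dict, List, Optional

Component = Callable[[Any], Any]


def run_parallel(branches: Dict[str, Component], value: Any) -> Dict[str, Any]:
    """Send the same input to every branch concurrently and collect the results by key."""
    with ThreadPoolExecutor() as pool:
        futures = {key: pool.submit(fn, value) for key, fn in branches.items()}
        return {key: future.result() for key, future in futures.items()}


def try_in_order(components: List[Component], value: Any) -> Any:
    """Return the result of the first component that does not raise."""
    last_error: Optional[Exception] = None
    for component in components:
        try:
            return component(value)
        except Exception as exc:  # a production fallback would usually filter exception types
            last_error = exc
    raise RuntimeError("all components failed") from last_error


def flaky_model(prompt: str) -> str:
    raise TimeoutError("primary model unavailable")  # simulated outage


def backup_model(prompt: str) -> str:
    return f"backup answer to: {prompt}"


print(run_parallel({"upper": str.upper, "length": len}, "ice cream"))
# {'upper': 'ICE CREAM', 'length': 9}
print(try_in_order([flaky_model, backup_model], "joke please"))
# backup answer to: joke please
```

LangChain also coerces a plain dict inside a chain into a `RunnableParallel`. The `{"topic": RunnablePassthrough()}` step in the example that follows relies on this.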

The functionality of LCEL is sure to grow, with more LCEL components becoming available. What I like about LCEL is that it finds itself somewhere between a pro-code approach and a no-code / low-code approach.

From a pro-code perspective, LCEL brings a measure of standardisation, and a way of creating what LangChain calls runnables: smaller applications that can be combined to form larger, augmented applications.

Composing applications from components in this way can introduce efficiencies and promote reuse.

On the other side of the spectrum, no-code approaches like LangFlow and Flowise can become cluttered and hard to manage.

Below is one of the simplest application types:

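```python
# Install first (in a shell): pip install langchain langchain-openai
import os

from langchain_core.output_parsers import StrOutputParser
from langchain_core.prompts import ChatPromptTemplate
from langchain_core.runnables import RunnablePassthrough
from langchain_openai import ChatOpenAI  # older releases: from langchain.chat_models import ChatOpenAI

# Replace with your own key, or set OPENAI_API_KEY in your environment instead.
os.environ["OPENAI_API_KEY"] = "your_api_key_goes_here"

# Prompt template with a single {topic} variable.
prompt = ChatPromptTemplate.from_template("Tell me a short joke about {topic}")

# Converts the model's chat message into a plain string.
output_parser = StrOutputParser()

model = ChatOpenAI(model="gpt-3.5-turbo")

# The dict maps the raw input string onto the prompt's "topic" variable;
# each step's output is then passed to the next step, left to right.
chain = (
    {"topic": RunnablePassthrough()}
    | prompt
    | model
    | output_parser
)

print(chain.invoke("ice cream"))
```

And the output:

Why did the ice cream go to therapy?

Because it had too many sprinkles of anxiety!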

The LangChain documentation contains a few side-by-side examples that compare Python code written without LCEL to the equivalent LCEL-based code. In some instances the contrast is drastic, and the simplification and clarity that LCEL introduces are evident.
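You can get a feel for that kind of comparison offline. This sketch is not taken from the LangChain documentation. It writes the same prompt, model and parser flow twice: once with each intermediate value passed along by hand, and once as a declared sequence. `fake_model` is a hypothetical stand-in for a chat model, so the example runs without an API key. The `sequence` helper is a toy analogue of piping, not an LCEL API.

```python
from functools import reduce
from typing import Any, Callable, Dict


def format_prompt(inputs: Dict[str, str]) -> str:
    return f"Tell me a short joke about {inputs['topic']}"


def fake_model(prompt: str) -> Dict[str, str]:
    # Hypothetical stand-in for a chat model call: echoes the prompt back.
    return {"content": f"[model reply to] {prompt}"}


def parse_output(message: Dict[str, str]) -> str:
    return message["content"]


# Without composition: every intermediate result is passed along by hand.
def tell_joke(topic: str) -> str:
    prompt_text = format_prompt({"topic": topic})
    message = fake_model(prompt_text)
    return parse_output(message)


# With composition: declare the order once and let a helper pass the value left to right.
def sequence(*steps: Callable[[Any], Any]) -> Callable[[Any], Any]:
    return lambda value: reduce(lambda acc, step: step(acc), steps, value)


joke_chain = sequence(lambda topic: {"topic": topic}, format_prompt, fake_model, parse_output)

assert tell_joke("ice cream") == joke_chain("ice cream")
print(joke_chain("ice cream"))  # [model reply to] Tell me a short joke about ice cream
```

With only three steps the difference is small. The gap would likely widen once branching, retries and streaming come into play, which is where LCEL's built-in primitives take over.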

Final Considerations

Too many solutions try to solve for all LLM/GenAI use cases in a unified fashion. The way LangChain decomposes functionality and creates specific products for each requirement is astute, and serves as an excellent reference for what the future might look like.

LCEL is a pro-code approach by which makers have access to components which can be concatenated in a chain which is executed from left to right.

LCEL is not only an implementation of prompt chaining. It also provides generative application management features like streaming, batch calling of chains, logging and more.

This expression language serves as an abstraction that simplifies LangChain applications and gives a clearer visual representation of functionality and sequencing.

LCEL sits somewhere between a pure pro-code approach and no-code tools like LangFlow and Flowise.

Prompt chaining has moved past merely stringing together a sequence of prompts; it is now about sequencing LLM and Generative AI related functionality.
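Streaming through a chain means each stage can start emitting output before the previous stage has finished. Here is a minimal generator-based sketch of that idea. `fake_token_stream` is a hypothetical model stand-in that yields its reply word by word, and `strip_parser` plays the role of a streaming output parser. LangChain exposes streaming through a runnable's `stream` method, and this toy does not reproduce its internals.

```python
from typing import Iterable, Iterator


def fake_token_stream(prompt: str) -> Iterator[str]:
    # Hypothetical model stand-in: yields its reply one word at a time.
    for word in f"Echo: {prompt}".split():
        yield word + " "


def strip_parser(chunks: Iterable[str]) -> Iterator[str]:
    # Streaming "output parser": transforms each chunk as it arrives, without buffering the whole reply.
    for chunk in chunks:
        yield chunk.strip()


def stream_chain(prompt: str) -> Iterator[str]:
    # Nothing runs until the caller starts iterating; chunks then flow through both stages lazily.
    return strip_parser(fake_token_stream(prompt))


for piece in stream_chain("ice cream"):
    print(piece)  # prints "Echo:", "ice", "cream" on separate lines
```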

LangChain uses the term runnable. A runnable can be described as a unit of work that can be invoked, batched, streamed, transformed, composed, and so on. A runnable is a sequence of operations combined into a component, which can be invoked programmatically or exposed as an API.
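One way to picture a shared runnable interface is a base class where a component implements only `invoke` and inherits default `batch` and `stream` behaviour. The sketch assumes that design. `ToyRunnable` and `WordCounter` are hypothetical classes, and LangChain's actual `Runnable` base class does considerably more, such as async variants, configuration and callbacks.

```python
from abc import ABC, abstractmethod
from typing import Any, Iterator, List


class ToyRunnable(ABC):
    """Hypothetical sketch: implement invoke once, and get batch and stream for free."""

    @abstractmethod
    def invoke(self, value: Any) -> Any: ...

    def batch(self, values: List[Any]) -> List[Any]:
        # Default batching: invoke once per input, preserving input order.
        return [self.invoke(v) for v in values]

    def stream(self, value: Any) -> Iterator[Any]:
        # Default streaming: a component that cannot stream yields its whole result as one chunk.
        yield self.invoke(value)


class WordCounter(ToyRunnable):
    def invoke(self, value: str) -> int:
        return len(value.split())


counter = WordCounter()
print(counter.invoke("tell me a joke"))     # 4
print(counter.batch(["ice cream", "cake"]))  # [2, 1]
print(list(counter.stream("ice cream")))     # [2]
```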

My assumption is that a runnable can be exposed with ease via LangServe as an API.

I see a scenario where an organisation creates and exposes various runnable APIs, which serve as building blocks to create larger implementations with ease.


I’m currently the Chief Evangelist @ Kore AI. I explore & write about all things at the intersection of AI & language, ranging from LLMs, Chatbots, Voicebots, Development Frameworks, Data-Centric latent spaces & more.