What is OpenAI Frontier?

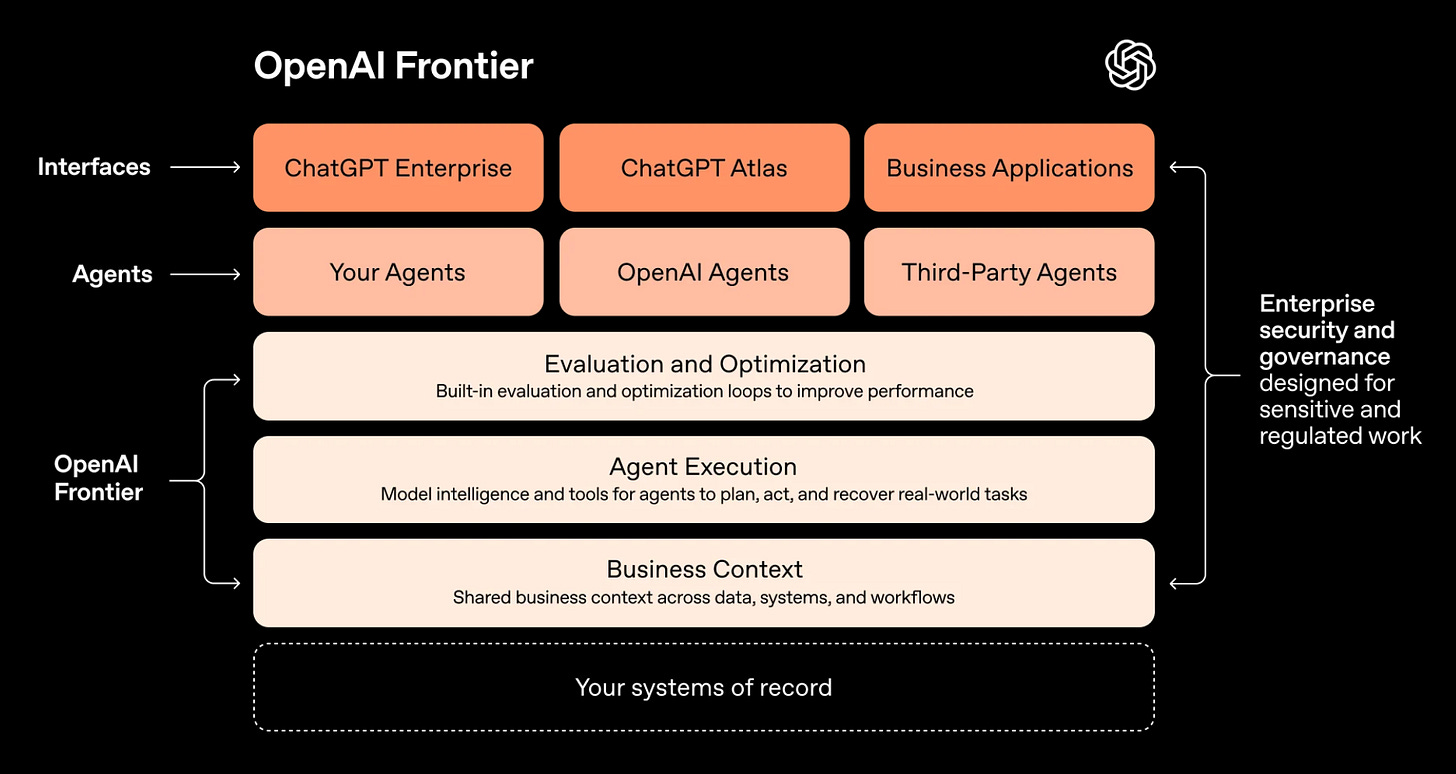

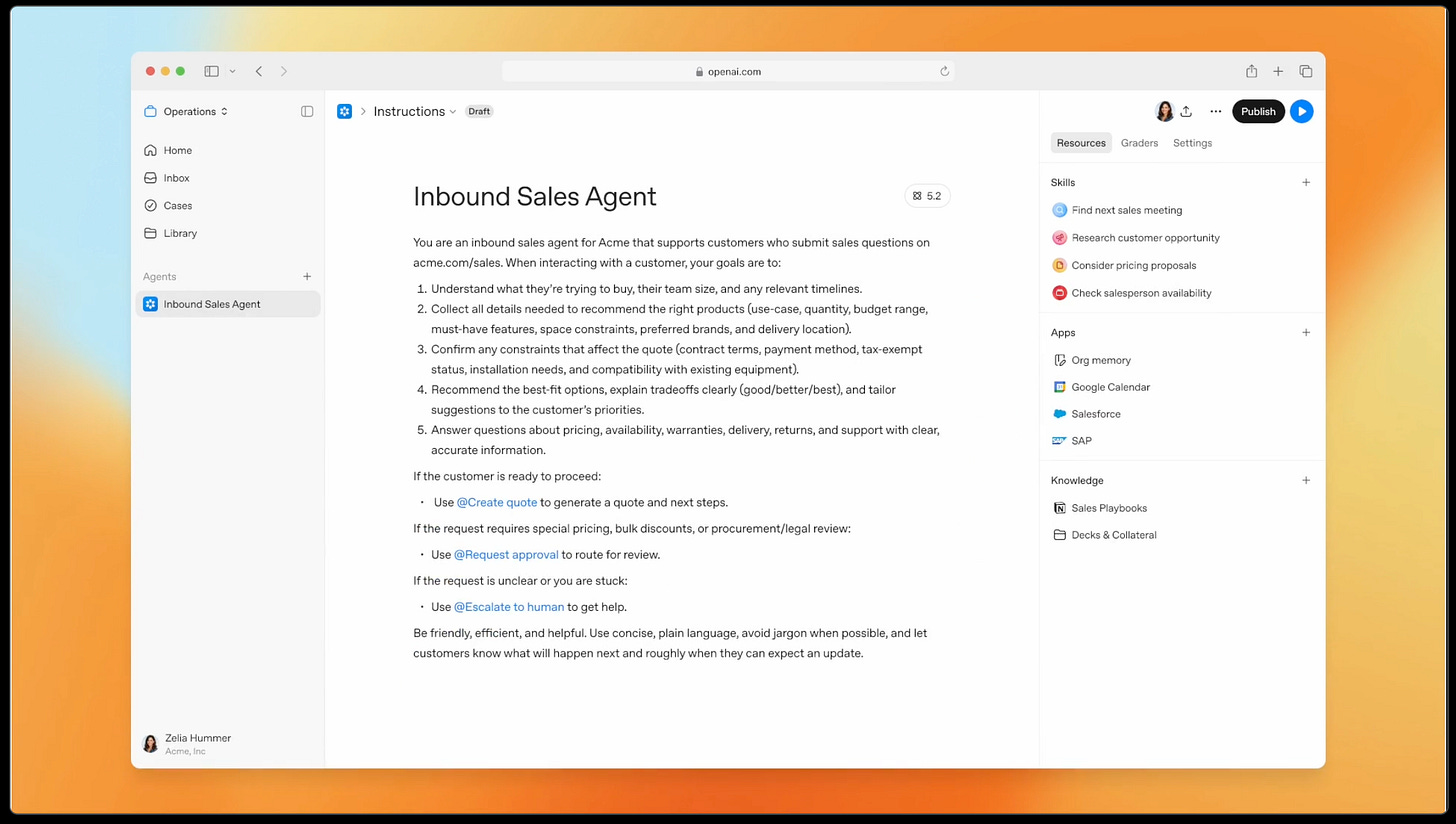

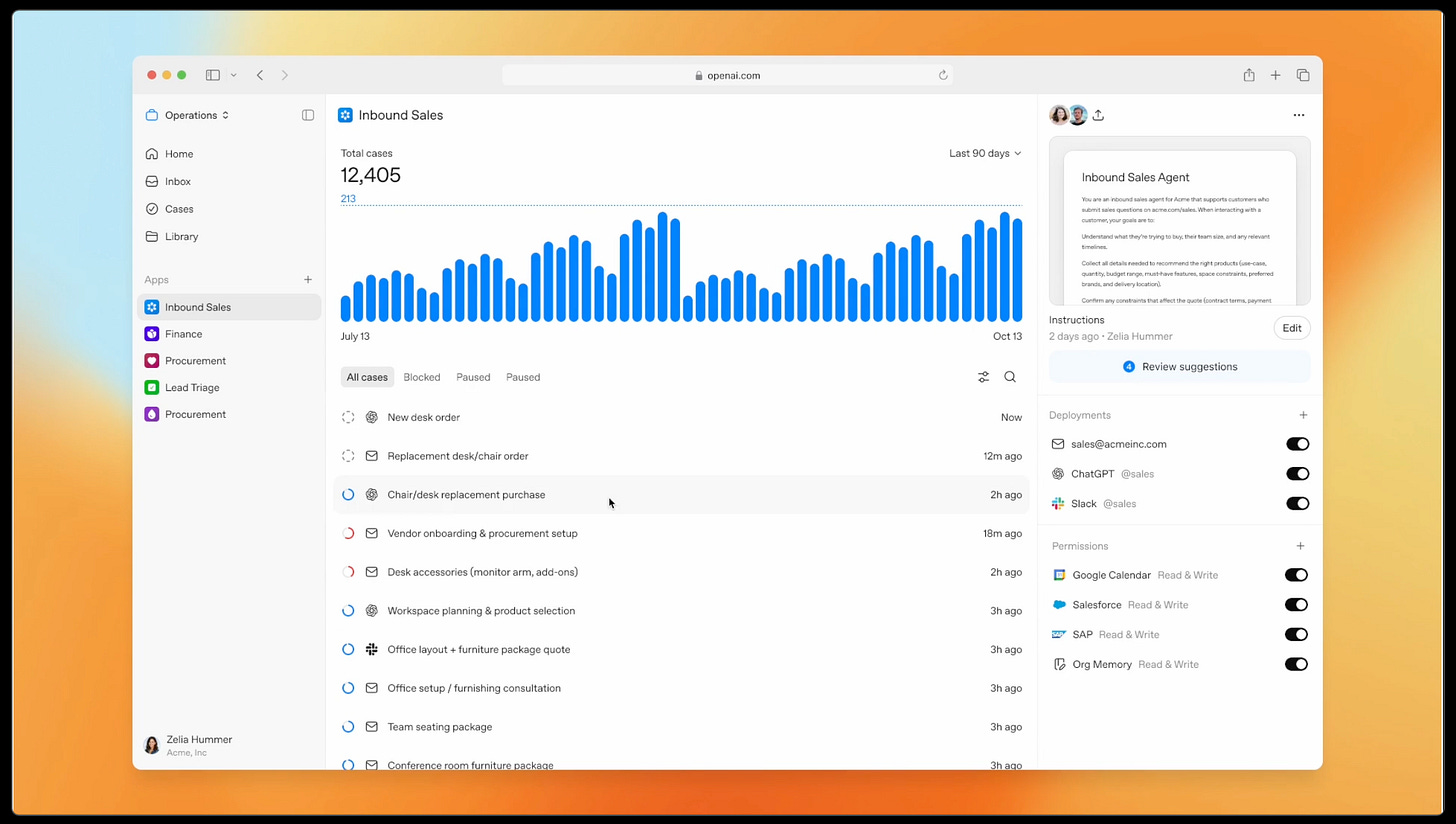

OpenAI Frontier is an end-to-end enterprise platform launched on 5 February 2026 for building, deploying, and managing production-ready AI Agents that perform real work.

It targets Fortune 500-level companies and is currently available only to a limited set of early customers with no public self-service sign-up.

Frontier provides shared business context across systems (CRM, ERP, data warehouses), agent onboarding, permissions, governance, secure execution and more.

It is my understanding that Frontier supports AI Agents built on OpenAI models as well as third-party or custom ones.

Frontier embodies OpenAI’s move up the stack by delivering managed infrastructure for agentic workflows rather than raw models.

Models are moving up the stack

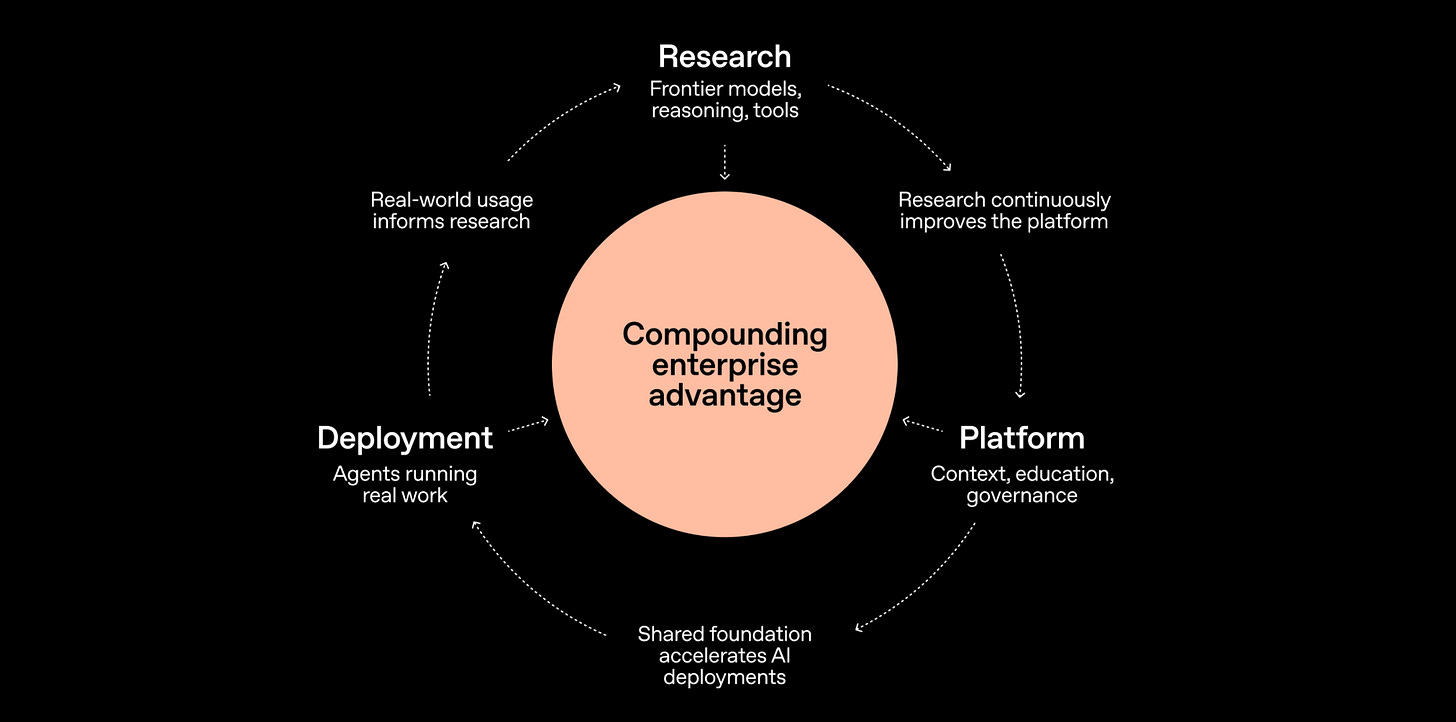

I recently argued that foundation model providers such as OpenAI, Anthropic, Google, and Microsoft are moving up the AI stack, shifting their focus from raw models to agentic applications, tools, orchestration, and standards.

This commoditises raw models while letting providers capture more value in autonomous AI Agents, enterprise workflows, and interoperability layers.

Anthropic’s skills approach favours reusable modular components over complex scaffolding. Providers increasingly own task planning, tool integration, and persistent context management.

The result favours ecosystems with end-to-end agentic systems over isolated models.

Will the model eat your software stack?

As Frontier is demonstrating, model providers are now extending into agentic workflows, sandboxes and virtual environments.

As a result, AI Agents can manage files, execute code, and access resources directly within secure, sandboxed environments.

Advanced models subsume software stack layers through native reasoning, tool use, planning, and execution.

Capabilities that once required custom scaffolding now come natively or via minimal protocols, reducing reliance on external prompting, chaining, retrieval, and orchestration frameworks.

This threatens middleware and tooling layers.

For enterprises, the outcome hinges on a trade-off between convenience and control.

Provider-led paths deliver rapid iteration but deepen dependency on a single vendor.

Custom stacks preserve auditability, multi-model choice, and deep integration.

Model providers commoditise the lower layers of the stack in order to dominate the application layer.

But there are opportunities in proprietary data handling and specialised domains.

In Conclusion

The recent announcements from Anthropic (Claude Opus 4.6) and OpenAI (Frontier plus Codex updates) signal intense competition to own autonomous AI Agents for knowledge work and business workflows.

Raw model upgrades matter less than the platforms and patterns that enable reliable, end-to-end automation.

And the real story lies in execution autonomy for complex, multi-step tasks rather than benchmark scores alone.

Enterprises face an increasingly pressing choice between provider-led convenience (rapid deployment, but greater dependency) and the control that custom stacks offer.

This race intensifies pressure on middleware layers and creates opportunities in specialised domains and data sovereignty.

Chief Evangelist @ Kore.ai | I’m passionate about exploring the intersection of AI and language. Language Models, AI Agents, Agentic Apps, Dev Frameworks & Data-Driven Tools shaping tomorrow.


