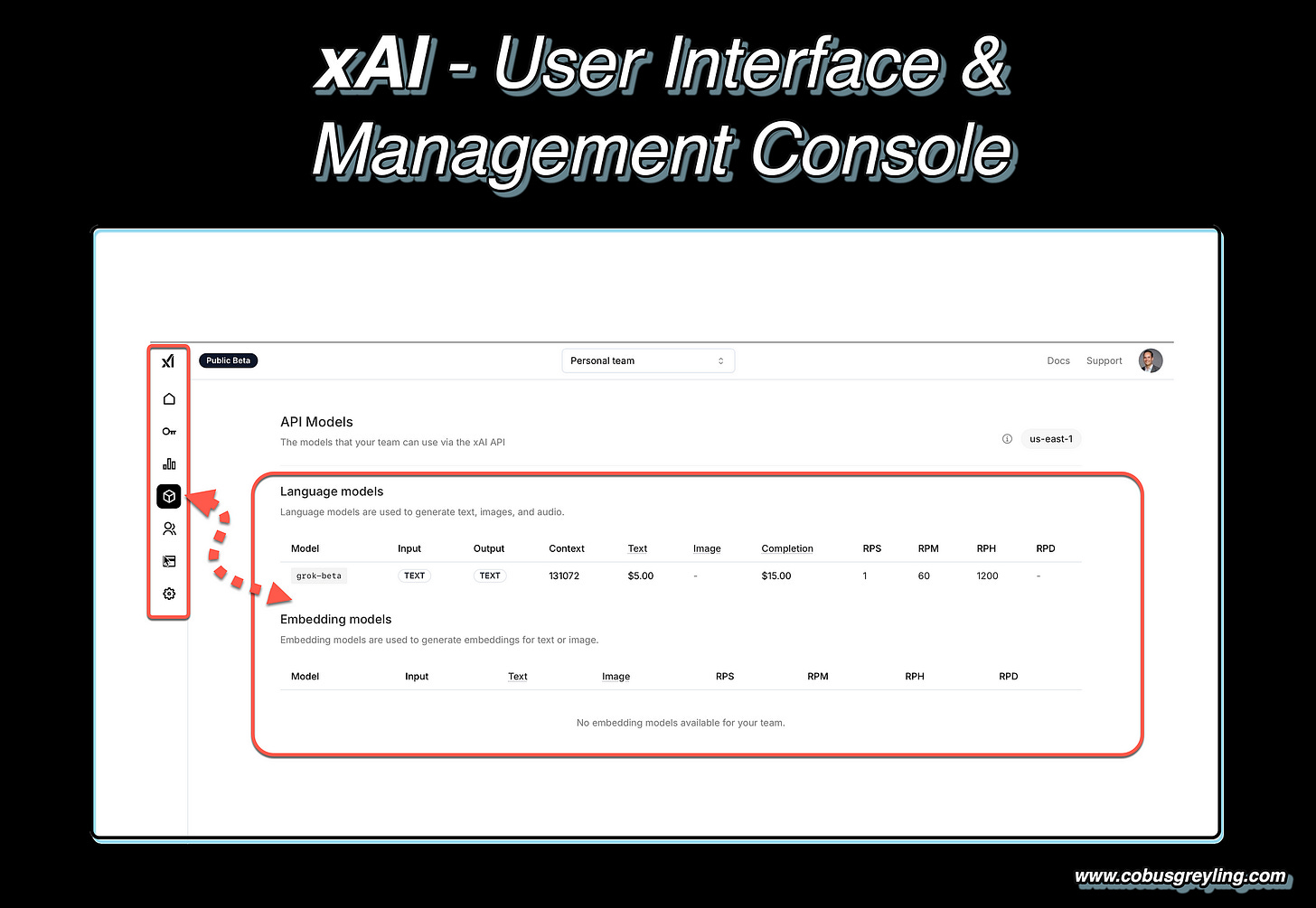

xAI UI & Management Console

The xAI User Interface and Management Console is a minimalistic (at this stage) but intuitive hub that lets users manage access and cost, and interact with xAI models.

Introduction

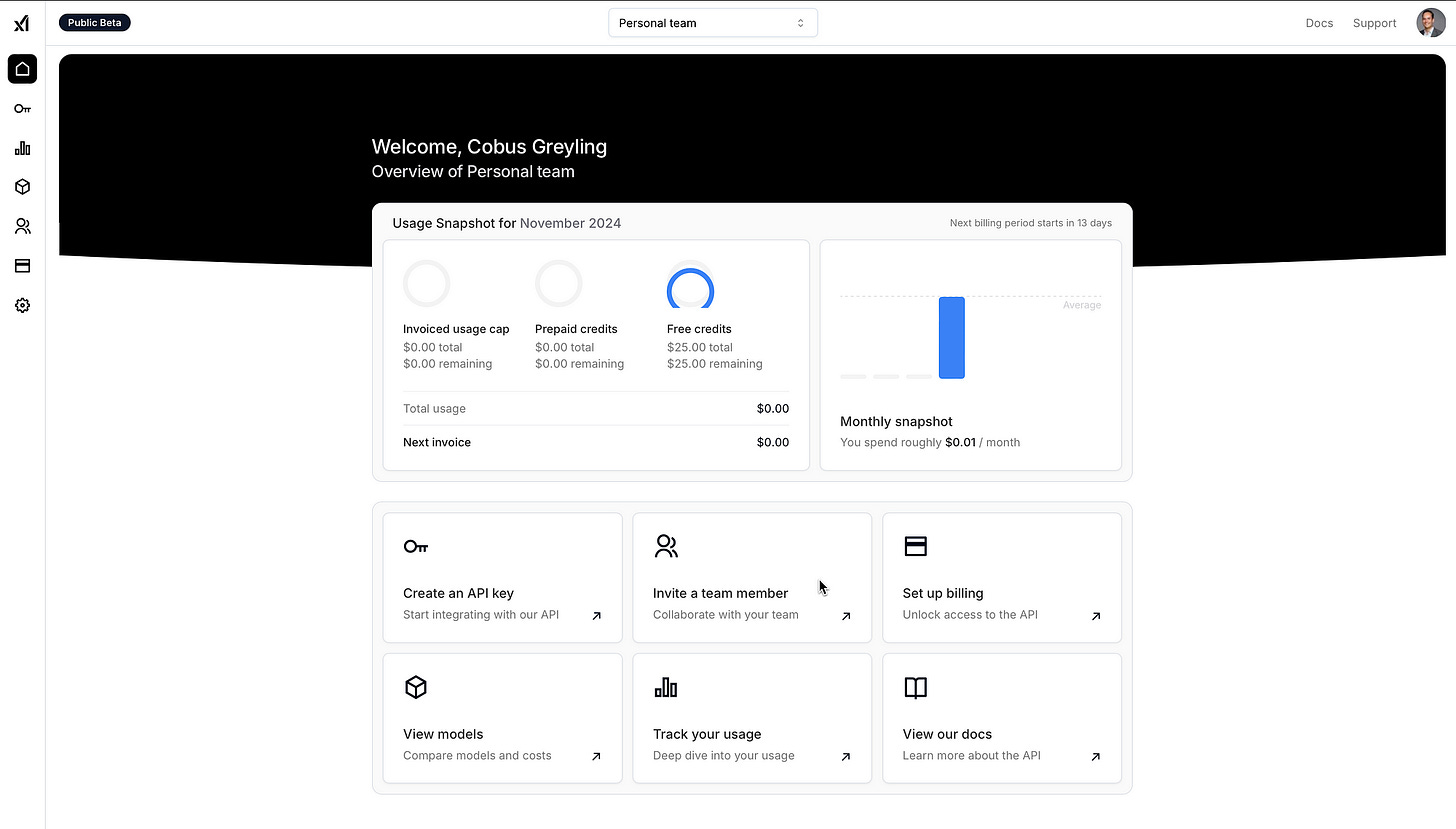

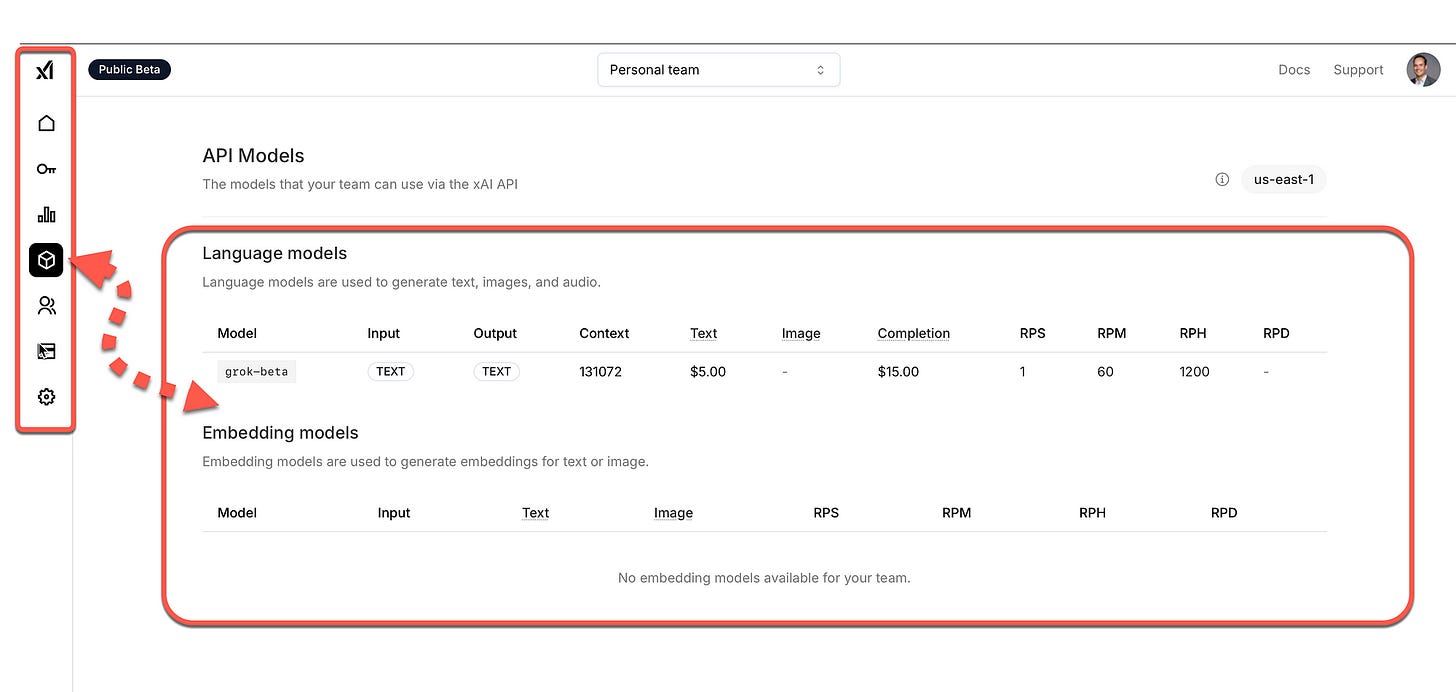

As seen in the image below, the console has all the basic components needed to expose model APIs and manage usage and cost.

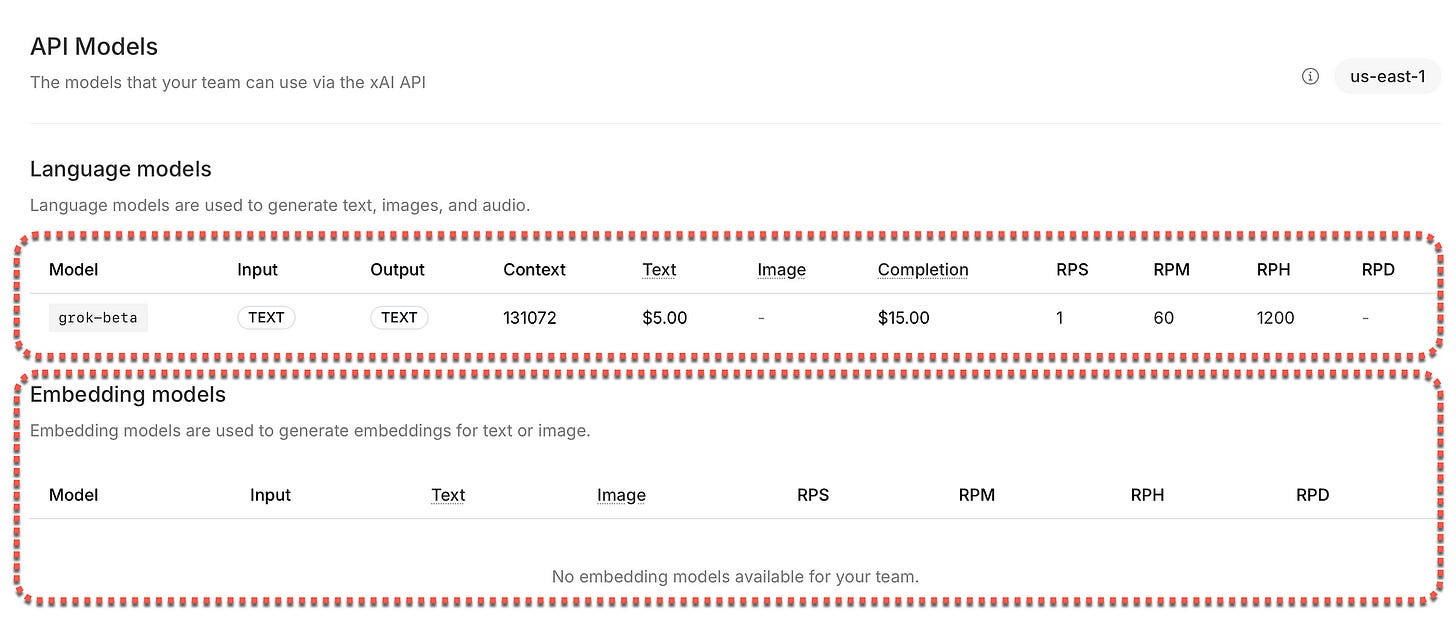

The only model currently available (to me at least) is grok-beta, in a single region, us-east-1.

xAI’s compatibility with the OpenAI and Anthropic SDKs is clearly aimed at easy migration with the least impact on user environments.
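A minimal sketch of what that migration can look like at the HTTP level, assuming both providers expose an OpenAI-style `/chat/completions` route: only the base URL, the key and the model name change. The `PROVIDERS` table and the `build_chat_request` helper are illustrative names, not part of any SDK, and the request is only constructed here, not sent.

```python
import json
import urllib.request

# Base URLs per provider: "xai" is the URL used in this article,
# "openai" is OpenAI's public API root.
PROVIDERS = {
    "openai": "https://api.openai.com/v1",
    "xai": "https://api.x.ai/v1",
}


def build_chat_request(provider, api_key, model, messages):
    """Build (but do not send) a chat completion request for a provider."""
    body = json.dumps({"model": model, "messages": messages}).encode("utf-8")
    return urllib.request.Request(
        url=f"{PROVIDERS[provider]}/chat/completions",
        data=body,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


messages = [{"role": "user", "content": "Hello"}]

# Switching providers changes the arguments, not the calling code.
req_openai = build_chat_request("openai", "<OpenAI key>", "gpt-4o", messages)
req_xai = build_chat_request("xai", "<xAI key>", "grok-beta", messages)
print(req_xai.full_url)
```

Keeping the provider details in one table like this means the rest of an application does not need to know which vendor it is talking to.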

The documentation also gives attention to function calling, an important component of integrations and AI Agent implementations.

Future Features

Streamlined Roadmap for xAI: Enhancing the Console and Offering

The xAI ecosystem is evolving rapidly, and key enhancements to the console and broader offering are expected to include:

Expanded Regional Availability: Increasing geographic reach to manage latency and improve accessibility for users across the globe.

Embeddings and Grounding Models: Introducing an embeddings model along with a playground for uploading and interacting with grounding documents, for a lite implementation of RAG.

Wider Model Options: Adding more specialised models, vision capabilities, and tailored pricing options to address diverse use cases and applications effectively.

While Grok on the X platform already offers vision capabilities, pricing remains a critical challenge. Current pricing structures seem steep compared to competitors, raising the question of whether xAI will prioritise cost adjustments to improve adoption rates. Batch processing discounts could be a logical step to appeal to high-volume users.

The AI Agent Framework Opportunity

With competitors like Anthropic launching AI agent frameworks and OpenAI planning similar releases, xAI has a unique opportunity to develop a robust agent framework that integrates vision and computer UI capabilities, enhancing task automation and workflow management.

Leveraging the Power of X (Formerly Twitter)

Unlike OpenAI and Anthropic, xAI doesn’t need to build standalone chat interfaces like ChatGPT or Claude Chat. Instead, the X platform serves as a powerful distribution and interaction hub, seamlessly integrating xAI’s capabilities.

This synergy provides:

A built-in revenue model through subscriptions.

Simplified cross-selling opportunities across the X ecosystem.

The xAI API might also serve a dual purpose:

Providing a migration pathway for existing users of OpenAI and Anthropic, helping them transition seamlessly.

Establishing market parity by offering similar capabilities while leveraging the unique advantages of the X platform.

By focusing on competitive pricing, batch processing options, and a more expansive toolset, xAI could position itself as a leader in scalable AI solutions while harnessing the strengths of the X ecosystem.

Console Basics

Getting Started with the xAI Console

The console provides an intuitive interface for accessing all the tools and resources you need:

API Console: What we have come to expect from API management functionality.

Endpoints: Generated and detailed parameters for each API endpoint, for easy integration.

Integrations: Code examples help integrate the xAI API into your projects for an easier development experience.

Explore Grok: The Flagship Model

Grok is a general-purpose AI model tailored for versatile applications. The current grok-beta release offers:

Enhanced efficiency, speed, and capabilities comparable to Grok 2.

A robust toolset for tasks like text generation, data extraction, and code creation.

Advanced function-calling abilities that enable direct connections to external tools and services, making it ideal for enriched workflows and dynamic interactions.
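To make the function-calling flow concrete, here is a sketch that assumes xAI accepts the OpenAI-style `tools` schema, which its SDK compatibility suggests but which should be checked against the xAI documentation. The `get_weather` function, its parameters and the simulated tool call are hypothetical; in a real exchange the tool call would arrive in the model’s response rather than being constructed by hand.

```python
import json


# Hypothetical local function the model is allowed to call.
def get_weather(city: str) -> dict:
    return {"city": city, "forecast": "sunny"}  # stubbed result, no real lookup


# Tool description in the OpenAI-style "tools" format (assumed, not confirmed).
TOOLS = [{
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Return the weather forecast for a city.",
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    },
}]

# Map tool names to Python callables.
REGISTRY = {"get_weather": get_weather}


def run_tool_call(tool_call: dict) -> dict:
    """Execute one tool call and wrap the result as a 'tool' role message."""
    fn = REGISTRY[tool_call["function"]["name"]]
    # In this format the arguments arrive as a JSON-encoded string.
    args = json.loads(tool_call["function"]["arguments"])
    return {
        "role": "tool",
        "tool_call_id": tool_call["id"],
        "content": json.dumps(fn(**args)),
    }


# Simulated tool call, shaped like an entry in message["tool_calls"].
simulated = {
    "id": "call_1",
    "type": "function",
    "function": {"name": "get_weather", "arguments": '{"city": "Cape Town"}'},
}
tool_message = run_tool_call(simulated)
print(tool_message)
```

The resulting `tool` message would be appended to the conversation and sent back, so the model can phrase its final answer using the function’s result.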

Models: Grok and Its Capabilities

The purpose of the xAI Console is to provide access to xAI’s models, so that developers can integrate Grok into their applications.

Grok-Beta

The latest release, grok-beta, delivers comparable performance to Grok 2 while enhancing efficiency, speed, and capabilities. It’s optimised for robust performance across a wide range of tasks.

Cost

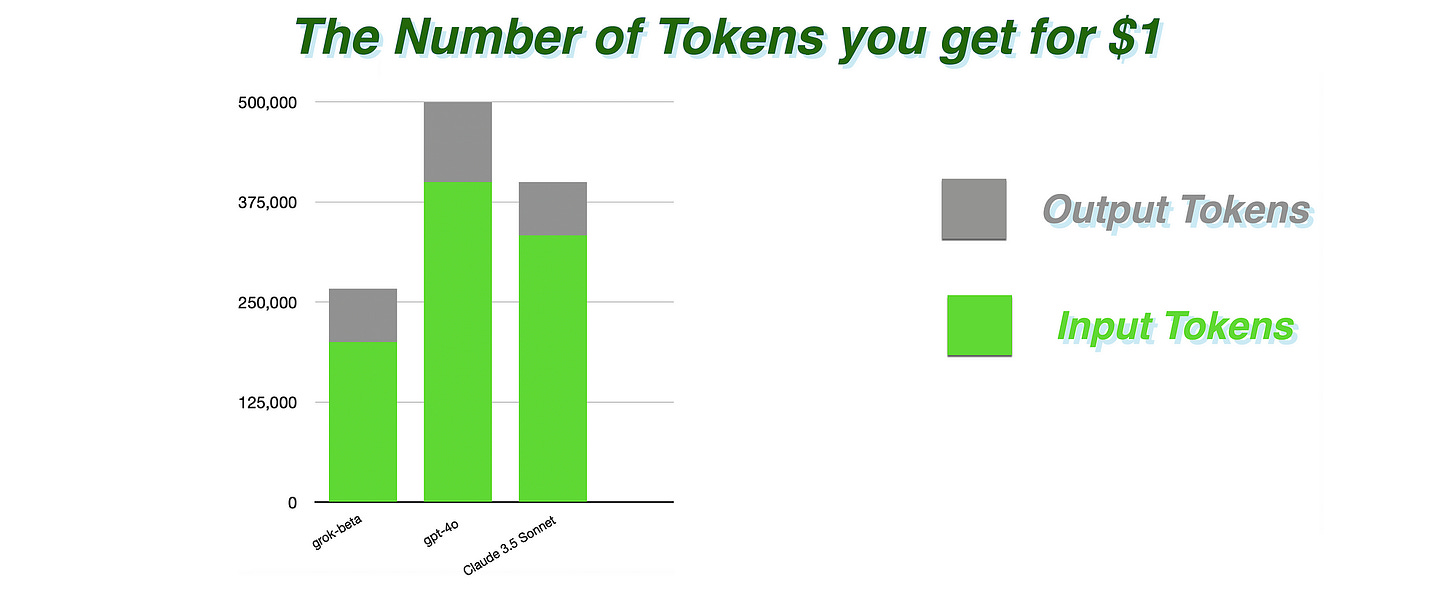

In the graph, I compare the grok-beta model with OpenAI’s gpt-4o model and Anthropic’s Claude 3.5 Sonnet model.

I graphed cost as the number of tokens you get for one US dollar, for input and output tokens separately. Output tokens cost considerably more than input tokens.
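The tokens-per-dollar figure is simply the inverse of the per-token price. A small sketch of that arithmetic follows; the prices in it are placeholders chosen for round numbers, not values quoted from any provider’s price list.

```python
def tokens_per_dollar(price_per_million_usd: float) -> float:
    """Convert a price in USD per one million tokens into tokens per one USD."""
    if price_per_million_usd <= 0:
        raise ValueError("price must be positive")
    return 1_000_000 / price_per_million_usd


# Placeholder prices (USD per million tokens), for illustration only.
example_prices = {"input": 2.0, "output": 10.0}

for kind, price in example_prices.items():
    print(f"{kind}: {tokens_per_dollar(price):,.0f} tokens per dollar")
```

With these placeholder prices, one dollar buys 500,000 input tokens but only 100,000 output tokens, which is the kind of gap the graph visualises.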

So you get more input tokens than output tokens for a single dollar. The pricing difference between OpenAI and Anthropic is small enough for an organisation to still choose Anthropic, even though it is more expensive, in cases where that makes sense in terms of performance and functionality.

However, xAI lags so far behind in pricing that it is hard to imagine an organisation absorbing that kind of price difference.

Add to this the fact that gpt-4o and Claude 3.5 Sonnet both have vision capabilities, which grok-beta currently does not have.

Code Examples

Below is a working Python example of an xAI chat completion request, where the conversation is defined with a system message and a user message.

```python
import requests
import json

# Set the API endpoint URL
url = "https://api.x.ai/v1/chat/completions"

# Set the headers, including the authorization token
headers = {
    "Content-Type": "application/json",
    "Authorization": "Bearer <Your xai API Key>"
}

# Define the payload as a dictionary
data = {
    "messages": [
        {
            "role": "system",
            "content": "You are a test assistant."
        },
        {
            "role": "user",
            "content": "Testing. Just say hi and hello world and nothing else."
        }
    ],
    "model": "grok-beta",
    "stream": False,
    "temperature": 0
}

# Send a POST request to the API
response = requests.post(url, headers=headers, data=json.dumps(data))

# Print the response
if response.status_code == 200:
    print("Response:", response.json())
else:
    print("Request failed with status code:", response.status_code)
    print("Response:", response.text)
```

Below is the response from xAI. Notice the system fingerprint that is included; it helps to monitor and track changes to the underlying model behind the API.

The response also lists the model name, the refusal field, the finish reason and the token usage, including completion tokens.
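A sketch of how those fields might be pulled out of the parsed JSON, and how a change in `system_fingerprint` could be flagged. The `summarise` helper and the `last_seen` variable are illustrative names, and the sample dictionary simply repeats the values from the response shown in this section.

```python
# Sample response, copied from the output in this article.
response = {
    "id": "b792ef77-c427-4b0a-8585-4d3517e5d5f1",
    "object": "chat.completion",
    "created": 1731518401,
    "model": "grok-beta",
    "choices": [{
        "index": 0,
        "message": {
            "role": "assistant",
            "content": "Hi\n\nHello world",
            "refusal": None,
        },
        "finish_reason": "stop",
    }],
    "usage": {"prompt_tokens": 28, "completion_tokens": 5, "total_tokens": 33},
    "system_fingerprint": "fp_14b89b2dfc",
}


def summarise(resp: dict) -> dict:
    """Collect the fields worth logging for each call."""
    choice = resp["choices"][0]
    return {
        "model": resp["model"],
        "text": choice["message"]["content"],
        "finish_reason": choice["finish_reason"],
        "total_tokens": resp["usage"]["total_tokens"],
        "fingerprint": resp.get("system_fingerprint"),
    }


summary = summarise(response)

# Fingerprint recorded on an earlier call (hypothetical stored value).
last_seen = "fp_14b89b2dfc"
if summary["fingerprint"] != last_seen:
    print("Backend configuration changed:", summary["fingerprint"])
else:
    print("Fingerprint unchanged; tokens used:", summary["total_tokens"])
```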

Response:

```
{'id': 'b792ef77-c427-4b0a-8585-4d3517e5d5f1',
 'object': 'chat.completion',
 'created': 1731518401,
 'model': 'grok-beta',
 'choices': [{'index': 0,
              'message': {'role': 'assistant',
                          'content': 'Hi\n\nHello world',
                          'refusal': None},
              'finish_reason': 'stop'}],
 'usage': {'prompt_tokens': 28,
           'completion_tokens': 5,
           'total_tokens': 33},
 'system_fingerprint': 'fp_14b89b2dfc'}
```

Below is an example of the OpenAI SDK calling the xAI model, which illustrates xAI’s strategy of making migration as easy as possible.

```python
from openai import OpenAI

# Prompt the user to enter their API key
XAI_API_KEY = input("Please enter your xAI API key: ").strip()

# Initialize the OpenAI client with the provided API key and base URL
client = OpenAI(
    api_key=XAI_API_KEY,
    base_url="https://api.x.ai/v1",
)

# Create a chat completion request
completion = client.chat.completions.create(
    model="grok-beta",
    messages=[
        {"role": "system", "content": "You are Grok, a chatbot inspired by the Hitchhiker's Guide to the Galaxy."},
        {"role": "user", "content": "What is the meaning of life, the universe, and everything?"},
    ],
)

# Print the response message from the chatbot
# Note: use attribute access (message.content), not message["content"]
print(completion.choices[0].message.content)
```

And the output from the grok-beta model…

```
Ah, the ultimate question! According to the Hitchhiker's
Guide to the Galaxy, the answer is **42**. However,
the real trick lies in figuring out what the actual
question is. Isn't that just like life - full of answers,
but we often miss the point of what we're really asking?
Keep pondering, my friend, and enjoy the journey of
discovery!
```

Chief Evangelist @ Kore.ai | I’m passionate about exploring the intersection of AI and language. From Language Models, AI Agents to Agentic Applications, Development Frameworks & Data-Centric Productivity Tools, I share insights and ideas on how these technologies are shaping the future.